As institutions prepare for fall 2020 and beyond, they are exploring their needs for data and analytics. These efforts emphasize measuring and improving student engagement, but many questions remain unanswered.

It's rare that a single life event can produce a sense of gratitude around human relationships and technology at the same time. We had the pleasure of co-facilitating an analytics roundtable session recently, and participants expressed this exact sentiment. During the rush to remote instruction at the start of the COVID crisis, their institutions relied heavily on two things: the relationships built within their organizations and the technology infrastructure (strong emphasis on cloud) that allowed them to scale quickly. As we often see in times of human crisis, the sense of doing "whatever it takes" was in full force; luckily, technology infrastructure created a stable foundation for that esprit de corps.

Supporting the "New Normal"

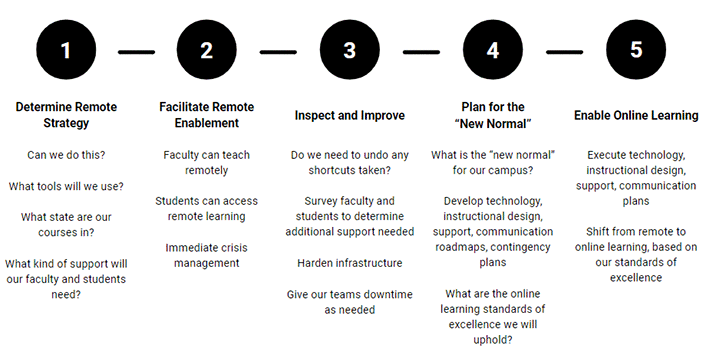

This ELI 2020 Online session created a forum for higher education teaching and learning professionals to come together and share thoughts and perspectives on the critical analytics and data they will need to support a "new normal." We started the session with a look back at how far their institutions had come in a short time. They had moved courses, instructors, and students to remote engagement, redesigned and supported new interactions, doled out laptops, and troubleshot home networks, all in the name of academic continuity. We then turned the conversation to the future and heard that nearly 94% of respondents (representing primarily IT and instructional designer roles) are improving upon the emergency remote instruction measures put into place during the early part of the crisis and are actively planning for the elusive "new normal" (see phases 3 and 4 in figure 1).

What Does Student Engagement Look Like?

Whatever this new normal looks like, it is centered around students. When asked what insights institutions will need to be able to report on and measure this fall, the overwhelming response was student engagement. Most definitions of engagement reference the relationship between student activity (in the form of attention, interest, and curiosity) and motivation. The concept of student engagement is generally predicated on the belief that learning improves when students are inquisitive, interested, or inspired and that learning tends to suffer when students are bored, dispassionate, disaffected, or otherwise "disengaged."1

Implications of Moving Online

One key challenge caused by the pandemic was that instructors and student support staff lost a very important interaction data point: the ability to assess student engagement face-to-face. Furthermore, while plans for fall are still up in the air, 57.1% of our participants anticipated their fall instruction to be "primarily remote" with some on-the-ground interaction (where technology cannot replicate a hands-on experience). Pre-pandemic measurements of student engagement would have drawn from in-person observation as well as metrics from the student's digital ecosystem, such as number of LMS logins. Now, institutions must learn how to measure the active interest and motivation of their students and assess learning progress in a fully online interaction, with the added challenge that every single student has a valid reason to be distracted or "disengaged." Discussion during the ELI session drew out several implications of the move to online interaction, both in the early stages of the crisis and now as we all plan for the fall semester:

- LMS data became instantly valuable. Because learning interactions are often centered on the LMS, participants deemed its data particularly valuable for identifying and measuring student engagement. However, they acknowledged that it takes more than LMS data, and more than cursory participation, to understand whether learners are truly engaged and actively learning. In addition, participants wondered how to use data to pinpoint learners who were previously engaged and actively learning but who struggled to engage in the same way after the move to remote learning. What data markers would help denote those behavior patterns?

- Now is a good time to devise a written standard of excellence. When thinking about how faculty rubrics or sentiment analysis can aid us and what role learning science might play, panelist Mitchell Colver from Utah State University offered a model that starts with a written standard of excellence to be adopted by faculty and instructional designers. This includes a rubric against which online content can be measured to ensure a uniform level of quality and consistency, which is especially important for any resulting data analysis. Although this model worked for Utah State, participants expressed concern about finding a middle ground between giving faculty autonomy in their choice of tools and creating a consistent experience for students.

- Who is your "data therapist"? Colver suggested that when data is put in the hands of instructors, it needs to come with a "data therapist" to help people process not only what they see but how it makes them feel. Many participants wanted to know what best practices institutions recommended around interventions, specifically what the data says about the effectiveness of a nudge, and whether it matters how that nudge is initiated.

- Supported students are successful students. From the discussion, there was a sense that services outside the classroom need to be prioritized as much as those available during class. Anecdotally, students we've spoken with have said that replacing their in-classroom experience was only one piece of their college experience and that they wish more emphasis had been put on replacing supplemental services. Creating a comprehensive view of a student's interactions requires data aggregation across not only the learning ecosystem but the digital campus environment more broadly.

- To get started, prioritize the most actionable insight. For institutions that are approaching student engagement from the ground up, what is the best way to get started? Panelist Catherine Zabriskie from Brown University suggested prioritizing the most actionable insight first. This approach puts the focus on areas with the most impact, engendering trust and showing accountability. In addition, an important step is acknowledging that the student population is not uniform and that different segments will need different levels of support. Assessing the engagement of the incoming freshman class, already at risk due to "summer melt," will require careful and planned communications, with interaction options that can be measured.
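
To make the "data markers" question above a little more concrete, here is a minimal sketch of one possible marker: students whose weekly activity was healthy before the move to remote instruction and then fell sharply. It is an illustration only, not something session participants proposed. The event format, the source labels, the dates, and both thresholds (`min_prior_rate`, `drop_threshold`) are assumptions chosen for the example, and a raw activity count is at best a rough proxy for engagement.

```python
from collections import defaultdict
from datetime import date, timedelta


def flag_engagement_drop(events, term_start, remote_start, term_end,
                         min_prior_rate=3.0, drop_threshold=0.5):
    """Return (student_id, prior_rate, current_rate) for students whose
    weekly activity dropped sharply after the move to remote instruction.

    events: iterable of (student_id, source, event_date) tuples, where
    source is a label such as "lms" or "tutoring" (hypothetical format).
    """
    before = defaultdict(int)
    after = defaultdict(int)
    for student_id, _source, day in events:
        if term_start <= day < remote_start:
            before[student_id] += 1
        elif remote_start <= day <= term_end:
            after[student_id] += 1

    weeks_before = (remote_start - term_start).days / 7
    weeks_after = ((term_end - remote_start).days + 1) / 7

    flagged = []
    for student_id in sorted(before):
        prior = before[student_id] / weeks_before
        current = after.get(student_id, 0) / weeks_after
        # Only consider students who were active before the move,
        # then check whether their activity fell below the threshold.
        if prior >= min_prior_rate and current < drop_threshold * prior:
            flagged.append((student_id, round(prior, 2), round(current, 2)))
    return flagged


# Made-up data: dates and student IDs are placeholders for illustration.
term_start = date(2020, 1, 13)
remote_start = date(2020, 3, 16)   # assumed cutover date
term_end = date(2020, 5, 10)


def make_events(student_id, source, start, span_days, count):
    """Spread `count` events across `span_days` days beginning at `start`."""
    return [(student_id, source, start + timedelta(days=i % span_days))
            for i in range(count)]


events = (
    make_events("s1", "lms", term_start, 63, 36)        # active before
    + make_events("s1", "lms", remote_start, 56, 8)     # sharp drop after
    + make_events("s2", "lms", term_start, 63, 36)      # active before
    + make_events("s2", "tutoring", remote_start, 56, 32)  # still active
    + make_events("s3", "lms", term_start, 63, 9)       # low activity throughout
)

flagged = flag_engagement_drop(events, term_start, remote_start, term_end)
print(flagged)
```

In this made-up data set only `s1` is flagged. Because events carry a source label, the same approach could in principle count interactions with services outside the LMS, in line with the point about supplemental services above. Any real use would need thresholds grounded in an institution's own data and, as Colver suggested, a person to help interpret the result.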

The questions still outnumber the answers at this stage, but we can assure you they are actively being asked and enthusiastically debated.

Answering the Hard Questions

We've each spent our careers in enterprise application integration, including more than 20 years in education technology. If ever there were a watershed moment for ubiquitous, institution-wide data integration, it is now. The beautiful thing about this moment is that the infrastructure and technology exist to allow for the aggregation of data from disparate systems to help weave together the student story. Human insight will need to pick up from there and answer the hard questions of measuring student engagement and supporting the holistic experience of students across a virtual interaction landscape. As we encounter the realities of the new normal, our models will need to shift to answer novel and changing questions. It will take both humans and technology to answer these questions. The good news is, we've proven that's a combination that works. Let's get to it.

For more insights about advancing teaching and learning through IT innovation, please visit the EDUCAUSE Review Transforming Higher Ed blog as well as the EDUCAUSE Learning Initiative and Student Success web pages.


Note

- See "Student Engagement," Glossary of Education Reform.

Kate Valenti is Vice President of Operations at Unicon, Inc.

Linda D. Feng is Software Architect at Unicon, Inc.

© 2020 Kate Valenti and Linda Feng. The text of this work is licensed under a Creative Commons BY-SA 4.0 International License.