Northwestern University Information Technology built an AI-enabled chatbot to answer questions about the university's LMS on a 24/7 basis.

Institutional Profile

Northwestern University is a comprehensive research university comprising twelve schools. It enrolls approximately 8,000 undergraduates and 14,000 graduate students and has three campuses: Evanston and Chicago, Illinois, and Doha, Qatar. It is top-ranked for undergraduate education and in many graduate areas, and it brings in more than $1 billion per year in sponsored research.

The Challenge/Opportunity

In 2017, members of a small software development group at Northwestern wondered whether a newly licensed set of IBM cognitive services could improve user support in the university's instance of Canvas. Help desk staff frequently answered the same questions, and the help desk was not staffed 24 hours a day. IBM Watson services supported the creation of tools that could answer questions posed in natural language and that users could access at any time. Could the developers build a tool that handled the most common help desk requests and was available 24/7? In collaboration with the Teaching and Learning Technologies (TLT) support team, the developer group conceived the Canvas Chatbot and began working on it.

One year later, a little robot icon in Northwestern purple was embedded in Canvas. Clicking on the icon started the chatbot, which could answer student and instructor questions and point them to key resources. The chatbot increased user engagement with IT support and expanded the user base from being mostly faculty—who submit the majority of learning management system (LMS) support tickets—to include students, who seemed comfortable interacting with a chatbot. Likely because of this, the chatbot did not reduce the number of support requests; in fact, it seemed to increase them.

The Process

Starting in 2017, the software development group developed two initial taxonomies. The interaction-level taxonomy classified chatbot user interaction workflows (direct responses, direct responses via decision trees, recommended readings, and targeted escalations); the content-level taxonomy was based on the existing IT/Canvas knowledge-base guides and actual support interactions. TLT staff categorized six months of support interactions according to the content-level taxonomy, expanding it in the process. Both taxonomies informed the creation of the chatbot's original intent model and conversational AI dialogue in IBM Watson Assistant. The intent model and dialogue were integrated with a custom orchestration engine built on top of the Botkit bot construction library and various Amazon Web Services–based technologies.
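To make the relationship between the two taxonomies concrete, the following is a minimal sketch, assuming that each content-level category (an intent) is mapped to one of the four interaction-level workflow types. The workflow names come from the case study; the intent names, the mapping, and the default for unknown intents are hypothetical illustrations, not Northwestern's actual model.

```python
from enum import Enum


class Workflow(Enum):
    """Interaction-level workflow types named in the case study."""
    DIRECT_RESPONSE = "direct response"
    DECISION_TREE = "direct response via decision tree"
    RECOMMENDED_READING = "recommended reading"
    TARGETED_ESCALATION = "targeted escalation"


# Hypothetical content-level categories (intents) mapped to workflow types.
# The real taxonomy was derived from knowledge-base guides and support tickets.
CONTENT_TAXONOMY: dict[str, Workflow] = {
    "netid_password_reset": Workflow.DIRECT_RESPONSE,
    "student_not_in_course": Workflow.DECISION_TREE,
    "gradebook_weighting": Workflow.RECOMMENDED_READING,
    "course_content_restore": Workflow.TARGETED_ESCALATION,
}


def workflow_for(intent: str) -> Workflow:
    """Return the workflow for a recognized intent.

    Unknown intents default to a recommended reading (a guide search);
    this default is an assumption made for the sketch.
    """
    return CONTENT_TAXONOMY.get(intent, Workflow.RECOMMENDED_READING)
```

Keeping the mapping as data rather than code means the content team could, in principle, reclassify an intent without touching the orchestration logic.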

Creation of the chatbot required significant effort, especially in the initial development phase. A single developer, Patricia Goldweic, estimates that initial product development took about six months. Once the chatbot was developed, adding meaningful improvements could take from three to five weeks, depending on the complexity of the new feature. Maintenance of the chatbot involves a variety of tasks for the developer and for the chatbot content team, a group of four staff who collaboratively review transcripts, develop new content, and test new features. The developer dedicates from two to five full days each month to maintenance, and each member of the content team dedicates one full day.

When it entered a production pilot in fall 2018, the chatbot was intended to answer users' most frequently asked questions and to provide step-by-step help for issues related to student enrollment and course availability. The team designed a decision tree that guided users through a series of enrollment-related questions to determine whether their issues could be answered readily or should be escalated to the IT Service Desk. The team limited the chatbot's intent model to the most common questions, including NetID troubleshooting and Canvas-specific questions.
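A yes/no decision tree of the kind described above can be represented with two node types: questions and outcomes, where each outcome either answers the user or escalates. The sketch below is illustrative only; the questions, the branching, and the messages are hypothetical and do not reproduce Northwestern's enrollment tree.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Outcome:
    """A leaf: either answer the user directly or escalate."""
    escalate: bool
    message: str


@dataclass
class Question:
    """An interior node: a yes/no question with one branch per answer."""
    prompt: str
    yes: "Node"
    no: "Node"


Node = Union[Question, Outcome]

# Hypothetical enrollment tree; prompts and messages are placeholders.
ENROLLMENT_TREE: Node = Question(
    prompt="Is the course published in Canvas?",
    yes=Question(
        prompt="Did your registration change within the last day?",
        yes=Outcome(False, "Hypothetical: recent changes may still be syncing; check again later."),
        no=Outcome(True, "Escalating to the IT Service Desk with your course details."),
    ),
    no=Outcome(False, "Students cannot see unpublished courses; please contact your instructor."),
)


def walk(node: Node, answers: list[bool]) -> Outcome:
    """Follow the user's yes/no answers until an outcome is reached."""
    for answer in answers:
        if isinstance(node, Outcome):
            break
        node = node.yes if answer else node.no
    if not isinstance(node, Outcome):
        raise ValueError("More answers are needed to reach an outcome")
    return node
```

For example, `walk(ENROLLMENT_TREE, [True, False])` reaches the escalation leaf, while `walk(ENROLLMENT_TREE, [False])` answers the user without involving the IT Service Desk.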

Initially, the team incorporated three innovative features in the chatbot:

- Canvas Guides search: A fallback behavior that searches public Canvas resources for information relevant to the user's question. This search feature predated the vendor-provided "search/discovery" cognitive services in IBM Watson Assistant, and it proved so useful that the developers saw no reason to switch to those services when they became available.

- Dynamic learning from feedback: A form of dynamic learning that expands both the chatbot's intent model and the conversational AI dialogue based on search results that users confirm were helpful.

- ITSM integration: A connection to the ITSM ticketing system that creates tickets directly on users' behalf.
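The first two features above work together: a low-confidence intent match falls back to a guide search, and a search result the user marks as helpful becomes a candidate training example. The following is a minimal sketch of that loop, assuming a single confidence cutoff. Watson Assistant does report a confidence score with each detected intent, but the threshold value, the class names, and keying learned examples by guide URL are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Classification:
    """Top intent returned by the assistant, with its confidence (0 to 1)."""
    intent: str
    confidence: float


@dataclass
class FallbackRouter:
    # Hypothetical cutoff; the value the team used is not stated.
    threshold: float = 0.5
    # Utterances users confirmed were answered by a given guide,
    # kept as candidate examples for expanding the intent model.
    learned_examples: dict[str, list[str]] = field(default_factory=dict)

    def route(self, utterance: str, top: Classification) -> str:
        """Answer from the intent model if confident, else search the guides."""
        if top.confidence >= self.threshold:
            return f"intent:{top.intent}"
        return f"search:{utterance}"

    def record_feedback(self, utterance: str, guide_url: str, helpful: bool) -> None:
        """Keep the utterance only when the user says the result helped."""
        if helpful:
            self.learned_examples.setdefault(guide_url, []).append(utterance)
```

Unhelpful results are simply not recorded, so the model only grows from user-confirmed matches.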

After a year of continuous chatbot improvements, the team expanded its original goals. Facing a significant increase in support requests for routine activities, team members wanted to see whether the chatbot could do more than answer questions. Could it be programmed to take action and complete tasks within the LMS? In 2020, with the onset of the pandemic, the LMS support team was fully engaged in helping the university move to remote instruction and learning, leaving less time for routine help requests. Having the chatbot shoulder some of the support burden became even more important. The team added two new skills to the chatbot: increasing a course's storage capacity and creating prep (sandbox) sites upon request. These tight integrations with the Canvas APIs automated two support tasks that previously required support tickets and human intervention.
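The two automated skills map naturally onto documented Canvas REST endpoints: "Update a course" (`PUT /api/v1/courses/:id`, which accepts `course[storage_quota_mb]`) and "Create a new course" (`POST /api/v1/accounts/:account_id/courses`). The sketch below only builds the HTTP requests with Python's standard library, so it can be inspected without a live Canvas instance. The self-service quota ceiling, the account ID, and the naming of prep sites are hypothetical, and the case study does not say which endpoints or parameters Northwestern's integration actually uses.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical self-service ceiling; Northwestern's actual limits are not stated.
MAX_SELF_SERVICE_QUOTA_MB = 2000


def _form_request(url: str, token: str, fields: dict, method: str) -> Request:
    """Build (but do not send) a form-encoded Canvas API request."""
    return Request(
        url,
        data=urlencode(fields).encode("ascii"),
        method=method,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def storage_quota_request(base_url: str, token: str, course_id: int, quota_mb: int) -> Request:
    """'Update a course' request that sets the course storage quota."""
    if quota_mb > MAX_SELF_SERVICE_QUOTA_MB:
        # Above the (hypothetical) ceiling, escalate to a human instead.
        raise ValueError("Requested quota exceeds the self-service limit")
    return _form_request(
        f"{base_url}/api/v1/courses/{course_id}",
        token,
        {"course[storage_quota_mb]": quota_mb},
        "PUT",
    )


def prep_site_request(base_url: str, token: str, account_id: int, name: str) -> Request:
    """'Create a new course' request for a sandbox (prep) site."""
    return _form_request(
        f"{base_url}/api/v1/accounts/{account_id}/courses",
        token,
        {"course[name]": name, "course[course_code]": name},
        "POST",
    )
```

Sending a request would be a matter of `urllib.request.urlopen(req)` with a token whose user holds the relevant account permissions. Separating request construction from sending keeps the chatbot's validation logic testable.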

Content Improvements

With an expanded content team in 2021, the project added Northwestern-specific expertise to the chatbot's model in the following areas:

- Northwestern-specific ways of using Canvas resources (or how to determine the applicability and routing of certain Canvas Guides to the situation at hand), such as how to add someone to a Canvas course

- Recognition of the symptoms of a student in distress and the subsequent workflow, which integrates with the counseling office via an email notification, allowing the office to follow up directly with the student (this item was requested by the Office of the Provost)

- Use of video at Northwestern, including the Zoom and Panopto tools as well as lecture capture services

- General knowledge about all the learning application integrations supported by TLT

- General knowledge about AccessibleNU (the office for students with disabilities) and Canvas Commons

- Canvas LTI integrations supported by other groups within Northwestern

The team also added content that helps students and others navigate many of the university's non-Canvas resources, such as questions about student IDs or NetIDs, tutoring resources, tuition and course registration, graduation, and orientation and placement tests.

Algorithmic Improvements

The content improvements were accompanied by an upgraded chatbot implementation that introduced various features during 2020–23.

For better dialogue support:

- Disambiguation, a chatbot feature that presents options to clarify the user's intent

- Digression, which allows the user to change topics

- Alternative searches (when users are not satisfied with the response provided by the chatbot, they are offered the ability to search Canvas Guides)

- Handoff to live chat with Instructure (the company that developed Canvas)

- As of September 2023, incorporation of generative AI in the production of Canvas Guides–related responses (recommended readings). Until then, these responses merely pointed users to resources; the chatbot can now incorporate direct answers found by the generative AI into its responses, making them more helpful.
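Disambiguation can be approached in several ways. One generic approach, sketched below, offers the user a short menu whenever the top intents score too close together to pick one confidently. This is a margin-based illustration, not a description of Watson Assistant's built-in disambiguation or of Northwestern's configuration; the `margin`, `floor`, and `max_options` values and the intent names are hypothetical.

```python
def disambiguate(
    intents: list[tuple[str, float]],
    margin: float = 0.1,
    floor: float = 0.3,
    max_options: int = 3,
) -> list[str]:
    """Return the intents to act on or offer.

    One name means answer directly; several names mean ask the user to
    choose; an empty list means nothing was plausible (fall back to search
    or escalation). All thresholds here are hypothetical.
    """
    ranked = sorted(intents, key=lambda pair: pair[1], reverse=True)
    plausible = [(name, score) for name, score in ranked if score >= floor]
    if not plausible:
        return []
    top_score = plausible[0][1]
    close = [name for name, score in plausible if top_score - score <= margin]
    return close[:max_options]
```

A terse message such as "add student" might score similarly for adding a student and adding a TA; in that case both are offered, which also helps with one- or two-word queries.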

For continuous improvement:

- Dynamic learning from user rephrasing (again improving both the chatbot model and the conversational AI dialogue)

- Analytics tracking via custom-defined events integrated with the conversational AI dialogue

- Analytics reporting (summary and content based, plus historical comparisons) via integration with the Splunk data platform (these reports are now included in a monthly automated email to the content team)
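The historical comparisons in such a monthly report reduce to counting custom events per period and computing the change from one period to the next. The sketch below does this in plain Python as a stand-in for the Splunk queries the team uses; the event names and the choice to report percent change month over month are hypothetical.

```python
from collections import Counter
from datetime import date
from typing import Optional


def monthly_counts(events: list[tuple[date, str]], year: int, month: int) -> Counter:
    """Count custom-defined events (by name) that occurred in one month."""
    return Counter(name for day, name in events if day.year == year and day.month == month)


def compare(current: Counter, previous: Counter) -> dict[str, Optional[float]]:
    """Percent change per event name; None when there is no baseline."""
    report: dict[str, Optional[float]] = {}
    for name in sorted(set(current) | set(previous)):
        before, after = previous[name], current[name]
        report[name] = None if before == 0 else 100 * (after - before) / before
    return report
```

For example, with two hypothetical `guide_search` events in September and three in October, `compare` reports a 50.0% increase for that event.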

Outcomes and Lessons Learned

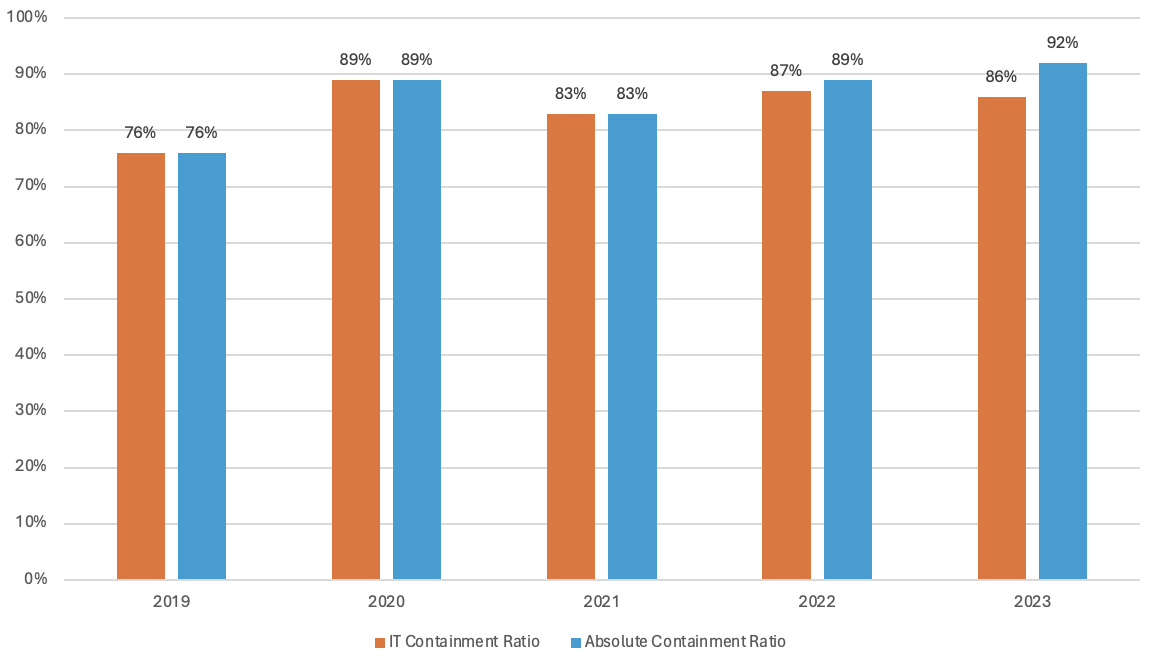

Across several dimensions, the chatbot produced measurable improvements for the IT staff and users. The absolute containment ratio measures how successful the chatbot is in answering a user's question without involving Northwestern IT. The IT containment ratio measures how successful the Canvas chatbot is in answering a user's question without the involvement of Northwestern IT or Instructure staff members. Together, these measures indicate that the chatbot has relieved Northwestern IT of a portion of its support burden. The absolute containment ratio peaked in 2023 at 92%, and the IT containment ratio has remained between 80% and 90% since the chatbot's launch (see figure 1).
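The two ratios follow directly from the definitions above. The sketch below computes both from session counts, assuming that each session is escalated to at most one place (so a session handed off to Instructure is not also counted as a Northwestern IT escalation). The counts in the usage line are invented for illustration and are not Northwestern data.

```python
def containment_ratios(sessions: int, nu_it: int, instructure: int) -> tuple[float, float]:
    """Return (absolute, IT) containment as fractions of all sessions.

    nu_it: sessions that involved Northwestern IT (for example, a ticket).
    instructure: sessions handed off to Instructure live chat.
    Assumes no session is counted in both groups.
    """
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    # Absolute containment: no Northwestern IT involvement.
    absolute = (sessions - nu_it) / sessions
    # IT containment: neither Northwestern IT nor Instructure involved.
    it = (sessions - nu_it - instructure) / sessions
    return absolute, it


# Illustrative counts only: gives roughly (0.92, 0.85).
absolute, it = containment_ratios(1000, 80, 70)
```

Under these definitions the absolute ratio can never fall below the IT ratio, because the IT ratio excludes an additional group of sessions.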

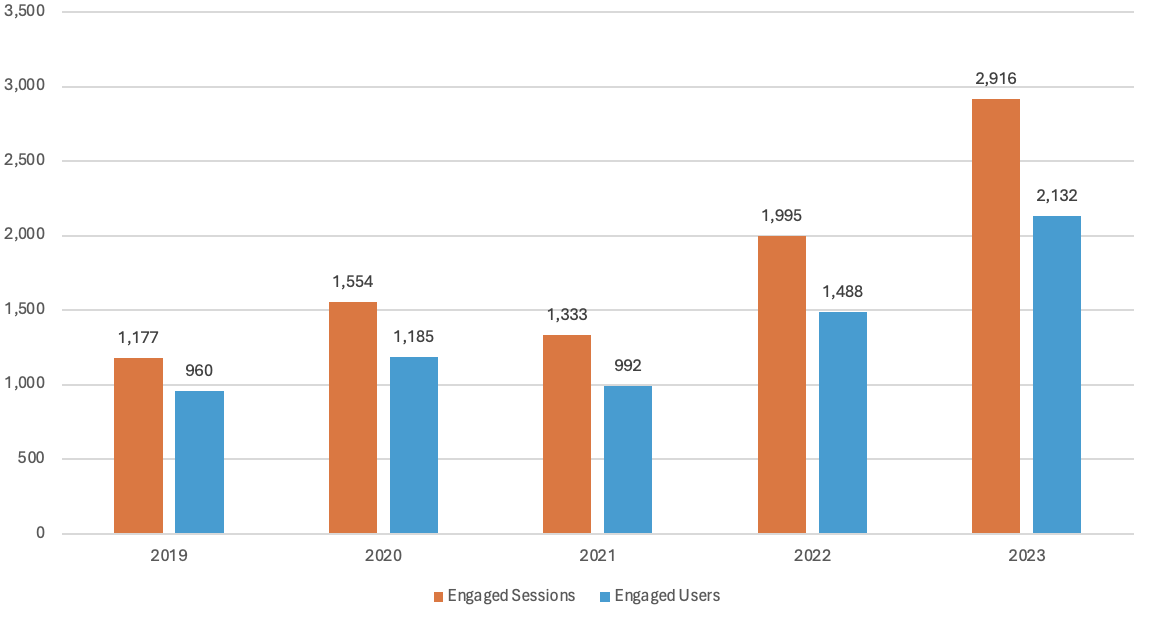

Engagement with the chatbot has been increasing steadily. We saw an increase of about 50% from 2021 to 2022 in both the number of users and the number of sessions, and 2023 saw an increase of 45% over 2022 (see figure 2). This suggests that the chatbot is fulfilling a need in the user community and is likely getting better at doing so. (Engaged sessions are actual interactions; engaged users are those participating in the sessions.)
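Successive year-over-year increases compound rather than add. Assuming the 45% figure for 2023 applies to the same measures as the roughly 50% figure for 2022, engagement in 2023 would be about 1.5 × 1.45 ≈ 2.2 times the 2021 level, not 95% higher. A small helper makes the arithmetic explicit:

```python
def compound_growth(*rates_percent: float) -> float:
    """Combine successive percentage increases into one overall multiplier."""
    multiplier = 1.0
    for rate in rates_percent:
        multiplier *= 1 + rate / 100
    return multiplier


overall = compound_growth(50, 45)  # roughly 2.2 times the 2021 level
```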

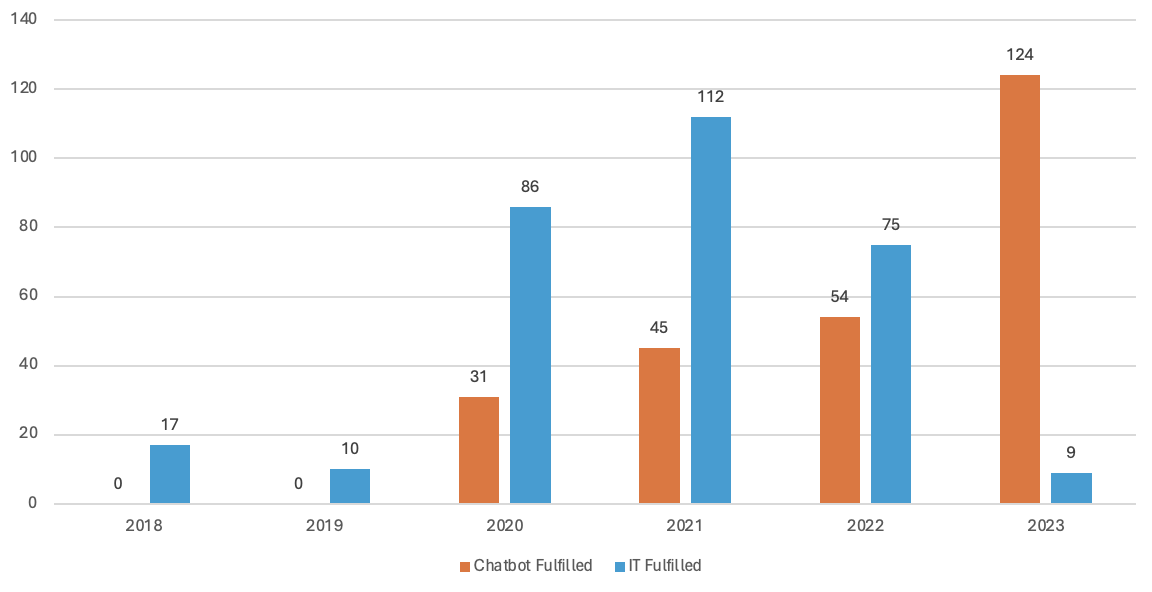

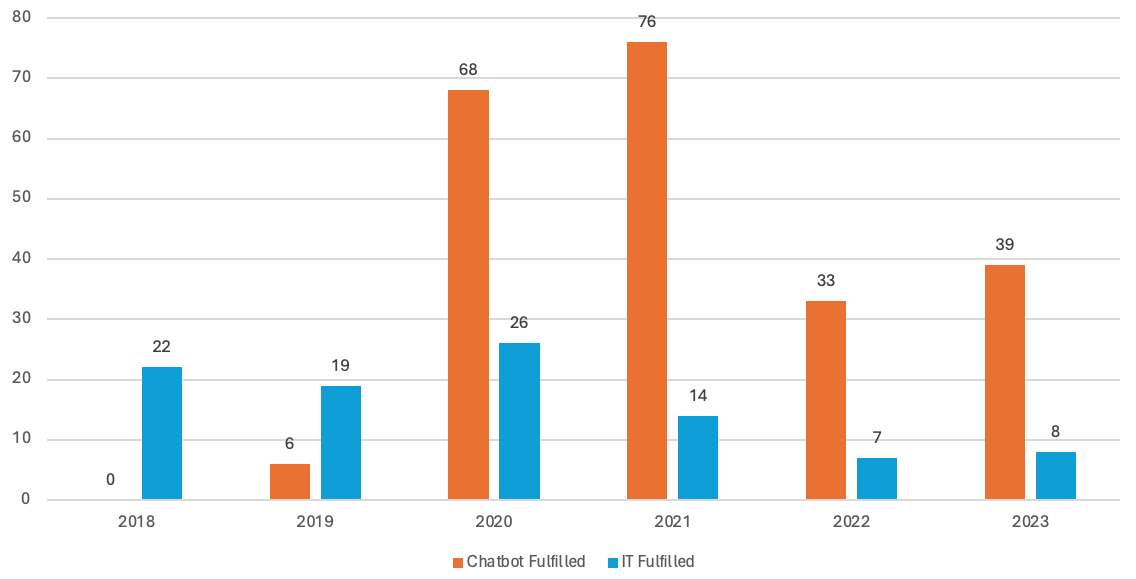

Targeted deep API integrations can directly contribute to the decrease of the IT support burden. The support burden for creating prep sites (sandbox sites for instructors) and for increasing course storage shifted dramatically from Northwestern IT staff to the chatbot over the past three years (see figures 3 and 4).

Throughout the chatbot journey, the team learned many lessons, some of which were surprising and unexpected:

- The quality of the content embedded in the chatbot model makes a huge difference in its effectiveness and is ideally backed by user-facing support expertise.

- Many users are averse to reading documentation guides. Even when the chatbot provides pointers to all the resources needed to answer their questions, these users still want to escalate their issue and interact with a human. This suggests two recommendations:

  - Chatbot responses should be as direct as possible (for example, "Go to this page in your Canvas course and click on this button") and should avoid, whenever possible, simply pointing to external resources.

  - Because support escalations will not necessarily decrease after a support chatbot is introduced (the opposite could, in fact, be true), reducing escalations should not be the goal used to justify a chatbot. Instead, the aim should be improved user support.

- Deep API integrations are essential contributors to chatbot helpfulness. Taking action in the users' domain is much more helpful than simply answering their questions.

- Two forms of escalation are better than one. The Northwestern chatbot can hand off to live chat with Instructure's LMS support as well as create a ticket in Northwestern systems. The handoff is crucial for cases that require LMS knowledge but no Northwestern-specific knowledge, and it contributes to more immediate, and thus better, support.

- Chatbot workflows should not depend on user feedback, because such input can be scarce. Instead, a chatbot should be able to act regardless of feedback while still responding to feedback when it is provided (our dialogue evolved dramatically based on this realization).

- Chatbot workflows should account for language scarcity, that is, input from users who intentionally or unintentionally address the chatbot with only one or two words rather than a complete natural language sentence (disambiguation is a useful feature in these cases).

- Chatbot transcript reviews and retraining are essential to maintaining a robust AI intent model, particularly when the model improves itself dynamically. Such dynamic improvements can cause model drift, which occurs when a chatbot learns without oversight and may therefore learn the wrong things. Retraining also allows us to augment the model and make it more robust.

- Support and documentation on how best to use the chatbot are helpful and needed. For example, Canvas workshop leaders demonstrate during sessions how to request a prep site or a storage increase.

- Onboarding content experts takes considerable time, perhaps as long as six months, as they learn the structure of the dialogue and intent models well enough to review output and suggest new content.

- The content team's workload occasionally reaches 20–25 hours per month, particularly while reviewing interaction transcripts during the busiest support months of the academic year.
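One common way to guard against the model drift described in these lessons is to let dynamic learning only *propose* new training examples and to require a human reviewer to approve them before retraining. The sketch below illustrates that human-in-the-loop gate; the class and field names are hypothetical, and the case study does not say whether Northwestern's pipeline is structured this way.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Candidate:
    """An utterance the chatbot proposes to add to an intent."""
    utterance: str
    intent: str
    status: Status = Status.PENDING


@dataclass
class ReviewQueue:
    candidates: list[Candidate] = field(default_factory=list)

    def propose(self, utterance: str, intent: str) -> Candidate:
        """Called by dynamic learning instead of updating the model directly."""
        candidate = Candidate(utterance, intent)
        self.candidates.append(candidate)
        return candidate

    def review(self, candidate: Candidate, approve: bool) -> None:
        """Record a content-team decision during transcript review."""
        candidate.status = Status.APPROVED if approve else Status.REJECTED

    def training_examples(self) -> dict[str, list[str]]:
        """Only approved examples reach the retraining set."""
        examples: dict[str, list[str]] = {}
        for candidate in self.candidates:
            if candidate.status is Status.APPROVED:
                examples.setdefault(candidate.intent, []).append(candidate.utterance)
        return examples
```

Pending and rejected candidates never reach retraining, so an incorrect association learned from one conversation cannot silently change the model.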

The Canvas chatbot has been a successful experiment in the use of AI-based technology that resulted not only in better support for users—more immediate, and with an increased user base—but also in efficiencies for IT support.

Where to Learn More

Patricia Goldweic is a Lead Software Developer at Northwestern University.

Victoria Getis is Senior Director, Teaching & Learning Technologies, at Northwestern University.

© 2024 Patricia Goldweic and Victoria Getis. The content of this work is licensed under a Creative Commons BY-NC-ND 4.0 International License.