The use of generative AI in higher education shows no signs of slowing, and the need for supporting resources and processes means institutions should be focused on creating strategies to set themselves up for success.

EDUCAUSE and Amazon Web Services, a 2024 EDUCAUSE Mission Partner, collaborated to identify the topic for this QuickPoll, formulate and evaluate the research objectives, and develop the poll questions.

EDUCAUSE is helping institutional leaders, IT professionals, and other staff address their pressing challenges by sharing existing data and gathering new data from the higher education community. This report is based on an EDUCAUSE QuickPoll. QuickPolls enable us to rapidly gather, analyze, and share input from our community about specific emerging topics.[1]

For the purposes of this QuickPoll survey, we use the following definition of "generative AI":

Generative AI is the general descriptor for algorithms that create new content (e.g., text, images, audio, computer code). Like all AI, generative AI is powered by machine learning models—very large models that are pre-trained on vast data sets and commonly referred to as Foundation Models (FMs). Examples of generative AI include Llama 2, Claude, ChatGPT, DALL-E, Musico, and many more.

The Challenge

Discussion of generative AI and its integration across higher education institutions by faculty, staff, and students remains widespread. Institutional leaders, staff, and faculty are struggling with myriad challenges in adopting the many AI tools now emerging. Staff burnout and financial strains have been reported in recent EDUCAUSE workforce reports, and because AI remains quite new and requires resources and effort to support, it surely adds stress, at least for some in higher education. Privacy concerns and the risks of reinforcing inequalities due to data biases are real, especially if institutions move too quickly to adopt AI tools or adopt them in silos across campus without proper oversight. Still, the opportunities to enhance student learning experiences and help prepare students for a changing workforce mean faculty, staff, and leaders should work to identify how they can prepare to adapt as generative AI uses evolve. Involving stakeholders from across campus and building a strategy incorporating proper data governance and other policies will help institutions avoid costly mistakes.

The Bottom Line

Although some respondents reported that their institutions already have some AI tools and resources in place, the majority do not. Respondents expect and hope that in the coming years, their institutions will do more across the board to support and develop the opportunities available from generative AI. About half of respondents indicated their institution has begun developing an enterprise AI strategy and has already been involving stakeholders from across the institution. Respondents want their institution to use AI tools to benefit the student experience, learning design, administrative automation, and more. Realizing those hopes may be difficult, but creating a strategy now can help institutions ease later burdens and costs.

The Data: Current Offerings

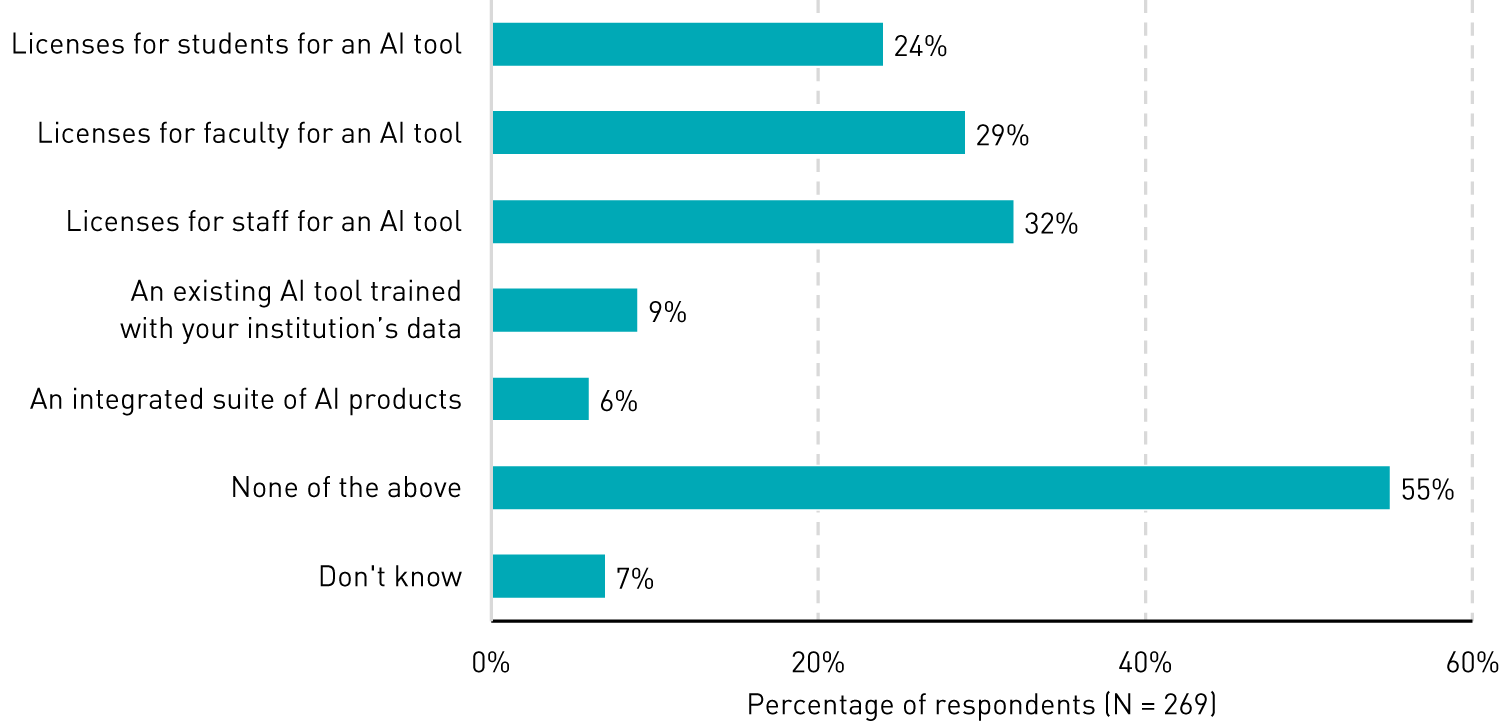

Most institutions are focusing on guidelines and resources rather than licenses for AI tools. A majority of respondents (55%) indicated that their institution does not currently provide any of the AI tools or services we asked about: licenses for students, faculty, and staff for AI tools; AI tools trained with institutional data; and integrated AI products (see figure 1). Only about a third or fewer of respondents indicated that their institution currently provides licenses for AI tools, and fewer than 10% said that their institution currently provides AI tools trained on institutional data or an integrated suite of AI products.

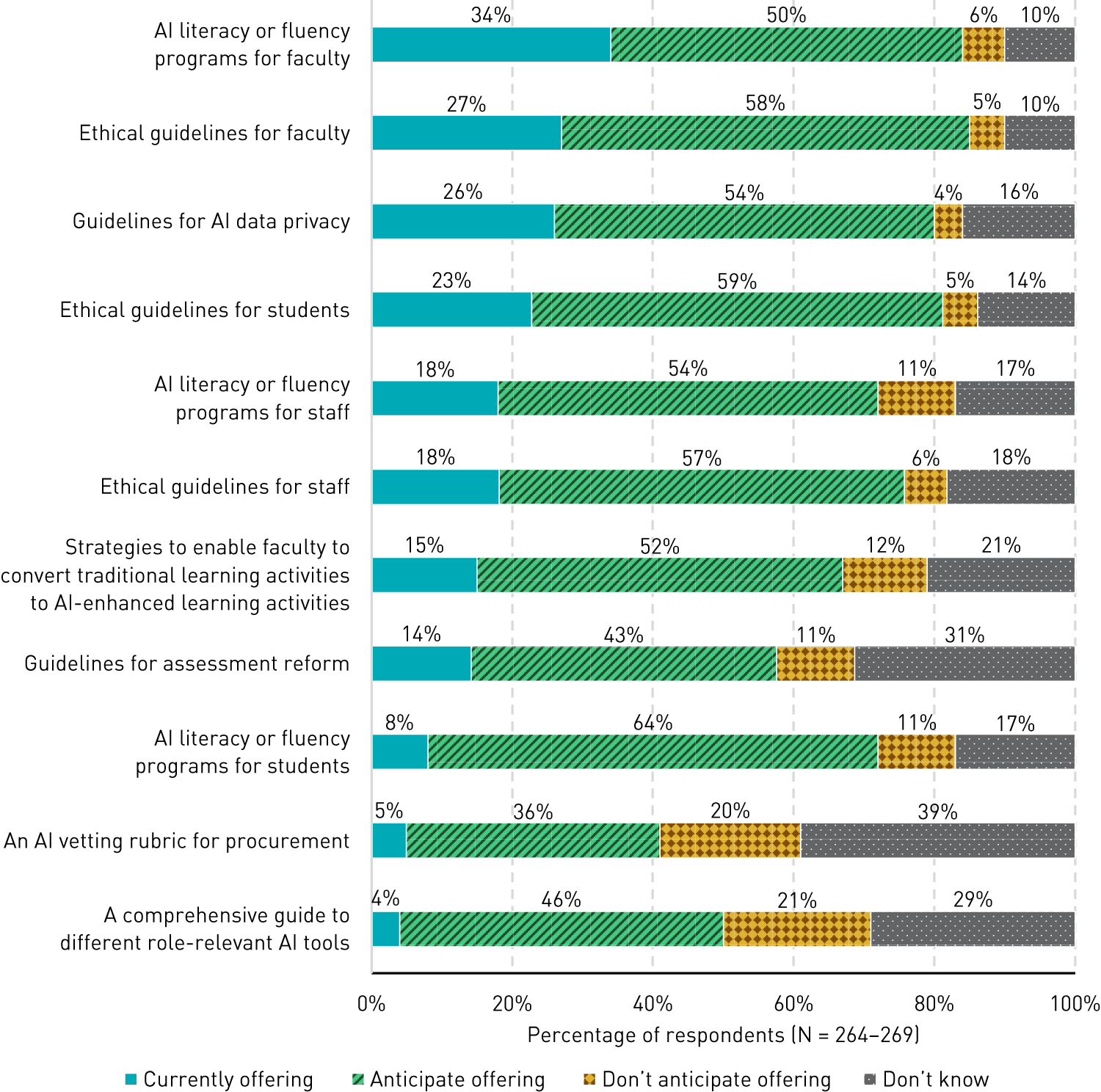

A minority of respondents said that their institution is currently offering any of various AI resources; the most common was AI literacy or fluency programs for faculty (34%), and the least common was a comprehensive guide to different role-relevant AI tools (4%) (see figure 2). Although few respondents said their institution is currently offering resources, majorities (50–64%) did say that their institution is anticipating offering literacy or fluency programs, ethical guidelines, and strategies to develop enhanced-AI learning activities soon, suggesting that many of these institutions are still developing resources. Respondents were less certain about whether their institution currently offers or will eventually offer AI vetting rubrics for procurement (39% selected "don't know"), guidelines for assessment reform (31% selected "don't know"), and a comprehensive guide to different role-relevant AI tools (29% selected "don't know"). Overall, relatively few respondents (21% or less) indicated that their institution does not plan to offer the resources listed in figure 2.

The Data: Strategy and Stakeholders

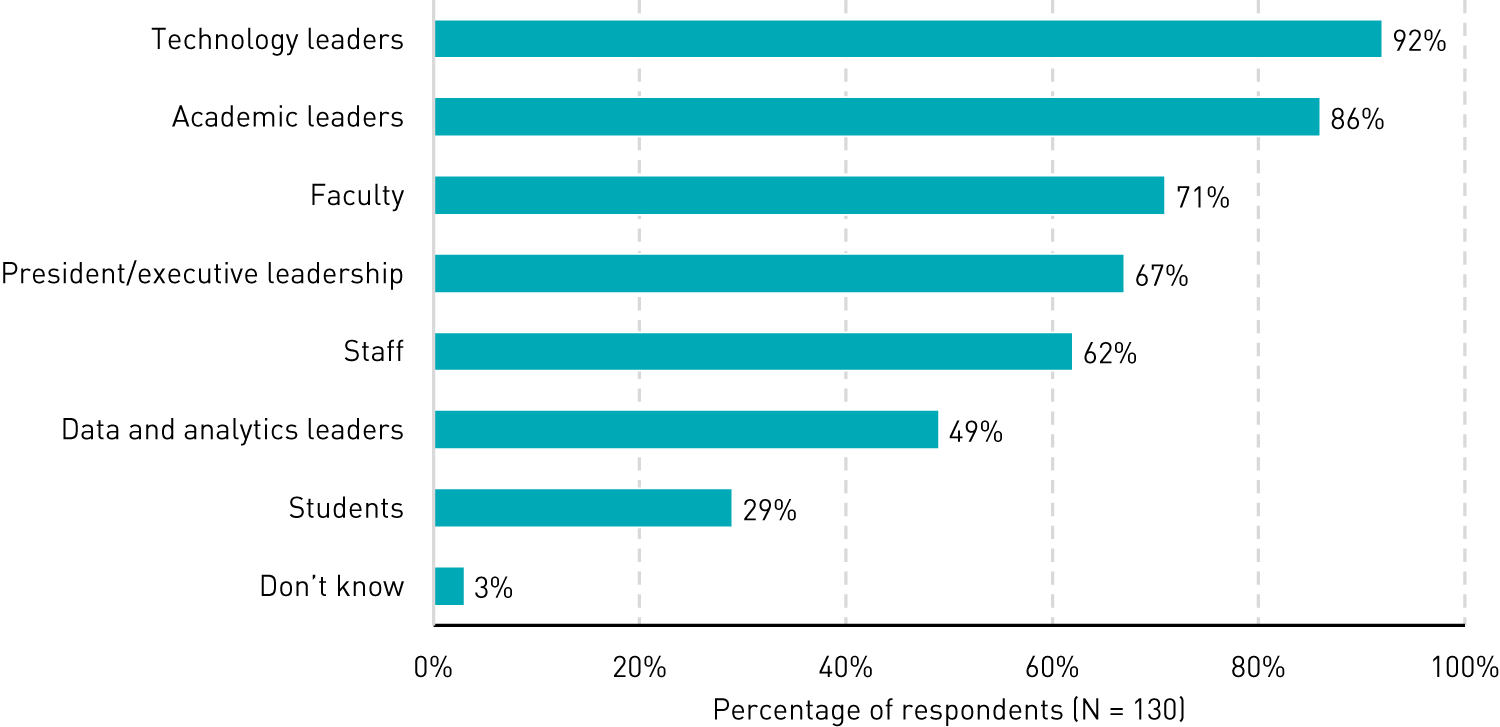

Institutions are listening to voices from various stakeholders. Just under half (48%) of respondents indicated that their institution has begun developing an enterprise strategy for generative AI; 33% have not, and the remaining 19% didn't know. Institutions that have begun developing a strategy have been involving stakeholders from across the organization (see figure 3). Most notably, technology leaders (92%) and academic leaders (86%) were reported as the most involved, while students (29%) were the least likely to have been recruited to participate in the discussion. When asked about other stakeholders who were involved, the most common responses included vendors or other third-party organizations, libraries, and centers for teaching and learning. Of the few respondents who reported that their institution involves students, a majority indicated that students make recommendations but don't necessarily have direct decision-making authority. The recent 2024 EDUCAUSE AI Landscape Study showed that the primary motivator for AI-centric planning is the rise of student AI use in their courses (73% of respondents), so it may benefit institutions to bring more students into the conversation.[2]

The Data: Current and Future Points of Focus

Institutions involve generative AI in many use cases, but stakeholders want more focus across the board. A plurality of respondents indicated that their institution currently focuses to some extent on using generative AI to enhance student learning experiences (47%), create new learning design and assessment approaches (41%), and boost productivity (35%) (see figure 4). However, a majority of respondents said that their institution currently focuses very little or not at all on using generative AI to create new curricular programs or to create new core capabilities (55% and 52%, respectively). Looking forward, more respondents felt that their institution will focus to a great extent on all five AI uses within the next two years, especially on using generative AI to enhance student learning experiences.

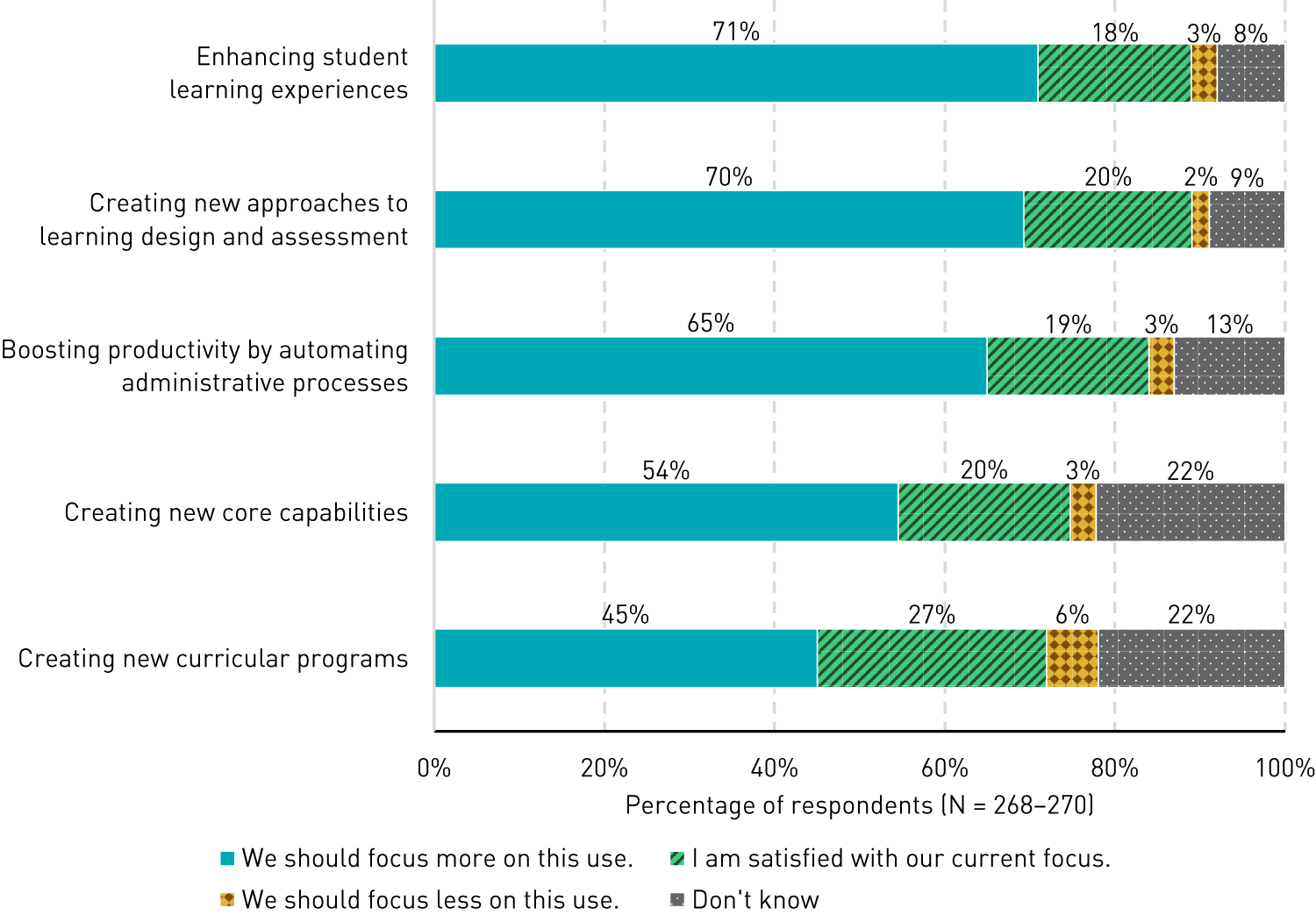

Across all five areas of AI usage, most respondents think their institution should increase its focus (see figure 5). Notably, a large majority felt that their institution should focus more on using AI to enhance student learning experiences (71%) and to create new approaches to learning design and assessment (70%). Interestingly, few respondents (27% or less) were satisfied with their institution's current amount of focus on AI uses, and far fewer respondents thought their institution should focus less on AI uses (6% or less). The low percentages of currently satisfied respondents may be due to the relative lack of maturity of institutional AI planning, strategy, and implementation (i.e., institutions may be in the early stages of strategy and implementation or may not have even started the process). The low number of respondents reporting that their institution should focus less on different generative AI uses suggests that respondents in general see the importance, value, and perhaps growing influence of AI in various areas of higher education.

Common Challenges

Budgeting and staffing constraints are hampering the creation of strategies to support the adoption of AI tools. When respondents were asked what challenges they face in developing AI strategy, budget and staffing concerns were the most frequently cited. Limited financial and human resources are commonplace in higher education, and that lack of resources makes it hard to keep up with current needs, let alone create and support something as new and as big as these emerging AI technologies. Identifying cost-effective solutions will be essential. Looking to others in the higher education community who are able to bear the costs and lead the way may help, and identifying smaller projects or encouraging staff and faculty to look for grant support might help alleviate some of the burdens. For example, on December 1, 2023, the NSF issued a Dear Colleague Letter (DCL) calling for more AI use in its program pages, so now is a good time to look for new opportunities.

Lack of awareness and support across the institution creates roadblocks. Respondents reported that concerns about privacy and ethics have been raised by committees and leaders at their institution, impeding progress on developing a strategy to adopt AI tools. While those concerns are valid, institutions can mitigate them by creating a strategy that develops safeguards and includes guidelines for data governance to help improve data transparency and the ethical use of data.

Promising Practices

Interdisciplinary committees and task forces help bridge gaps in knowledge and create paths forward. Many respondents reported that their institution is utilizing existing committees, which saves resources, or has created new groups to gather information and recommendations on AI adoption strategy from stakeholders in various departments and positions on campus. As with other projects that affect such a diverse group of end users and support staff, soliciting input from these different sources helps produce informed and comprehensive guidelines and strategies.

Piloting individual tools helps stakeholders understand AI tool benefits and challenges. Identifying individual use cases for generative AI has allowed several respondents' institutions to test ways to improve efficiency (through easier code development and faster data analysis) and improve student learning experiences (through adoption by faculty and instructional designers). Pilot programs that use AI tools can help identify issues to address, such as data security, usability, accessibility, and privacy, while reflecting on the process and results can lead to the creation of recommendations and guides for future work with other AI tools.

Further your knowledge and discuss with the community. Perhaps the most promising practice we have at our disposal is collaborative discussion with our colleagues. Together, we can make sense of new opportunities. As always, EDUCAUSE is here to support you. We encourage the higher education community to keep up with this rapidly evolving topic through our Connect Community Groups, such as Instructional Technologies, Instructional Design, Blended and Online Learning, and CIO.

- Wondering how ready your institution is to tackle AI? EDUCAUSE, in conjunction with AWS, has just released the Higher Education Generative AI Readiness Assessment. This assessment is designed to provide a sense of your institution's preparedness for strategic AI initiatives. Use the assessment to develop an understanding of the current state and the potential of generative AI at your institution and as an opportunity for discussion with others.

- The 2024 EDUCAUSE AI Landscape Study summarizes the higher education community's current sentiments and experiences related to strategic planning and readiness, policies and procedures, workforce, and the future of AI in higher education.[3] EDUCAUSE will be releasing a follow-up report on AI sentiments based on roles and responsibilities.

- "EDUCAUSE QuickPoll Results: Positioning Higher Education IT Governance as a Strategic Function" discusses the need for formally managed data governance to enable work on an AI strategy.[4]

All QuickPoll results can be found on the EDUCAUSE QuickPolls web page. For more information and analysis about higher education IT research and data, please visit the EDUCAUSE Review EDUCAUSE Research Notes topic channel. For information about research standards, including for sponsored research, see the EDUCAUSE Research Policy.

Notes

1. QuickPolls are less formal than EDUCAUSE survey research. They gather data in a single day instead of over several weeks and allow timely reporting of current issues. This poll was conducted between April 8 and April 9, 2024, consisted of 15 questions, and resulted in 278 complete responses. The poll was distributed by EDUCAUSE staff to relevant EDUCAUSE Community Groups rather than via our enterprise survey infrastructure, and we are not able to associate responses with specific institutions. Our sample represents a range of institution types and FTE sizes.

2. Jenay Robert, 2024 EDUCAUSE AI Landscape Study, research report (Boulder, CO: EDUCAUSE, February 2024).

3. Ibid.

4. Ashley Caron, "EDUCAUSE QuickPoll Results: Positioning Higher Education IT Governance as a Strategic Function," EDUCAUSE Review, February 21, 2024.

EDUCAUSE Mission Partners

EDUCAUSE Mission Partners collaborate deeply with EDUCAUSE staff and community members on key areas of higher education and technology to help strengthen collaboration and evolve the higher ed technology market. Learn more about Amazon Web Services, 2024 EDUCAUSE Mission Partner, and how they're partnering with EDUCAUSE to support your evolving technology needs.

Sean Burns is Corporate Researcher at EDUCAUSE.

Nicole Muscanell is Researcher at EDUCAUSE.

© 2024 Sean Burns and Nicole Muscanell. The content of this work is licensed under a Creative Commons BY-NC-ND 4.0 International License.