Generative AI is arriving in higher education, but stakeholders are uncertain about its potential opportunities and challenges. One thing is clear: there is work to be done, and there's no time to waste.

EDUCAUSE is helping institutional leaders, technology professionals, and other staff address their pressing challenges by sharing existing data and gathering new data from the higher education community. This report is based on an EDUCAUSE QuickPoll. QuickPolls enable us to rapidly gather, analyze, and share input from our community about specific emerging topics.[1]

The Challenge

Few technologies have garnered attention in the teaching and learning landscape as quickly and as loudly as generative artificial intelligence (AI) tools—tools that use AI to create content such as text, images, and sounds. Specifically, the text-generating tool ChatGPT has been making headlines, despite GPT models having been around for a few years. The recent attention is undoubtedly due to major advancements in GPT-3, which allows people to easily generate content that is comparatively sophisticated and increasingly difficult to identify as having been written by a computer. People are using these tools to do everything from negotiating cable bills to writing love songs. As a society, we're still trying to figure out how we want to use generative AI, and this uncertainty is creating some tension among higher education stakeholders. Faculty seem firmly divided; some are excited about leveraging generative AI in the classroom, while others are banning its use. Administrators are rushing to put together institutional policies governing generative AI use.

The challenge? We asked ChatGPT, and what we got is shown in figure 1.

The Bottom Line

These QuickPoll data reveal multiple emerging tensions and points of view. Far from arriving at a consensus, institutional stakeholders are still forming opinions about generative AI. Students have already begun to use the technology for coursework, and faculty and staff are using it for work. However, generative AI is so emergent that most institutions don't have policies on its use. Generative AI poses serious implications for ethics, equity and inclusion, accessibility, and data privacy and security, to name just a few. With such high stakes, institutional leaders are under pressure to make the right decisions, but as users quickly adopt generative AI for life and work, time is of the essence. The most productive immediate action stakeholders can take is to bridge institutional silos for focused discussion around the implications of generative AI for their communities.

The Data: General Sentiments

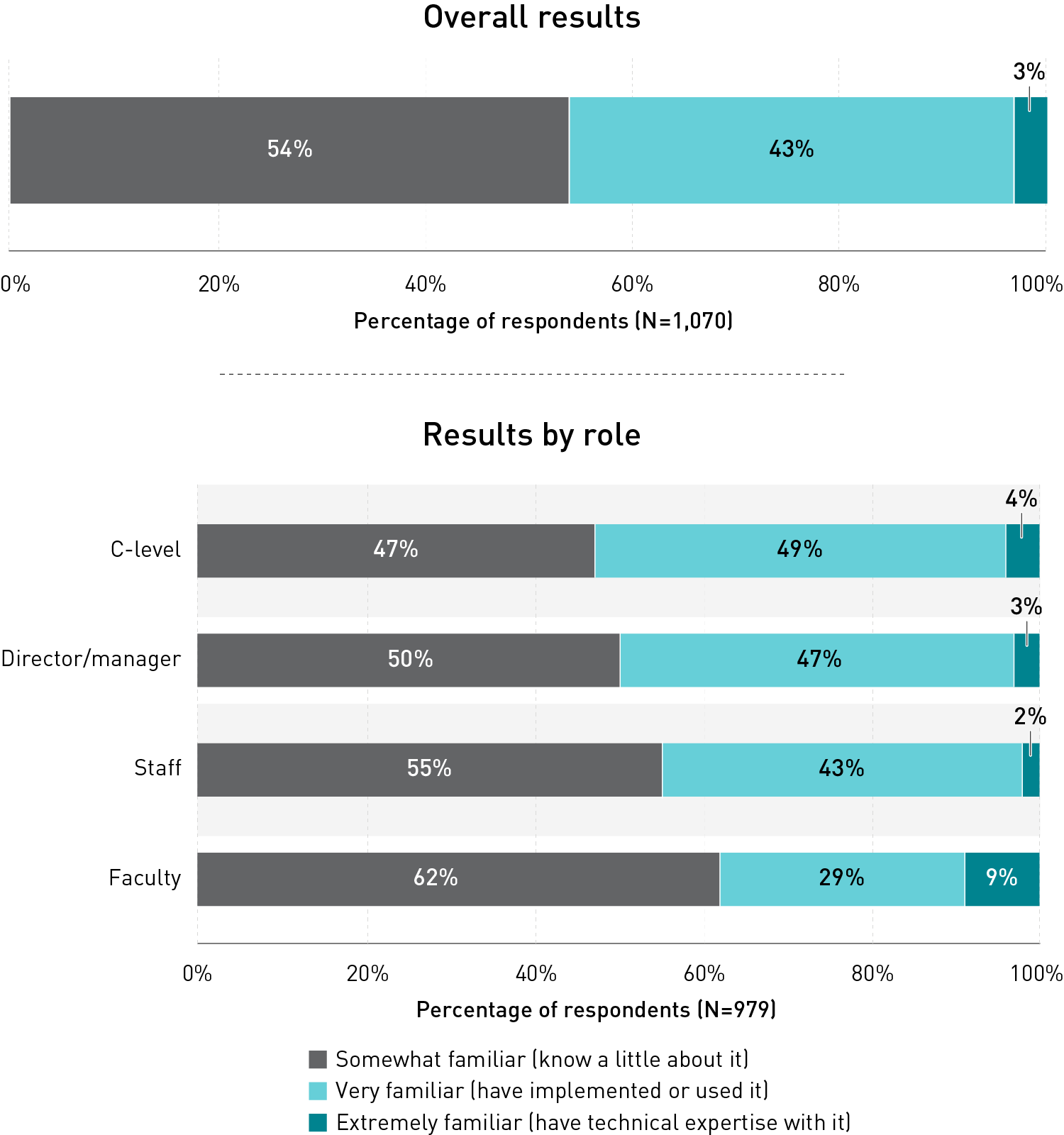

Almost everybody has heard of generative AI. Only 21 respondents indicated that they were not at all familiar with generative AI and that this QuickPoll was the first they had heard of the technology.[2] Nearly half (46%) of respondents indicated that they are very or extremely familiar with generative AI (see figure 2). Leaders expressed the highest level of familiarity with the technology. About half of managers (50%) and C-level leaders (53%) described themselves as very or extremely familiar with the technology, compared to 45% of staff and 38% of faculty.

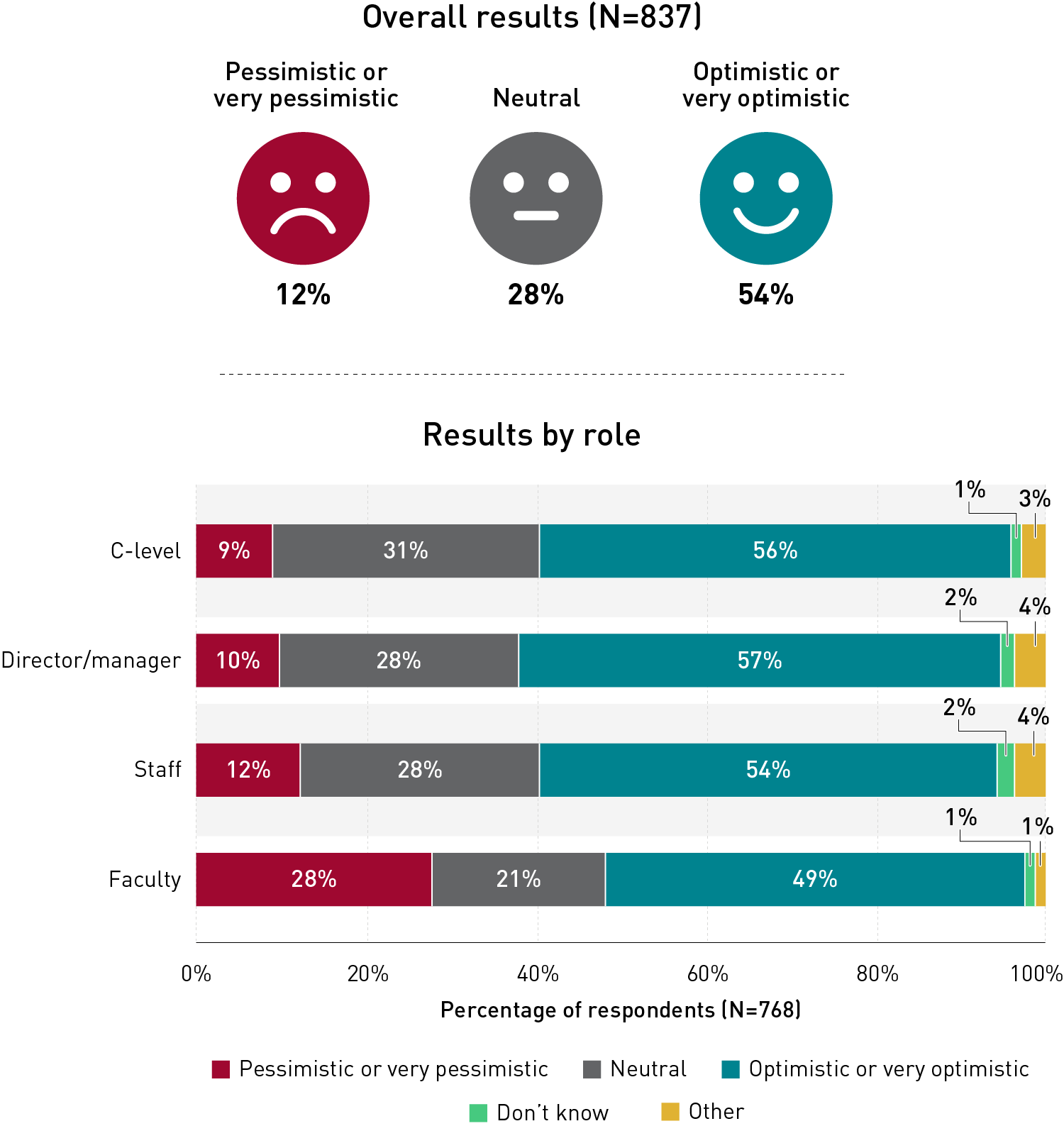

When it comes to sentiment toward generative AI, it isn't all sour grapes. More than half (54%) of respondents indicated that they are optimistic or very optimistic about generative AI in general, with only 12% describing themselves as pessimistic or very pessimistic (see figure 3).[3] A small percentage of respondents (3%) selected "other" when asked about sentiment toward AI. These respondents generally described themselves as cautious or concerned.

Several respondents described a mixture of feelings, aptly summarized by one respondent as "all over the map."[4]

Cautious because of how it could be misused.

Not very much interested. Just a new shiny toy.

Critical: see both benefits and challenges associated with it.

A combination of high optimism and some trepidation.

Curious as to how it impacts the future of higher ed.

I am optimistic about the technology itself but pessimistic about how it will be used within education and society.

Faculty respondents were the most pessimistic about generative AI. Over a quarter (28%) of faculty respondents indicated they are pessimistic or very pessimistic about the technology, whereas just 9% and 10% of C-level leaders and managers, respectively, indicated that they feel pessimistic or very pessimistic. These differences may be related to leaders reporting having more familiarity with generative AI. Familiarity with the tool might lend itself to more optimism. Conversely, individuals who are more optimistic about a technology could be more inclined to seek knowledge and experience with it. Overall, differences between faculty and other stakeholders at institutions point to the need for more collaborative discussions about the current and future impact of this emerging technology on higher education.

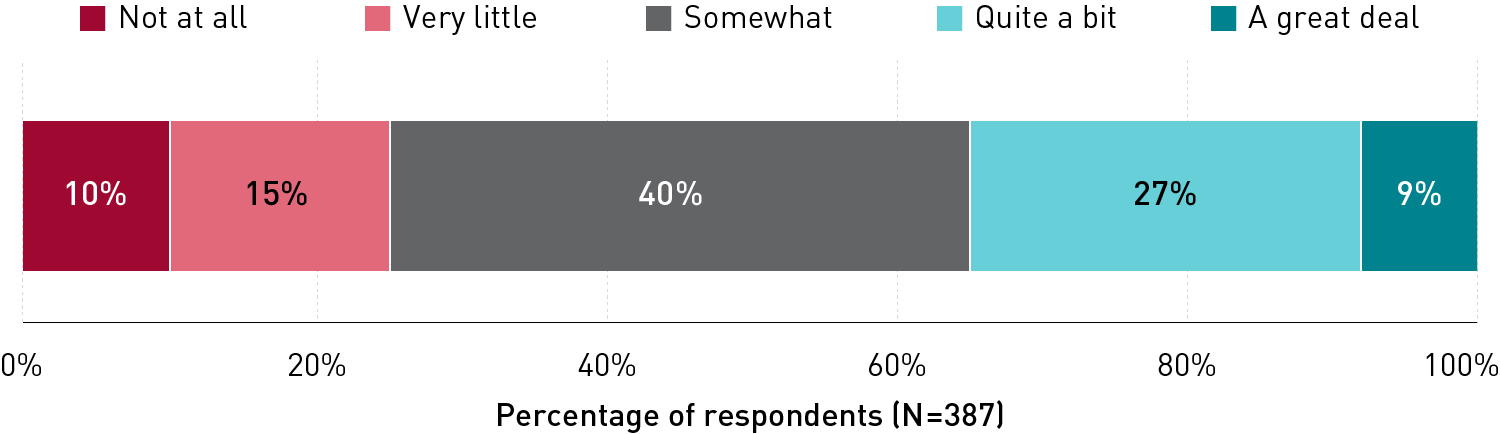

Leaders are talking to other leaders about generative AI. We asked institutional leaders about the extent to which they are engaging in conversations about generative AI with other leaders at their institution. Leaders are clearly engaged in discussions with each other about using, supporting, and planning for generative AI. A wide majority (90%) of managers and C-level leaders indicated that they are discussing generative AI with other leaders at least a little bit, with three-quarters (75%) of managers and C-level leaders indicating that they are having those discussions somewhat, quite a bit, or a great deal (see figure 4).

The Data: The Current State

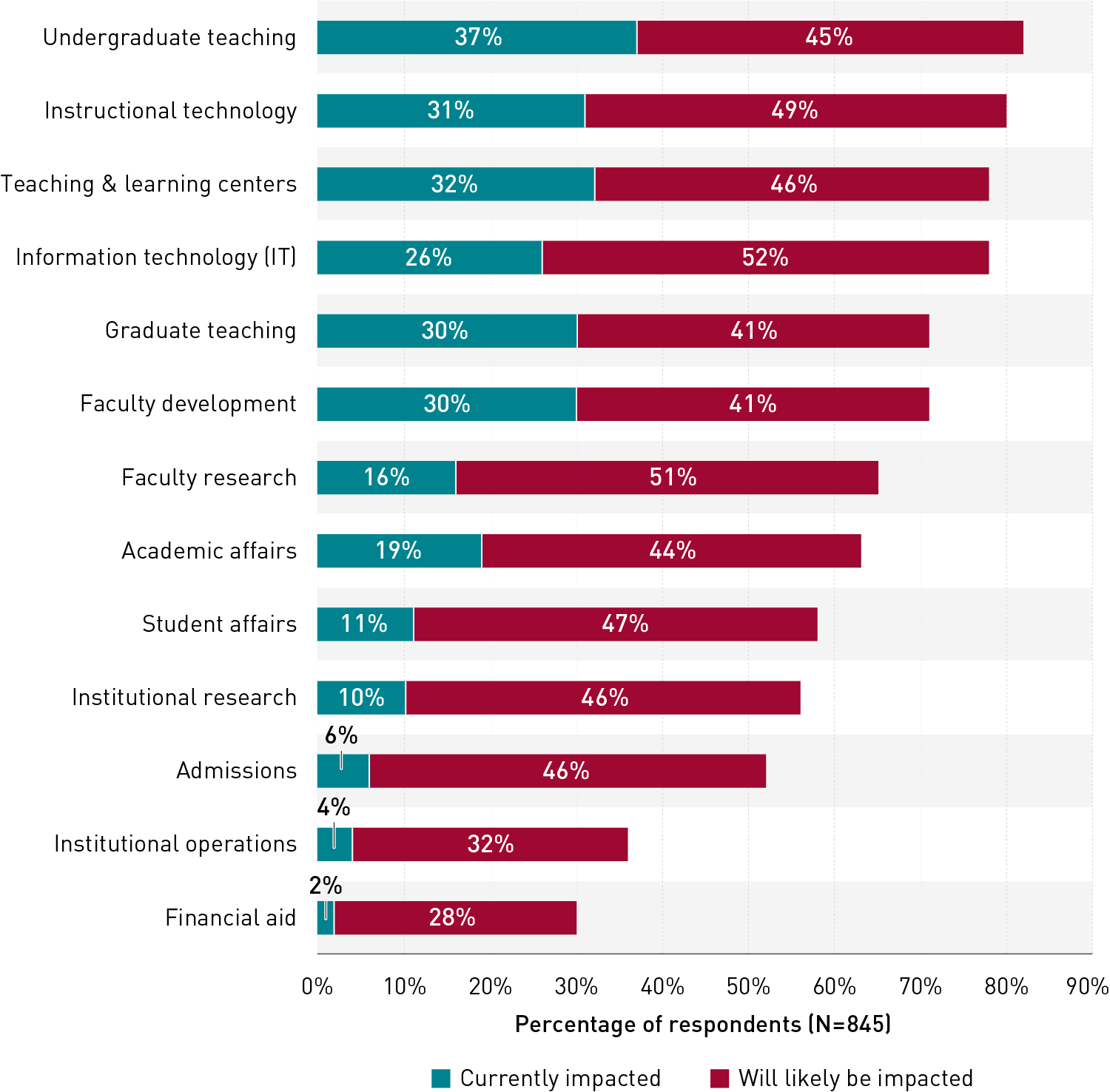

Generative AI is already beginning to impact teaching and learning. Asked whether 13 institutional areas are currently being or are likely to be impacted by generative AI, 54% of respondents indicated that one or more of these areas are being impacted now, while surprisingly, 46% did not see a current impact in any of these areas (see figure 5). The fact that almost half of respondents did not report any current impacts may be due to the quickness with which generative AI has gained attention, in addition to many institutions still being in early-response mode. Of those who identified current areas of impact, the most frequently selected were related to teaching and instructional support: undergraduate teaching (37%), teaching and learning centers (32%), instructional technology (31%), and graduate teaching (30%).

Everybody wants to know more. Respondents were asked to describe how these areas are currently being impacted by generative AI. A majority of respondents said that the recent spike in attention to generative AI has led to an onslaught of questions and ongoing discussions. Faculty want to know what policies to implement in their courses and what support is available. Across the institution, leaders, faculty, staff, and IT are all asking questions about curriculum and assessment design, plagiarism detection and student misconduct, and what the broader impacts will be outside teaching and learning:

I'm trying to think of a pithy statement that captures all of it in one shot, and I can't...everyone is trying to figure out how to respond to generation, detection, cost, training (of both students and faculty), and scholarship...all at the same time.

Students and perhaps others in and around the university community are using it, and faculty and administration are reacting to it, both optimistically and critically. If *nothing* else, people across the community are spending tremendous time exploring and discussing it, to the point it is already a distraction from teaching and learning.

Currently there are discussions occurring around campus about how best to incorporate generative AI into the teaching, learning, and research environment. Faculty are wanting to learn more from people like me who work with instructional and emerging technologies.

Instructional tech is looking at the impact across broad audiences at our institution, as well as assessing the impact on current practices.

[There are] increasing requests for plagiarism detection within LMS or supported educational technologies. [There is] growing interest from faculty in ways to leverage generative AI for teaching (i.e., generating frequently asked questions during communication / feedback about course materials / assessments with students). [There is] growing interest in how to automate frequent communications (e.g., nudging emails / text / chats) for departmental use.

For now, it's taking a great deal of attention and focus. My IT unit has taken a lead on hosting university-wide discussion sessions, and a partner unit (teaching center) has created a resource around policy language, particularly for syllabi.

Some are being proactive and opportunistic. Institutions that have already taken steps to address the use of generative AI vary in their approaches and responses. Some are embracing the opportunity of generative AI by incorporating it into their curriculum or using it to improve work efficiency:

[My university] has conducted six studies on the integration of AI and generative AI into all areas and fields. We are teaching students how to use AI art generators and ChatGPT-3 responsibly and to set them up for success in the future.

We're currently using ChatGPT as part of undergraduate instruction in Computer Science. Our Teaching and Learning Center is already gathering information and generating resources to help faculty make informed decisions.

We have been using [a commercial AI program] to assist our students to write "better questions" through inquiry-based learning, and we are adopting their writing tool to help students with the mechanics and to assist faculty in grading. I am using a variety of AI to assist in multiple tasks.

Our curriculum management system is looking into implementing/integrating ChatGPT.

Our Distance Education is using a chatbot to help our distance students with administrative issues. I hope our IT Service Desk can utilize a chatbot to assist with IT problems.

AI is already being used to reduce alert fatigue. I see this as a good thing.

As faculty, I am already using it to help develop course topics. I have used it to help assist setting up server technology and to write code to assist in developing course resources much faster than if I had to rely on traditional methods.

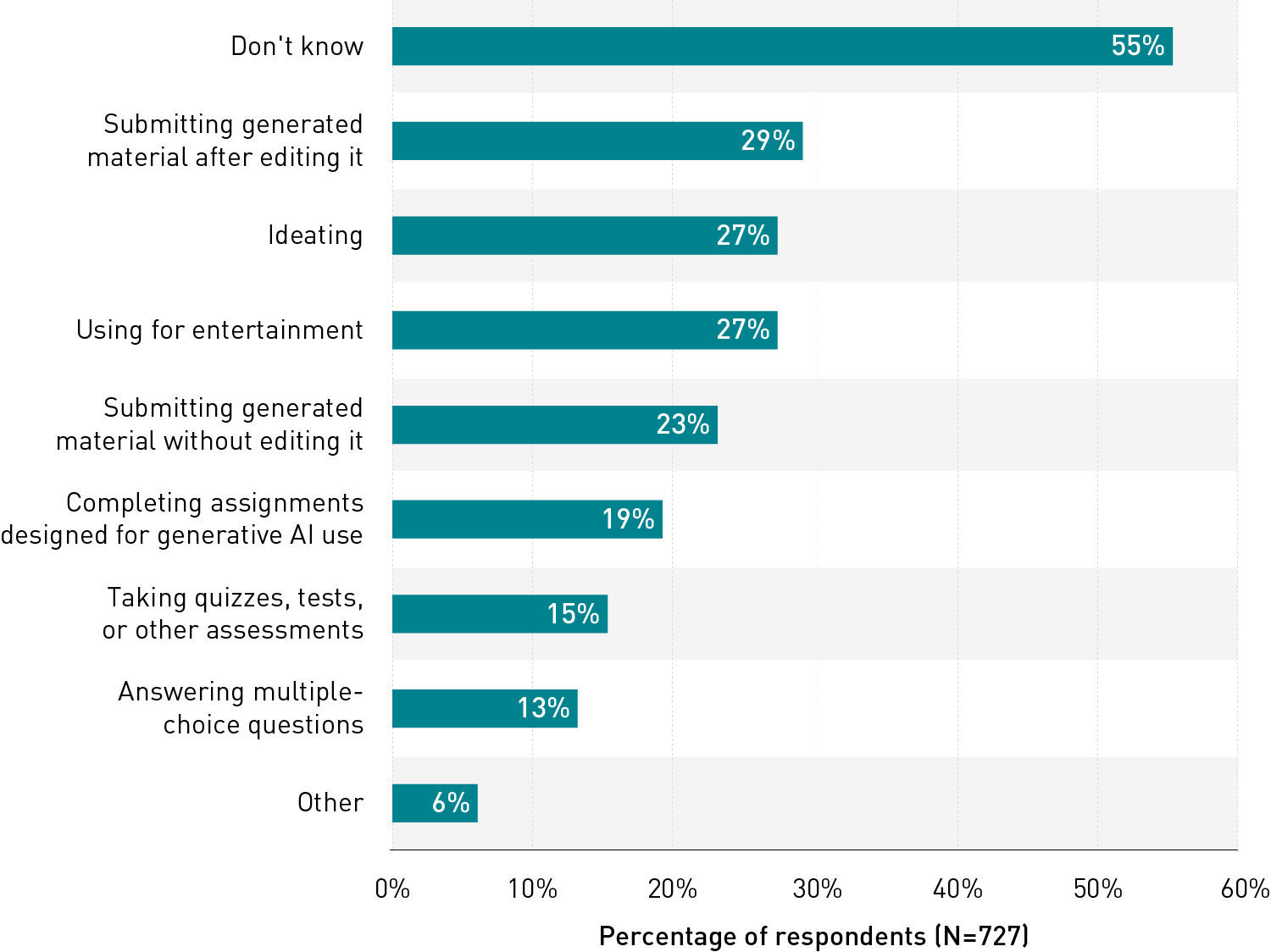

It's too early to know everything about how students, faculty, and staff are using generative AI. Respondents were asked how, to their knowledge, students are using generative AI. A majority (55%) indicated that they do not know (see figure 6). Among those who did identify areas of student use, the most common uses were submitting generated material after editing it (29%), ideating (27%), entertainment (27%), and submitting generated material without editing it (23%).

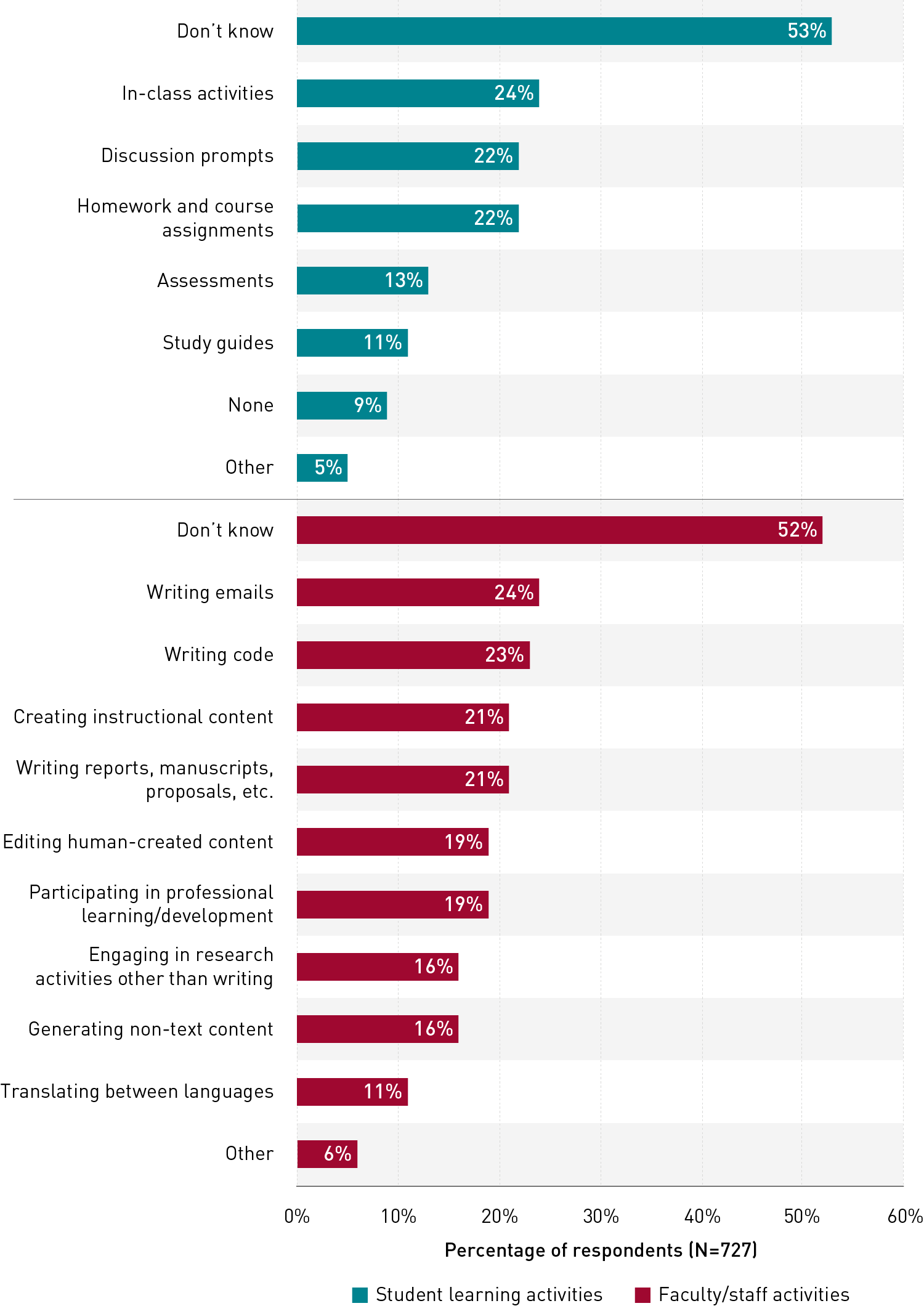

We also asked respondents how, to their knowledge, faculty and staff are using or planning to use generative AI. Again, a majority indicated that they do not know. For student learning activities, 53% selected "don't know," and for faculty and staff activities, 52% selected "don't know" (see figure 7). Those who had knowledge of the use of generative AI tools for student learning activities most often cited in-class activities (24%), discussion prompts (22%), and homework and course assignments (22%). For faculty and staff activities, a number of respondents indicated that faculty and staff have incorporated or are planning to incorporate these tools into activities such as writing emails (24%), code (23%), and reports, manuscripts, and proposals (21%).

When it comes to how generative AI is being and should be used, it's a mixed bag. Respondents described a variety of other ways students, faculty, and staff are using generative AI. As one respondent explained, the "sky's the limit."

Students

- Accommodations and accessibility

- Tutoring or course content comprehension

- Conducting research

- Résumé writing and career development

- Improving existing tools such as tutor bots

Faculty and Staff

- Assessment of other AI tools

- Including "wise use" of AI tools in learning objectives

- Institutional research

- Student support services (e.g., advising)

- Website content

- Marketing materials

- Letters of recommendation

- Malware analysis

Amid so much uncertainty, what's clear is that institutional stakeholders are still grappling with generative AI and have yet to reach consensus about how it should be used or even whether it should be used. Yet these data show that, despite some concerns, those using these tools are being opportunistic, using them in a wide variety of creative ways:

Some instructors are exposing students to [generative AI] while others are telling them they will receive a zero score for using it and [will be] referred to the [dean].

Some faculty and staff might be using it, but people are keeping it pretty quiet since many are afraid of it and consider it cheating. That voice is drowning out the voices of those who think using it will help with digital literacy.

As far as I know, we are planning none of these because we have deep ethical concerns about being complicit in technologies that are released without much regard for their effect on society.

The Data: Planning for the Future

In the future, impact will extend beyond academic integrity. Although respondents indicated that most of the areas currently being impacted by generative AI are related to teaching and learning, especially academic integrity issues, they felt across the board that each of the 13 institutional areas we asked about will be more strongly impacted in the future. While only 54% identified one or more of these areas as currently being impacted, 85% indicated that one or more of these areas will be impacted in the future (see figure 5). Close to half of respondents said that areas such as information technology (IT) (52%), faculty research (51%), instructional technology (49%), student affairs (47%), institutional research (46%), admissions (46%), teaching and learning centers (46%), and faculty development centers (41%) will likely be impacted by generative AI in the future.

Respondents were also asked to describe how their institutional areas or units will likely be impacted by generative AI in the future. While many noted that teaching and learning issues will still be of utmost importance, they also felt that generative AI would be integrated into and impact other areas of the institution, signaling that everyone will need to be prepared:

AI is not going to go anywhere. We have to adapt to it, and the earliest we can do that the less damage it will do.

It's difficult to imagine that generative AI in some form WON'T touch every area of the institution. From data mining to decision forecasting, what-if analysis, marketing materials etc.,...All can and should prepare to be flexible and innovative instead of pushing back blindly.

All administrative areas will be impacted as ChatGPT features make their way into [commonly used software]. Whether they are consciously aware or not, administrative workers will be using AI features. Researchers will begin to use AI to help them analyze and explore data sets. IT professionals will encounter self-monitoring and updating hardware and software tools. Faculty will begin using AI to cut down on the amount of time spent generating questions and assignments and to create more individualized prompts.

Any area that requires an individual to write a response or assessment on well-defined topics will be impacted.

Policy plans are in the works, but widespread changes aren't evident (yet). Respondents were asked to describe which types of generative AI policies (if any) are being developed at their institution. Many said either that they don't know or that no policies are currently being developed. Among those who were aware of possible policy changes, many indicated that their institution is in the early stages of discussion and planning. As part of this work, institutions are reviewing existing policies and are planning, or have already implemented, some general guidelines and best practices for using generative AI, particularly for student use of generative AI to complete coursework:

It seems it's all focused on cheating right now. Very disappointed. We're missing the opportunity to develop critical thinking skills because faculty worry their 10-year old exam is being compromised.

We are in the very early stages of discussion concerning how to address use of these tools in our Academic Honesty policies.

Reviewing existing academic integrity statements in school catalogs, on school websites, and honor pledges in LMS, etc. (to make sure that the concept of "no unauthorized assistance" does not suggest only "human" assistance but also includes AI or technological assistance as well).

We have not yet written [a] policy, just suggestions on the best use (and ways to avoid its use, if needed).

Our teaching center has drafted sample language for faculty to include on their syllabi based on their acceptance/rejection of AI tools.

[We are] working on honor code integration first, which is needed but largely a red herring. Additional guidelines about how to use the tool, rather than deny its use, will be key.

Best practices fall on the shoulders of faculty and students. A number of respondents said that policies on generative AI are being left up to faculty to incorporate into their courses, and students are then encouraged to follow these policies:

None; it is up to the individual faculty to create their own course policy.

As far as I know, policies are in their infancy. Basically, we are telling students right now, don't use AI for your submitted work.

The faculty executive committee decided that no overall policy was required but that each faculty member should take account of AI issues and mention their approach in the syllabus.

Since there are opportunities mixed with the risks, it is being left to instructors. However, there are faculty development resources to support the discovery and choices that the instructors make.

Common Challenges

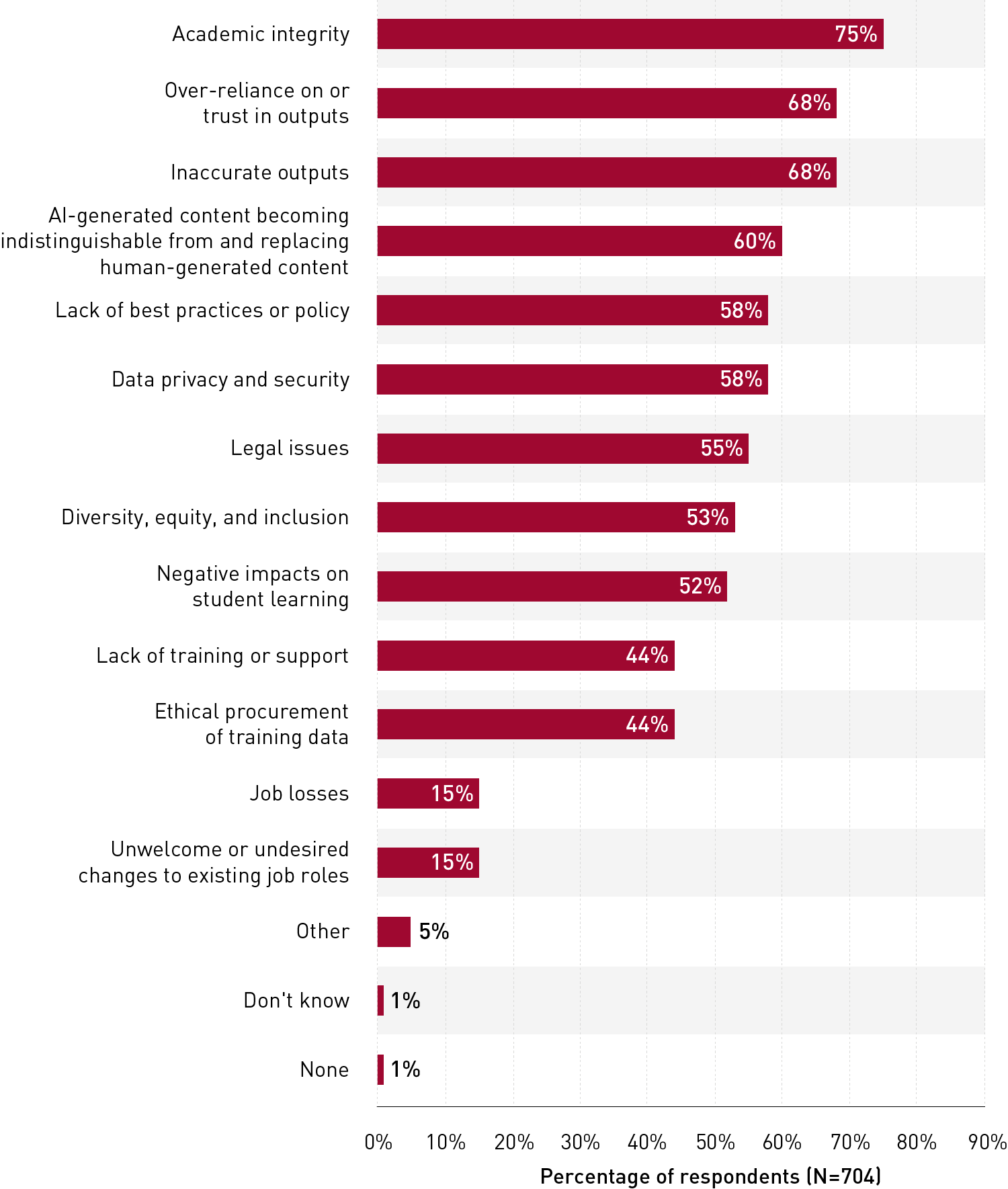

Survey says the top concern about generative AI is…wait for it…cheating. We asked respondents to select from a list of areas of concern about generative AI. A broad majority (87%) were concerned about at least four areas. Consistent with respondent comments on current impacts of generative AI, the most frequently selected area of concern was academic integrity (75%) (see figure 8). A majority of respondents also indicated that they have concerns about the outputs from generative AI, including over-reliance on or trust in output (68%), inaccurate output (68%), and AI-generated content becoming indistinguishable from and replacing human-generated content (60%). Many respondents also felt concerned about the lack of best practices for policy (58%) and data privacy and security (58%). A small number of respondents (5%) identified other challenges, including:

Over emphasis on enforcement and punishment

Fear overtaking policies instead of looking at it as a positive challenge

Misplaced concerns, overreactions at both ends of the spectrum (embracing or rejecting)

Everybody involved will require an uplift in their digital dexterity in order to use these tools effectively and safely.

Looking ahead to policies and support. Though academic integrity is currently the most pressing issue, many respondents indicated that the impact of generative AI would be felt by most areas of the institution in the future and that there would be broad challenges in areas such as diversity, equity, and inclusion (DEI), the overall impact on student learning, ethical issues surrounding data, legal issues, and a lack of training, support, and policy. It is hard to know how pervasive generative AI will be. To be prepared, conversations need to focus on the broader impacts and potential uses of generative AI, and leaders need to make sure everyone has a seat at the table. If generative AI is here to stay, higher education stakeholders will need to collectively engage around policy issues and provide support and ways to empower those using these tools. It is important to keep in mind that it is still very early—students were ahead of the game as early adopters of ChatGPT, and faculty had little time to adjust their teaching strategies before students started using it. Strategic planning is going to take time and will likely be an iterative process. As one participant noted:

It is taking an outside [sic] amount of time to develop policies and to help faculty respond to this technology. People keep comparing it to the calculator—but the calculator was not placed into student hands during the last paper of the semester before others had a chance to engage and respond to it.

Promising Practices

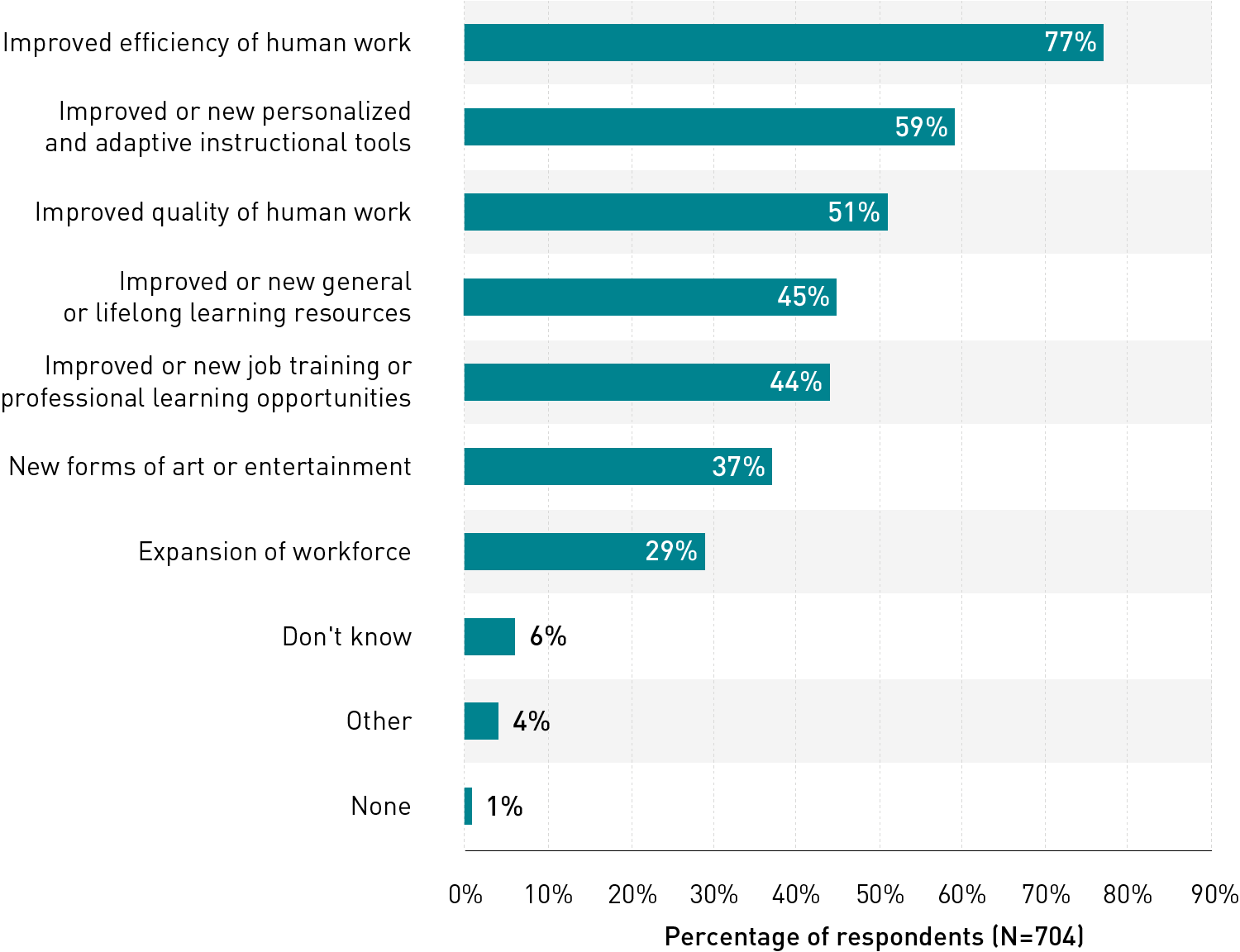

Generative AI holds real potential for the future of higher education. We asked respondents to select from a list of opportunities related to generative AI. A majority (81%) selected two or more items from the list, and more than three-quarters (77%) identified the potential to improve the efficiency of human work (see figure 9). Respondents also felt that generative AI can be used to develop or improve personalized and adaptive instructional tools (59%) and to improve the quality of human work (51%). Overall, respondents were relatively optimistic about future opportunities: less than 1% thought that generative AI provides no opportunities. Four percent of respondents also identified other areas of opportunity. Of these, a number of them thought that generative AI could be used to help spark curiosity and facilitate creative endeavors and the learning process:

I hope that it pulls intellectual curiosity back from obscurity (in the age of teaching-to-the-test)

Opportunities for students to analyze the output from the technologies to enhance their learning.

Another opportunity to shift our educational model toward one that is process-based, authentic, reflective, and meaningful.

Allow humans to focus on other more humanistic and/or creative endeavors.

New ways to spark creativity and get unstuck.

Further your knowledge and discuss with the community. Perhaps the most promising practice we have at our disposal is collaborative discussion with our colleagues. Together, we can make sense of new opportunities. As always, EDUCAUSE is here to support you. We've compiled a list of resources below, and we encourage all of our community to keep up with this rapidly evolving topic through our Connect Community Groups, for example: Instructional Technologies, Instructional Design, Blended and Online Learning, and CIO.

EDUCAUSE Resources

- EDUCAUSE Review: Special Report | Artificial Intelligence: Where Are We Now?

- Member QuickTalk | GPT: The Generative AI Revolution

- EDUCAUSE Showcase | AI: Where Are We Now?

- Upcoming EDUCAUSE Showcase | Moving from Data Insight to Data Action

- EDUCAUSE Exchange: The Impact of AI on Accessibility

- EDUCAUSE Showcase | Privacy and Cybersecurity 101

Community Resources

We have curated this list from emergent work in generative AI. We are excited to share the collective wisdom of our community. Please note that many of these resources are live, shared documents, and we cannot guarantee their durability over time.

- The practical guide to using AI to do stuff

- AI in Higher Education Resource Hub | Welcome to TeachOnline

- AI in Higher Education Metasite

- Classroom Policies for AI Generative Tools

- Chatbot Prompting: A guide for students, educators, and an AI-augmented workforce

- ChatGPT & Education

- Is AI the New Homework Machine? Understanding AI and Its Impact on Higher Education

All QuickPoll results can be found on the EDUCAUSE QuickPolls web page. For more information and analysis about higher education IT research and data, please visit the EDUCAUSE Review EDUCAUSE Research Notes topic channel, as well as the EDUCAUSE Research web page.

Notes

1. QuickPolls are less formal than EDUCAUSE survey research. They gather data in a single day instead of over several weeks and allow timely reporting of current issues. This poll was conducted between February 6 and 7, 2023, consisted of 15 questions, and resulted in 1,070 complete responses. The poll was distributed by EDUCAUSE staff to relevant EDUCAUSE Community Groups rather than via our enterprise survey infrastructure, and we are not able to associate responses with specific institutions. Our sample represents a range of institution types and FTE sizes.

2. Respondents who indicated that they had never heard of generative AI were not allowed to respond to any other questions and were not included in the final number of respondents.

3. Note that percentages have been rounded to the nearest whole number, occasionally resulting in sums slightly over or under 100%.

4. Open-ended responses have been lightly edited for readability and confidentiality.
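The rounding effect mentioned in the note on percentages above can be illustrated with a short sketch. The function name and the response counts below are hypothetical, chosen only for illustration; they are not QuickPoll data, and this is not necessarily how the report's figures were computed.

```python
def rounded_percentages(counts):
    """Convert raw counts to whole-number percentages of the total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


# Hypothetical counts (not survey data): each share is rounded up,
# so the rounded percentages add to slightly more than 100.
over = rounded_percentages({"optimistic": 4, "neutral": 1, "pessimistic": 1})
over_total = sum(over.values())

# Hypothetical counts (not survey data): each share is rounded down,
# so the rounded percentages add to slightly less than 100.
under = rounded_percentages({"optimistic": 1, "neutral": 1, "pessimistic": 1})
under_total = sum(under.values())

print(over, over_total)
print(under, under_total)
```

Because each category is rounded independently, small rounding errors can accumulate in either direction, which is why a chart's percentages may not sum to exactly 100%.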

Nicole Muscanell is Researcher at EDUCAUSE.

Jenay Robert is Researcher at EDUCAUSE.

© 2023 Nicole Muscanell and Jenay Robert. The text of this work is licensed under a Creative Commons BY-NC-ND 4.0 International License.