Analyzing data from across the institution to build a true picture of student engagement, and using targeted, scripted reporting to turn that picture into meaningful insights, has significantly improved student retention at Charles Sturt University.

What We Faced

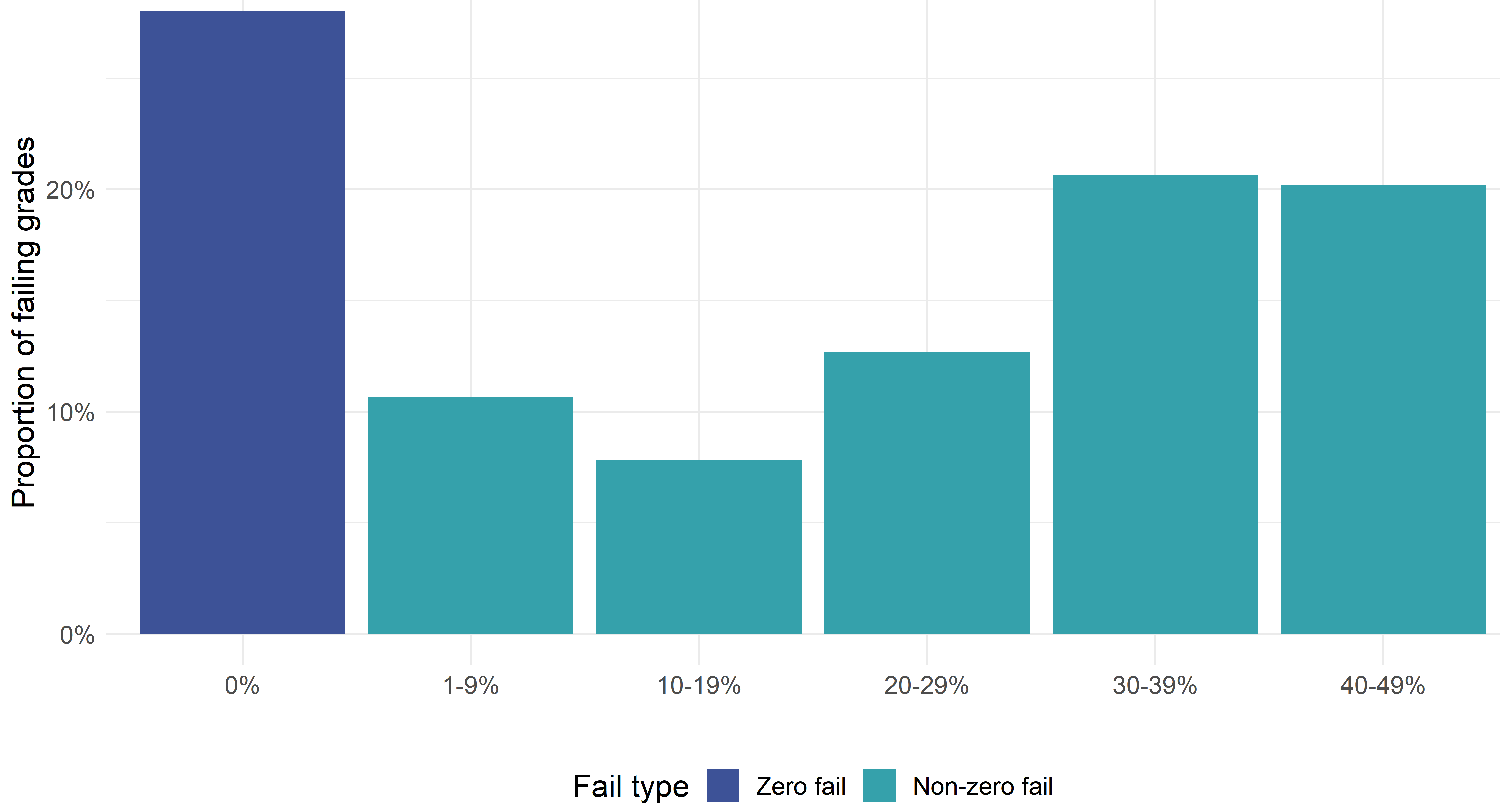

For many years, student attrition, progression, and completion rates have been a concern for government, universities, and the broader community. Across the higher education sector, most attrition occurs in the first year of study, so retention strategies often focus on students' first year at university. At Charles Sturt University, a large regional Australian university, the most common failing grade is a zero fail, which is given to students who have not submitted any assessment items (see figure 1). Our research and that of others have shown that receiving a zero-fail grade in the commencing session of university is associated with poor academic outcomes in subsequent study.[1] Avoiding this kind of failure can benefit students by keeping them from accruing debt for subjects that earn no credit and by increasing their chance of success in future subjects, and it benefits the university through improved progress rates. A second group commonly targeted for support is students on track to receive a "near miss" grade of 40–49%. These students have been engaged for at least part of the semester and may simply need a helping hand to get across the line.

What We Did

The Charles Sturt University Retention Team was founded by two scientists who received funding for a small faculty project and combined their analytical skills and passion for teaching to identify disengaged students. Like most institutions, Charles Sturt had developed an "at risk" model, but this model was not accurate for commencing students, for whom little learning data is available early in the session. Non-submission of an early assessment item was found to accurately identify disengaged students, and offering targeted support to these students significantly increased commencing progress rates. In 2019, the project was expanded to the whole university, and processes were automated and improved to relay key subject information from Subject Coordinators to a Student Outreach Team.

The Retention Team has created a data-driven model to identify and support students in their first year of university. Data from across the institution, including assessment submission, LMS activity, and live enrollment data, are analyzed to build a true picture of student engagement. The team uses two main strategies: an early intervention, which targets disengaged students, and an Embedded Tutors Program, which targets students who are engaged but struggling. Commencing undergraduate students are targeted at two points in their first semester:

- An early intervention in weeks 3 and 4 of the 14-week semester provides targeted outreach support to students who have not engaged in their studies: those who have not submitted an early assessment item or have low LMS activity and are on track to receive a zero fail.

- Embedded tutor draft assessment support is available in the lead-up to selected assessment items, and students who have performed poorly on earlier assessments are proactively contacted and encouraged to book time with a tutor. This work supports students who are on track to receive a "near miss" fail.
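The two triggers described above can be expressed as a simple routing rule. The sketch below is hypothetical: the field names, the LMS login threshold, and the 50% pass mark are illustrative assumptions rather than the Retention Team's actual criteria, and it ignores the timing of the two intervention points.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the article does not say what counts as "low" LMS activity.
LOW_LMS_LOGINS = 2
# Assumed pass mark in percent; 40-49% is the "near miss" band.
PASS_MARK = 50.0


@dataclass
class StudentRecord:
    """Hypothetical per-student snapshot for one subject."""
    student_id: str
    submitted_early_item: bool      # was the early assessment item submitted?
    recent_lms_logins: int          # LMS logins over a recent window
    earlier_mark: Optional[float]   # percent mark on an earlier item; None if not yet marked


def triage(s: StudentRecord) -> str:
    """Route a student to one of the two support streams, or to neither."""
    # Disengaged: no early submission or little LMS activity -> at risk of a zero fail.
    if not s.submitted_early_item or s.recent_lms_logins < LOW_LMS_LOGINS:
        return "early_intervention"
    # Engaged but struggling: failed an earlier item -> at risk of a near miss.
    if s.earlier_mark is not None and s.earlier_mark < PASS_MARK:
        return "embedded_tutor"
    return "no_action"


if __name__ == "__main__":
    cohort = [
        StudentRecord("s1", False, 0, None),
        StudentRecord("s2", True, 10, 42.0),
        StudentRecord("s3", True, 8, 71.0),
    ]
    for student in cohort:
        print(student.student_id, triage(student))
```

Checking disengagement first mirrors the order of the program: a student who has not engaged at all is routed to outreach before any question of marks arises.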

Early Intervention

The early-intervention program uses learning analytics and non-submission of early assessment items to identify students with low engagement in key subjects. The Student Outreach Team then contacts those students and offers support based on their circumstances. That support can include directions to academic support services, information about seeking an extension for a missed assignment, or options for reducing academic load. The intervention is highly time-sensitive. Students must make a decision about their studies by the end of week 4 to avoid accruing debt for the subject, yet enough time must have passed in the subject to show some academic engagement, ideally through submission of an assessment item.[2] To complicate matters, the program must account for the fluidity of the data environment in the first few weeks of an academic session: students are moving in and out of subjects, assessment due dates vary, extensions are being granted, and communication among everyone involved happens across a multitude of channels.

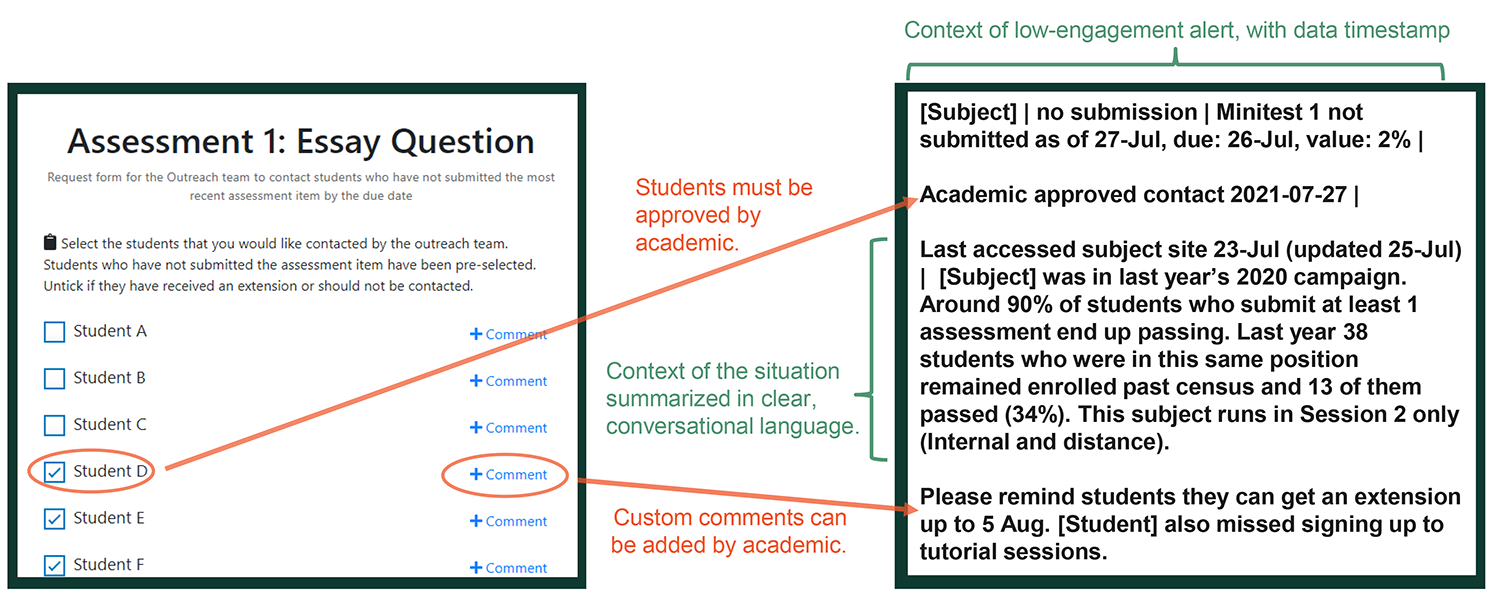

These factors place a key restriction on using automation to scale such a program: the academics teaching the subjects must be kept in the loop. The Retention Team found that roughly one in four students who miss an early assessment item have an extension and have been in communication with the instructor, and these data are simply not reliably stored in a central location. Scaling the project therefore focused on improving communication with instructors rather than on increasing automation. Custom-built web forms (as shown in the left side of figure 2) reduced the friction of communicating with numerous academics in a short space of time while eliciting key contextual information such as individual extensions or messages to the class.
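One way to fold the instructor-supplied context into the outreach list is sketched below. It assumes the web form returns the set of student IDs holding an extension and an optional class-wide message per subject, and it assumes students with an extension are held back from the call list. The function names, fields, and the subject code are hypothetical.

```python
from typing import Dict, Iterable, List, Set


def build_call_list(non_submitters: Iterable[str],
                    extensions: Set[str],
                    subject: str,
                    class_messages: Dict[str, str]) -> List[dict]:
    """Combine analytics flags with instructor-reported context (hypothetical schema)."""
    note = class_messages.get(subject, "")
    calls = []
    for student_id in non_submitters:
        # Instructor reports an extension: the student is already in contact, so skip the call.
        if student_id in extensions:
            continue
        calls.append({
            "student_id": student_id,
            "subject": subject,
            "instructor_note": note,  # e.g. a due-date change announced to the class
        })
    return calls


def share_with_extension(non_submitters: Iterable[str], extensions: Set[str]) -> float:
    """Fraction of flagged non-submitters that the instructor says hold an extension."""
    flagged = set(non_submitters)
    return len(flagged & extensions) / len(flagged) if flagged else 0.0


if __name__ == "__main__":
    flagged = ["s1", "s2", "s3", "s4"]
    extended = {"s2"}
    messages = {"BIO101": "Quiz 1 now due Friday of week 4"}  # hypothetical subject and message
    for row in build_call_list(flagged, extended, "BIO101", messages):
        print(row)
    print(share_with_extension(flagged, extended))  # 0.25
```

Keeping the instructor's class message alongside each record lets a caller respond to context that never reaches a central system.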

A second key consideration in designing the early-intervention system had two parts: who was the end user, and how could they be included in the design process? The planned intervention was a phone call with the student, so the key end user was the person making the call. An Outreach Team caller is certainly not a common stakeholder in applications of human-centered learning analytics, but is a vital voice to be heard in order to improve the quality of conversations with students and, in turn, the efficacy of the intervention.[3] Through an iterative design process and consultation with the outreach callers, a minimalist data summary was designed to help callers have a meaningful conversation with the student (as shown in the right side of figure 2). The summary also helped break down silos by sharing information with student support about what is actually happening in the class. Several key questions arose from the design process:

- How serious was the student's situation? Callers needed to find the right tone to their conversation.

- How "fresh" were the data? Callers needed to be able to deal with responses such as "But I submitted the assignment last night" with appropriate data in a fluid situation.

- Could the statistical language be made more usable in a conversation—for instance, "Of the 10 students who remained enrolled, 8 failed" instead of "80% failed"?
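The freshness and phrasing questions can both be handled when the summary is generated. The sketch below is a hypothetical illustration, not the team's actual summary: it tells the caller how old the submission data are and reports an outcome as a count out of a group rather than as a percentage. The function names and example timestamps are assumptions.

```python
from datetime import datetime


def freshness_note(extracted_at: datetime, now: datetime) -> str:
    """Tell the caller how old the submission data are, in whole hours."""
    hours = int((now - extracted_at).total_seconds() // 3600)
    unit = "hour" if hours == 1 else "hours"
    stamp = extracted_at.strftime("%d %b %Y %H:%M")
    return f"Submission data as of {stamp} ({hours} {unit} ago)"


def natural_frequency(failed: int, remained: int) -> str:
    """Phrase an outcome as a count of students instead of a percentage."""
    if remained <= 0:
        raise ValueError("remained must be a positive count")
    return f"Of the {remained} students who remained enrolled, {failed} failed"


if __name__ == "__main__":
    # Hypothetical extraction and call times.
    print(freshness_note(datetime(2022, 3, 21, 6, 0), datetime(2022, 3, 21, 9, 30)))
    print(natural_frequency(failed=8, remained=10))
```

A caller who hears "But I submitted the assignment last night" can then check whether the summary predates that submission.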

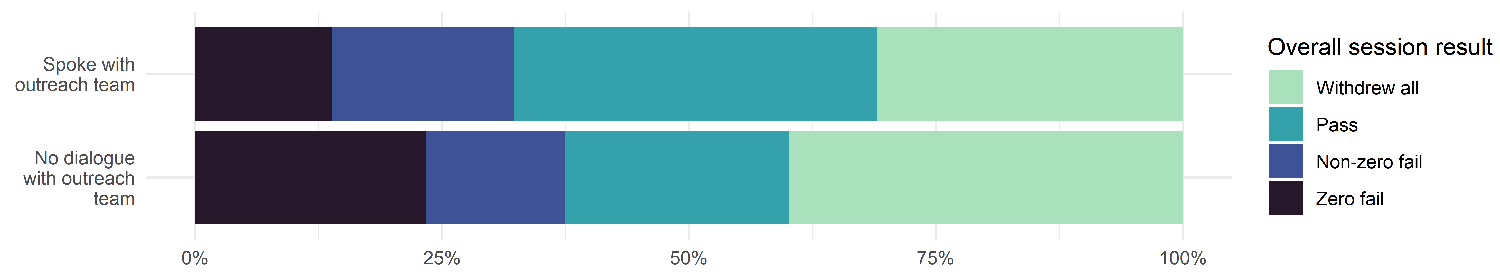

Anecdotal feedback on conversations supported by the data summary has been very positive. Additionally, students who have some dialogue with the outreach calling team have a much lower risk of earning a zero-fail grade in that session (see figure 3), although this is partly because highly disengaged students are less likely to answer a phone call from the university.

Embedded Tutor Draft Assessment Support

Students who are at risk of performing poorly in assessment tasks, or who are simply disengaged, are usually unaware of the support services available to them at university. In response, the Retention Team has adopted a targeted approach to identifying students at risk of failing a subject and connecting them with an embedded tutor. In semester 1 of 2022, embedded tutor draft assessment support was available in 22 first-year subjects, with approximately 50% of all commencing undergraduate students enrolled in at least one subject that had an embedded tutor.

The Retention Team works with Subject Coordinators to identify students who perform poorly in early assessment items or are showing signs of disengagement during the semester. In semester 1 of 2022, 512 students were identified as at risk and were contacted by the Student Outreach Team. Of these students, 77 were repeating the subject after failing in 2021, 72 had received an extension, and 363 had failed a previous assessment item in the subject.
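The three groups reported above sum exactly to the 512 students contacted, which suggests each student was counted in a single category. A quick check of the breakdown, using only the figures given in the text:

```python
IDENTIFIED = 512  # students identified as at risk in semester 1, 2022
groups = {
    "repeating after failing in 2021": 77,
    "received an extension": 72,
    "failed an earlier assessment item": 363,
}

# The categories account for every identified student.
assert sum(groups.values()) == IDENTIFIED

for label, count in groups.items():
    share = 100 * count / IDENTIFIED
    print(f"{label}: {count} ({share:.1f}%)")
```

Roughly seven in ten of the students contacted were flagged because of a failed earlier assessment item.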

The Retention Team worked closely with the Outreach Team, whose callers could book a student directly with a tutor or send a direct link to the online booking page by SMS and email. Students who followed the link were taken to a subject-specific page where they could book a one-on-one tutor session held online via Zoom. Tutors have subject-specific content knowledge and are generally recruited by the Subject Coordinator. Embedded tutors can suggest how students might improve their assessment to achieve a passing grade. This feedforward allowed students to improve their work before submitting it.

Following the contact, 136 students booked with a tutor, and all but 7 of them remained active in the LMS until the end of the session. This model has been shown to increase students' cumulative marks in a subject by 6 percentage points. Both programs have had a significant impact on student retention. Students identified as disengaged who spoke with a member of the Outreach Team had a retention rate (continuing into their second year) 6 percentage points higher than those who did not. In subjects where embedded tutors were available, students who attended a tutor session had a 13 percentage point higher retention rate than those who did not attend.
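The outcomes above mix counts, rates, and percentage-point differences. The sketch below derives the booking and activity rates from the reported counts and shows that a percentage-point gap is an absolute difference between two rates, not a relative increase. The two retention rates in the final example are hypothetical placeholders chosen only to produce a 13-point gap, since the article reports the differences but not the underlying rates.

```python
def rate(numerator: int, denominator: int) -> float:
    """Percentage of the denominator group."""
    return 100 * numerator / denominator


def percentage_point_gap(rate_a: float, rate_b: float) -> float:
    """Absolute difference between two rates, in percentage points."""
    return rate_a - rate_b


def relative_change(rate_a: float, rate_b: float) -> float:
    """Relative difference of rate_a over rate_b, in percent."""
    return 100 * (rate_a - rate_b) / rate_b


contacted, booked, inactive = 512, 136, 7  # counts reported in the text
print(f"Booked a tutor: {rate(booked, contacted):.1f}% of students contacted")
print(f"Active in the LMS to end of session: {rate(booked - inactive, booked):.1f}% of those who booked")

# Hypothetical retention rates, chosen only to illustrate a 13-point gap.
attended, did_not_attend = 83.0, 70.0
print(f"Gap: {percentage_point_gap(attended, did_not_attend):.0f} percentage points")
print(f"Relative increase: {relative_change(attended, did_not_attend):.1f}%")
```

The same 13-point gap would be a larger relative increase against a lower baseline, which is why the article's percentage-point framing is the more transparent one.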

What We Learned

Learning is not always clear skies and sunshine, and as educators we must watch for the storms that beset our students and help them weather those storms. A small but well-timed gesture such as a phone call or a personal tutoring session can mean the difference between success and failure and can also help a student feel supported.

- Learning analytics can be used to predict patterns of student engagement. However, analytics is most effective when it takes a human-centered approach that includes information provided by instructors to guide real conversations.

- Early identification of disengaged students and outreach support are effective in reducing the number of zero-fail grades. Students who have a successful dialogue with the Outreach Team are also more likely to pass a subject and continue into the second year of their studies.

- Offering additional support in the form of consultations with embedded tutors has helped "near miss" students get across the line. First year is where we have identified the greatest potential for impact, and students who meet with an embedded tutor are much more likely to continue into their second year of university.

- Communication is key for impact across the whole institution. Breaking down the silos between divisional and academic staff is essential for timely and meaningful interventions. Building relationships with a diverse range of stakeholders including instructors, university leadership, divisional staff, and data custodians was critical when creating this program.

Notes

- [1] Kelly Linden, Neil Van Der Ploeg, and Ben Hicks, "Ghostbusters: Using Learning Analytics and Early Assessment Design to Identify and Support Ghost Students," Back to the Future—ASCILITE '21: 38th International Conference on Innovation, Practice and Research in the Use of Educational Technologies in Tertiary Education, University of New England, Armidale, Australia, November 29–December 1, 2021: 54–59; Bret Stephenson, Beni Cakitaki, and Michael Luckman, "'Ghost Student' Failure among Equity Cohorts: Towards Understanding Non-Participating Enrolments," National Centre for Student Equity in Higher Education, March 2021.

- [2] Linden, Van Der Ploeg, and Hicks, "Ghostbusters."

- [3] Simon Buckingham Shum, Rebecca Ferguson, and Roberto Martinez-Maldonado, "Human-Centred Learning Analytics," Journal of Learning Analytics 6, no. 2 (2019): 1–9.

Kelly Linden is the Senior Retention Lead in the Division of Student Success at Charles Sturt University.

Ben Hicks is a Data Scientist for the Retention Team in the Division of Student Success at Charles Sturt University.

Sarah Teakel is Embedded Tutors Academic Coordinator at Charles Sturt University.

© 2022 Kelly Linden, Ben Hicks, and Sarah Teakel. The text of this work is licensed under a Creative Commons BY 4.0 International License.