
Virtual and augmented reality are poised to profoundly transform the STEM curriculum. In this article, we offer several inspiring examples and key insights on the future of immersive learning in the sciences. Immersive technologies will revolutionize learning through experiential simulations, the modeling and spatial representation of data, and the sense of presence that contextual gamification creates.

Understanding our place in the universe, building the next Mars rover, designing new transportation systems, fostering sustainable communities, modeling economic stability: solving these pressing and interconnected challenges brings us to STEM and STEAM in teaching and learning. The STEAM movement advocates incorporating the arts and humanities into the science, technology, engineering and math curriculum.

Projects in Higher Ed

We’ll highlight just a few of the groundbreaking implementations of immersive technologies in STEM on university campuses. Earlier this year, Stanford’s Virtual Human Interaction Lab released a free virtual reality simulation, The Stanford Ocean Acidification Experience, which transports students to a simulated ocean of the future.

The simulation aims to educate, spread awareness and inspire action on ocean acidification. Lab director Jeremy Bailenson and his team developed the experience in collaboration with marine biologists Fiorenza Micheli and Kristy Kroeker and with Roy Pea, a professor at the Stanford Graduate School of Education.

The experience is designed as a field trip to a location that few scientists will ever visit in person. Wearing an HTC Vive headset, students observe the effects of carbon dioxide on marine life and collect samples from the ocean floor. The simulation is one of many virtual experiences the Lab has developed in its mission to use VR in research and to “improve everyday life, such as conservation, empathy, and communications systems.”

At the University of Michigan–Ann Arbor, a variety of STEM projects are supported through MIDEN (Michigan Immersive Digital Experience Nexus), formerly known as the CAVE. The CAVE concept itself originated in groundbreaking work at the University of Illinois at Chicago.

CAVE environments create an immersive experience [http://um3d.dc.umich.edu/learning/m-i-d-e-n/#How_it_Works] by projecting stereo images onto the inner surfaces of a room-sized cube. Users wear special glasses, and an optical motion-tracking system follows the viewer’s position so that digital objects are rendered from the correct perspective as the viewer moves. Because the system is “see-through,” users can see their own hands, and actual physical objects can be brought into the space.

The College of Engineering uses MIDEN to experiment with 3D building models to better understand the structural stability of engineering projects. Students and faculty in other programs use it for architectural walkthroughs, virtual reconstructions of archeological sites, human ergonomics studies and training scenarios for dangerous situations.

Among a variety of projects at Texas A&M, students and faculty in the Immersive Mechanics Visualization Lab are bringing computational and experimental data into AR and VR environments. In virtual reality, 3D CAD models can be manipulated and refined directly, turning industrial design into a highly intuitive and collaborative process.

Beyond their work in the lab, Professor Darren Hartl and undergraduate student Michayal Mathew helped high school students prepare for F1 in Schools, an international STEM competition. They inserted the students’ 3D CAD designs into a virtual world, where aerodynamics experts could give feedback on the car models using Tilt Brush.

As Mathew pointed out, “To be honest, seeing things in 2-D on a whiteboard just doesn’t cut it, because almost all our important concepts are three-dimensional.” For Professor Hartl, these projects are an opportunity to assess whether his graduate students can sharpen their intuition about their results by interacting with data in virtual environments.

Immersive Software Platforms

If you can turn your data into a VR world, why not step into a VR science lab? This is what Labster and other software platforms are doing to bring immersive technology into the STEM classroom. Labster has developed a suite of advanced lab simulations that encourage open-ended investigation. In a gamified scenario, students can play a CSI-style forensic analyst who solves a crime.

More importantly, the simulations give students virtual access to expensive equipment, such as next-generation sequencing (NGS) machines and electron microscopes, that many institutions cannot afford. While Labster is a platform for learning today, tomorrow’s scientific research will likely take place in distributed virtual environments.

Students As Creators

While research centers will continue to build advanced immersive labs, advances in standalone VR headsets and in software platforms like Unity and Unreal Engine are democratizing immersive experiences. New VR-native applications like Tilt Brush will enable our students to become creators in virtual worlds. They will have the opportunity not only to observe unseen phenomena but also to prototype solutions to complex problems.

NYU’s Tandon School of Engineering made a splash with its Tandon Labs experience at the 2017 SXSW Gaming Expo. For the second year in a row, Tandon sent incoming students a cardboard 3D viewer in their acceptance packages. The viewer took them into a game-like environment that “. . . enables users to explore and engage with a microscopic, intracellular world.”

The game built on research by Alesha Castillo, an assistant professor in the Department of Mechanical and Aerospace Engineering, and was developed in collaboration with NYU’s Mobile Augmented Reality Lab and a graduate student from the Integrated Digital Media program. Because a female undergraduate narrates the experience, it also supports Tandon’s commitment to diversity. Projects like this inspire and encourage collaboration across disciplines.

Immersive Technologies and The Future of STEM

Scientists are also using immersive technologies to communicate difficult concepts to the broader public. Brian Greene, director of Columbia’s Center for Theoretical Physics, uses VR to explain string theory, which posits that the universe has more than three spatial dimensions. As part of the World Science Festival in New York, Greene taught a featured session on string theory to students from around the world wearing VR headsets. We can expect many more immersive global experiences like this to transform the classroom.

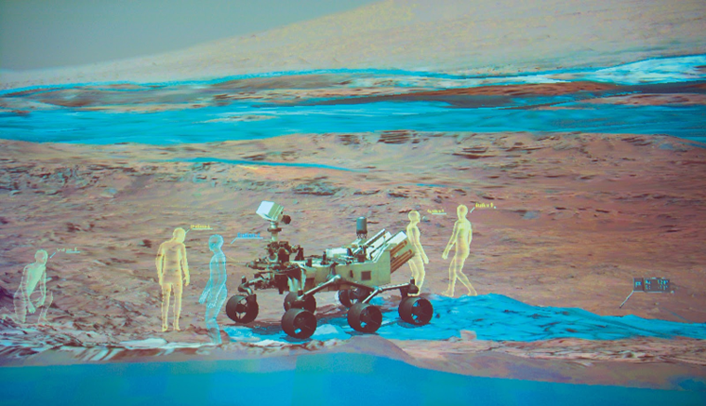

At NASA, scientists and engineers use Microsoft’s HoloLens mixed reality headset to collaborate on a true-to-scale visualization of the next Mars rover. This kind of embodied experience is unique to immersive technologies. They also use HoloLens to “teleport” to Mars and “walk on” the Martian surface to determine the optimal path for the current rover. While this is available today only to people at NASA’s Jet Propulsion Laboratory (JPL), in the future researchers and students at universities and libraries around the world will be able to take part in exploring the solar system.

All these projects are a window into the future of STEM education. Immersive technologies, along with AI, computer vision and machine learning, will transform engineering, help scientists visualize data in ways that advance research and exploration, and create new opportunities for collaboration between faculty and students and across disciplines.

Emory Craig is Director of eLearning & Instructional Technology at the College of New Rochelle and Co-Founder of Digital Bodies.

Maya Georgieva is Chief Innovation Officer at Digital Bodies – Immersive Learning.