TritonGPT, developed at UC San Diego, is a suite of AI-powered assistants designed to streamline administrative tasks, enhance productivity, and provide institution-specific insights by retrieving, summarizing, and generating content securely.

Colleges and universities today face a pressing challenge: unlocking the full potential of their vast institutional data. Even though higher education institutions maintain extensive information repositories, extracting meaningful insights from them often requires specialized tools and technical expertise. Bottlenecks arise when leaders must rely on report developers and analysts for answers and insights, slowing down critical decision-making. One might say that higher education has become data-rich but information-poor.

Now, imagine a system that allows employees and students to access data-driven answers simply by asking questions in natural language, thereby removing barriers and accelerating informed decisions.

This vision inspired UC San Diego to develop TritonGPT, a suite of artificial intelligence (AI) assistants that is revolutionizing how the campus community interacts with structured and unstructured institutional data. Supporting 38,000 employees and scaling to serve 40,000 students, TritonGPT democratizes data access through AI-driven answers and analytics.

TritonGPT: A Vertical AI Solution

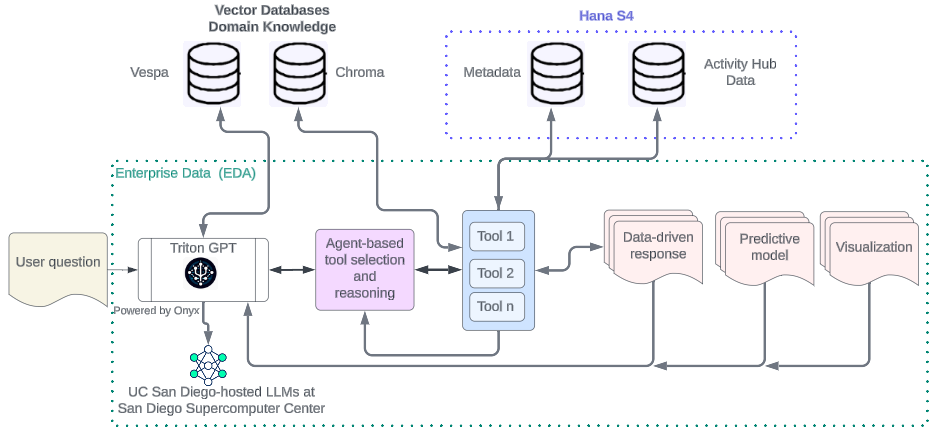

Powered by a low-cost orchestration platform and open-source frameworks, TritonGPT leverages state-of-the-art large language models (LLMs) as a vertical AI solution—meaning it's specifically designed to address institutional goals rather than function as a general-purpose AI tool. The orchestration platform acts as a front-end interface and middleware, connecting the LLMs to the university's data systems. This integration allows TritonGPT to access local datasets, policies, and procedures—and the enterprise data warehouse—all while maintaining the conversational ease of natural language processing (NLP).

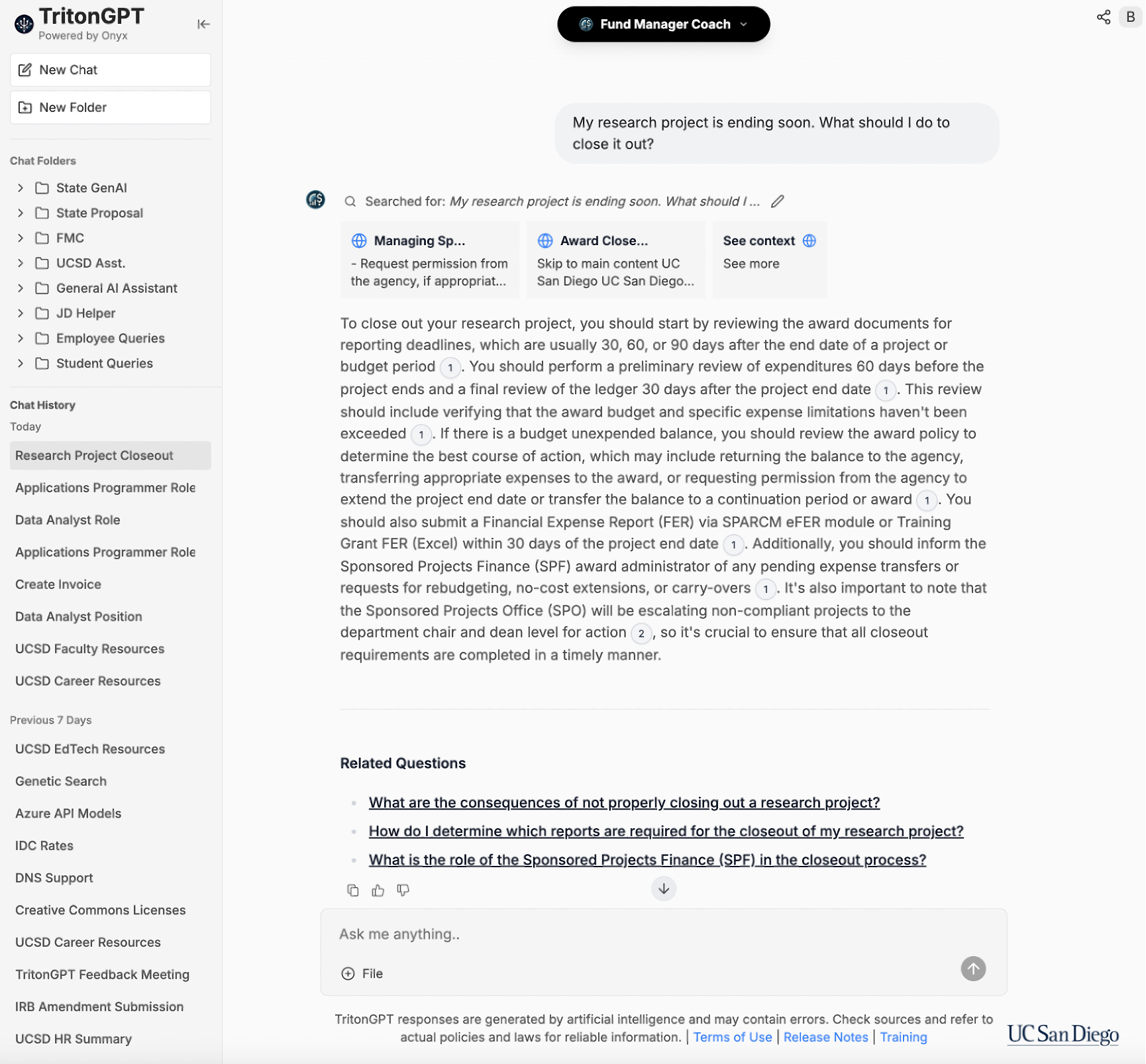

Hosted securely on premises at the San Diego Supercomputer Center, TritonGPT ensures complete data control, privacy, and compliance. It offers the flexibility of an interface like ChatGPT but is purpose-built to meet the unique needs of UC San Diego (see figure 1).

Specialized AI Assistants

TritonGPT currently offers six specialized assistants: the UC San Diego Assistant, Job Description Helper, Fund Manager Coach, Internet Search Assistant, Email Phishing Analyzer, and General AI Assistant. More assistants are in development.

These AI assistants simplify tasks such as navigating policies, generating reports, drafting job descriptions, and creating communication materials. Employees can quickly access professional development resources, review benefits information, and verify time-off policies. Students can explore financial aid options, enrollment processes, and campus life services. They can even evaluate how a change in major may affect their graduation timeline, all through natural language interactions. Each assistant includes references to source documents in its responses, making the information transparent and easy to verify.

The Enterprise Data Agent: Bridging Natural Language and Structured Data

Although many institutions now utilize techniques like retrieval-augmented generation (RAG) to extract information from unstructured sources such as documents and websites, accessing data within structured databases remains a significant challenge. To address this, UC San Diego developed the Enterprise Data Agent (EDA), an innovative solution that enables TritonGPT's AI assistants to retrieve and deliver analytics directly from the enterprise data warehouse.

This approach builds on the modern data architecture principles outlined in the EDUCAUSE Review article "21st-Century Analytics: New Technologies and New Rules." These principles informed the design of the UC San Diego enterprise data warehouse.[1]

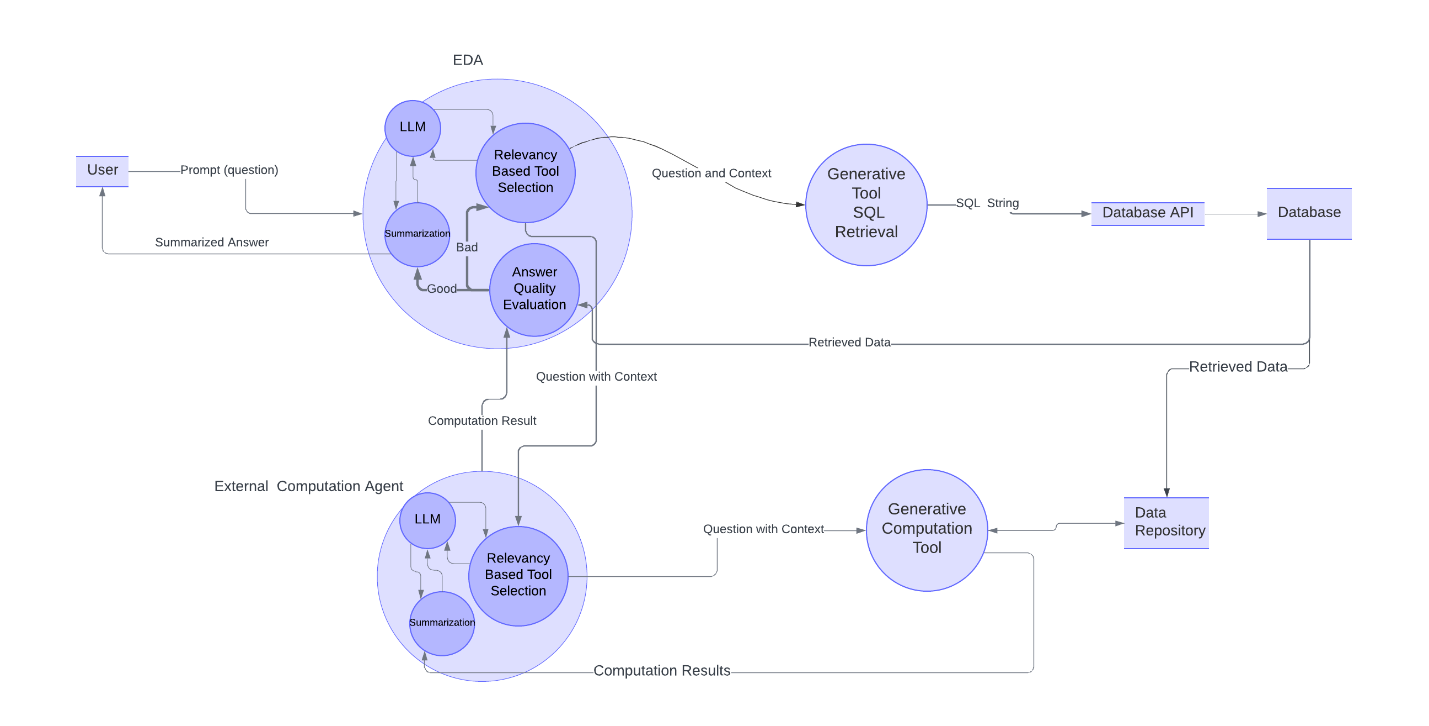

The EDA enables users to query complex datasets through natural language. For example, when a principal investigator or fund manager asks, "What's our research expenditure trend over the last three fiscal quarters, broken down by funding source?" the EDA launches a multistep process to generate precise, actionable results (see figure 2).

- Natural language processing: The agent breaks down the request by identifying key parameters: the time frame (last three fiscal quarters), the type of data (research spending), and the level of detail needed (breakdown by funding source). The EDA has already been trained on contextual information about UC San Diego's data structure, which improves the accuracy of the result.

- Query translation and optimization: The system uses an LLM to turn the user's question into a structured database query (SQL code). The AI relies on predefined metadata mappings that connect common business terms (such as "research spending") to the actual database fields. This helps ensure the results are accurate and delivered efficiently.

- Security validation: User permissions are checked against the university's security framework to ensure data governance and security compliance. Only authorized data is retrieved.

- Data retrieval and processing: The EDA securely connects to the enterprise data warehouse to retrieve the requested data and then processes it for output. When a request calls for more complex analysis, such as charts, visualizations, or trend calculations, the system generates these automatically from the user's prompt.

- Validation and error handling: Before returning results, the system checks that it has correctly interpreted the intent of the question. If a request is ambiguous, the system asks follow-up questions for clarification.
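The multistep process above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the EDA's implementation: a naive keyword parser stands in for the LLM, and the `expenditures` table, the `METADATA` mappings, the `PERMISSIONS` store, and the `answer` function are all hypothetical. What it shows is one plausible ordering of the steps: parse the request, ask for clarification when parameters are missing, map business terms to whitelisted columns, check permissions, and then run a parameterized query against an in-memory SQLite database.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical metadata mappings: business term -> "table.column" in the warehouse.
METADATA = {
    "research expenditure": "expenditures.amount",
    "research spending": "expenditures.amount",
    "funding source": "expenditures.funding_source",
    "fiscal quarter": "expenditures.fiscal_quarter",
}

# Hypothetical permission store: user -> tables that user may read.
PERMISSIONS = {"pi_alice": {"expenditures"}}

@dataclass
class ParsedRequest:
    measure: Optional[str]
    group_by: Optional[str]
    last_n_quarters: Optional[int]

def parse_request(question: str) -> ParsedRequest:
    """Stand-in for the LLM parsing step: naive keyword matching only."""
    q = question.lower()
    measure = next((t for t in ("research expenditure", "research spending") if t in q), None)
    group_by = "funding source" if "funding source" in q else None
    n = 3 if "last three fiscal quarters" in q else None
    return ParsedRequest(measure, group_by, n)

def build_sql(req: ParsedRequest) -> Tuple[str, str]:
    """Translate a parsed request into SQL built only from mapped (whitelisted) columns."""
    table, amount = METADATA[req.measure].split(".")
    quarter = METADATA["fiscal quarter"].split(".")[1]
    cols = [quarter] + ([METADATA[req.group_by].split(".")[1]] if req.group_by else [])
    col_list = ", ".join(cols)
    sql = (
        f"SELECT {col_list}, SUM({amount}) FROM {table} "
        f"WHERE {quarter} IN (SELECT DISTINCT {quarter} FROM {table} "
        f"ORDER BY {quarter} DESC LIMIT ?) "
        f"GROUP BY {col_list} ORDER BY {col_list}"
    )
    return sql, table

def answer(user: str, question: str, conn: sqlite3.Connection):
    req = parse_request(question)
    # Validation: ask a follow-up question instead of guessing missing parameters.
    if req.measure is None or req.last_n_quarters is None:
        return "Could you specify the measure and the time frame?"
    sql, table = build_sql(req)
    # Security validation: refuse tables the user is not authorized to read.
    if table not in PERMISSIONS.get(user, set()):
        raise PermissionError(f"{user} is not authorized to read {table}")
    # Retrieval: user-derived values are passed as bound parameters, never spliced into SQL.
    return conn.execute(sql, (req.last_n_quarters,)).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE expenditures (fiscal_quarter TEXT, funding_source TEXT, amount INTEGER)")
conn.executemany("INSERT INTO expenditures VALUES (?, ?, ?)", [
    ("2024-Q1", "NIH", 100), ("2024-Q2", "NIH", 120), ("2024-Q2", "NSF", 50),
    ("2024-Q3", "NIH", 130), ("2024-Q3", "NSF", 60), ("2024-Q4", "NIH", 150),
    ("2024-Q4", "NSF", 40), ("2024-Q4", "NSF", 10),
])
rows = answer("pi_alice", "What's our research expenditure trend over the last three "
              "fiscal quarters, broken down by funding source?", conn)
print(rows)  # per-quarter, per-source totals for 2024-Q2 through 2024-Q4
```

Building SQL only from mapped column names, and passing values as bound parameters, is one common way to keep generated queries within known fields; in a real system, LLM-driven parsing and query generation would sit on top of guardrails like these.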

TritonGPT EDA: A Multi-Agent Framework

Architectural Advantages

Integrating agents into AI systems like TritonGPT significantly enhances the capabilities of the system. Each agent acts as a coordinator that can call on various tools to perform specific tasks. For example, an agent might use a tool such as Wolfram Mathematica to complete advanced mathematical computations or retrieve specialized information from domain-specific databases. An agent can have multiple tools at its disposal, enabling it to handle a wide range of functions. This setup allows TritonGPT to tackle complex tasks—such as analyzing census data, running scientific models, and interpreting detailed information—by selecting and utilizing the right tool for each specific need (see figure 3).
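To make the coordinator pattern concrete, here is a minimal Python sketch of an agent that chooses among registered tools. The keyword-count routing is a hypothetical stand-in for an LLM's tool choice, and `average_tool` and `glossary_tool` are toy substitutes for a computation engine such as Mathematica and a domain-specific database; none of these names come from TritonGPT.

```python
import statistics
from typing import Callable, Dict, List, Tuple

ToolFn = Callable[[str], str]

class Agent:
    """Coordinator that routes each request to one registered tool (hypothetical design)."""

    def __init__(self) -> None:
        self.tools: Dict[str, Tuple[List[str], ToolFn]] = {}

    def register(self, name: str, keywords: List[str], fn: ToolFn) -> None:
        self.tools[name] = (keywords, fn)

    def select(self, request: str) -> str:
        # Stand-in for LLM tool selection: count keyword hits per tool.
        text = request.lower()
        scores = {name: sum(k in text for k in kws) for name, (kws, _) in self.tools.items()}
        best = max(scores, key=scores.get) if scores else None
        if best is None or scores[best] == 0:
            raise ValueError("no suitable tool; ask the user to clarify")
        return best

    def run(self, request: str, payload: str) -> Tuple[str, str]:
        name = self.select(request)
        return name, self.tools[name][1](payload)

def average_tool(payload: str) -> str:
    # Toy substitute for a computation engine such as Mathematica.
    values = [float(x) for x in payload.split(",")]
    return f"{statistics.mean(values):.2f}"

GLOSSARY = {"fte": "full-time equivalent"}  # toy substitute for a domain database

def glossary_tool(payload: str) -> str:
    return GLOSSARY.get(payload.strip().lower(), "term not found")

agent = Agent()
agent.register("calculator", ["average", "mean", "compute"], average_tool)
agent.register("glossary", ["define", "definition", "term"], glossary_tool)
print(agent.run("Compute the average of these quarterly totals", "120,130,150"))  # ('calculator', '133.33')
print(agent.run("Define this term", "FTE"))  # ('glossary', 'full-time equivalent')
```

Adding a capability then means registering another tool rather than changing the agent, which is the extensibility the paragraph above describes.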

Agents can also break down user requests into smaller parts and combine them with metadata elements to form "knowledge fragments." These fragments contain metadata, reports, expert answers, code examples, and large documents. This approach allows the system to do the following:

- Utilize multiple "knowledge fragments" to categorize user queries into highly specialized topics.

- Select and execute relevant tools, then leverage knowledge fragments to generate precise answers.

- Utilize LLMs to evaluate knowledge fragments based on predefined criteria.
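The three capabilities above can be illustrated with a small Python sketch. It is an assumption-laden toy, not TritonGPT's knowledge-fragment design: the `Fragment` fields, the topic vote in `categorize`, and the `score` function (a rule-based stand-in for LLM evaluation, using relevance and freshness as hypothetical "predefined criteria" with hypothetical weights) are all illustrative.

```python
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, List, Set

@dataclass(frozen=True)
class Fragment:
    kind: str                 # e.g. "metadata", "report", "expert_answer", "code_example"
    topic: str                # specialized topic the fragment belongs to
    keywords: FrozenSet[str]
    text: str
    year: int                 # hypothetical "last reviewed" year, used as a freshness criterion

def tokens(text: str) -> Set[str]:
    return {w.strip("?,.!").lower() for w in text.split()}

def categorize(query: str, fragments: List[Fragment]) -> str:
    """Pick a topic by letting every fragment whose keywords overlap the query vote."""
    words = tokens(query)
    votes = Counter()
    for f in fragments:
        votes[f.topic] += len(words & f.keywords)
    best = votes.most_common(1)
    return best[0][0] if best and best[0][1] > 0 else "general"

def score(f: Fragment, query: str, min_year: int = 2024) -> float:
    """Rule-based stand-in for LLM evaluation against predefined criteria."""
    relevance = len(tokens(query) & f.keywords) / max(len(f.keywords), 1)
    freshness = 1.0 if f.year >= min_year else 0.0
    return 0.7 * relevance + 0.3 * freshness  # hypothetical weights

def best_fragments(query: str, fragments: List[Fragment], k: int = 2) -> List[Fragment]:
    """Categorize the query, then rank that topic's fragments by score."""
    topic = categorize(query, fragments)
    pool = [f for f in fragments if f.topic == topic]
    return sorted(pool, key=lambda f: score(f, query), reverse=True)[:k]

FRAGMENTS = [
    Fragment("metadata", "finance", frozenset({"expenditure", "fund", "quarter"}),
             "Hypothetical mapping: fund expenditures -> expenditures.amount", 2025),
    Fragment("expert_answer", "finance", frozenset({"fund", "balance"}),
             "Hypothetical expert note on reading fund balances", 2023),
    Fragment("report", "hr", frozenset({"vacation", "leave", "accrual"}),
             "Hypothetical leave accrual report", 2025),
]
top = best_fragments("What is the fund balance this quarter?", FRAGMENTS)
print([f.kind for f in top])  # ['metadata', 'expert_answer']
```

The selected fragments would then be passed to the answering model as context; in the system the article describes, an LLM rather than a fixed formula performs the evaluation.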

This approach helps build comprehensive data structures (knowledge bundles) that connect information from multiple sources, resulting in a complete and up-to-date knowledge base. By incorporating user feedback, machine learning, and natural language processing, UC San Diego is developing a self-improving knowledge ecosystem that adapts to the changing needs of stakeholders.

Data Privacy and Compliance

TritonGPT prioritizes data security and compliance, securely managing institutional data classified up to protection level 3 (P3). It leverages the established security framework used by the university's analytics tools, ensuring consistent protection and controlled access for authorized users.
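A minimal Python sketch of this access rule follows. It assumes the four-level P1 to P4 classification scale to which P3 belongs, and it uses a simple role check as a stand-in for the university's existing analytics security framework. The `Dataset` records, role names, and `authorize` function are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Set

class ProtectionLevel(IntEnum):
    P1 = 1  # lowest sensitivity
    P2 = 2
    P3 = 3
    P4 = 4  # highest sensitivity

# Per the article, TritonGPT manages institutional data classified up to P3.
PLATFORM_CEILING = ProtectionLevel.P3

@dataclass(frozen=True)
class Dataset:
    name: str
    level: ProtectionLevel
    required_role: str  # hypothetical role granted by the existing security framework

def authorize(user_roles: Set[str], ds: Dataset) -> bool:
    """Allow access only if the data is within the platform ceiling AND the user holds the role."""
    if ds.level > PLATFORM_CEILING:
        return False  # never served through the assistant, whatever the user's roles
    return ds.required_role in user_roles

expenditures = Dataset("expenditures", ProtectionLevel.P3, "fund_manager")
restricted = Dataset("restricted_records", ProtectionLevel.P4, "fund_manager")

print(authorize({"fund_manager"}, expenditures))  # True
print(authorize({"student"}, expenditures))       # False
print(authorize({"fund_manager"}, restricted))    # False
```

Checking the platform ceiling before the user's roles means that no combination of role grants can expose data above P3 through the assistant.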

The Road Ahead

TritonGPT is poised for rapid expansion, with a major milestone on the horizon: launching the EDA with finance data. This initiative will enhance the capabilities of the Fund Manager Coach AI Assistant. With its scalable design, the EDA can be applied across all UC San Diego activity hubs, integrating student, financial, research, facilities, and employee data.

Tracking Adoption and Impact

TritonGPT adoption follows a Pareto distribution, with approximately 25 percent of users engaging daily and experiencing substantial time savings. By automating repetitive administrative tasks and enhancing output quality, power users have experienced significant benefits, including a 60 percent reduction in time spent drafting job descriptions and an 80 percent improvement in policy and document searches. Another solution in development leverages an AI-driven ruleset to generate initial redlines on contract agreements, with early tests showing a 70 percent reduction in non-disclosure agreement (NDA) modification time.

Addressing Limitations and Challenges

As TritonGPT evolves, acknowledging the limitations of LLMs is crucial, given that they can be prone to hallucinations and biases when confronted with complex or nuanced queries. To mitigate these risks, the system contains a robust validation framework in which multiple LLMs serve as reviewers, scrutinizing output for potential inaccuracies or biases and flagging responses that may not align with institutional values.
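The reviewer pattern can be sketched as a panel of independent checks whose concerns are then aggregated. In this hypothetical Python example, simple rule-based functions stand in for the reviewing LLMs, and the `quorum` parameter (how many concerns trigger a flag) is an assumption; the article does not say how TritonGPT combines reviewer verdicts.

```python
import re
from typing import Callable, List, Optional, Tuple

# A reviewer inspects an answer and its sources and returns a concern, or None.
Reviewer = Callable[[str, List[str]], Optional[str]]

def has_sources(answer: str, sources: List[str]) -> Optional[str]:
    return None if sources else "no source documents cited"

def figures_grounded(answer: str, sources: List[str]) -> Optional[str]:
    # Flag any number in the answer that appears in none of the sources.
    missing = [n for n in re.findall(r"\d+(?:\.\d+)?", answer)
               if not any(n in s for s in sources)]
    return f"unsupported figures: {missing}" if missing else None

def overconfident(answer: str, sources: List[str]) -> Optional[str]:
    if re.search(r"\b(always|guaranteed|never)\b", answer, re.IGNORECASE):
        return "overconfident wording"
    return None

def review(answer: str, sources: List[str], reviewers: List[Reviewer],
           quorum: int = 1) -> Tuple[bool, List[str]]:
    """Collect concerns from every reviewer; flag the answer if at least `quorum` object."""
    concerns = [c for c in (r(answer, sources) for r in reviewers) if c]
    return len(concerns) >= quorum, concerns

PANEL = [has_sources, figures_grounded, overconfident]
sources = ["Q4 total: 150"]
print(review("Research spending rose to 150 in Q4.", sources, PANEL))  # (False, [])
print(review("Spending is guaranteed to reach 200.", sources, PANEL))  # flagged, two concerns
```

Raising `quorum` trades sensitivity for fewer false alarms; with LLM reviewers, each function above would become a prompt to a separate model.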

To support AI adoption, UC San Diego is offering AI literacy training to address varying user comfort levels. The institution has prioritized workforce readiness, recognizing that successful AI deployment requires a balance of technological advancements and human preparedness.

Enhancing and Scaling the Platform

Several initiatives are underway to enhance the TritonGPT platform. UC San Diego is currently focused on expanding data integrations with additional sources. This expansion, combined with the introduction of personalization features, will enable tailored responses based on user roles, preferences, and interaction history.

Sharing our expertise and collaborating with like-minded higher education institutions has been a key pillar of our strategy. TritonGPT has been successfully deployed at UC Berkeley (BearGPT) and San Diego State University (SDSU GPT). The system's context-aware architecture ensures that responses meet the unique needs of each institution while maintaining the highest data security, privacy, and compliance standards.

The Future of Work and Technology

Integrating platforms such as TritonGPT with data warehouses raises some pivotal questions: Will AI-powered interfaces eventually supplant traditional reporting tools? How will traditional software-as-a-service (SaaS) models be impacted as agent-based conversational interfaces become more prevalent?

The answers to these questions are complex and multifaceted. However, they must be carefully considered as higher education navigates the future of work and technology and as AI continues to transform how people interact with data.

A shift is underway. As foundation LLMs continue to evolve and autonomous agents assume more responsibility and demonstrate greater reliability, the roles of the CIO, the CDO, and the entire IT organization are being redefined.

Note

- Vince Kellen, "21st-Century Analytics: New Technologies and New Rules," EDUCAUSE Review, May 20, 2019.

Brett Pollak is Executive Director, Workplace Technology & Infrastructure Services, at UC San Diego.

Jack Brzezinski is Senior AI Architect at UC San Diego.

Vince Kellen is Chief Information Officer at UC San Diego.

© 2025 Brett Pollak, Jack Brzezinski, and Vince Kellen.