Academic and technology teams at Barnard College developed an AI literacy framework to provide a conceptual foundation for AI education and programming efforts in higher education institutional contexts.

Barnard College is an undergraduate liberal arts college for women. It is both a distinct institution and a member of the broader Columbia University ecosystem in New York City. Several small but agile campus teams are working to advance conversations about generative artificial intelligence (AI) topics. As members of Instructional Media and Academic Technology Services (IMATS) and the Center for Engaged Pedagogy (CEP), we have developed educational programming on various AI topics for the Barnard community. Over the past year, we have held open lab sessions to test different text- and image-based AI tools, hosted guest speakers on copyright and fair use, facilitated generative AI syllabus statement workshops for faculty, conducted instructional workshops (GenAI 101), and led individualized education sessions for faculty departments. This process has been ongoing and iterative as the tools shift and campus community members' needs change. We have also put internal survey, evaluation, and feedback mechanisms in place to better understand faculty and staff needs related to the use of generative AI tools.

The Need for AI Literacy

Barnard College has established several internal working groups and task forces to discuss bigger questions about the impact of AI on our institution. Currently, there is no mandate or recommendation for faculty to embrace or ban AI in their classrooms. However, faculty are encouraged to define and discuss their expectations around the use of AI in their assignments. (The CEP has created many faculty resources, including decision trees to guide faculty planning, sample syllabus statements, assignments integrating generative AI, and other materials, to help guide decision-making about whether and how to incorporate generative AI in the classroom.) Higher levels of AI literacy can help faculty make informed decisions about the use of AI in their courses and assignments. On the academic technology services side, the IMATS team has decided not to implement or pursue AI surveillance technology to monitor academic integrity due to the bias and questionable reliability of these tools.[1] However, the landscape and corollary policies could change as generative AI technologies evolve.

A Framework for AI Literacy

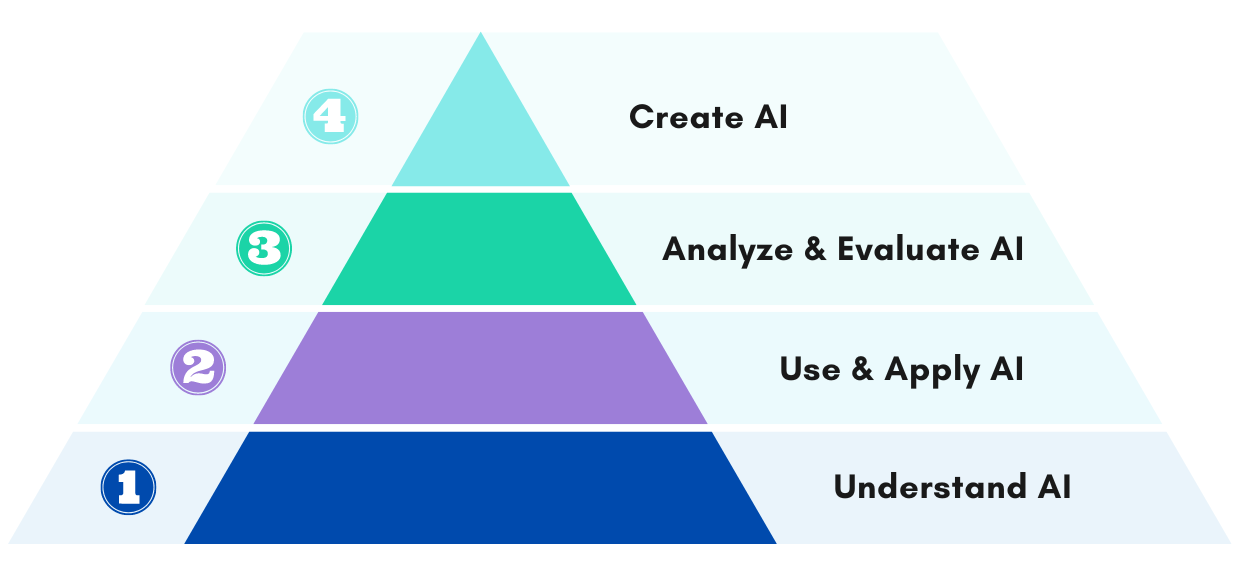

Members of IMATS and the CEP developed the following framework to guide the development and expansion of AI literacy among faculty, students, and staff at Barnard College. Our framework provides a structure for learning to use AI, including explanations of key AI concepts and questions to consider when using AI. The four-part pyramid structure was adapted from work done by researchers at the University of Hong Kong and the Hong Kong University of Science and Technology (see figure 1). (The Hong Kong researchers' work builds upon Bloom's Taxonomy.)[2] The framework is intended to meet people where they are and scaffold upon their current AI literacy level, whether they have little to no knowledge of AI or are prepared to build their own large language model (LLM). It breaks AI literacy into the following four levels:

- Understand AI

- Use and Apply AI

- Analyze and Evaluate AI

- Create AI

When applying this framework, it is important to keep in mind that AI is a broad field. There are many types of AI, both real and theoretical. While the concepts enumerated in this article focus primarily on generative AI, the overall structure of the framework can be applied to other forms of AI and technology literacies (e.g., cybersecurity). And, while the information in each level builds on the concepts discussed in the previous level, it is not necessary to learn everything at one level before moving on to the next. For instance, when analyzing how generative AI could impact the labor market, understanding how generative AI models are trained is helpful; however, it's not necessary to master the intricacies of neural networks to conduct such an analysis.

Level 1: Understand AI

The bottom of the pyramid covers basic AI terms and concepts. Most of the programming and instruction at Barnard has focused on levels one and two (understanding, using, and applying AI), as this is a rapidly evolving technology, and there is still a lot of unfamiliarity with it.

Core Competencies

- Be able to define the terms "artificial intelligence," "machine learning," "large language model," and "neural network"

- Recognize the benefits and limitations of AI tools

- Identify and explain the differences between various types of AI, as defined by their capabilities and computational mechanisms

Key Concepts

- Artificial intelligence, machine learning, artificial neural networks, large language models, and diffusion models

- Artificial narrow intelligence, artificial general intelligence, artificial superintelligence, reactive machines, limited memory AI, theory of mind AI, and self-aware AI

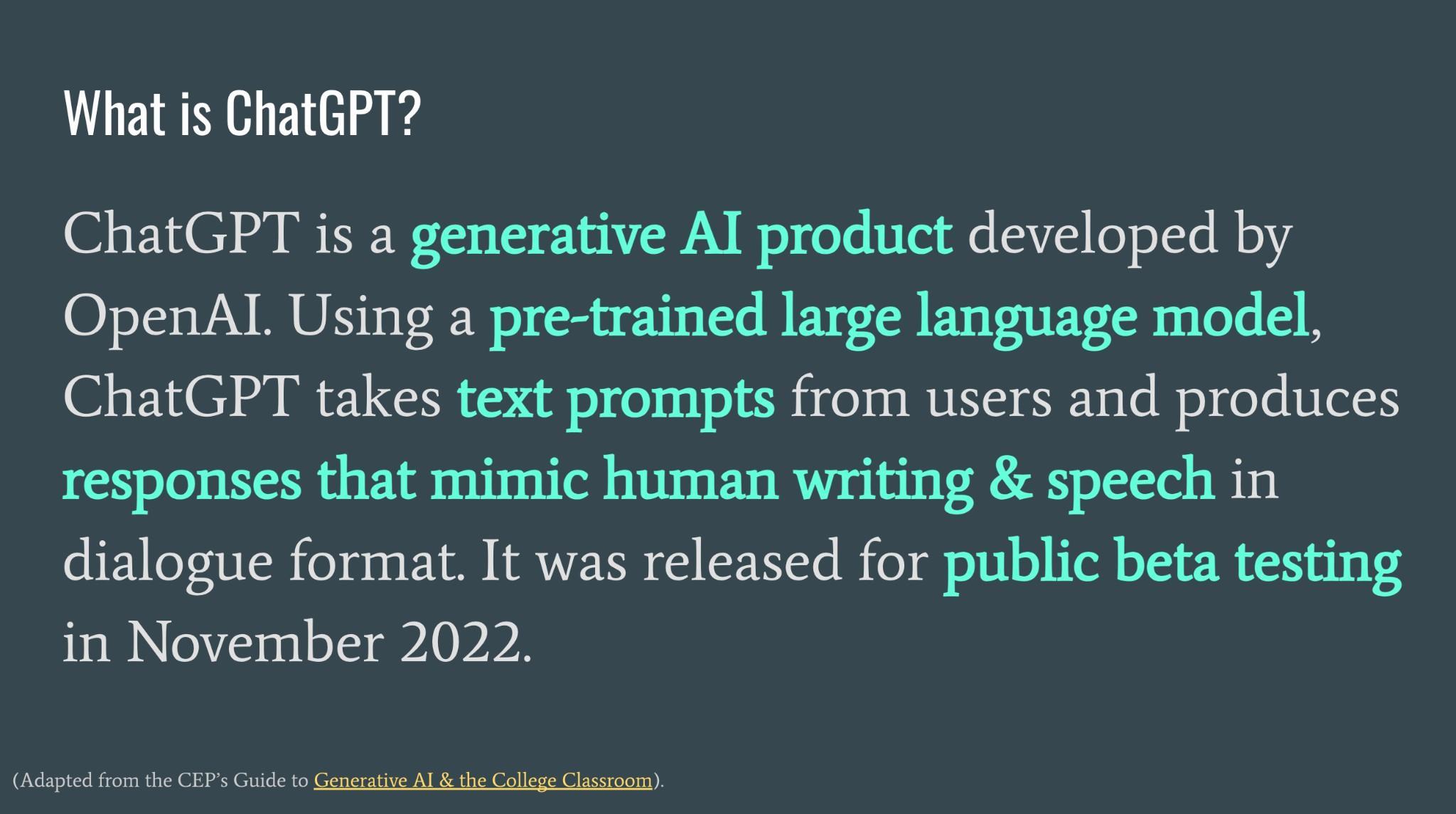

- AI tools, such as ChatGPT (see figure 2), Siri, Alexa, Deep Blue, and predictive text

- Technical frameworks related to AI (open-source models versus closed models, APIs and how they are used)

Reflection Questions

- What type of AI is this?

- What technologies does this AI tool utilize?

- What was this tool designed to do? What kind of information does it accept as input and return as a response (text, video, audio, etc.)?

- What might this tool be particularly useful for?

- What would it not be useful for?

Level 2: Use and Apply AI

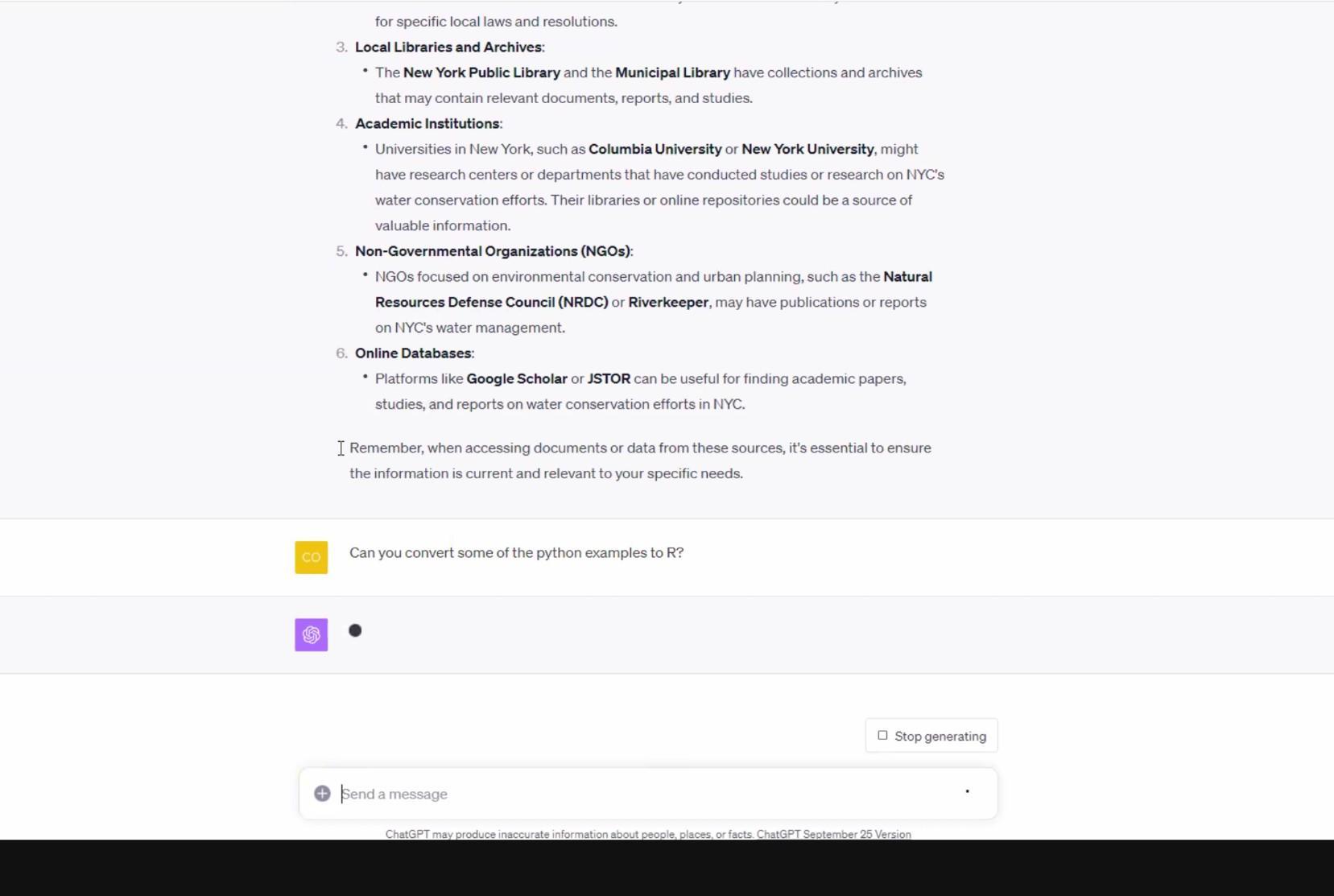

At the second level of AI fluency, users can use tools like ChatGPT to achieve their goals. They are familiar with prompt engineering techniques and know how to collaboratively refine, iterate, and edit with generative AI tools. Programming designed to build level two fluency at Barnard College includes hands-on labs and real-time collaborative prompt engineering (see figure 3).

Core Competencies

- Successfully utilize generative AI tools for desired responses

- Experiment with prompting techniques and iterate on prompt language to improve AI-generated output

- Review AI-generated content with an eye toward potential "hallucinations," incorrect reasoning, and bias

Key Concepts

- Prompt engineering, context windows, hallucinations, bias, zero-shot prompting, and few-shot prompting

- Prompting techniques for text-based generative AI, such as adding specificity, using context and details, and asking the model to consider pros and cons or evaluate alternative positions

- Privacy, confidentiality, and copyright considerations for information fed into prompting tools
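
The difference between zero-shot and few-shot prompting, and the idea of a context window, can be made concrete with a short sketch. The Python below is illustrative only and is not drawn from Barnard's workshops: the function names, the sample feedback sentences, and the word-count stand-in for tokens are all assumptions made for this example. Real models count tokens with their own tokenizers, so the size check is a rough proxy at best.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example (a "shot") shown to the model. Hypothetical helper type."""
    text: str
    label: str


def zero_shot_prompt(task: str, query: str) -> str:
    """Zero-shot: the instruction plus the new input, with no worked examples."""
    return f"{task}\n\nInput: {query}\nOutput:"


def few_shot_prompt(task: str, examples: list[Example], query: str) -> str:
    """Few-shot: the instruction, a few worked examples, then the new input."""
    shots = "\n\n".join(f"Input: {ex.text}\nOutput: {ex.label}" for ex in examples)
    return f"{task}\n\n{shots}\n\nInput: {query}\nOutput:"


def rough_token_count(prompt: str) -> int:
    """Assumption: whitespace-separated words stand in for tokens."""
    return len(prompt.split())


def fits_context_window(prompt: str, window_tokens: int) -> bool:
    """Check whether the prompt stays within an assumed context-window budget."""
    return rough_token_count(prompt) <= window_tokens


# Made-up sample data for illustration.
TASK = "Classify the tone of the course feedback as positive or negative."
EXAMPLES = [
    Example("The workshop was clear and well paced.", "positive"),
    Example("I left more confused than when I arrived.", "negative"),
]
QUERY = "The hands-on lab made prompting much less intimidating."

zero = zero_shot_prompt(TASK, QUERY)
few = few_shot_prompt(TASK, EXAMPLES, QUERY)
print(few)
```

Adding examples usually makes the desired output format clearer to the model, but every example also takes up room in the context window, which is one reason prompt length is worth watching.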

Reflection Questions

- Why did a prompt generate a particular response?

- How could the prompt be adjusted to get a different response?

- What strategies can be used to reduce bias and hallucinations?

- How can AI output be checked for bias and hallucinations?

Level 3: Analyze and Evaluate AI

Analyzing and evaluating AI involves a more complex meta-understanding of generative AI. At this level, users should be able to critically reflect on outcomes, biases, ethics, and other topics beyond the prompt window. An example of programming at this level is an event that featured an expert who discussed the current copyright and intellectual property questions surrounding AI and the environmental and climate impacts that generative AI might have (see figure 4). Of course, one can participate in conversations about these questions and ideas without knowing all of the AI definitions. However, familiarity with the preceding levels in the pyramid informs one's baseline understanding and vocabulary, helping the individual to understand how AI intersects with other fields.

Core Competencies

- Examine AI in a broader context, bringing in knowledge from one's discipline or interests

- Critique AI tools and offer arguments in support of or against their creation, use, and application

- Analyze ethical considerations in the development and deployment of AI

Key Concepts

- Critical perspectives on AI (The following examples are not intended to be all-encompassing.)

  - Environmental sustainability

  - Labor

  - Privacy

  - Copyright

  - Race, gender, class, and other biases

  - Misinformation

Reflection Questions

- What other perspectives or frameworks might be useful in assessing the implications of using generative AI tools?

- Where might biases in AI come from?

- In what ways does the use of generative AI tools align with or diverge from your personal values?

Level 4: Create AI

At this level of AI fluency, users are able to engage with AI at a creator level. For example, users can build on open APIs to create their own LLM or leverage AI to develop new systems (see figure 5). Currently, Barnard offers less programming at level four than at the other three levels, but there have been workshops at the Computational Science Center that provide technical instruction related to building AI and machine-learning models. Engaging people at all levels of AI fluency is important.

Core Competencies

- Synthesize learning to conceptualize or create new ideas, technologies, or structures that relate to AI. Reaching this level of literacy could include the following:

  - Conceive of novel uses for AI

  - Build software that leverages AI technology

  - Propose theories about AI
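
To give a sense of what building a machine-learning model means at its very smallest scale, the sketch below trains a single artificial neuron (a perceptron) to reproduce the logical AND function. It is an illustrative toy, not a description of the Computational Science Center workshops, and it is far removed from an LLM. The function names, the learning rate, and the epoch count are assumptions chosen for this example.

```python
def step(total: float) -> int:
    """Activation: fire (1) only if the weighted sum is positive."""
    return 1 if total > 0 else 0


def predict(weights: list[float], bias: float, inputs: tuple[int, int]) -> int:
    """Weighted sum of the inputs plus a bias, passed through the step function."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(total)


def train_perceptron(data, epochs: int = 20, lr: float = 1.0):
    """Perceptron learning rule: nudge weights in the direction that reduces error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Training data: every input pair for logical AND, with the expected output.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_DATA)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_DATA]
print(predictions)  # prints [0, 0, 0, 1]
```

The model is never told the rule for AND. It starts with zeroed weights and adjusts them each time its prediction is wrong, which is the same basic idea, at a vastly smaller scale, behind training larger neural networks.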

Reflection Questions

- What is uniquely human about your ideas, technologies, or structures? How might they differ from what an AI could create?

- What specific AI features lend unique affordances to ideas, technologies, or structures?

Conclusion and Next Steps

While this AI literacy framework is not exhaustive, it provides a conceptual foundation for AI education and programming efforts, particularly in higher education institutional contexts. The intention is to remain neutral about AI use, recognizing that greater literacy about a technology can lead to the decision not to use that technology. The impact of AI on higher education will likely be significant, affecting admissions, research, and curricula. Education and foundational literacy are the first steps for a community to engage productively with this rapidly changing technology.

There are many possible next steps Barnard College might take related to generative AI, but specific to the AI literacy framework, the IMATS and CEP teams may explore "moving up" the literacy pyramid in programming, resources, and events as awareness and basic literacy grow. Currently, the majority of our offerings are at levels one and two, but we hope to shift our programming focus to levels two and three. A recent survey revealed that a significant number of faculty and students still have never used generative AI and have negative perceptions of these tools, so our teams are also exploring ways to better facilitate practical and critical engagement.

Another goal of the AI literacy initiative is to underline the human aspect of these technologies. While using generative AI can almost feel like alchemy—spinning gold from plain text via black box technology—it is very much built upon human knowledge, which has its own biases and inequities. Using a critical lens when engaging with generative AI may help users identify existing biases and prevent users from exacerbating them.

Notes

1. Weixin Liang, Mert Yuksekgonul, Yining Mao, Eric Wu, and James Zou, "GPT Detectors Are Biased Against Non-native English Writers," Patterns 4, no. 7 (July 2023): 100779.

2. Davy Tsz Kit Ng, Jac Ka Lok Leung, Samuel Kai Wah Chu, and Maggie Shen Qiao, "Conceptualizing AI Literacy: An Exploratory Review," Computers and Education: Artificial Intelligence 2 (2021); Benjamin S. Bloom, Max D. Engelhart, Edward J. Furst, Walker H. Hill, and David R. Krathwohl, Taxonomy of Educational Objectives: The Classification of Educational Goals, Handbook I: Cognitive Domain (New York: David McKay, 1956).

Melanie Hibbert is Director of Instructional Media and Academic Technology Services and the Sloate Media Center at Barnard College, Columbia University.

Elana Altman is Senior Associate Director for UX and Academic Technologies at Barnard College, Columbia University.

Tristan Shippen is Senior Academic Technology Specialist, Barnard Library and Academic Information Services at Barnard College, Columbia University.

Melissa Wright is Executive Director, Center for Engaged Pedagogy, at Barnard College, Columbia University.

© 2024 Melanie Hibbert, Elana Altman, Tristan Shippen, and Melissa Wright. The content of this work is licensed under a Creative Commons BY-NC 4.0 International License.