Key Takeaways

- To take its existing data analysis efforts deeper, Chico State University is studying how student achievement is related to learning management system (LMS) use and student characteristics.

- The project is also assessing whether student achievement in courses that have been redesigned to include technology correlates with LMS use.

- Among the project's early findings are the importance of LMS data filtering, incongruences between LMS course hits and time spent on activities, and evidence that students from low-income families use the LMS more frequently (and longer) than students from higher-income families.

- If successful, this project will be scaled out across other courses at Chico State University, and it is designed for easy adoption by other California State University campuses through common student data definitions and shared interests in student success.

California State University, Chico (Chico State University) has been analyzing how students use its learning management system (LMS) since 2003 to demonstrate usage and growth in faculty adoption. Detailed LMS log files record every student interaction with the LMS and the time spent on each activity. Previously, these log files were aggregated at the college and institutional level in reports to campus administration. These reports answered questions about institution-wide usage, but didn't answer some major questions that we wanted to address, such as how to evaluate the effectiveness of professional development programs on student achievement and identify areas in courses for improvement.

We therefore launched a pilot project to address two key questions:

- What is the relationship between LMS usage and student academic achievement?

- How do student characteristics — such as gender, race/ethnicity, family income, and previous academic achievement — affect this relationship?

To find answers, our project joins LMS log file data with student characteristics and course performance data tables from the student information system and expands previous analysis with visualizations and inferential statistics. This allows us to move beyond understanding only "what" students are doing with the LMS and to begin understanding how it matters and for whom it makes a difference. This approach extends our existing efforts and provides data that is relevant to administrators, faculty, and instructional designers.

Right now, in Chico State University's Academy e-Learning program, faculty members team up with instructional designers to redesign their courses for increased student engagement, which includes the integration of technology applications. Our current program evaluation efforts assess the difference in average student performance that results from a redesigned course. However, these efforts do not tell us whether students with increased performance actually used any of the redesigned technology elements. We thus have a limited understanding of the redesign program's impact.

For example, one recently redesigned course showed a higher average grade on the final course project (that is, it showed improved learning outcomes), but it also showed a higher rate of drops, withdrawals, and failing grades. This course had the highest amount of LMS activity of any section that semester, with more than 250,000 hits to course activities by 373 students. Our previous data couldn't tell us what caused this dichotomy in results: Were students trying to use redesigned course materials and not learning the concepts? Or did they never access the materials, hiding in the anonymity of the online environment? Without knowing the actual student usage of the materials, it was difficult to identify the most effective way to improve the course.

We also understand from educational research that students' backgrounds, motivations, and college experience prior to taking a course have a large impact on whether they are likely to succeed and on how much their behavior within the LMS matters. This student data, although difficult to obtain due to technical factors and administrative processes, is crucial for accurately interpreting LMS activity.

Our new pilot project is therefore analyzing both LMS activity and student characteristics in this course and four others in the Academy e-Learning program to address four key questions:

- What types of LMS activities are students participating in during the course?

- How is that activity related to student grade achievement?

- Does the activity–grade relationship vary by student characteristics?

- Do at-risk students (as predicted by race/ethnicity and family income) have the same outcomes from using the LMS as students whose background predicts course success?

Numerous studies have demonstrated a relationship between the frequency of student LMS usage and academic performance.1 Such research has created predictive models that can give students, faculty, and advisors an early warning when students are likely to fail or receive a low course grade.2 These alerts are triggered by a low frequency of LMS usage relative to other students (such as fewer logins or fewer discussion messages read).

Our pilot project seeks to build on this research by grouping detailed LMS actions (such as discussion forum reading) into broader categories of LMS usage (such as administration or engagement) to uncover the deeper patterns of LMS use and determine how these patterns are affecting achievement.
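As a rough illustration of this grouping step, the sketch below maps detailed log actions onto broader usage categories and counts hits per category. The action names and the category assignments are hypothetical; only the idea of rolling detailed actions up into categories such as administration or engagement comes from the project description.

```python
from collections import Counter

# Hypothetical mapping from detailed LMS actions to broader usage categories.
# Both the action names and the category assignments are illustrative
# assumptions, not the project's actual data model.
ACTION_CATEGORY = {
    "discussion_read": "engagement",
    "discussion_post": "engagement",
    "content_view": "content",
    "grade_check": "administration",
    "calendar_view": "administration",
    "quiz_attempt": "assessment",
}


def categorize(actions):
    """Count log actions per broad category; unmapped actions go to 'other'."""
    return Counter(ACTION_CATEGORY.get(action, "other") for action in actions)


# Made-up sequence of logged actions for one student.
log_actions = ["content_view", "discussion_read", "grade_check",
               "content_view", "login_page"]
usage = categorize(log_actions)
print(dict(usage))
```

Keeping the mapping in a single table makes it easy to revisit category assignments as the analysis matures.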

Active participants in this project come from several campus departments, including:

- Academic Technologies

- Data Warehouse

- Institutional Research

- Enrollment Management

Serving a Diverse Population of Digital Natives

Chico State University is a midsized campus (14,640 full-time enrolled students in 2010–2011) in the California State University (CSU) system.3 The campus has a large service area that extends across 12 counties in the sparsely populated northern quadrant of California. In fall 2011, 32 percent of enrolled students were identified as "students of color." Serving these students is a key institutional priority as identified in the campus strategic plan.


To serve this diverse and remote population as well as the "digital native" undergraduate students who live in Chico, the university has made significant investments in academic technology infrastructure and faculty professional development. As part of this effort, the campus created the faculty development program Academy e-Learning, drawing on the work of Carol Twigg and the National Center for Academic Transformation.4 In this year-long program, a faculty team redesigns an existing course with the goal of improving student achievement and reducing costs, integrating technology as appropriate to meet these goals.

Expanding and Customizing Analysis

The reporting tools built into WebCT Vista, the LMS run at Chico State University prior to migration to Bb Learn, were insufficient to answer strategic and operational questions about usage of the LMS. Academic Technology Services (ATS) has run custom SQL queries of the WebCT Vista log files for several years to provide expanded reporting functionality beyond what is native to the LMS application. ATS has used Excel pivot tables to analyze these results and provide summary statistics of overall LMS usage to the campus CIO. However, these reports only gave aggregate statistics at the institution-wide level and did not provide insight into the relationship between usage and student achievement.

To conduct the research for this project, we likewise found off-the-shelf tools insufficient to ask the questions we wanted to answer, especially since some of the questions required data mining (e.g., which student characteristic has the strongest relationship with student success using the LMS?). Therefore, we expanded and customized this analysis using several general-purpose data warehousing and analysis applications. First, we imported the LMS log file data into an Oracle 11g data warehouse using a normalized star schema. The use of a data warehouse has several advantages over a flat file extract, led by the ability to join LMS usage tables with other database tables through a common field. We used Microsoft Reporting Services to extract the LMS data and join it with tables from the student enrollment system and course participation database using a unique student identifier. This produced one observation for every action within the LMS.
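The join described above can be sketched in a few lines. This is only an illustration of joining hit-level LMS rows to student records on a shared identifier; it is not the warehouse query used in the project, and the field names (`student_id`, `pell_eligible`, `final_grade`, `dwell_seconds`) and the sample rows are assumptions.

```python
# Hypothetical rows; field names are assumptions, not the warehouse schema.
lms_hits = [
    {"student_id": "s1", "action": "content_view", "dwell_seconds": 120},
    {"student_id": "s2", "action": "discussion_read", "dwell_seconds": 15},
    {"student_id": "s1", "action": "quiz_attempt", "dwell_seconds": 600},
    {"student_id": "s9", "action": "content_view", "dwell_seconds": 30},
]
students = [
    {"student_id": "s1", "pell_eligible": True, "final_grade": 3.3},
    {"student_id": "s2", "pell_eligible": False, "final_grade": 2.7},
]


def join_on_student(hits, student_rows):
    """Inner-join each LMS observation with its student record."""
    by_id = {row["student_id"]: row for row in student_rows}
    joined = []
    for hit in hits:
        student = by_id.get(hit["student_id"])
        if student is not None:  # drop hits with no matching student record
            joined.append({**hit, **student})
    return joined


observations = join_on_student(lms_hits, students)
for row in observations:
    print(row)
```

The result keeps one row per LMS action, now carrying the student attributes needed for later grouping.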

We conducted preliminary data analysis using Tableau data visualization software. Through visualizations, we examined the data for outliers, including minimum and maximum dwell times (that is, the amount of time a student "dwells" on a particular activity before moving on to the next activity). By using Tableau, we came to realize that dwell time was an important indicator to add to LMS activity hit count to decipher the educational relevance of LMS usage. Although dwell time is easily biased (e.g., students could be multitasking or have left their computers), the large amount of LMS activity permits filtering and use of aggregate measures to add to our understanding.
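One way to picture the dwell-time measure is as the gap between a student's consecutive hits, with implausibly long gaps filtered out. The sketch below assumes a 30-minute cutoff and made-up timestamps; the actual filtering thresholds used in the project are not stated here.

```python
from datetime import datetime

MAX_DWELL_SECONDS = 30 * 60  # assumed cutoff for "left the computer"


def dwell_times(timestamps, max_dwell=MAX_DWELL_SECONDS):
    """Return seconds between consecutive hits, dropping gaps above max_dwell.

    The last hit has no following activity, so it yields no dwell time.
    """
    ordered = sorted(timestamps)
    dwells = []
    for current, following in zip(ordered, ordered[1:]):
        seconds = (following - current).total_seconds()
        if seconds <= max_dwell:
            dwells.append(seconds)
    return dwells


# Made-up hit times for one student in one course.
hits = [
    datetime(2011, 9, 1, 10, 0, 0),
    datetime(2011, 9, 1, 10, 2, 30),
    datetime(2011, 9, 1, 10, 3, 0),
    datetime(2011, 9, 1, 14, 0, 0),  # hours later: gap is discarded
]
print(dwell_times(hits))  # [150.0, 30.0]
```

A cutoff like this cannot tell whether a student was multitasking, which is why the measure is best read in aggregate alongside hit counts.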

We are now beginning to use the Stata statistical analysis package to conduct more rigorous data analysis, including both final filtering and statistical testing. The statistical tests will include correlations, factor analysis, and multivariate regression.
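For readers unfamiliar with the first of these tests, the sketch below computes a Pearson correlation between LMS hit counts and final grades. It is written in Python as a stand-in for the Stata workflow, and the numbers are invented solely to show the calculation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Invented values, not project data: total hits and final grade per student.
hit_counts = [120, 340, 560, 610, 900]
final_grades = [1.7, 2.3, 3.0, 2.7, 3.7]
r = pearson_r(hit_counts, final_grades)
print(round(r, 3))
```

A correlation alone does not control for student characteristics, which is where multivariate regression comes in.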

Once we've completed this prototype, we'll produce a revised data model and enter it into the data warehouse to make this type of analysis more broadly available to faculty and administrators across the institution. The data model will also be shared with sister California State University campuses. By aggregating the LMS usage data, we hope that many of these procedures will be agnostic to the specific application used, and require only a moderate amount of customization.

Operational Challenges


The most surprising finding has been the absence of resistance in project approval. We have seen widespread endorsement, interest, and curiosity in how we are conducting this analysis and in the potential findings. This endorsement has come from various constituencies, including multiple areas of administration, institutional research, faculty, and student affairs. This might be due to the fact that, through the CSU Graduation Initiative and other efforts, data-driven inquiry is a part of the institutional culture in the CSU system.

That said, our project faced several operational challenges.

Security and Confidentiality

To join LMS usage data with detailed information about students, we had to carefully consider the ramifications in terms of the Family Educational Rights and Privacy Act (FERPA) and campus security policies. This was an interesting issue, as the research crossed functional areas of the institution and asked questions that had not previously been investigated. To ensure that campus policy was respected and the results could be disseminated, we requested permission from the registrar, who was (and is) continually updated on the project's progress. No individually identifiable information is included in the data files, and the target course has a large enrollment. Further, Institutional Review Board (IRB) applications were filed and approved to ensure that we could disseminate the research results.

Strategic and Technical Stakeholder Availability

Although there is strong commitment to this project in principle, finding time in the schedules of busy stakeholders was a challenge. A formal project plan was submitted and approved before any staff time could be dedicated. Necessary resources included the director of Academic Technology, the director of Institutional Research, and the lead Data Warehouse engineer, each of whom invested substantial time in defining meaningful queries and helping us understand the context in which we were conducting our analytics project. Given the multitude of priorities on a campus, finding the time to dedicate to a new, innovative project is a particular challenge.

Big Data and Data Silos

Even with a data warehouse in place, accessing student characteristics and course information required queries across multiple databases. To extract the appropriate data, we had to know the details of the plethora of tables, fields, and data sources, along with the update frequency. We used data filtering and consolidation techniques from statistical analysis procedures to consolidate the 250,000 records into a single meaningful record for each of the 373 students in the course. The director of Institutional Research offered us substantial support in overcoming these challenges.
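The consolidation step can be pictured as a group-by over hit-level rows. The sketch below collapses rows into one summary record per student; the field names and sample rows are assumptions, and the project's actual procedure may have produced additional summary variables.

```python
from collections import defaultdict


def summarize_by_student(hits):
    """Collapse hit-level rows into one summary record per student."""
    totals = defaultdict(lambda: {"hit_count": 0, "total_dwell_seconds": 0})
    for hit in hits:
        record = totals[hit["student_id"]]
        record["hit_count"] += 1
        record["total_dwell_seconds"] += hit["dwell_seconds"]
    return dict(totals)


# Made-up hit-level rows; field names are illustrative.
hit_rows = [
    {"student_id": "s1", "dwell_seconds": 120},
    {"student_id": "s2", "dwell_seconds": 15},
    {"student_id": "s1", "dwell_seconds": 600},
]
summary = summarize_by_student(hit_rows)
print(summary)
```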

Early Findings

We have made substantial progress in defining and collecting the project data, although the analysis phase (the fun part!) has just begun. So far, six key findings have emerged.

- The importance of LMS data filtering. In reviewing the data, we discovered that the native LMS data was extremely dirty and required extensive filtering to transform the "data exhaust" in the LMS log file into educationally relevant information. For example, a single action to read a compiled discussion thread resulted in a hit for each of the messages compiled, which could easily result in 30 hits for a single student action. Thus, without filtering, compiled discussion messages were the most frequent activity; after filtering, this action was the fifth most popular.

- A surprising lack of congruence between the number of hits and time spent on activities. In the filtering process, conducting visual analysis with dual plots of time and hits has produced interesting findings on how students spend their time using the LMS. For example, students spend relatively little time in discussion forums and more time on content. However, questions linger about how well the time recorded by the LMS matches the actual time students spent on these activities. Statistical analysis has found that the distribution of time spent (that is, "dwell time") is heavily skewed toward 0, suggesting that this variable is not a reliable measure on its own. Even so, it is helpful to add this data to hit count.

- Direct positive relationship between LMS usage and student final grade. The more activity and/or time students spent on the LMS, the higher their final grade. This was the result we had anticipated, but the results were more direct than we had anticipated. This finding suggests that to improve student performance, we need to find out why students did not access the course.

- Low-income students spend more time in the LMS. Students eligible for the Pell Grant (a proxy for low income) show more course hits and more time spent on each activity than students who are not eligible for a Pell Grant. Out of the top 20 students in terms of hit count to the course, 14 were Pell Grant–eligible (70 percent, compared to 44 percent in the overall population). Of these 14 students, 13 were students of color. All but one of these students received a grade of 3.0 or higher.

- Lower "efficiency" of time on task for low-income students. Within the same grade category, Pell Grant–eligible students spent more time than non-Pell Grant–eligible students. The difference in activity is concentrated in content-related areas. This difference could reflect many learning challenges for these students, including a lack of prior academic preparation, study skills, or experience using technology. We are pursuing this finding in further research.

- Missing data. Some student characteristics variables that we had hoped to examine (such as high school GPA and SAT/ACT scores) have high rates of missing data, and the missing values are correlated with student enrollment status (e.g., transfer students come in without a high school GPA). This prevents us from including such variables in statistical analysis and suggests that in future research we may cluster students by their enrollment status, with unique variables for each cluster.
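The filtering issue in the first finding above, where one compiled discussion read is logged as many hits, can be sketched as collapsing bursts of near-simultaneous hits into a single action. The two-second window, the action name, and the sample rows are assumptions for illustration; the project's actual filtering rules are not described in that detail.

```python
def collapse_compiled_reads(hits, action="discussion_read", window_seconds=2):
    """Keep one hit per burst of same-student `action` hits.

    A hit counts as part of a burst when it and the previous hit (for the
    same student) are both `action` and at most window_seconds apart.
    """
    collapsed = []
    last = None
    for hit in sorted(hits, key=lambda h: (h["student_id"], h["time"])):
        is_repeat = (
            last is not None
            and hit["action"] == action
            and last["action"] == action
            and hit["student_id"] == last["student_id"]
            and hit["time"] - last["time"] <= window_seconds
        )
        if not is_repeat:
            collapsed.append(hit)
        last = hit
    return collapsed


# Made-up log rows; "time" is seconds since the start of the session.
raw_hits = [
    {"student_id": "s1", "action": "discussion_read", "time": 0},
    {"student_id": "s1", "action": "discussion_read", "time": 1},
    {"student_id": "s1", "action": "discussion_read", "time": 2},
    {"student_id": "s1", "action": "content_view", "time": 60},
    {"student_id": "s1", "action": "discussion_read", "time": 300},
]
filtered = collapse_compiled_reads(raw_hits)
print(len(raw_hits), "->", len(filtered))  # 5 -> 3
```

After a filter like this, hit counts describe student actions rather than log rows, which is what changes the ranking of activities.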


Campus leadership has shown strong interest in this project and efforts to improve student graduation rates. Our evidence of impact will follow once we've completed the statistical analysis and can assess the relationship of LMS usage, student characteristics, and student academic performance. We anticipate that this project will provide an opportunity to repurpose data once used only for LMS baseline metrics. Through this project, this data will support institutional accountability, data-driven decision making, and outcomes-based educational programs.

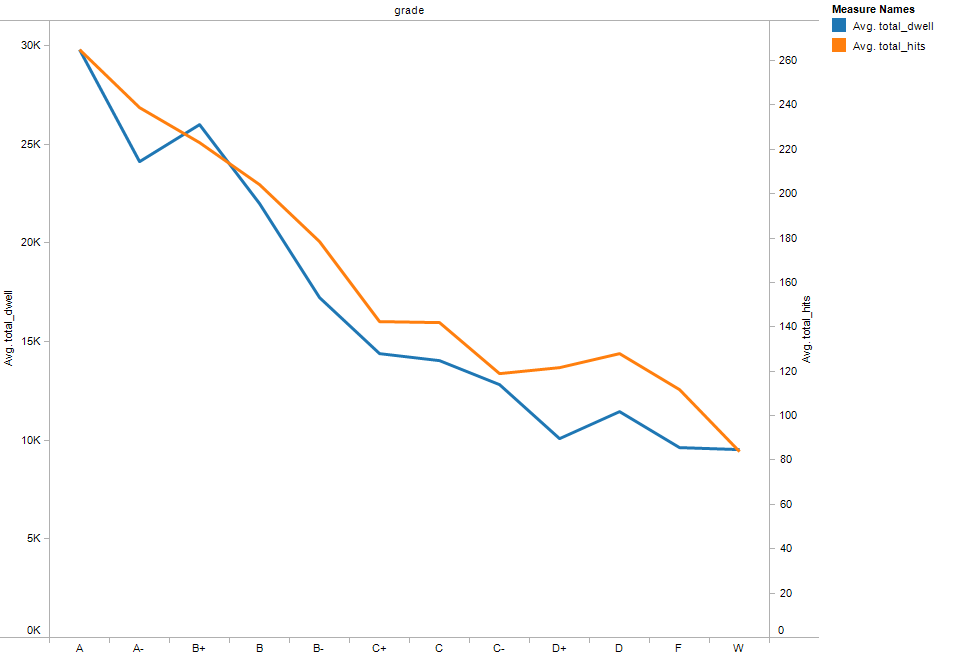

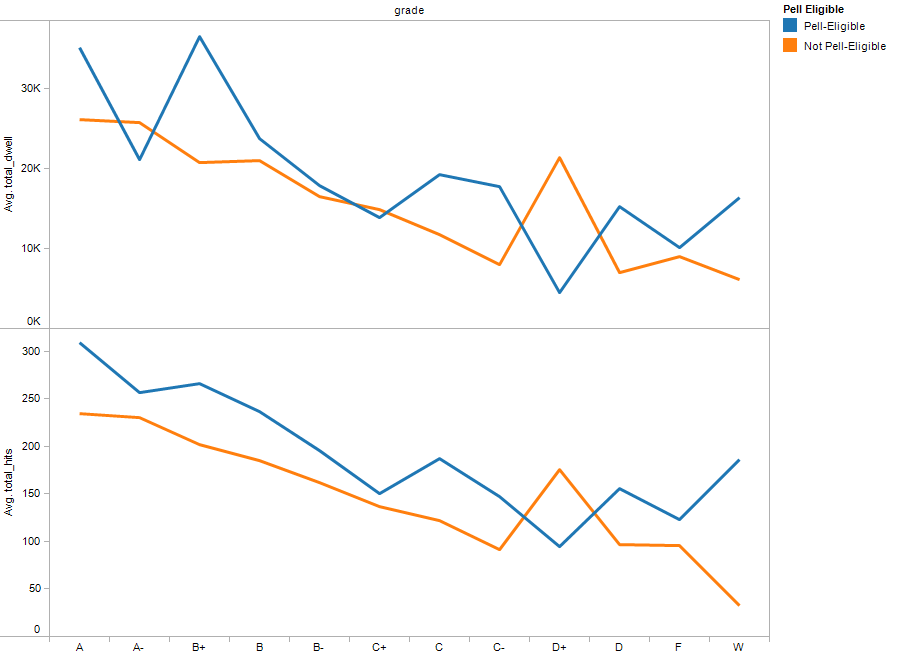

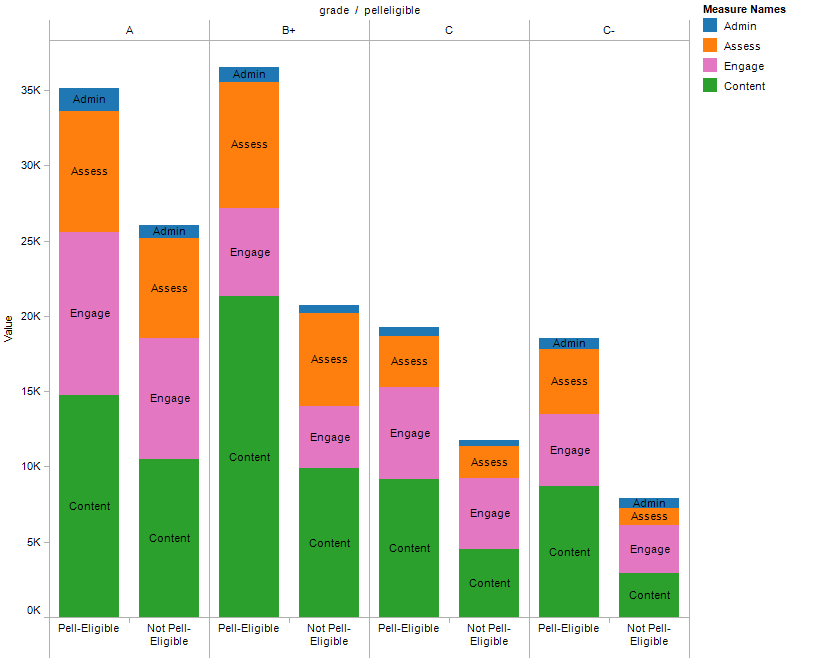

Following are charts from our preliminary analysis in the redesigned course. These results were produced in exploratory visualizations and might be revised in the final statistical analysis. Also, due to scaling differences among charts, some results might appear to vary between charts.

Figure 1 plots the student grade by LMS activity as measured by website hits and dwell time. The dwell time variable is the number of seconds that a student "dwelled" before starting the next LMS activity; it is a rough proxy for time on task. Dwell time is totaled across all of a student's hits over the length of the course.

Figure 1. Final course grade vs. dwell time and hit count

This chart supports our commonsense notion that LMS activity, time on task, and a student's final grade are positively correlated. Future statistical analysis will determine the magnitude of this relationship.

In figure 2, the average dwell time and average hits by final grade of students is shown according to their Pell Grant eligibility status. This status is a proxy for a student who comes from a low-income family (whether dependent or independent student).

Figure 2. Final course grade vs. dwell time and hit count according to Pell grant eligibility

Students who qualified for a Pell Grant usually spent more time and had more total hits per grade category than students who did not qualify for a Pell Grant (i.e., those from higher-income families). In some grade categories, such as A, B+, and C-, Pell-eligible students spent substantially more time.
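A comparison like the one in figure 2 amounts to averaging per-student totals within each combination of grade and Pell Grant eligibility. The sketch below shows that calculation on invented records; the field names are hypothetical and the numbers are not project results.

```python
from collections import defaultdict
from statistics import mean


def mean_dwell_by_group(student_rows):
    """Average total dwell time for each (grade, Pell eligibility) group."""
    groups = defaultdict(list)
    for row in student_rows:
        key = (row["grade"], row["pell_eligible"])
        groups[key].append(row["total_dwell_seconds"])
    return {key: mean(values) for key, values in groups.items()}


# Invented per-student summary records, for illustration only.
student_rows = [
    {"grade": "A", "pell_eligible": True, "total_dwell_seconds": 5000},
    {"grade": "A", "pell_eligible": False, "total_dwell_seconds": 4000},
    {"grade": "B+", "pell_eligible": True, "total_dwell_seconds": 4200},
    {"grade": "B+", "pell_eligible": True, "total_dwell_seconds": 3800},
    {"grade": "B+", "pell_eligible": False, "total_dwell_seconds": 3000},
]
averages = mean_dwell_by_group(student_rows)
for (grade, pell), value in sorted(averages.items()):
    print(grade, "Pell" if pell else "non-Pell", value)
```

Comparing within a grade category holds the outcome roughly constant, so any remaining gap reflects time spent rather than achievement.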

In figure 3, the differences in dwell time by activity type between Pell and non-Pell eligible students are plotted. For reasons of space, only the activities with the highest differences are shown.

Figure 3. Final course grade and LMS activities vs. dwell time by Pell Grant eligibility

These findings led us to wonder which activities Pell-eligible students are spending more time on, and whether we could reduce this extra time by, for example, redesigning course activities or offering a program to assist students with study skills.

As figure 3 shows, students with Pell Grant eligibility spent more time on content-related activities than their counterparts earning the same grades. This finding is persistent across the grade categories, and the difference is much larger than any other disparity we observed. In the case of the B+ students, for example, the ratio of time spent on content is almost two to one.

This finding raises more questions and suggests different potential actions to address the disparity. Perhaps these students need different study skills, or perhaps other background characteristics and academic experiences necessitate this extra study time. These findings can help us identify differences and target interventions to make the most difference for our students.

Our future research will include statistical analysis of these findings and the differences, including adding multiple additional student characteristics such as race, age, and current college GPA.

Understanding Effectiveness of LMS Activities

Faculty members and administrators can use our pilot project to assess the effectiveness of LMS activities. If students who frequently used a certain LMS activity had higher performance in one course, for example, faculty members could increase those activities in future courses. Alternately, activities with a small impact on student grades could be reviewed and potentially improved to make them more effective. In either case, our project could help improve understanding about the educational effectiveness of various pedagogical approaches to using the LMS.

This project could be replicated at any campus with access to LMS log file data and student characteristics data. The filtering experience has proven instructive and could be useful even at campuses without access to student characteristics data. As our experiences show, using this LMS data can improve understanding of LMS usage at both the course and institutional level.

- John Patrick Campbell, Utilizing Student Data within the Course Management System to Determine Undergraduate Student Academic Success: An Exploratory Study, doctoral dissertation, Educational Studies, Purdue University, 2007; Leah P. Macfadyen and Shane Dawson, "Mining LMS Data to Develop an 'Early Warning System' for Educators: A Proof of Concept," Computers & Education, 54 (2) (February 2010): 588–599; see page 12; Libby V. Morris, Catherine Finnegan, and Sz-Shyan Wu, "Tracking Student Behavior, Persistence, and Achievement in Online Courses," The Internet and Higher Education, 8 (3) (2005): 221–231; and Sheizaf Rafaeli and Gilad Ravid, "OnLine, Web Based Learning Environment for an Information Systems Course: Access Logs, Linearity and Performance," presented at Information Systems Education Conference (ISECON '97), Information Systems Journal (2000): 1–14.

- Kimberly E. Arnold, "Signals: Applying Academic Analytics," EDUCAUSE Quarterly, 33 (1) (2010); and John Fritz, "Classroom Walls that Talk: Using Online Course Activity Data of Successful Students to Raise Self-Awareness of Underperforming Peers," The Internet and Higher Education, 14 (2) (2010): 89–97.

- California State University Analytic Studies, College Year Report 2010–2011, "Table 3: Total Full-Time Equivalent Students (FTES) by Term, 2010–11 College Year."

- Carol Twigg, "Improving Learning and Reducing Costs: New Models for Online Learning," EDUCAUSE Review, 38 (5) (September/October 2003): 28–38.

© 2012 John Whitmer, Kathy Fernandes, and William R. Allen. The text of this EDUCAUSE Review Online article (July 2012) is licensed under the Creative Commons Attribution-ShareAlike 3.0 license.