Having a systematic review process in place can help instructional designers and educational technologists as they consider proposals for the adoption of new teaching and learning tools.

At various times, instructional designers and educational technologists (IDs/ETs) are put in the position of recommending teaching and learning technologies. While large systems (e.g., an LMS) are assigned a project manager and a team, smaller applications (e.g., assessment development systems, wikis, and most apps) are left to be managed by the IDs/ETs. Surprisingly, although review requests for smaller technology applications are received fairly often, higher education institutions often have no systematic process in place to determine how to conduct the review. As a result, staff members sometimes end up revisiting the same issue multiple times, starting from scratch each time because they have no historical documentation. At one of my institutions, for example, a team investigated the use of Top Hat at least three times, and another had numerous discussions about using Confluence versus our LMS's built-in wiki. To complicate matters, instructors who ask IDs/ETs for help in identifying a technology solution often expect the IDs/ETs to support the product even if it is not adopted as an institutional solution.

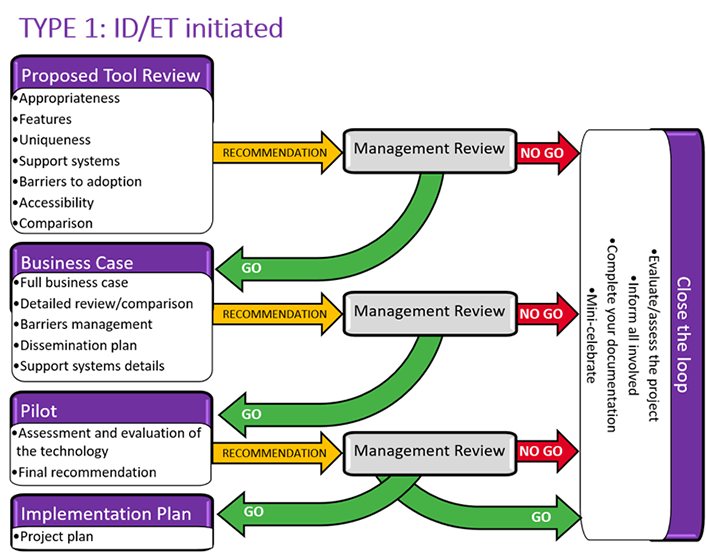

A systematic procedure for reviewing teaching and learning tools can help IDs/ETs support faculty more quickly and more effectively. Most of these technology reviews will fit into one of three types: (1) ID/ET initiated; (2) specific tool request; (3) solution request. Any final recommendations for a new technology or a change in technology should include the following: a proposed tool review, a business case, a pilot, an implementation plan, and "close the loop." These steps are basically the same for all three types of reviews. However, different actions may be required based on the type of request. For example, when investigating whether to start supporting a new tool (Type 2), the review team will want to consider not only the proposed tool but also how it compares with tools currently being supported. And when looking for a solution to an instructor's problem (Type 3), the review team should conduct an in-depth interview with the instructor (and possibly others) to determine the exact needs, wants, and constraints. For that reason, the full review process is discussed below for Type 1 requests, with the additional steps for Types 2 and 3 noted in the following sections.

Type 1: ID/ET Initiated

As an instructional designer or educational technologist, you may at times find information about a tool that you think is worth considering. This may be a tool that solves an outstanding problem, appears to offer more/better features than a currently supported tool, or provides a new ability for teaching and learning. Sometimes you may also learn about a specific category of technology. This could be based on reading various sources such as EDUCAUSE publications, Gartner research, and PCWorld, all of which provide periodic reviews of trends, comparisons of products, and other research results. Members of groups such as college and university consortiums and EDUCAUSE community groups also often share information on technologies they are using or have reviewed. Keeping an eye on these newly available technologies helps you support faculty as they run into technology problems or have questions on how to handle pedagogical issues.

Proposed Tool Review

By their very nature, Type 1 reviews involve a fairly limited number of technology options: the tools are new approaches or provide a solution previously unavailable. The first step, therefore, is to identify the goals for adding a technology to your "catalog" of supported tools. To ensure that you are meeting a real need (either current or emerging), try to be clear about the goals and objectives of adopting the tool. Start by asking a few instructors and/or your teaching and learning center (T&LC) staff to brainstorm what goals they could meet using this technology.

You do not need to complete a full investigation at this point, just a high-level determination to make sure the concept of the technology will actually be useful. You may want to refer to Lauren Anstey and Gavan Watson's evaluation rubric for topics to consider.1

Appropriateness

Is the new technology appropriate for your institution? Key questions:

- What will it solve/improve?

- What are the pedagogical values and implications?

- Does this provide additional capabilities?2

Features

Considering the intended audience (i.e., students and/or instructors), you should determine what features will be needed (e.g., ease of use, interaction with other technologies). Key question: Based on the identified goals, what features are needed?

Uniqueness

If your team supports a limited set of technology tools, adding every new type of technology would be counterproductive. Also, although a new technology may offer somewhat better features than a currently supported technology, don't be too eager to make a change. Key questions:

- What about this technology makes it different from others? (This answer does not need to be in-depth at this point, just a sentence or two.)

- How important is this technology pedagogically?

Support Systems

Many technologies require supports including installation, security, user guides (for both students and instructors), troubleshooting, and/or "hand-holding" for users who need extra help. In addition, a centrally supported technology will need to be "marketed"—via newsletters, websites, and perhaps department meetings. Key questions:

- What group of people and approximately how many people could use this technology?

- How complex will the support need to be? (The answer will be a guesstimate based on whether you will need an additional full-time equivalent person to support the tool and whether it will require support from other teams such as security.)

Barriers to Adoption

The Technology Acceptance Model (TAM) and other models offer details on the rate of adoption of new technologies.3 Key questions:

- What will limit an instructor and students from accessing this technology?

- How reliable is the technology?

- How complex is the technology?

- How difficult will it be for an instructor and students to use this technology?

- How difficult will it be for an instructor and students to improve teaching and learning with this technology?

Accessibility

Many sites have information about tool accessibility requirements. If you are not aware of your institution's standards for tool procurement, contact your accessibility office or refer to NASCIO or the National Federation of the Blind, which offer other institutions' guidelines.4 At this point, a Voluntary Product Accessibility Template (VPAT) may be sufficient, since you will conduct a more thorough review later. But do not assume that any VPAT is thorough and correct. Sometimes a vendor is overly optimistic about the abilities of a tool. Key questions:

- How accessible is the tool for instructors?

- How accessible is the tool for students?

- How accessible are the results of using the tool? (For example, does the student or instructor produce something that is accessible?)

Comparison

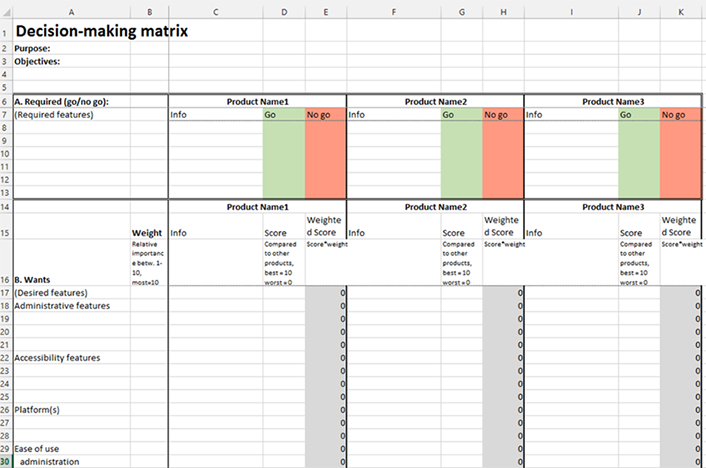

For new technologies where only one tool is available, a simple overview can be appropriate. However, for reviews where several tools meet the same goals, a decision-making matrix may be appropriate (see figures 1 and 2).5 At this point, keep this matrix high-level, since the goal is to determine if this category of technology would be beneficial to your institution. Key questions:

- If there are multiple tools providing the same solution, what are the very basic differences, or are there no discernible differences at first glance? (For example, one may offer a per-user fee, and another may offer an enterprise fee.) If this is a new version of a supported tool, what are the general advantages and disadvantages?

- How is this technology different from the others you currently support?
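A high-level decision-making matrix of this kind can be sketched as a weighted score per tool. The following is a minimal illustration, not the method used in the figures: the criteria, weights, tool names, and 1-5 ratings are all hypothetical placeholders that a review team would replace with its own.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: int  # relative importance, e.g. 1 (nice to have) to 5 (essential)


def weighted_totals(criteria, ratings):
    """Return {tool: weighted total}, where ratings[tool][criterion] is a 1-5 score."""
    totals = {}
    for tool, scores in ratings.items():
        totals[tool] = sum(c.weight * scores[c.name] for c in criteria)
    return totals


# Hypothetical criteria and ratings, for illustration only.
criteria = [
    Criterion("Ease of use", 5),
    Criterion("Accessibility", 5),
    Criterion("LMS integration", 3),
    Criterion("Cost", 2),
]
ratings = {
    "Tool A": {"Ease of use": 4, "Accessibility": 3, "LMS integration": 5, "Cost": 2},
    "Tool B": {"Ease of use": 3, "Accessibility": 5, "LMS integration": 3, "Cost": 4},
}

totals = weighted_totals(criteria, ratings)
# Print tools from highest to lowest weighted total.
for tool, total in sorted(totals.items(), key=lambda item: item[1], reverse=True):
    print(f"{tool}: {total}")
```

At this stage a handful of criteria is probably enough; the same structure can be extended with more rows when the full business case calls for a detailed comparison.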

Recommendation

Finally, you need to document all of this as a proposed tool review and create a project plan for how you would build a business case (not how you would implement the technology tool but how you would conduct further research to fully determine a recommendation). In some cases, at this point you may realize that continued research would not be valuable. If so, you can skip directly to the "Close the Loop" step. If you decide to conduct further research, this recommendation (indicated in the chart in yellow) is sent to your management review team.

Management Review: Business Case

I propose frequent management reviews. Each step in the review process requires time. The management reviews will ensure that the technology tool is worth the time and any other costs. Depending on your institution, you may need to get approval from a governance board or your boss—or you may not need any approval. In some institutions, a consensus from a small team of co-workers is required. Ideally, each Management Review step would involve the same group, with the final go/no-go perhaps decided one level above the person or team completing the other review steps.

The first management review—the business case—is a high-level decision on whether the technology review should progress, based on the proposal and on knowledge of the campus culture and needs.

Full Business Case

The business case is the result of following the project plan laid out in the proposal. The research you do to complete the business case will be detailed and time-consuming. The purpose of the business case is to lay out the goals and then analyze the technology to determine how closely the goals can be met. A business case should look at both the tool features and the organizational side.6 Googling "business case" will produce a variety of outlines.

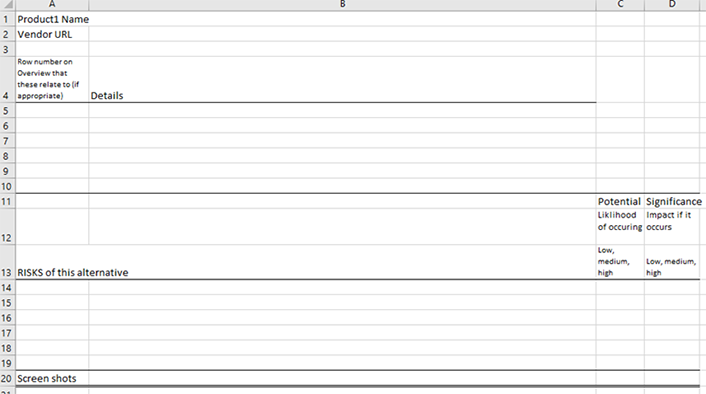

Detailed Review/Comparison

For new technologies, you may find that only one tool is currently available. To identify similar tools, you may need to conduct deeper research. If several tools meet the same goals, you may want to check ERIC, OLC, and additional databases to see if others have completed research on the tools. A more detailed decision-making matrix might also be appropriate, to compare both the tool features and the impact on the institution (e.g., predicted number of adopters, financial and human resource costs).

Barriers Management

In addition to the barriers outlined by TAM and similar models, you'll need to consider administrative and environmental barriers.7 For each barrier, identify the significance of the impact and the likelihood that it will occur. You may want to complete a detailed risk-management plan. Identify how you will eliminate, minimize, or mitigate any risk that is highly likely and has high impact.
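One way to keep track of impact and likelihood is a simple risk register. The sketch below assumes a three-point scale (low, medium, high) and a likelihood-times-impact score for ranking; both the scale and the example barriers are assumptions made for illustration, not part of any particular risk-management standard.

```python
from dataclasses import dataclass

# Assumed three-point scale; adjust to whatever scale your team uses.
LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Barrier:
    description: str
    likelihood: str  # "low", "medium", or "high"
    impact: str      # "low", "medium", or "high"

    @property
    def score(self) -> int:
        """Likelihood multiplied by impact, used only for ranking."""
        return LEVELS[self.likelihood] * LEVELS[self.impact]


def needs_plan(barrier: Barrier) -> bool:
    """Flag barriers that are both highly likely and high impact."""
    return barrier.likelihood == "high" and barrier.impact == "high"


# Hypothetical barriers, for illustration only.
barriers = [
    Barrier("Vendor changes its pricing model", "low", "medium"),
    Barrier("Instructors lack time to learn the tool", "high", "high"),
    Barrier("Single sign-on integration is delayed", "medium", "high"),
]

ranked = sorted(barriers, key=lambda b: b.score, reverse=True)
for b in ranked:
    flag = "PLAN NEEDED" if needs_plan(b) else "monitor"
    print(f"{b.score}  {flag:<11}  {b.description}")
```

Each barrier flagged as needing a plan would then get its own entry describing how the team intends to eliminate, minimize, or mitigate it.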

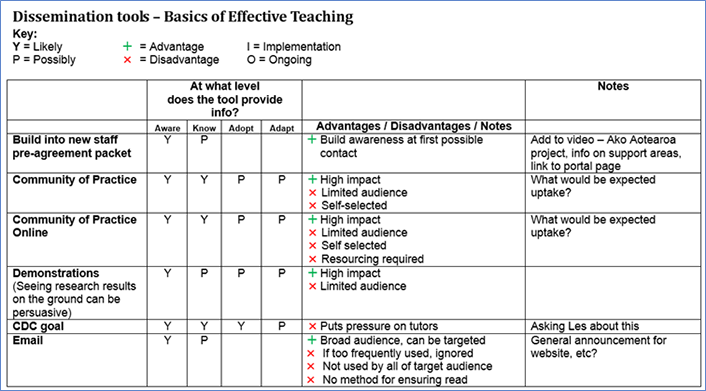

Dissemination Plan

An issue somewhat unique to higher education is that the target audience for a technology may refuse to adopt it. Instructors are notoriously difficult to reach through standard communications and usually are not required to use a new technology. Therefore, a dissemination plan is needed to ensure that you get the word out to the appropriate groups at the appropriate times. If done well, a dissemination plan can be used for multiple new and upgraded technologies.

I use the term dissemination instead of communication because to me, the former term has broader connotations. According to Everett M. Rogers, you can build awareness through mass media, but getting people to adopt an innovation generally requires a different level of messaging. In addition, various instructors need various messages, and as time passes, more detailed messages might be appropriate.8

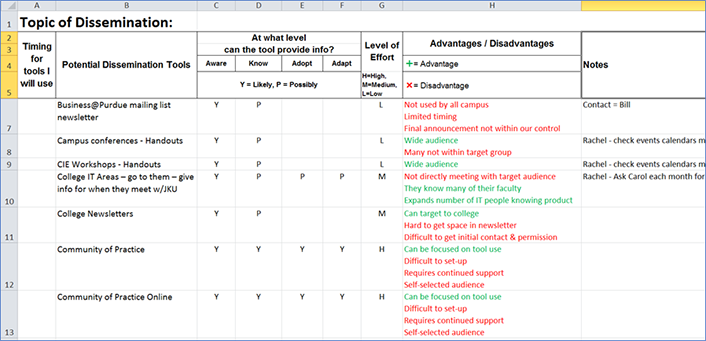

A good first step is to hold a team brainstorming meeting to identify both the desired messages and the new communication methods. These ideas can start off wild (e.g., sky writing), but they will trigger other, more practical ideas (e.g., scoreboard announcements at sports games or doorknob tags for instructors' offices). At one university, we identified 57 viable ways to get faculty members' attention. As a result of the brainstorming, a full plan can be developed (see figures 3 and 4). Evaluating the effectiveness of each item on the plan and of the overall plan should be done once a year to see (a) if it is working and (b) if there are new opportunities.

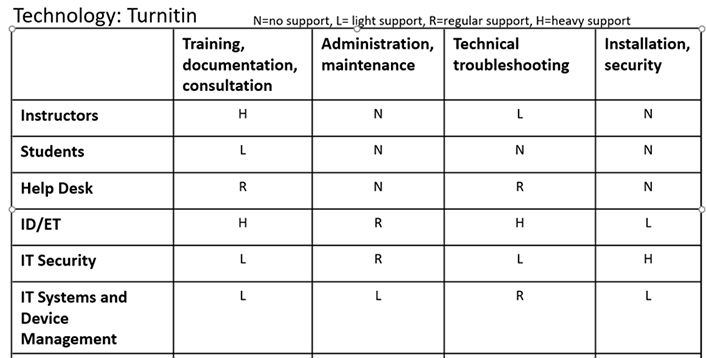

Support Systems Details

The group or person making the final decision on the adoption of a new technology will want to know the costs—not only for the technology but for the ongoing support. To develop a full cost identification, you may need to contact a variety of institutional areas, including central IT security, IT support, server administrators, web administrators, and anyone responsible for providing help desk or training support.

Common levels of support:

- Light: basic knowledge, webpage presence, no promotion

- Regular: same as above, with offer of consultations

- Heavy: same as above, with offer of training

Common categories of support:

- Financial

- Training, documentation, consultation

- Administration, maintenance

- Technical troubleshooting

- Installation, security

Depending on the technology, different categories may require different levels of support. For example, Turnitin might need heavy training, documentation, and consultation support for instructors, regular support for students, and light support for helpdesk staff. Building a chart of audiences and categories of support could help ensure that you have captured all audiences at the appropriate level. This will assist in determining any additional staffing costs (see figure 5).
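A chart of audiences and support categories can be kept as a small nested mapping, which makes it easy to spot cells that have not been filled in yet. This sketch uses the levels and categories listed above and the Turnitin example from the text; the helper names (`missing_entries`, `validate`) and the audience labels are hypothetical.

```python
LEVELS = ("light", "regular", "heavy")
CATEGORIES = (
    "Financial",
    "Training, documentation, consultation",
    "Administration, maintenance",
    "Technical troubleshooting",
    "Installation, security",
)

# Turnitin example from the text; only the category the text describes is filled in.
support_chart = {
    "Instructors": {"Training, documentation, consultation": "heavy"},
    "Students": {"Training, documentation, consultation": "regular"},
    "Help desk staff": {"Training, documentation, consultation": "light"},
}


def validate(chart):
    """Raise ValueError if a chart cell uses an unknown category or level."""
    for audience, levels in chart.items():
        for category, level in levels.items():
            if category not in CATEGORIES or level not in LEVELS:
                raise ValueError(f"Bad entry for {audience}: {category!r} -> {level!r}")


def missing_entries(chart):
    """List (audience, category) pairs that have no support level yet."""
    return [
        (audience, category)
        for audience, levels in chart.items()
        for category in CATEGORIES
        if category not in levels
    ]


validate(support_chart)
gaps = missing_entries(support_chart)
print(f"{len(gaps)} cells still to fill in")
```

Listing the gaps explicitly is a design choice: it prompts the team to decide on a level for every audience and category rather than silently treating an empty cell as "no support needed."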

Recommendation

Once the business case has been completed, you'll want to develop a recommendation (indicated in the chart in yellow) for management. This recommendation should include a summary, followed by the details of the investigation.

Management Review: Pilot

The purpose of this second management review is to determine—based on the proposal and knowledge of the campus culture and needs—if the technology should progress to a pilot. If a pilot is required/recommended before final approval, then a plan for managing the pilot would include who should be involved, how to gain their involvement, and timeframes for the pilot.

Assessment and Evaluation of the Technology

As the pilot is being completed, an evaluation of the technology should be conducted. This should be based on the key questions noted in the proposed tool review. Each pilot participant should be asked to complete an in-depth evaluation. You may want to use a chart based on Anstey and Watson's rubric or use that chart to build a decision-making matrix similar to Kepner Tregoe.9

Final Recommendation

The pilot results are added to the business case to produce a final recommendation (indicated in the chart in yellow) of go/no-go. The summary and other sections of the report should be revised to include a brief review of the assessment. If the report is long, include a list of changes and additions, including page numbers to help management quickly grasp the new information.

Management Review: Implementation Plan

This last management review is a final decision on whether the technology should be adopted. Based on the proposal, knowledge of the campus culture and needs, and the pilot results, management can determine if the technology should be adopted.

Project Plan

Once you receive the final go-ahead, a very detailed project plan is needed. The project plan should include specifics for both dissemination and all support systems (e.g., developing and implementing support and training, updating webpages). If you have limited experience creating a project plan, you may want support from a project manager in your organization.10

Close the Loop

Evaluate/Assess the Project

When you enter the implementation phase of the project, you will need to contact all the people connected with the review and ask them to evaluate how the project went from their point of view. This evaluation can be extremely helpful when the next technology review comes along. Key questions:

- What went well?

- What could have been handled better (and how)?

- What may have been missing?

Inform All Involved

If the review was initiated by instructors who were not involved in the review process, contact them with a final outcome.

Complete Your Documentation

- Set a review date for when this technology should be next evaluated against the marketplace to ensure that you continue to offer the best solutions.

- File all documentation, including copies of pertinent emails, in a single folder within a central location, using a naming convention that will be easy to understand by all team members.

- Update your list of reviewed technologies—both those supported and those reviewed but not supported. Indicate the file location for the documentation.
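If the list of reviewed technologies is kept in a structured form, the review dates can be checked automatically. The following is a minimal sketch under that assumption; the field names, tool names, dates, and folder paths are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ReviewRecord:
    tool: str
    decision: str       # "supported" or "not supported"
    next_review: date   # when to re-evaluate against the marketplace
    docs_location: str  # where the review documentation is filed


def due_for_review(records, today):
    """Return records whose next review date is on or before `today`."""
    return [r for r in records if r.next_review <= today]


# Hypothetical entries and paths, for illustration only.
registry = [
    ReviewRecord("Tool A", "supported", date(2021, 8, 1), "reviews/2020-tool-a/"),
    ReviewRecord("Tool B", "not supported", date(2022, 8, 1), "reviews/2020-tool-b/"),
]

due = due_for_review(registry, today=date(2021, 9, 1))
for record in due:
    print(f"{record.tool} ({record.decision}): see {record.docs_location}")
```

A shared spreadsheet with the same four columns would serve equally well; the point is that every reviewed tool, supported or not, has a recorded decision, a next review date, and a pointer to its documentation.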

Mini-Celebrate

Even if the project resulted in a "No-Go" decision, a mini-celebration is important to bring closure. Thank everyone who was involved in any phase of the project. (Donuts are often appreciated.)

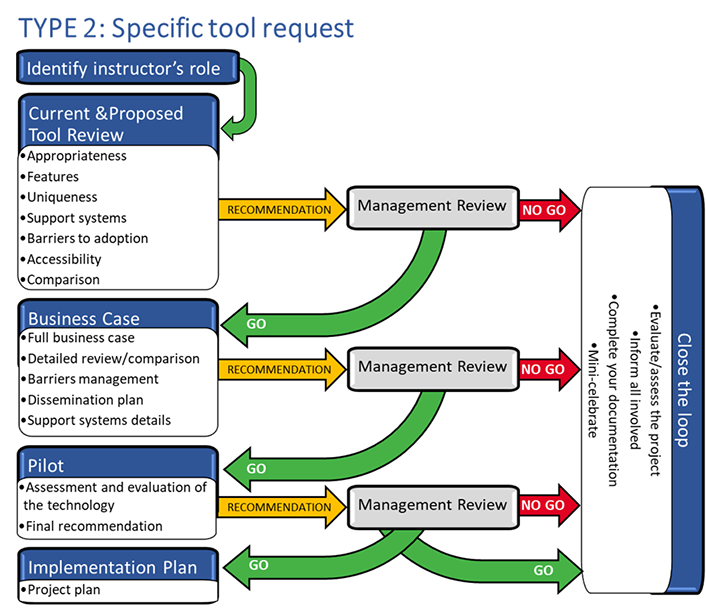

Type 2: Specific Tool Request

Most requests for reviews of new teaching and learning technologies come from faculty. Some instructors research extensively and find "the" tool they want to use. Others will select the first tool they see that appears to meet their needs. Some instructors hear recommendations from others. In addition, institutional administrators—a dean, the provost, or even the CIO—may request a review of a specific tool. Finally, vendors make many requests, of course. In a few circumstances, you may want or need to check out the specific tool the vendor is recommending.

If you have received a request to start supporting a specific tool, you can follow the basic process for Type 1 reviews, with a few additional actions. As a first step, you will need to identify the instructor's role.

Identify the Instructor's Role

When requesters provide a specific technology tool they want, they may be reluctant to consider other, similar tools. Keeping them involved in the project at each step is a primary way to help them understand the results of your research. You will need to determine the instructor's role to ensure the end result will support as many faculty as possible.

Current and Proposed Tool Review

Most technologies have a main purpose, and some have additional features. When you are asked to review a specific tool, you will want to consider the main purpose of the proposed technology as well as the main purpose of the current tools being supported. Key questions:

- What is the requester's goal?

- How closely does this tool meet the instructor's goal?

- Do you currently have a supported tool that accomplishes most of this goal?

- What issue is the instructor facing that this tool solves?

- What is the instructor looking for as far as the kind of solution (e.g., app versus server versus cloud, student use or instructor use only)?

- How difficult is the instructor's job without this specific technology?

- How frequently would this technology be used?

- What group of people and approximately how many people could use this technology?

Business Case

For the Type 2 business case, you will need to present a comparison of current and proposed tools. To conduct a thorough review, you will probably want to complete a decision-making matrix (see figures 1 and 2). Key questions:

- Does this technology offer a new solution?

- Is this new technology significantly better than the currently supported product?

- Is this the right time to introduce a change?

- What are the pedagogical values and implications?

- Does this tool provide additional capabilities?11

- How might this negatively impact students?

- How many other instructors could benefit from this new tool?

- Is there funding available from the instructor's department or another area?

- Is there another solution, possibly among the currently supported tools?

The Pilot, Implementation Plan, and Close-the-Loop steps for a Type 2 review are the same as in a Type 1 review.

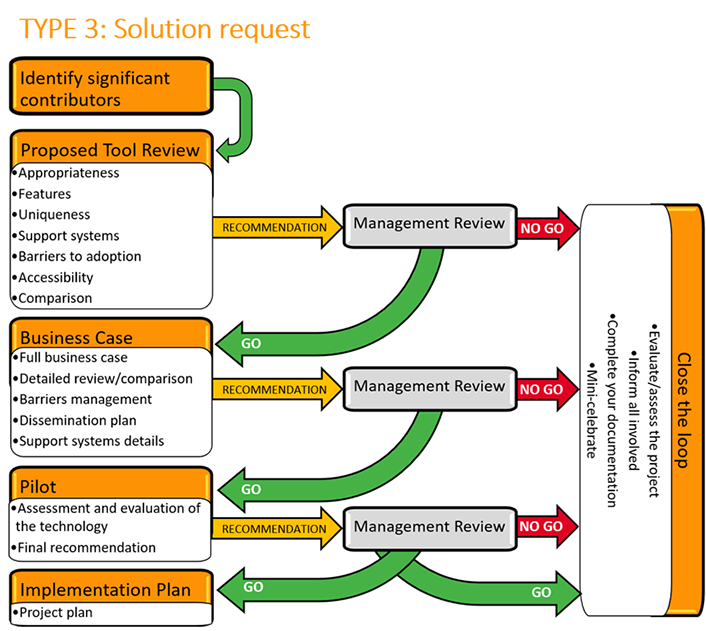

Type 3: Solution Request

Many instructors want simple solutions to common problems such as a way to allow for backchannel discussion in a classroom, quickly provide formative feedback to each student on an assignment, or share introductions electronically. While many tools are available as solutions, instructors often feel frustrated trying to find, evaluate, and compare them. Instructors therefore turn to the people (i.e., IDs/ETs) who have a better technical background to compare the solutions and build a recommendation.

If you have received a request for a solution to an issue, you can follow the basic process for Type 1 reviews, with a few additional actions. First, you will need to conduct an in-depth interview with the requester to identify the instructor's issue, assumptions, and objectives. Additionally, talking with other instructors, IDs/ETs, and your T&LC staff can help you identify the likelihood that other instructors are looking at a similar issue.

Identify Significant Contributors

A new tool for a specific application may support only a small number of instructors. A careful and complete interview with the requester will help determine the underlying issue or objective. Following this with discussions with a wide range of people before beginning your research may help you identify the overall value of providing a solution.

The TAM and similar models will be invaluable in determining if this instructor and others will actually implement the solution you identify. The initial interview is critical to ensuring that the solution actually resolves the problem within the parameters the instructor has set.

The primary difference between a Type 3 review and the other two types of review is that here you do not have a specific technology to consider. You will therefore need to interview the instructor in depth to be sure that you completely understand what is needed. Key questions:

- What issue is the instructor facing?

- What is the instructor looking for as far as the kind of solution (e.g., app versus server versus cloud, student use or instructor use only)?

- What is the instructor assuming about costs, support/training, ease of use, etc.?

- Are the instructor's technical skills and attitude in line with the tool's level of complexity?

- Is there another solution, possibly among the currently supported tools?

- What are the pedagogical values and implications?

- Does this tool provide additional capabilities?12

- How might this negatively impact students?

- How difficult is the instructor's job without this specific technology?

- How frequently would this technology be used?

- How many other instructors could benefit from this tool?

- Is there funding available from the instructor's department or another area?

To ensure that the issue is widely applicable to instructors, you should consider asking the twelve questions above in interviews with others as well: instructors, IDs/ETs, and T&LC staff. Additional key questions:

- Have other instructors indicated that this is an issue for them? If so, what are their names and departments?

- Is this an emerging issue for others?

- Would the proposed solution provide a valuable service to the general community?

The Proposed Tool Review, Business Case, Pilot, Implementation Plan, and Close-the-Loop steps for a Type 3 review are the same as in a Type 1 review.

Conclusion

Reviewing new teaching and learning technologies can be exciting. Too often, however, higher education institutions do not have the staffing and the money to continually review and replace technologies. Having a systematic review process in place can help. Careful analysis will go far to ensure that requesters know their requests have been taken seriously. Although these processes may seem complicated and burdensome, they can be adapted for an individual institution, giving the review team a common approach that will save time, address similar requests in the future, and also meet the changing needs of instructors.

Notes

1. Lauren Anstey and Gavan Watson, "A Rubric for Evaluating E-Learning Tools in Higher Education," EDUCAUSE Review, September 10, 2018.

2. See Lorin W. Anderson et al., A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom's Taxonomy of Educational Objectives, abridged edition (Pearson, 2000), and H.L., "SAMR Model: A Practical Guide for EdTech Integration," Schoology Exchange, October 30, 2017.

3. Andrina Granić and Nikola Marangunić, "Technology Acceptance Model in Educational Context: A Systematic Literature Review," British Journal of Educational Technology 50, no. 5 (September 2019). See also Everett M. Rogers, Diffusion of Innovations, 5th ed. (New York: Free Press, 2003), 222.

4. See "Accessibility in IT Procurement, Part 1," NASCIO, July 28, 2015, and "Higher Education Accessibility Online Resource Center," National Federation of the Blind (website), accessed August 18, 2020.

5. See also "Kepner Tregoe Decision Making: The Steps, the Pros, and the Cons," Decision Making Confidence (website), accessed August 18, 2020.

6. For a good list of tool feature considerations, see Anstey and Watson, "A Rubric for Evaluating E-Learning Tools in Higher Education."

7. Pat Reid, "Categories for Barriers to Adoption of Instructional Technologies," Education and Information Technologies 19 (June 2014).

8. Rogers, Diffusion of Innovations.

9. Anstey and Watson, "A Rubric for Evaluating E-Learning Tools in Higher Education"; "Kepner Tregoe Decision Making: The Steps, the Pros, and the Cons."

10. If you have access to Franklin-Covey training, I recommend its project-management essentials course. In addition, literally hundreds of books on project management are available, and online courses can be found through LinkedIn Learning, Skillsoft, and many other sources.

11. Anderson et al., A Taxonomy for Learning, Teaching, and Assessing; H.L., "SAMR Model."

12. Anderson et al., A Taxonomy for Learning, Teaching, and Assessing; H.L., "SAMR Model."

Pat Reid is Founding Partner, Curriculum Design Group (CDG), and was formerly Director, Instructional Innovation, at the University of Cincinnati.

© 2020 Pat Reid. The text of this article is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.