Key Takeaways

- The 21st-century workplace differs radically from the relatively stable and structured organizations of the past century; if our students are to be adequately prepared for its challenges, traditional pedagogy must adapt.

- This adaptation is particularly problematic in large courses, where key skill sets such as communication, collaboration, and critical thinking are more difficult to implement and assess.

- The Tufts University School of Dental Medicine set out to take on this challenge, despite an average class size of 200 students.

- Among its projects are a pilot course originally for fourth-year clinic-based students that now involves a team of students from each year and a real-time student response tool that plants the seeds of community in previously anonymous auditorium classes.

The 21st-century workplace has numerous characteristics, but perhaps none are as obvious as its interconnectedness and mutability. The skills needed to thrive in this environment place new demands on higher education and the ways in which we prepare students for the world.

This idea of adapting how and what we teach is rapidly moving out of the theoretical realm and into actual practice on campuses across the country. At Tufts University School of Dental Medicine (TUSDM) we are using technology to change the way students learn and faculty teach to better meet 21st-century demands.

Here, I describe our efforts, which include a pilot course, online tools to increase faculty-student interactions, and e-portfolios that students can use in their coursework as well as beyond campus in what will most likely be their ever-evolving careers.

The 21st-century Challenge

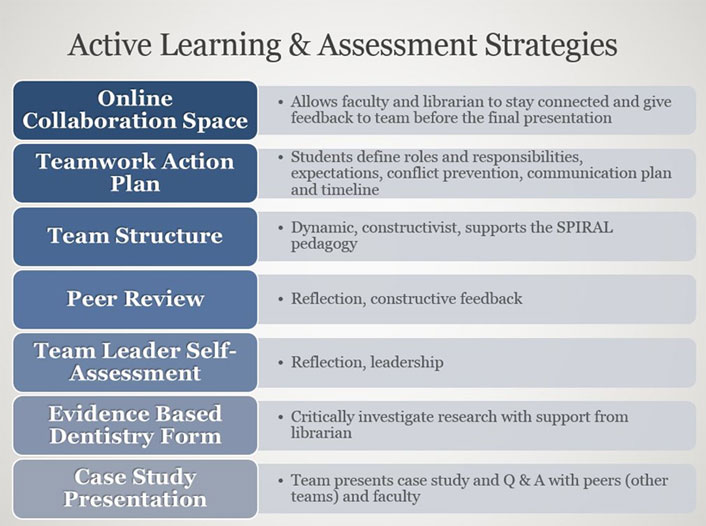

As our world becomes more globalized, cultures intertwine and teamwork plays an increasingly pivotal role in the workplace. In higher education, we need to translate teamwork and other key skills for students so that they graduate ready to perform in that workplace. Traditional learning environments, which typically focus on lecturing to largely passive students, simply do not meet those needs. Figure 1 shows some guidelines and assessments from our pilot course that move toward more active learning.

Figure 1. Guidelines and assessments taken from our pilot project

Pilot Project

TUSDM is one of the oldest dental schools in the country. Our four-year program begins with two years of didactic, lecture-based course work in basic science concepts such as microbiology and anatomy. Then, in their third and fourth years, students enter the clinic and begin working with patients.

To better emphasize skills such as teamwork, communication, and self-assessment, we took an existing learning experience — the fourth-year case presentation — and used it as the basis for giving students in earlier years opportunities to demonstrate their skills, learn new ones, and engage with upper-level students and coursework.

The Original Course

In the traditional case presentation, fourth-year students choose a patient they have been working with in the clinic and do a live presentation on the procedures they have performed, the patient's history, any drugs the patient is taking, and so on.

The goal is for each student to link everything together to show that his or her treatment approach and decisions are sound. The presentation occurs in an open forum, where faculty members can ask questions and challenge the students on their reasoning, choices, and practices.

Integrating a Team Approach

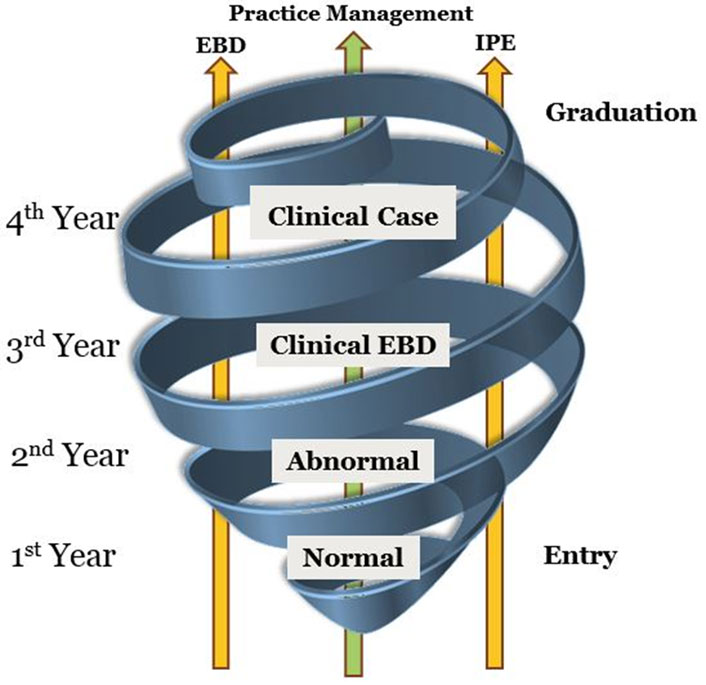

As our pilot project, we decided to team each fourth-year student with a third-year student, a second-year student, and a first-year student so they could work together on the fourth-year student's case. This is a new pedagogical approach called "Student-driven Pedagogy of Integrated, Reinforced Active Learning (SPIRAL)," modified from a program designed by Mark Wolff and Andrew Spielman from New York University College of Dentistry (see figure 2).

Figure 2. The SPIRAL model for dentistry

Each student has particular responsibilities that align with their progress through the program.

- First-year students are responsible for presenting information that is pertinent to the patient's case but represents "Normal" (see figure 2).

- Second-year students are responsible for presenting information on the medications the patient is taking, any illnesses the patient has, and risk factors.

- Third-year students are expected to answer a clinical question using evidence-based principles.

- Fourth-year students select one of their patients for the case presentation and coordinate the team project while working with their patient. They also must demonstrate leadership skills through coordination of their team, and afterwards they conduct a self-assessment.
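The team structure described above can be sketched in a few lines of Python. This is only an illustration: the article does not say how students are actually matched, so the function name `build_spiral_teams` and the simple pairing by list order are assumptions made for the example.

```python
from collections import defaultdict


def build_spiral_teams(students):
    """Group (name, year) pairs into teams with one student from each year.

    Hypothetical helper: students are paired in list order, which is an
    assumption for illustration, not the school's actual matching process.
    """
    by_year = defaultdict(list)
    for name, year in students:
        by_year[year].append(name)

    # Only as many complete teams as the smallest year group allows.
    team_count = min(len(by_year[year]) for year in (1, 2, 3, 4))

    teams = []
    for i in range(team_count):
        teams.append({
            # The fourth-year student owns the case and coordinates the team.
            "lead": by_year[4][i],
            "members": {year: by_year[year][i] for year in (1, 2, 3)},
        })
    return teams
```

Students left over in a larger year group are simply not placed in this sketch; a real process would need a rule for them.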

For the peer-review element, the students analyze one another as a team, reflecting on the project to discover areas for improvement and assessing each other's teamwork, presentation, and time-management skills. Each team also has mentors: faculty and clinical staff members who oversee the team and monitor its progress.

Student response to the pilot has been extremely positive. The first-year students are relatively new to the program; this group will be the first class to complete the project's four-year cycle and, through this teamwork, gain an understanding of their future roles in years two through four.

We are still working on how to assess the students through this process. Currently, the fourth-year student is the only one who receives a grade as part of their clinical competencies. The mentors on the team use a rubric to assess the student's diagnosis, clinical findings, problem identification, and interpretation. Our plan now is to move toward more quantitative data to help the faculty better facilitate and assess skills such as teamwork and communication. Traditionally, students have focused solely on case management and case practice. We'd also like to better train our students ahead of time on self-assessment, including how to do it effectively and what that means.

Other Learning Strategies

Several other tools supported our pilot efforts: provision of a student response tool; a move to computer-based exams; and the introduction of peer review.

Student Response Tool

One of our key challenges was to make large lecture-based classes more interactive and personalized — no small task given that the seats are bolted to the floor, making teamwork opportunities and other go-to interaction approaches challenging, if not impossible.

We therefore decided to invest in the Learning Catalytics tool, an online assessment software program that lets students use their smartphones, laptops, or tablets to participate in class. Faculty can then ask students questions in class and immediately see and address the answers. Instructors can thus check in with their students in real time to ensure they understand a topic. It's also helpful for students who might be confused about something but are unlikely to raise their hand in a crowd. Because the tool is accessed online, has numerous question formats, and is easy to use in a class for 200+ students, TUSDM has moved from one faculty member piloting the software to over 55 faculty using it in not only lectures but also clinics, exam reviews, workshops, and online learning.

One faculty member, for example, teaches an introduction-to-research course, a topic about which students often have many misconceptions. The professor decided to use this tool to check in with students on the first day of class to find out what they actually thought about "research." Not surprisingly, many of their initial reactions were comments like "boring" and "lab coat." However, because the professor could read these reactions in real time, they led to a lively discussion about misconceptions, as well as the importance of learning how to identify good sources online and execute a sound research project, which, after all, is essential in higher education.

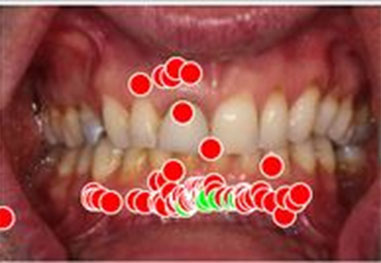

Figure 3. Radiograph

The response tool is also used in other ways. Sometimes faculty will share a radiograph image and ask students to identify an area that is problematic; students can click or draw directly on the image so that faculty know right away whether they comprehend the graphic information (see figure 3). Other times a student's in-class question might lead to a homework assignment that the class can review together the next day. And faculty who have never tried team-based learning because of the large number of students can now do so using the team-based learning format. In short, the tool offers a creative way to engage with a large audience of students and to give them the feeling of participation and interaction in regular and often spontaneous ways.

Computer-Based Exams

Like many universities, we recently moved from paper-based to computer-based exams. At first glance, it seems like the primary motive for the move is financial — automatic grading obviously saves time and thus money. Another assumed motive is that students prefer this mode of assessment. While both of those reasons are generally true, what's been interesting for us is that this move has been a creative journey as we explore the possibilities that arise when using computer-based assessment as a learning tool. Rather than waiting for teaching assistants to finish grading or waiting to use the Scantron test scoring machines, faculty members get assessment analytics right away. They can immediately assess their students' understanding, as well as areas that might require more class time before moving on to a new unit.

The system also generates student reports and maps the curriculum to specific learning competencies. Say, for example, a student misses a particular exam question. The report will link the missed question back to a learning objective in the syllabus — say, the cognitive domain. The student can then ask, Am I lacking knowledge? If not, am I having trouble applying that knowledge, or is it a problem with my critical thinking skills? This type of information is also useful to instructors, who can prepare for student meetings by reviewing reports and thus help correct misconceptions and support the students in taking their work to the next level.
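The idea behind such a report can be sketched briefly. The following Python example is only an illustration under stated assumptions: the function name, the question IDs, and the objective labels are hypothetical, and it is not how the exam system itself is implemented.

```python
from collections import Counter


def missed_objective_report(question_objectives, responses):
    """Count one student's missed questions per learning objective.

    question_objectives: dict of question id -> objective label
        (labels are hypothetical examples, not the syllabus wording).
    responses: dict of question id -> True if answered correctly.
    """
    missed = Counter()
    for question_id, correct in responses.items():
        if not correct:
            missed[question_objectives[question_id]] += 1
    return dict(missed)


# Example data, invented for illustration.
objectives = {
    "q1": "knowledge",
    "q2": "application",
    "q3": "critical thinking",
    "q4": "application",
}
answers = {"q1": True, "q2": False, "q3": True, "q4": False}
report = missed_objective_report(objectives, answers)
```

Here `report` shows two missed questions under "application" and none elsewhere, which suggests that this student knows the material but has trouble applying it.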

Peer Review

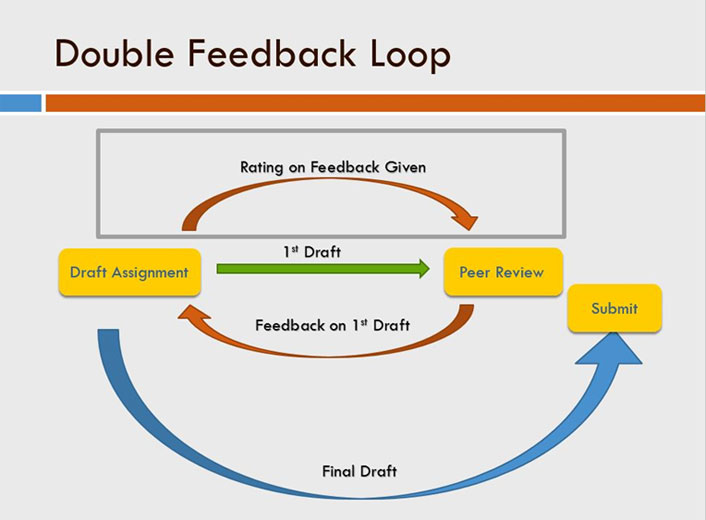

TUSDM piloted an online peer-review tool called Mobius SLIP, which allows for double-looped feedback, in the 2016 Research Methodology course (see figure 4). Students switch between the roles of active evaluator and receiver, while the instructor plays the role of the facilitator. Peer review is a way for students to receive feedback as they work on a project, so they can make adjustments before the final submission. This kind of formative feedback is critical: it lets students improve their work by reflecting on constructive comments, and it supports the development of 21st-century skills. Peer review also provides mental scaffolding that allows students to build on each other's learning for a more effective learning experience.

Figure 4. The double-looped feedback cycle

The most salient shortcomings of peer review include the uneven quality of feedback and the time it takes faculty to implement. Student evaluators are often afraid to give negative feedback and might be unsure how much feedback to share, while students receiving feedback may feel that it is not relevant. The pilot program employed a technology tool that allowed a double-looped review, whereby student evaluators provide feedback and the student who receives it assesses its quality, which benefits both. The tool also made it easy for faculty to facilitate the peer review. The traditional way of facilitating a peer review is basically impossible in large classes because of the time faculty have to spend grading and facilitating the assignments. The tool allows the instructor to preset everything and can even set up the groups for peer review.
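The two loops can be made concrete with a small sketch. This Python example is a simplified illustration and not the Mobius SLIP algorithm: the record fields (`reviewer`, `author`, `score`, `helpfulness`) and the use of plain averages are assumptions made for the example.

```python
from statistics import mean


def summarize_double_loop(reviews):
    """Summarize both feedback loops from a list of review records.

    Each record is a dict with hypothetical keys:
      reviewer, author: student names
      score: the reviewer's rating of the author's work (loop 1)
      helpfulness: the author's rating of that feedback (loop 2)
    Returns (average work score per author,
             average helpfulness per reviewer).
    """
    work_scores = {}
    feedback_ratings = {}
    for review in reviews:
        work_scores.setdefault(review["author"], []).append(review["score"])
        feedback_ratings.setdefault(review["reviewer"], []).append(
            review["helpfulness"]
        )
    return (
        {author: mean(scores) for author, scores in work_scores.items()},
        {reviewer: mean(r) for reviewer, r in feedback_ratings.items()},
    )
```

Keeping the two summaries separate reflects the point of the double loop: a student learns both how peers judged the work and how useful peers found the feedback that student gave.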

Advice

My experience with applying these 21st-century shifts in pedagogy to large classes is relatively new, but I do have a few suggestions for others embarking on this journey.

Start Small

Starting small is important; people can get really excited about a technology or another of the school's initiatives, hearing the great success stories without understanding all the work required to get there. I recommend that you break any project down into smaller projects and look for faculty champions and an interested group of students. By starting small, you can work out the kinks early.

Listen to Faculty

Using technology for technology's sake isn't the best-case scenario. You really want to listen to what faculty are doing in their classrooms and then go experience it. Find out what is working and not working, and then explore which tools match both the course needs and the learning objectives. Keeping focused is so important. Part of an instructional designer or education technologist's job is to go out and explore what's happening in technology and find new and exciting things that can open up new doors; the other part is to be realistic about what we can actually do to support faculty and teaching at our schools.

Answer the "Why?" Question

Another important step is to tightly connect what you're doing with a "why" for both faculty and students to create value. Let's go back in time and say, for example, that we were just launching Learning Catalytics as a new tool at Tufts Dental. Generally, both faculty and students are extremely busy; simply handing off the tool without explaining why they should try it and how it works will almost guarantee that it gathers dust.

"I am a big fan. Learning Catalytics has enabled us to get feedback from students in real-time, which is no easy feat in a class of 190. I can look over their responses and see if their thinking is spot on or if they have gone off track and then address it right away. We have seen a tremendous improvement in students' understanding as well as attendance in class and the fact that they have 24/7 access to the session questions afterwards allows them to engage with the materials again."

—Michael Kahn, Professor, Oral Pathology

Whether faculty and students use any new technology, inside or outside the classroom, depends largely on its benefits. Our job is to be sure those benefits are clear, plentiful, and understood. Such benefits typically relate to saving time or improving the learning process; ideally, they will include both.

Create a Safe Space

What is innovation? Some leading experts say that innovation is the implementation of creative ideas in order to generate value, and that it is about staying relevant during times of change. It is not easy, but it is critical that your campus support faculty who are trying new strategies to improve learning. It takes time to plan and implement change, and it doesn't always work the first time. In addition, it takes a community to support these initiatives and a safe space in which people can try new things and be allowed to make mistakes. You need to be part of the conversation.

Conclusion

So far, our experience at TUSDM has helped me realize the importance of thinking outside the box — and outside traditional pedagogy. At their best, these new technologies not only facilitate the development of new competencies among our students but also give us new ways of thinking about learning and about each other.

It helps to remember what it is like to be excited by learning itself, and to revive the curiosity and passion that are often trampled in the rush for grades and academic advancement. If we, as administrators and faculty, can demonstrate this and allow ourselves to model curiosity and beyond-the-box thinking to our students, we will automatically create more enriching learning environments.

Jennipher Murphy, MS Ed, is the director of Educational and Faculty Support at Tufts School of Dental Medicine in Boston.

© 2017 Jennipher Murphy. The text of this EDUCAUSE Review online article is licensed under the Creative Commons BY-NC-ND 4.0 license.