Key Takeaways

- Faculty members are increasingly using social media in a professional context, raising questions about how administrators might value and measure these contributions to academic fields.

- A group of researchers set out to determine how modern measurement tools — such as social network analysis — might be used to gauge productivity in faculty's use of social media.

- The resulting multiyear study reveals potential strategies to increase and illustrate scholarly productivity in these new arenas.

An increasing number of higher education faculty are using social media — such as blogs, podcasts, and Twitter feeds — for professional purposes to communicate with peers worldwide.1 This practice raises two key questions for administrators:

- What value do we place on social media use in terms of faculty productivity?

- What measures do we use to determine faculty members' impact on their fields through their social media use?

To help answer these questions, we set out to determine how institutions might incorporate modern measurements — such as social network analysis — with traditional indicators to measure faculty productivity.

Defining Faculty Productivity

In higher education, faculty productivity is traditionally measured in terms of

- teaching success, such as through positive student opinion surveys;

- scholarship and creativity, such as through dissemination of scholarly and creative works (in journal articles, books, performances, and so on) in conjunction with how frequently those works are cited in the work of others; and

- service to one's institution and professional discipline, such as through membership on institutional committees.

In previous work, we described faculty productivity as activity that positively contributes to the faculty member's institution.2 Conceptually, this includes anything that supports and promotes the institution's goals. For most colleges and universities, a primary goal is teaching. However, important secondary and tertiary goals include encouraging faculty members' scholarly research and publication and maintaining strong ties with specific academic disciplines, both to uphold the institution's reputation and to provide career openings for alumni by exerting influence in various disciplines.3

Since the 1990s and the inception of The Delaware Study, faculty contributions at hundreds of US colleges and universities have been assessed using data-driven measures. According to The Delaware Study's chief architect, Michael Middaugh, measuring and assessing faculty productivity requires constant review and updating both to remain valid and to present the general public with an accurate report of an institution's productivity and efficiency.4

Measuring Scholarly Impact

Higher education faculty members have traditionally struggled with reporting their work's impact because each case is unique, and diverse variables, such as teaching load and discipline/subdiscipline expectations, affect individual performance.

Traditionally, a faculty member's scholarly impact is measured by the number of times his or her work is cited by others. To report this citation impact, faculty members use various methods. One example is the h-index, also known as the Hirsch index or Hirsch number.5 According to Google Scholar, which reports h-indices as part of author profiles, the h-index is "the largest number h such that h publications have at least h citations."
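The Google Scholar definition quoted above can be turned into a short calculation. The following is a minimal sketch in Python; the function name `h_index` and the sample citation counts are illustrative assumptions, not data from our study.

```python
def h_index(citation_counts):
    """Return the largest h such that h publications have at least h citations."""
    # Rank publications from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank  # the top `rank` publications all have >= rank citations
        else:
            break
    return h


# Hypothetical citation counts for six publications (not data from the study).
example_counts = [25, 8, 5, 3, 3, 0]
print(h_index(example_counts))
```

With these hypothetical counts, three publications have at least three citations each, but there are not four with at least four, so the sketch reports an h-index of 3.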

Metrics such as the h-index help academics better understand and articulate their journal publications' impact. Likewise, similar metrics designed to measure the reach and effects of social media participation might help academy members better understand and articulate their influence in that dimension.

The Need for Alternative Measures

Although social media tools such as blogs and podcasts are not often cited in traditional scholarly literature, they are widely referenced and linked to in modern social media (such as a "tweet" recommending a podcast episode or series). This fact led us to explore how modern technologies and assessment strategies might inform both professional practice and faculty evaluation.

As Heather Piwowar points out,6 a watershed moment in redefining scholarly productivity occurred in January 2013, courtesy of a change to the US National Science Foundation's (NSF) grant application policies and procedures:

Instructions for preparation of the Biographical Sketch have been revised to rename the "Publications" section to "Products" and amend terminology and instructions accordingly. This change makes clear that products may include, but are not limited to, publications, data sets, software, patents, and copyrights.7

With this change, the NSF joined a chorus of scientists and researchers in recognizing that evolving web-based technologies and current scholarly activity have exceeded the capacity of traditional scholarly productivity measures.8 Incorporating social media into the research workflow can improve the overall responsiveness and timeliness of scholarly communication. It also has a powerful secondary advantage: exposing and pinpointing scholarly processes once hidden and ephemeral.9

To date, however, work in social media is unlikely to be identified as impactful unless it is somehow transformed into a traditional paper published in a journal that has an impact factor rating. To investigate which measures we might use to determine faculty members' impact on their fields through social media use, we explored two tools: altmetrics and social network analysis.

Altmetrics

"No one can read everything." This is the first line of Altmetrics: A Manifesto.10 Having established this point, the manifesto proposes a primary solution to information overload: apply filtering systems to support users in finding high-quality scholarly work on a given topic. Filtering mechanisms and processes — such as peer review, citation counting, a journal's average citations per article (JIF), and the more contemporary and aforementioned h-index — have provided some structure to date. However, if we are to effectively, efficiently, and accurately assess scholarly productivity in multiple forms — and thereby determine the true impact of a scholar's work — we need new filtering systems.

Altmetrics applies filtering systems that can handle the ever-increasing volume of new work and also increase the scope of official academic knowledge to include impact in three key areas:

- The sharing of "raw science," including data sets, code, and experimental designs.

- Semantic publishing or "nanopublication," in which the citeable unit is an argument or passage rather than an entire article.

- Widespread self-publishing via blogging, microblogging, and comments or annotations on existing work.11

Because creators of social media products might find it difficult to achieve recognition through traditional means, altmetrics could be crucial in evaluating and promoting web-aware scholarship. One method researchers have identified as a useful alternative measure of impact is social network analysis. Indeed, Wouter de Nooy, Andrej Mrvar, and Vladimir Batagelj argued that social network analysis provides "the most suitable" method for revealing the structure of complex data.12

Social Network Analysis and Social Media Impact

Social network statistics and visualizations offer a powerful perspective on the activities of various types of networks; such statistics are used to develop strategies to enhance networks (such as by improving communication within an organization) or to inhibit networks (such as by disrupting terrorist groups or stalling the spread of infectious diseases).

Common statistical measures of social network analysis include the following:

- Density: the number of connections present in a network relative to the total number of possible connections.

- Centrality: how central a person is in a network, as determined by aspects such as their number of connections to others (degree), their distance from others in the network (closeness), and the degree to which they are located between others on network pathways (betweenness).

- Cliques: smaller complete subgroups within a larger network.

- Homophily: the tendency of networked individuals to associate and bond based on a shared trait.13
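To make some of these measures concrete, here is a minimal sketch in plain Python that computes density (treated here as the share of possible connections that actually exist), a simple degree-based centrality, and a clique check for a small undirected network. The names (`density`, `degree_centrality`, `is_clique`) and the four-person network are hypothetical; dedicated social network analysis software offers far richer versions of these statistics.

```python
from itertools import combinations

# Hypothetical undirected network: each pair is one connection.
edges = {("Ana", "Ben"), ("Ana", "Cy"), ("Ben", "Cy"), ("Cy", "Dee")}
nodes = sorted({person for edge in edges for person in edge})


def density(nodes, edges):
    """Share of all possible connections that are actually present."""
    possible = len(nodes) * (len(nodes) - 1) / 2
    return len(edges) / possible if possible else 0.0


def degree_centrality(nodes, edges):
    """Each person's number of connections, scaled by the maximum possible."""
    degree = {n: 0 for n in nodes}
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    max_degree = len(nodes) - 1
    if max_degree == 0:
        return {n: 0.0 for n in nodes}
    return {n: degree[n] / max_degree for n in nodes}


def is_clique(group, edges):
    """True if every pair of people in the group is directly connected."""
    linked = {frozenset(edge) for edge in edges}
    return all(frozenset(pair) in linked for pair in combinations(group, 2))


print(density(nodes, edges))
print(degree_centrality(nodes, edges))
print(is_clique(["Ana", "Ben", "Cy"], edges))
```

In this toy network, Cy is connected to everyone and so has the highest degree centrality, while Ana, Ben, and Cy form a clique because each is connected to the other two.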

The two basic network properties used to render social networks are connection data and attribute data. Connection data is represented using dots (nodes) to show individual units, with lines showing connections between the nodes. Attributes are additional features, such as labeling the nodes with numbers or changing the shape, color, or size of the nodes. For example, in our experiment, for traditional scholarly production we used numbers to represent the cited publication and colors to represent the publication type.
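The distinction between connection data and attribute data can be sketched as two small data structures. This is an illustration only: the node names, the publication types, and the color choices are assumptions made for the example, not the encoding used in our figures.

```python
# Connection data: which nodes are linked (drawn as lines between dots).
connections = [
    ("examinee", "citing_author_1"),
    ("examinee", "citing_author_2"),
]

# Attribute data: extra features attached to each node.
attributes = {
    "citing_author_1": {"publication": 3, "type": "journal"},
    "citing_author_2": {"publication": 5, "type": "book"},
}

# Hypothetical mapping from publication type to node color.
TYPE_COLORS = {"journal": "blue", "book": "green", "proceedings": "orange"}


def node_style(node):
    """Return the label and color a drawing tool could use for a node."""
    info = attributes.get(node)
    if info is None:
        # Nodes without attribute data get a neutral default style.
        return {"label": node, "color": "gray"}
    return {"label": str(info["publication"]), "color": TYPE_COLORS[info["type"]]}


for _, target in connections:
    print(target, node_style(target))
```

Keeping the two kinds of data separate means the same set of connections can be redrawn with different labels, colors, or sizes as new attributes are collected.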

We examined a five-year sample of the traditional publication and social media productivity of one of us (hereafter referred to as "author examinee") to explore the use of social network analysis as an altmetrics measure. Our goal was to compare the author examinee's publication impact and social media impact, and to take the first step in what will be an iterative process exploring the use of altmetrics to assess traditional and social media scholarly activity.

Analysis of Traditional Scholarly Impact

In the first step of our social network analysis, we created a network of the author examinee's traditional scholarly productivity. We carried out this traditional productivity analysis using five criteria:

- Works included were cited in a traditional media form (such as publications, journals, books, annuals, and proceedings).

- The citations occurred in a five-year window between 2010 and 2015.

- The works were indexed in Google Scholar.

- The author was the first author of the cited work.

- Only one citation was counted for each citing author.
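The five criteria above amount to a filter followed by a de-duplication step. The sketch below shows one way this could be expressed in Python. The `Citation` record, its field names, and the sample rows are hypothetical, and treating the 2010 to 2015 window as inclusive at both ends is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    citing_author: str
    cited_work: int              # number identifying the examinee's publication
    year: int
    traditional_venue: bool      # journal, book, annual, proceedings, ...
    in_google_scholar: bool
    examinee_first_author: bool


def unique_citing_authors(citations, start=2010, end=2015):
    """Apply the inclusion criteria and keep one citation per citing author."""
    kept = {}
    for c in citations:
        if not (c.traditional_venue and c.in_google_scholar and c.examinee_first_author):
            continue
        if not (start <= c.year <= end):
            continue
        # setdefault keeps only the first qualifying citation for each author.
        kept.setdefault(c.citing_author, c.cited_work)
    return kept


# Hypothetical records (not data from the study).
sample = [
    Citation("Author A", 1, 2011, True, True, True),
    Citation("Author A", 2, 2013, True, True, True),   # same author: counted once
    Citation("Author B", 2, 2009, True, True, True),   # outside the window
    Citation("Author C", 3, 2014, False, True, True),  # not a traditional venue
    Citation("Author D", 3, 2015, True, True, True),
]

network = unique_citing_authors(sample)
print(len(network), "unique citing authors;", len(set(network.values())), "works cited")
```

The resulting mapping from citing author to cited work is the kind of connection list from which a network like the one described next can be drawn.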

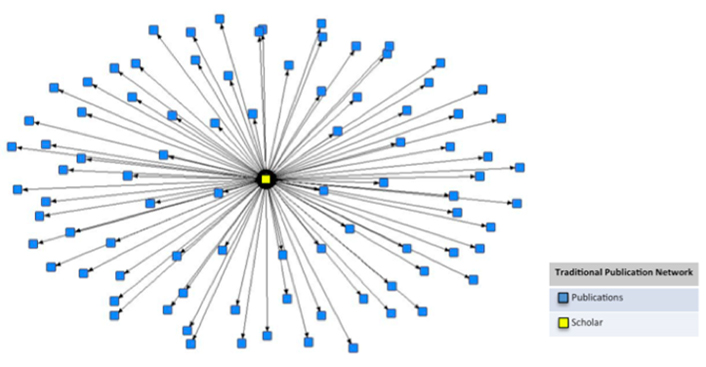

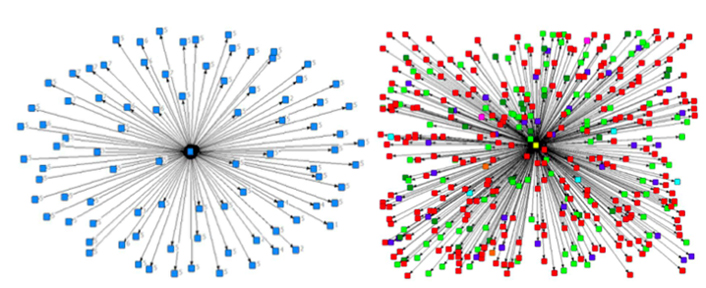

Figure 1 shows the author examinee's basic network of scholarly activity in traditional publications. The analysis revealed that, between 2010 and 2015, 88 unique authors cited seven of the author examinee's publications.

Figure 1. The author examinee's traditional publications: 88 unique connections for seven publications cited

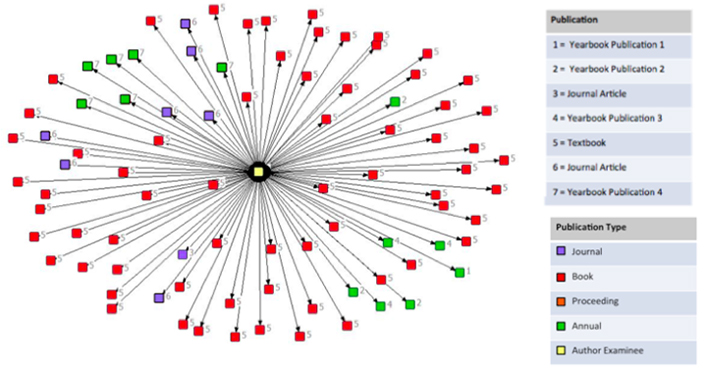

We then enhanced the network's detail by adding the attribute of publication identity to each node to show the publication cited, by number. To further enhance the representation, we assigned each publication type a color and colored each node accordingly. This provided a more detailed visual of the author examinee's publication impact between 2010 and 2015 based on the criteria established for the analysis.

Figure 2. The author examinee's enhanced traditional network

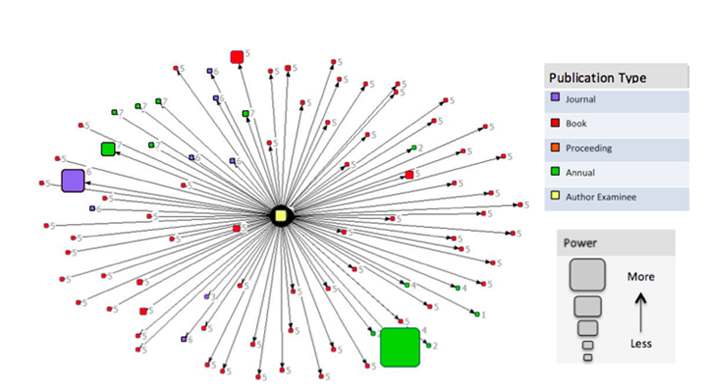

We then added a final dimension to the network: a power index attribute, which we arrived at by adding two indices:

- the h-index, which measures an individual author's impact by combining the number of publications with the number of times each is cited, and

- the i10-index, introduced by Google in 2011, which measures the number of publications with at least 10 citations.

By combining these measures, it became possible to determine the author examinee's power index, as well as the power index of those who cited the author examinee. We then began to consider how we might include such a measure in an alternative process for assessing scholarly impact. Note that the basis for this power index involves assessing the citing authors' Google Scholar profiles. Thus, the resulting network shows a power index only for those authors with established Google Scholar profiles (figure 3).
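As a worked illustration of the power index described above, the following Python sketch adds an h-index and an i10-index computed from a list of citation counts. In our analysis the two indices were read from Google Scholar profiles; computing them from raw counts, the function names, and the sample numbers here are assumptions made for the example.

```python
def h_index(citation_counts):
    """Largest h such that h publications have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank
        else:
            break
    return h


def i10_index(citation_counts):
    """Number of publications with at least 10 citations."""
    return sum(1 for citations in citation_counts if citations >= 10)


def power_index(citation_counts):
    """Sum of h-index and i10-index; None when no profile data is available."""
    if citation_counts is None:
        return None  # author without an established profile
    return h_index(citation_counts) + i10_index(citation_counts)


# Hypothetical citation counts for one citing author (not data from the study).
counts = [40, 22, 12, 9, 4, 1]
print(h_index(counts), i10_index(counts), power_index(counts))
```

For these hypothetical counts the h-index is 4 and the i10-index is 3, giving a power index of 7. Returning `None` for a missing profile mirrors the fact that the network shows a power index only for authors with established profiles.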

Figure 3. The author examinee's network with a power index

The result of this analysis was our finding that the author examinee had an impact in the area of traditional scholarly activity. The author examinee's work was published in a variety of venues and was cited by many peers in the field, including those with high power index measures.

Analysis of Social Media Scholarly Impact

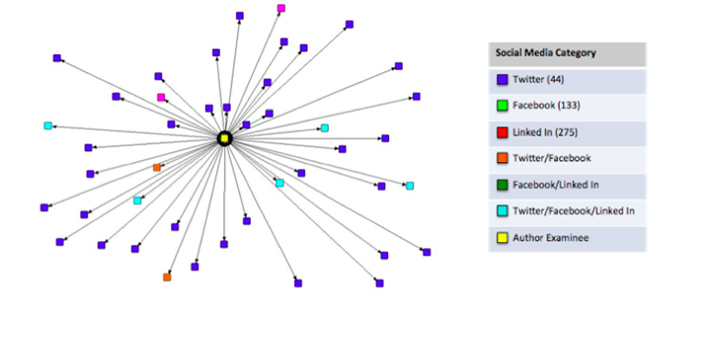

The next step in the network analysis was to evaluate the author examinee's impact in social media. The criteria for the analysis of scholarly social media impact included the following elements:

- The connections were between the author examinee and members of the academic community.

- The connections existed for the purpose of academic work.

- The connections were unique (individuals were counted only once).

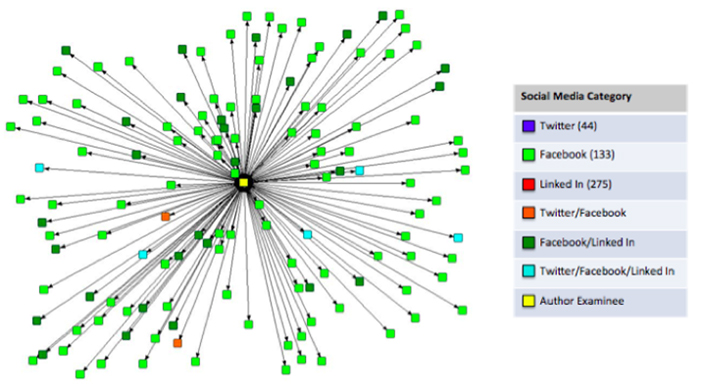

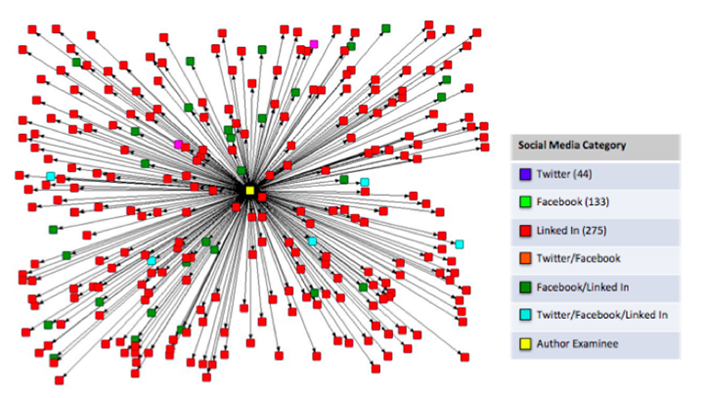

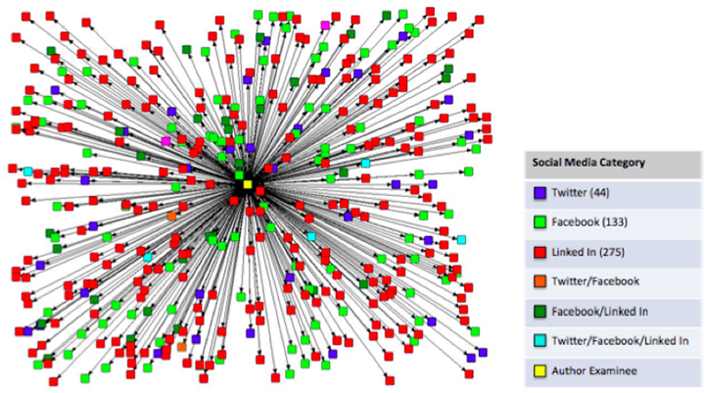

Our social network analysis of social media identified 452 unique Twitter, Facebook, and LinkedIn connections related to scholarship. Figures 4–7 show the author examinee's social media subnetworks and composite network.

Figure 4. The author examinee's Twitter network (44 connections)

Figure 5. The author examinee's Facebook network (133 connections)

Figure 6. The author examinee's LinkedIn network (275 connections)

Figure 7. The author examinee's composite social media network (452 unique connections)

The author examinee's traditional publications demonstrated impact in the type and number of connections created through scholarly production. However, the author examinee's social media network of 452 unique connections (figure 7) is more than five times the size of the traditional scholarly network of 88 unique connections (figure 1).
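The two operations behind this comparison, counting each individual only once across platforms and then comparing network sizes, can be sketched in a few lines of Python. The platform sets below use made-up identifiers; only the totals 452 and 88 come from the analysis reported above.

```python
# Hypothetical connection identifiers per platform (not data from the study).
twitter = {"p01", "p02", "p03"}
facebook = {"p03", "p04"}
linkedin = {"p02", "p05", "p06"}


def composite_network(*platforms):
    """Union of all platform networks, so each individual is counted once."""
    return set().union(*platforms)


def size_ratio(larger, smaller):
    """How many times larger one network is than another."""
    return larger / smaller


composite = composite_network(twitter, facebook, linkedin)
print(len(composite))  # p02 and p03 appear on two platforms but count once

# Reported totals: 452 social media connections versus 88 citing authors.
print(round(size_ratio(452, 88), 2))
```

Dividing 452 by 88 gives roughly 5.14, which is the basis for describing the social media network as more than five times the size of the traditional one.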

Figure 8's visual comparison of these networks shows the stark difference between the author examinee's traditional and social media networks. Although we have yet to determine whether, and how many of, these social media connections are based in scholarly activity, this typically invisible network clearly merits attention and is worthy of the work required to consider its activity in the tenure and promotion process. This is particularly true for educational technology professors, who are often expected, if not required, to have a social media presence.

Figure 8. A comparison of the author examinee's traditional scholarly network (88 connections) and social media network (452 unique connections)

Discussion

The use of altmetrics is growing, but much work remains to determine how to include them in the tenure and promotion process.

The Challenges

Beyond questions about whether altmetrics indicate relevant scholarly communication,14 current altmetrics can hardly be considered a paradigm shift. Scholarly articles remain the basic unit of analysis, because altmetrics search capacity is based on social media mentions of articles that can be identified with a digital object identifier (DOI). Until we can associate universally accepted unique identifiers with individual social media products, the assessment of social media knowledge creation will be limited to the impact of articles and journals.
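To illustrate why the DOI is the pivot of this approach, the sketch below scans a handful of social media posts for DOI-like strings and counts mentions per article. It is not how any particular altmetrics service works: the regular expression is a common approximation of DOI syntax, the function names are hypothetical, and the sample posts are invented (the DOI itself is the one listed in note 15).

```python
import re

# Approximate DOI pattern: "10.", a 4-9 digit registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r'\b10\.\d{4,9}/[^\s"<>]+')


def find_dois(text):
    """Return DOI-like strings found in text, minus trailing punctuation."""
    return [match.rstrip(".,;:)") for match in DOI_PATTERN.findall(text)]


def mentions_by_doi(posts):
    """Count how many posts mention each DOI (DOIs are case-insensitive)."""
    counts = {}
    for post in posts:
        for doi in {d.lower() for d in find_dois(post)}:
            counts[doi] = counts.get(doi, 0) + 1
    return counts


# Invented posts for illustration only.
posts = [
    "Worth reading: https://doi.org/10.1371/journal.pone.0120495.",
    "New podcast episode is out!",
    "See doi:10.1371/JOURNAL.PONE.0120495 for details",
]
print(mentions_by_doi(posts))
```

The post announcing a podcast episode contributes nothing to the count because it carries no DOI, which is exactly the limitation described above.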

Further, Stefanie Haustein, Rodrigo Costas, and Vincent Larivière warned of a potential Matthew Effect in social media: those who are popular will continue to gain in popularity, while those who have less of a footprint might continue to languish in obscurity.15

Finally, we currently have no standard for what constitutes "impact" when it comes to knowledge created using social media. Edwin Horlings pointed out that, while altmetrics is potentially revolutionary, it also offers the same false promise of any technology system: that somehow the system itself will positively influence development.16 Just as the development of the Internet required standards and protocols for computers to function in a network, we must develop standards and protocols if assessments of social media and scholarly impact are to be effective in a global research network.

The Case for Overcoming the Challenges

Implementing altmetrics entails clear challenges, but the challenges are similar to those faced in the traditional tenure and promotion process. As Masood Fooladi and his colleagues argued, the same criticisms leveled at altmetrics were also leveled at citation indexes when they were introduced in the 1950s.17 Any new system faces challenges; the key is to determine whether a new scholarly impact assessment system is worth the cost, resource expenditure, and effort of overcoming implementation barriers.

In the case of altmetrics, the answer is clearly yes, as indicated by the growth of both online tools and the social media-based knowledge that scholars are producing. This is particularly true in fields such as educational technology, where forgoing social media production is not a realistic option.

Researchers around the world are taking the first steps to implement altmetrics. The fact that the NSF has demonstrated an openness to moving beyond publications in its consideration of expertise is a favorable sign that minds are beginning to open to new types of scholarly impact.

Conclusions and Next Steps

At the start of this article, we posed two questions. We now venture our answers:

- What value does social media have in terms of faculty productivity? Although the value itself is certainly there, how best to quantify that value is yet to be determined and requires further discussion among faculty and administration.

- What measures should we use to determine social media impact? Altmetrics and social network analysis are promising measurement strategies for determining faculty members' impact on their field.

Social network analysis and other visualization approaches are particularly promising methods for documenting activity in ways that are meaningful to institutional administration. Our experiment in applying these approaches to a single faculty member and developing relatively easy-to-understand illustrations and reports is a qualified success, but the analysis of one individual is merely a first step.

Our next steps will focus on illustrating comparative results among a range of faculty members with differing levels of scholarly productivity. We also plan to review our results with college and university administrators to determine whether they are, in fact, as easy to understand as we think.

We hope the results of our study promote a better understanding of how modern technologies and assessment strategies can inform both professional practice and faculty evaluation, and motivate others to continue research in this area.

Notes

1. Jana L. Bouwma-Gearhart and James L. Bess, "The Transformative Potential of Blogs for Research in Higher Education," Journal of Higher Education, vol. 83, no. 2, 2012: 249–275; Jeff Seaman and Hester Tinti-Kane, Social Media for Teaching and Learning, Pearson and Babson Survey Research Group, 2013.

2. Abbie Howard Brown and Tim Green, "Social Media and Higher Education Faculty Productivity: An Analysis of Type, Effort and Impact," presentation, Association for Educational Communications & Technology Annual Convention, Jacksonville, Florida, 2014.

3. Roger L. Geiger, The History of American Higher Education: Learning and Culture from the Founding to World War II, Princeton University Press, 2014, 326.

4. Michael F. Middaugh, Understanding Faculty Productivity: Standards and Benchmarks for Colleges and Universities, Jossey-Bass Publishers, San Francisco, California, 2001, 18.

5. J.E. Hirsch, "An Index to Quantify an Individual's Scientific Research Output," Proceedings of the National Academy of Sciences of the United States of America, vol. 102, no. 46, 2005: 16569–16572.

6. Heather Piwowar, "Altmetrics: Value All Research Products," Nature, vol. 493, 2013: 159.

7. US National Science Foundation, "Dear Colleague Letter - Issuance of New Proposal & Award Policy and Procedure Guide," NSF 13-004, 2012.

8. Lauren Ashby, "The Empty Chair at the Metrics Table: Discussing the Absence of Educational Impact Metrics, and a Framework for Their Creation," presentation, Altmetrics Conference, Amsterdam, October 2015.

9. Jason Priem, Dario Taraborelli, Paul Groth, and Cameron Neylon, "Altmetrics: A Manifesto," altmetrics.org, October 26, 2010.

10. Ibid., 1.

11. Ibid., 12.

12. Wouter de Nooy, Andrej Mrvar, and Vladimir Batagelj, Exploratory Social Network Analysis with Pajek, Cambridge University Press, 2011.

13. Robert A. Hanneman and Mark Riddle, Introduction to Social Network Methods, University of California, Riverside, 2005.

14. Mike Taylor, "The Challenges of Measuring Social Impact" [http://www.researchtrends.com/issue-33-june-2013/the-challenges-of-measuring-social-impact-using-altmetrics/], Research Trends, vol. 13, no. 33, 2013.

15. Stefanie Haustein, Rodrigo Costas, and Vincent Larivière, "Characterizing Social Media Metrics of Scholarly Papers: The Effect of Document Properties and Collaboration Patterns," PLOS ONE, 2015; DOI: 10.1371/journal.pone.0120495.

16. Edwin Horlings, "The Power and Appeal of New Metrics: Can We Use Altmetrics for Evaluation in Science?" presentation, Altmetrics Conference, Amsterdam, October 2015.

17. Masood Fooladi et al., "Do Criticisms Overcome the Praises of Journal Impact Factor?" Asian Social Science, vol. 9, 2013.

Abbie Brown, PhD, is a professor and interim chair of the Department of Mathematics, Science, and Instructional Technology Education at East Carolina University. He is a recipient of the University of North Carolina's Governors Award for Excellence in Teaching and author of several books on instructional design and technology.

John Cowan, PhD, is a senior research associate in the Department of Outreach, Engagement, and Regional Development at Northern Illinois University. He is an expert in the design of hybrid education programs and the use of social network analysis to capture relationships in education-related networks.

Tim Green, PhD, is a professor of Educational Technology and Teacher Education at California State University, Fullerton. He has served as the director of Distance Education at CSUF. Green is a board member for CUE, a nonprofit educational organization that focuses on educational technology. He is the author of several books on instructional design and technology integration. With Abbie Brown, Green produces a podcast, Trends and Issues in Instructional Design, Educational Technology, and Learning Sciences. He can be found on Twitter at @theedtechdoctor and on the web as The Ed Tech Doctor.

© 2016 Abbie Brown, John Cowan, and Tim Green. The text of this EDUCAUSE Review online article is licensed under the Creative Commons BY-NC-ND 4.0 license.