Key Takeaways

- Over the past 10 years Princeton University developed and implemented an institutional strategy to support research and administrative computing.

- Changing economic and organizational factors influenced strategy development and the critical elements behind the successful collaboration between research faculty, the IT organization, and senior administration.

- This collaboration informed Princeton's decision to build a high-performance computing center to meet the university's research and administrative computing needs now and in the future.

Computational research has become a vital, and increasingly complex, part of most research endeavors today. Researchers often become expert in the nuances of computer architecture and programming as they strive to produce the tools that allow for new insights and discoveries, and the demand for computational resources has continued to grow. An environment that supports computational research requires not only expertise but also substantial investment. Furthermore, there are many models for providing a computing support infrastructure (e.g., leveraging cloud computing, joining consortia, leasing data center space, or building a data center). Hence, it's fair to ask: How do organizations engaged in computational research decide the best course? This article explains how Princeton University decided to build a high-performance computing center to accommodate both research and administrative computing needs.

Historical Perspective

Princeton University is the fourth-oldest college in the United States, having been chartered in 1746. Princeton is an independent, coeducational, nondenominational institution that provides undergraduate and graduate instruction in the humanities, social sciences, natural sciences, and engineering.

CSTechnology is an advisory firm that provides strategy and implementation services to institutions seeking to unlock the value of their IT portfolio investments.

As was typical before 2000, Princeton's administrative computing needs were met by a central service, the Office of Information Technology (OIT). OIT generally supported broad, university-wide needs (e.g., payroll and course scheduling). By the start of 2000, Princeton's computing environment was transforming from a mainframe-centric environment to a client-server architecture for the main business and teaching applications.

Research computing was conducted at the departmental level, with little involvement by the central computing services organization. It was generally linked to the grants that sponsored the research, with dedicated computing platforms housed in rooms set aside for a specific research project or the applicable department. Thus, as at most universities, research computing was managed as a fragmented resource, with each constituency planning for, and managing, its own domain. Fortunately, Princeton's relatively small size and strong, shared sense of purpose (particularly with respect to the importance of research) provided a culture of cooperation that allowed access to key individuals in their respective stakeholder or decision-making roles. These features were critical to Princeton's success in restructuring its computing resources.

Computing at Princeton in the Early 2000s

At the start of the new millennium, the administrative and research computational environments operated relatively independently. Against this backdrop, organizational change became the catalyst for transformation. Three organizations emerged with keen interests in better addressing computational research needs.

- OIT—Betty Leydon took over as Princeton's CIO in 2001, moving from a similar position at Duke University. As head of the Office of Information Technology, she began to place a greater emphasis on supporting research computing. Leydon, now vice president of IT and CIO, explained:

"Support for research computing has emerged as a strategic direction for Princeton University. Because of our intimate size and overarching organizational structure, we are ideally situated to excel and become a world leader in research computing. Going forward, computational modeling and analysis will continue to grow in importance across many academic disciplines; therefore, Princeton's success in this area will depend on a reliable, state-of-the-art, and expanding research computing infrastructure."

- PICSciE (pronounced "pixie")—The Princeton Institute for Computational Science and Engineering was founded as an initiative to provide support for centralized computational research. The PICSciE website describes its beginnings. Because PICSciE was outside OIT, the group was free to focus on the science of computation itself and to serve as a de facto voice for Princeton's academic research departments.

- RCAG—The Research Computing Advisory Group was formed in 2002 as a faculty committee. RCAG allowed the faculty and departmental staff to guide OIT on how best to serve the research faculty's computational needs. Thus, RCAG forms a bridge connecting OIT, PICSciE, and faculty members interested in research computing, helping them coordinate and work together effectively.1

With the energy provided by these nascent groups, it was possible for a common strategy to develop and for the university leadership (through OIT, the provost, and trustees) and key research faculty (through RCAG and PICSciE) to come to agreement on the principles that led to cooperation between administrative computing and the various research computing groups.

Developing a Unified Strategy for Research Computing

In 2005, Princeton took another major step toward centralization of research computing efforts, and again, collaboration was key. OIT and Princeton researchers worked together to purchase and install a "Blue Gene" high-performance computer from IBM as part of a strategy not only to support existing research programs but also to lay groundwork for future research including complex problems in areas such as astrophysical sciences, engineering, chemistry, and plasma physics. As author Curt Hillegas, director of research computing, noted:

"The faculty made the final decision on the architecture and vendor because they knew the requirements and performance characteristics of their codes. With this system we initiated a new scheduling model that guarantees that, averaged over any two-week period, contributors are able to use the proportion of the system they have funded when they are actively using the system. Centrally funded and 'unused' portions of the system are available to other (nonfunding) researchers, who get access through a research proposal process."

The collaboration was not just strategic but financial as well, with OIT, PICSciE, the School of Engineering and Applied Science (SEAS), and several individual faculty members contributing to the cost. OIT paid for about half of the system; PICSciE, SEAS, and individual faculty members all made significant contributions toward the purchase.
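The scheduling guarantee Hillegas describes, in which contributors can use the proportion of the system they funded when averaged over any two-week period, can be illustrated with a simple fair-share calculation. The sketch below is not Princeton's scheduler; the `Contributor` type, the node-hour units, and the example figures are assumptions made for illustration. It compares each contributor's funded share with the share of capacity actually consumed over the trailing two-week window and ranks contributors by the resulting deficit.

```python
from __future__ import annotations
from dataclasses import dataclass

WINDOW_DAYS = 14  # the two-week averaging period mentioned in the quote


@dataclass
class Contributor:
    name: str                 # hypothetical group label
    funded_share: float       # fraction of the system the group paid for (0..1)
    daily_usage: list[float]  # node-hours consumed per day, oldest first


def window_share(c: Contributor, capacity_per_day: float) -> float:
    """Fraction of total capacity the contributor used over the last two weeks."""
    recent = c.daily_usage[-WINDOW_DAYS:]
    return sum(recent) / (capacity_per_day * WINDOW_DAYS)


def priority_order(contributors: list[Contributor],
                   capacity_per_day: float) -> list[Contributor]:
    """Rank contributors so those furthest below their funded share come first."""
    def deficit(c: Contributor) -> float:
        return c.funded_share - window_share(c, capacity_per_day)
    return sorted(contributors, key=deficit, reverse=True)


# Hypothetical example: a 100 node-hour/day system with two funding groups.
groups = [
    Contributor("group_a", funded_share=0.50, daily_usage=[10.0] * 14),
    Contributor("group_b", funded_share=0.25, daily_usage=[30.0] * 14),
]
ranked = priority_order(groups, capacity_per_day=100.0)
print([g.name for g in ranked])
```

In this example, group_a has used well under its funded half of the system, so it is ranked ahead of group_b, which has slightly exceeded its quarter. A production scheduler would also have to handle idle contributors and the proposal-based access for nonfunding researchers, which this sketch omits.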

During this time, granting agencies grew hesitant to support requests for individual faculty members' computing clusters because they were beginning to view individual approaches as inefficient. Princeton's new collaborative approach demonstrated enhanced computing efficiency as well as real savings. More funding went directly to research because Princeton had already pooled resources to support the computing infrastructure needed for projects. Centralized operational support for the research computing helped keep the computers operational and eased the installation of new peripherals or software required for new research endeavors. Of course, these advantages also gave researchers more time to focus on their research and writing papers.

Princeton's collaborative research model required that growth be managed in a new way as well. It was a challenge to provide the necessary power in buildings designed for classroom and office space, and taking space designed for one function and redesigning it for another would be both costly and inefficient. On the other hand, properly designed computer space, cooling, and electricity infrastructure became more expensive as computing equipment required more power. When new computing equipment was required to support a research program, the collaborative environment at Princeton provided advantages. "When I got funding for my clusters, it covered only the cost of my machines," explained Roberto Car, Princeton's Ralph W. Dornte Professor of Chemistry. "You need staff to manage the clusters, but that can be expensive. Typically, one could hire graduate students and postdocs, but they have other objectives. Rather than spending their time learning about science, some may spend more time than prudent in cluster administration."2

Maintaining a researcher's independence and control while developing joint resource plans became the status quo at Princeton. Certainly there was tension in managing a dynamic, essential resource so key to the university's mission and researchers' careers. The atmosphere of collaboration and trust, along with the advantages offered by the shared computing infrastructure, soon began to pay dividends for all involved.

Advances in Administrative Computing

As the seeds of a collaborative research computing environment were germinating at Princeton, the administrative computing area was exploring new ground. In the 1990s the administrative computing environment had migrated from a mainframe model to a distributed systems model, and more changes were to come.

The next wave of advances in administrative computing reflected the significant changes in IT governance and the focus on improving IT disaster resiliency in the wake of September 11, 2001. OIT began implementing plans to increase the redundancy and availability of key systems. The main data center room was connected by fiber to a secondary server room across the Princeton campus, and key IT network and server infrastructures were replicated across the two sites. By 2007, key e-mail and web applications were housed in both locations.

Furthermore, new approaches in project management and methodology, as well as a widening of the circle of stakeholders, translated into a predictable cycle of system enhancements with direct linkages to capitalizing on the new client-server investments. Since new applications could be more economically deployed and maintained, there was steady growth in the number of servers supporting the administrative environment. Only in the past few years has this growth been stemmed by increasing investments in virtualization, which has steadied the data-center capacity requirement.

Assessing Needs in the New Millennium

Businesses and educational institutions all make wagers based on their best abilities to foretell the future. In the case of technology investments, and particularly computing infrastructure, native growth, external drivers, and technology changes must be factored in when deciding what investments are in the institution's best interests. At Princeton, the administrative and research constituents had their own perspectives.

Planning the needs of administrative computing in the new millennium required assessing the needs of the business of the university. Throughout the last decade, the main trends were growth in storage demand and increased accessibility. Accessibility concerns included both off-campus application use (remote access, mobile access) and access to resources 24 hours a day. These increasing needs drove requirements for more networking capacity, better security, and improved availability (through strategies for 24/7 operations and disaster recovery). Fortunately, once the university's administrative operations had been largely automated (early in the 2000s), there was very little further growth in the power-consumption requirement for administrative computing, as virtualization provided opportunity for continued growth with relatively flat power consumption.

Research computing in the early 2000s was still largely independent and focused on grant-driven activities, with individual researchers and departments dealing with computing resources locally. Leydon realized the opportunity she had to leverage OIT resources (including financing) to forge and incentivize a new partnership. Expenses, such as capital improvements, were financed out of the university's renovation and maintenance budgets. Other costs, such as maintenance expenses, were supported by reallocating funds from other budget line items, making the reallocation net neutral. Operating expenses increased as the collaboration grew, but faculty were willing to participate in the costs at that point, in large part because they were involved in a true partnership. Researchers knew what was needed to carry out their research, so OIT's role became one of facilitating those research goals. If OIT had dictated the approach, the model would not have become so successful. As Paul LaMarche, vice provost for space programming and planning, noted,

"A number of faculty and departments had fully invested in the centralized research computing support model, but looking at those HPC resources that remained in academic buildings, we saw an opportunity to return space to its intended purpose while greatly improving power and cooling efficiencies."

Simultaneously, researchers realized that the distributed server model was difficult for them to maintain and refresh. Their research groups also spent more and more time managing the computer environment and less time conducting fundamental research. Skepticism diminished as the new centralized computing model matured, and more faculty contracted with OIT for the data center environment. Initially the researchers continued to support their own machines, but over time the shared processor model with support provided by OIT and PICSciE took hold more deeply.

Setting the Stage for HPCRC

Given that the university's research and administrative functions had legacy environments, it was clearly important to understand how those existing environments had evolved and the key drivers — past, current, and future. By 2008, Princeton already had an effective understanding of these environments through OIT for administrative computing functions and TIGRESS (Terascale Infrastructure for Groundbreaking Research in Engineering and Science) for research computing functions. From the standpoint of inventories, occupancies, and future plans, Princeton was well equipped to present accurate data and informed requirements for the respective environments. There was a clear understanding of the past growth and an expectation of increasing participation from faculty members and departments as the centralized research computing support model increased in visibility and popularity.

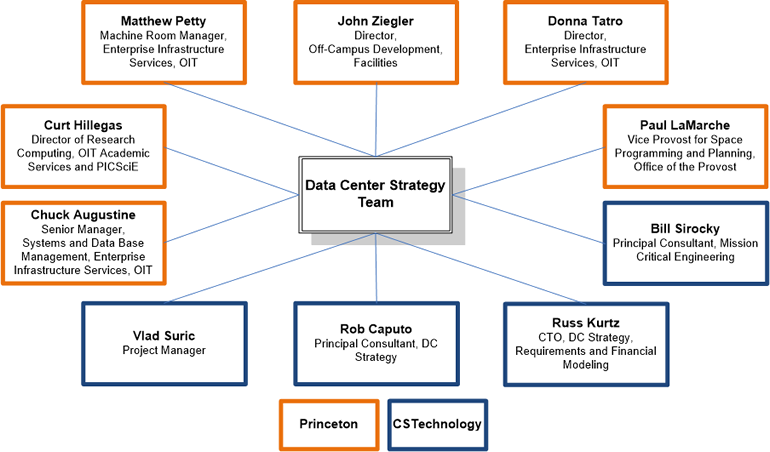

Still, as of mid-2008, there was no unified perspective regarding growth projections. In fact, some prior studies were counterproductive in that they projected growth so great as to result in unaffordable program requirements (i.e., a data center that was too expensive to fund). At this point, Princeton formed the team (see figure 1) chartered by OIT to develop the university's data center strategy for the coming years.

Figure 1. Data Center Strategy Team

The team initially focused on two aspects of need: growth and resiliency. In addition to the main constituents (administrative and research), requirements were developed for a third-party occupant and distributed departmental and research environments not currently served within core data center locations (e.g., departmental computer rooms). Additionally, the administrative occupancy was split into production and nonproduction (e.g., development, test, backup) segments. Since there was information on each of these capacity types, analysis was performed based on historical growth. Future projections were made based on the trends and needs foreseeable for each occupancy type.

Another key investigation revolved around the resiliency requirements for the respective occupants. Administrative production capacity required the most protection, including UPS and generator capacity to maintain power during a utility outage. Research capacity would not require generator backup, as long as the chance and probable duration of an outage was reasonably small and UPS protection provided sufficient time for a graceful shutdown of the machines.

A key stress point, then, was how to introduce flexibility while minimizing costs to find a solution that did a good job meeting these similar, but not perfectly aligned, needs. It was at this point that the team generated some of the key design principles that carried over to the final design. For example, it was clear that with continued virtualization, administrative computing requirements would be fairly static, whereas research computing requirements would continue to grow. Because only administrative systems required generators, the initial basis of design could limit generator capacity, saving millions in capital costs. Another key differentiator was the projected power densities, which were foreseen to be as much as 3,000 W/m² for research, yet no more than half that for administrative computing.

Of course, while meeting these slightly different needs, the team had to remember that Princeton's overarching need was not only to maintain an effective computing environment but also to create a leading-edge environment that would attract world-class researchers and teams. Recent faculty addition Jeroen Tromp, the Blair Professor of Geology and professor of Applied and Computational Mathematics, stated:

"When I was looking for a new research environment, I chose Princeton in part because of the support TIGRESS would provide for my computing needs and the fact that the university was clearly willing to stay ahead of others with respect to its computing resources."

The Data Center Strategy Team realized that computing resources offered another opportunity to differentiate Princeton from other institutions.

The development of a comprehensive growth model allowed Princeton to consider some provocative what-if scenarios, including unabated growth as well as different strategies for managing growth over time (e.g., continued virtualization, consolidation of legacy environments, etc.). For the first time, Princeton developed comprehensive future budget projections for its computing environment under several scenarios and hence was able to make informed choices about managing those resources.

Existing departmental distributed computing locations had evolved in an ad hoc manner, often in ill-suited locations. The centralized computing systems were housed in a building conceived in a completely different era of computing. Thus neither environment was very energy efficient. In fact, the legacy data center's electricity use per square foot far exceeded that of every other building on campus, and the data center accounted for more than five percent of the entire campus electricity consumption. In February 2008, the university adopted a formal sustainability plan, which provided focus, defined goals, and coordinated many disparate efforts toward delivering energy more efficiently and reducing its use and associated expense and CO2 emissions.3

As participants in the data center design team, members of Princeton's facilities engineering department offered recommendations for design features and new technologies to reduce total cost of ownership (TCO) and environmental impact. Each was evaluated on a life-cycle-cost basis against more standard designs and technologies. In these evaluations, the university placed a self-imposed $35/metric-ton value on avoided CO2. Wherever appropriate, New Jersey Clean Energy Program grants were sought for these energy-saving upgrades. Some energy- and cost-saving features adopted include: LED lighting; increased building thermal mass and insulation; both water- and air-side economizers; rack-mounted, rear-door heat exchangers for new equipment and direct-ducted rack exhaust for legacy equipment; use of the expanded ASHRAE thermal guidelines for data-processing environments; a state-of-the-art reciprocating natural gas engine coupled with an absorption chiller; and a nonchemical cooling tower water treatment. Author Ted Borer, the university's energy plant manager, indicated that this project

"represents a unique opportunity to make a significant step toward meeting our CO2 reduction goals while avoiding millions of dollars in annual energy expenses for decades into the future."

Navigating the Approvals Process

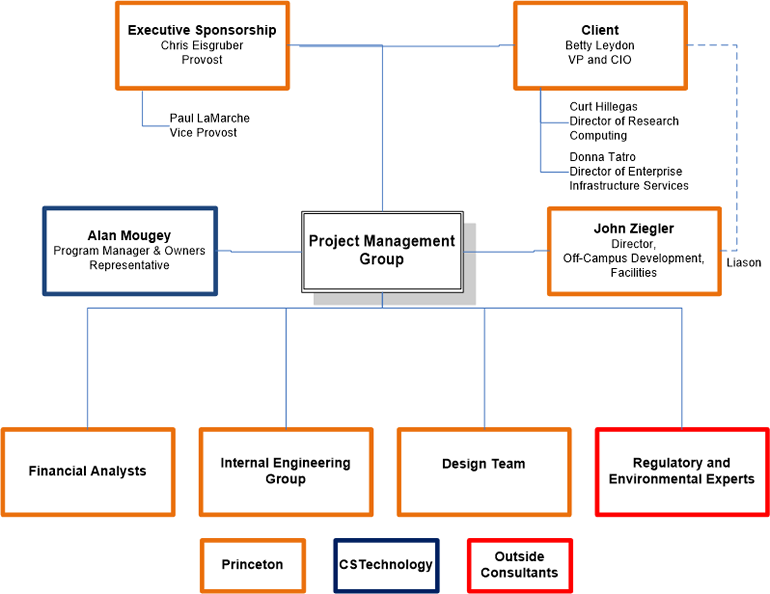

Capital projects at Princeton University require various levels of approvals in proportion to cost and institutional impacts. In the case of the centralized data center, the approvals ascended to the highest level, namely, those of the Grounds and Building (G&B) Committee and the Finance Committee of Princeton's Board of Trustees. To begin, the project team develops the program book and then seeks approval from the Project Management Group (see figure 2). The program book this team develops provides the basis for design and covers scope, quality, schedule, and budget parameters, including capital cost, operating cost, and environmental impact.

Figure 2. Project Management Group

Prior to taking any project to the trustees for approval, the Facilities Planning Group reviews the project at least three times. The initial review, if favorable, grants approval for a study budget. The second and subsequent approvals allow the project team to seek incremental funding from the trustees.

Once the data center project reached the schematic design phase (that point in a capital project where the facility has been given its initial form, but is still somewhat malleable in its configuration and systems), the development team presented the project to the trustees' G&B Committee for initial review and comment. Given that a large part of the data center would serve the computational research community, Hillegas presented to the trustees the options that the project team evaluated and why the proposed solution was best among all those identified. G&B Committee members gave their approval to proceed, and the project team continued to develop the facility, evaluating mechanical and electrical systems against the agreed-upon design criteria and budget.

Several months later, armed with a comprehensive design package (approximately 85 percent complete construction documents), construction cost estimates, construction schedule, and operational cost projections, project representatives returned to the trustees for their final approval. The G&B Committee reviewed and approved the final design, and the Finance Committee gave its approval to expend the capital for construction. With those final approvals in hand, the project team completed the documents and proceeded to secure the remaining relevant regulatory approvals.

External reviews included municipal land use and planning (Plainsboro Township) and environmental (New Jersey State) approvals. While CSTechnology managed the comprehensive regulatory approval process, Picus (the organization that manages the Forrestal Campus on behalf of Princeton University) led the municipal regulatory process, and Facilities Engineering, in conjunction with Van Note-Harvey, the project's civil engineering firm, led the environmental approval process. Once the external regulatory approvals were complete, construction could begin.

Computing Capacity as a Strategic Resource

Universities have capacity plans to support classroom requirements, office space, parking, and supplies. However, computing resources have not traditionally been planned in the same vein. Furthermore, the resources required include not just the computers themselves but the proper environment, which is generally classified as a facilities responsibility (as would be classroom, office, or dormitory space). Thus effective computing resource planning must link the machine acquisition and computer center construction planning disciplines. Once these disciplines are in place and linked, the university can plan and budget for computing resources well into the future.

It is difficult to manage or control the expense of operating a computer environment when the costs are largely unknown. When only partial costs are accounted for, decisions are ill informed and can generate unintended costs. The major costs associated with a computing environment tend to be managed across diverse organizational silos; hence, developing a workable budget is challenging.

Representative unit costs (per kW per year) are:

- Data center capital expenses (CapEx), amortized: ~$1.7K/kW/year

- Computing equipment CapEx, amortized: ~$8.6K/kW/year

- Data center operating expenses (OpEx): ~$3.0K/kW/year

It became evident that the cost of the environment to support the computing equipment (the data center CapEx plus data center OpEx, $4.7K/kW/year) was slightly more than half the amortized cost of the computing equipment ($8.6K/kW/year). Modeling for inflation and other effects (e.g., cost of capital) was also performed. Representative costs for the distributed computing environment (those housed in departmental spaces) are somewhat higher because of inefficiencies, and they were also modeled against various growth and consolidation scenarios.
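The arithmetic behind this comparison is easy to reproduce. The following sketch uses only the three unit costs listed above; the function name and the dictionary layout are illustrative choices, and the figures should be read as the article's approximations rather than exact budget values.

```python
# Unit costs reported in the article, in thousands of dollars per kW per year.
DATA_CENTER_CAPEX = 1.7
EQUIPMENT_CAPEX = 8.6
DATA_CENTER_OPEX = 3.0


def annual_costs_k(load_kw):
    """Annual cost components, in $K, for a given IT load in kW."""
    environment = (DATA_CENTER_CAPEX + DATA_CENTER_OPEX) * load_kw
    equipment = EQUIPMENT_CAPEX * load_kw
    return {
        "environment": environment,
        "equipment": equipment,
        "total": environment + equipment,
    }


per_kw = annual_costs_k(1.0)
ratio = per_kw["environment"] / per_kw["equipment"]
print(f"environment cost: ${per_kw['environment']:.1f}K per kW per year")
print(f"environment / equipment: {ratio:.0%}")
```

With these inputs the environment cost comes to $4.7K per kW per year, or about 55 percent of the amortized equipment cost, and the combined cost is about $13.3K per kW per year.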

With the aid of these models, Princeton developed a comprehensive budget for the data center environments employed to support its IT portfolio.

Generally, grant money is used for computer equipment purchases and not for supporting the data center operational budget, but the operational budget associated with the grant can easily be more than half the value of the computer equipment purchased. In other words, a $100K grant may drive more than $50K in data center operational expense. Most universities today are blind to the degree of commitment being made in support of such grants, typically due to some combination of the following factors:

- Inadequate metering of thermal and electric energy use associated with campus buildings

- Inadequate metering of thermal and electric energy use associated with individual servers or applications in the data center

- The practice of prorating energy costs over the whole institution rather than charging buildings or departments in proportion to consumption

- End users not being given information or responsibility for the energy costs their activities cause

With the ability to project future costs parameterized by future growth, Princeton put together a growth plan that is affordable. In this model, research computing can be planned as another essential infrastructure component, just as the university budgets for office space, staff, and other resources. That growth plan also defines the size of the initial data center, as well as the capacity of expansion increments.
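A growth plan of this kind can be sketched as a compound-growth projection checked against an initial capacity and fixed expansion increments. This is a minimal illustration, not the Princeton model: the starting load, the growth rates, and the 600 kW increment size are hypothetical, and the 1,800 kW initial capacity simply echoes the 1.8 MW initial size the article reports for the new data center.

```python
import math


def project_load_kw(start_kw, annual_growth, years):
    """Compound-growth projection of IT load, one value per year from year 0."""
    return [start_kw * (1 + annual_growth) ** y for y in range(years + 1)]


def increments_needed(load_kw, initial_kw, increment_kw):
    """Number of expansion increments required to carry a given load."""
    if load_kw <= initial_kw:
        return 0
    return math.ceil((load_kw - initial_kw) / increment_kw)


# Hypothetical what-if scenarios: starting load, growth rates, and increment
# size are illustrative assumptions, not figures from the Princeton model.
scenarios = {"flat": 0.00, "moderate": 0.08, "unabated": 0.20}
for name, growth in scenarios.items():
    final_load = project_load_kw(start_kw=1_000, annual_growth=growth, years=10)[-1]
    steps = increments_needed(final_load, initial_kw=1_800, increment_kw=600)
    print(f"{name}: {final_load:,.0f} kW after 10 years, {steps} expansion increment(s)")
```

Multiplying each projected load by a per-kW unit cost would turn the same projection into a budget stream, which is the kind of comparison across scenarios that the planning team describes.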

In the past, Princeton relied on incentivizing centralized computing through funding (paying for a sizeable share of the computing hardware and environment as well as energy costs). In addition, the new data center promises improved resiliency (through its generators) and operational security (purpose-built facility with limited traffic and appropriate security safeguards). By continuing to offer financial incentives, the university expects that, over time, most of the distributed environment will migrate to the centralized data center. Furthermore, the university, on the whole, will be better off from a TCO perspective.

Financial Perspectives

Even bottom-line-oriented financial institutions typically have difficulty determining their data center costs. Often the data center utility cost is not separated from other usages, for example, and the depreciation stream may be intermixed with other investments (e.g., in a building that is multiuse). At Princeton, electrical usage reports at the existing data center were available, so they could be used to develop cost streams associated with electricity consumption. However, the cooling costs had to be inferred based on approximations of current chilled water usage and the cost of providing chilled water at the university's central plant. Furthermore, assumptions about site efficiency were required to account for full costs. The university also levies an internal charge for carbon production, which was added to the operating expense budget.

Princeton's data center planning model provided for the analysis of various growth projections, backup capacity strategies, and transition alternatives. The development of a cost-informed strategy and the evaluation of different scenarios are powerful aspects of the program. The ability to look at these "what ifs," in particular, drives team consensus by offering a quantitative means to evaluate potential paths.

By almost any measure, a data center construction project is expensive, and finding the funding is a challenge. Although the bulk of the funding was provided by the standard capital budget appropriations process, some capital was redirected from improvements that would no longer be required at the legacy data center locations. The capacity and financial modeling were crucial to identifying which investments in the old data centers would be obviated by the new data center. Further funding grants and low-interest loans were available from the utility provider through its data center efficiency program. Finally, while not a capital savings, future operations savings projected (again through the capacity and financial models) to accrue from the increased efficiency of the new data center were used to offset some capital costs in the life-cycle cost analysis of the new data center.
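A life-cycle cost comparison of this kind, including the $35/metric-ton value the university placed on avoided CO2, can be sketched as a discounted sum of yearly energy and carbon costs added to the capital cost. The function below is an assumed, simplified form of such an analysis; the discount rate, time horizon, and all dollar and tonnage figures in the example are hypothetical and do not come from the project.

```python
CARBON_VALUE_PER_TONNE = 35.0  # USD per metric ton of CO2, from the article


def life_cycle_cost(capital, annual_energy_cost, annual_co2_tonnes,
                    years, discount_rate):
    """Capital cost plus discounted yearly energy and carbon costs."""
    yearly = annual_energy_cost + annual_co2_tonnes * CARBON_VALUE_PER_TONNE
    discounted = sum(yearly / (1 + discount_rate) ** y
                     for y in range(1, years + 1))
    return capital + discounted


# Hypothetical comparison; none of these figures come from the project.
standard = life_cycle_cost(capital=1_000_000, annual_energy_cost=200_000,
                           annual_co2_tonnes=1_000, years=20, discount_rate=0.05)
efficient = life_cycle_cost(capital=1_300_000, annual_energy_cost=150_000,
                            annual_co2_tonnes=700, years=20, discount_rate=0.05)
print(f"standard design:  ${standard:,.0f}")
print(f"efficient design: ${efficient:,.0f}")
```

In this made-up case the efficient design costs more up front but less over its life, which is the pattern by which projected operating savings can offset capital cost in the analysis.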

Execution

Princeton University is, in large part, centrally governed, yet still involves many entities. It was critical, therefore, to create a project team that represented each stakeholder group without becoming so large and cumbersome as to stifle the new data center initiative.

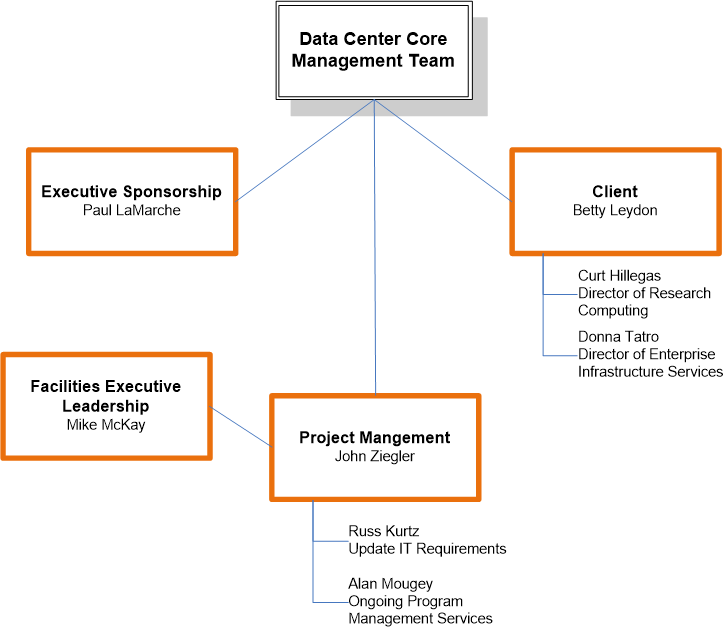

Princeton's core management team for the data center (see figure 3) consisted of the executive sponsor, the "client," and the project manager. In this case, Paul LaMarche represented the provost's office. The client representative was Betty Leydon from OIT, who retired in September 2012. Given the dual focus of computing needs and internal clients (research and enterprise computing), Leydon appointed Curt Hillegas and Donna Tatro as her primary representatives. Lastly, author John Ziegler led the overall project management efforts.

Figure 3. Data Center Core Management Team

Each of these leaders "owned" a fairly explicit set of responsibilities. LaMarche acted as the team's sounding board for fundamental scope, budget, and schedule parameters as well as for evaluating design alternatives and value engineering opportunities. Hillegas and Tatro contributed OIT, RCAG, PICSciE, and enterprise-based goals. Ziegler was responsible for putting together the project management team and then working with all primary stakeholders to ensure that the team met the agreed-upon project goals.

Princeton's core management team still lacked data center development expertise. To lead the project effectively, Ziegler worked with Mike McKay, vice president for facilities, to begin a series of calls to colleagues within and outside the university. These contacts put the core management team in touch with CSTechnology (CST) principals JP Rosato and David Spiewak. The university's core group agreed that CST's combination of data center expertise and project management experience would likely meet the need to update the university's IT requirements and provide ongoing program management services. Authors Russ Kurtz and Alan Mougey of CST provided that support.

Until late 2008, the data center program was based on an unaffordable set of requirements. CST then began working with university constituents to develop a university-wide strategy. The strategy team evaluated current computing capacities, current and future needs, future desires, and associated costs. Articulating the existing status alongside future needs and desires, category by category, allowed the team to objectively prioritize the project's scope within the constraints of a revised (lowered) budget that still met the university's research and administrative computing requirements for the foreseeable future.

2008 closed with a draft recommendation for the data center strategy, which was presented in January 2009 to the project team, key stakeholders, and university representatives. While these initial recommendations launched the project, key design aspects were modified during the planning phases (often in conjunction with financial modeling). As initially reported, the team found that no combination or repurposing of Princeton's legacy data center sites would provide for the long-term mission of the university. Cloud computing was also considered but was not appropriate because:

- Research computing at Princeton is characterized by high, continuous usage for specialized functions that are dedicated to specific investigations; cloud computing does not fit this profile.

- Coding for specific research projects is customized for different computing configurations; again, cloud computing does not fit this profile.

The team proceeded to recommend a new data center initially sized at 1.8MW critical IT load, located at a site where future expansion to over 7MW was possible. The new recommendation was a more cost-effective, long-term solution than either outsourcing the need (e.g., through a co-location provider) or repurposing an existing site for the intended use, though these alternatives were carefully considered as options that could minimize capital expense.

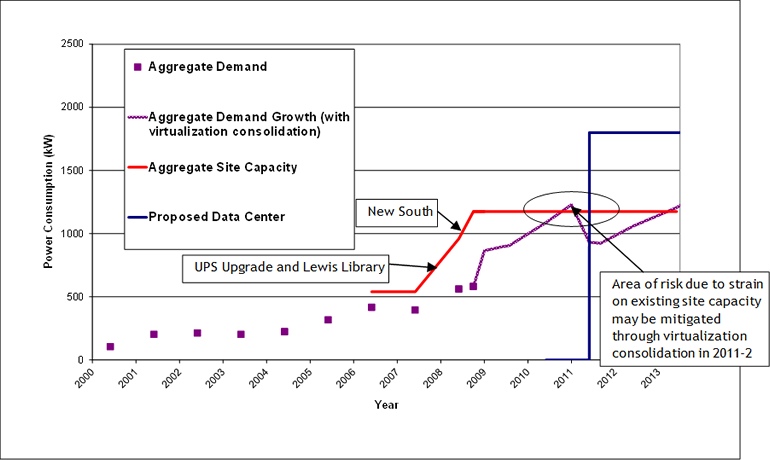

The university also adopted a constrained consumption plan to provide some additional "head room," given that virtualization savings projections for 2011–12 were uncertain (see figure 4).

Figure 4. Data Center Power Consumption Projections

At this time, it became apparent that the project team had built a sufficient business case based on real data and requirements and could proceed.

The university solicited proposals for owner's representative and project management services to take the program forward. CST won the commission and proceeded to wrap its programming update into a more comprehensive program book that established overall scope, quality, schedule, and budget parameters.

While these parameters were being further developed and vetted, university executive administration challenged the project team to evaluate the real-estate market for potential locations, partners, and service providers that could meet the project requirements in the most cost-effective manner. Following a four-month process, during which work on the base recommendation proceeded (so as not to delay any potential alternative), all stakeholders and the Data Center Core Management Team agreed that the optimal location was a vacant development plot at Princeton University's Forrestal Campus.

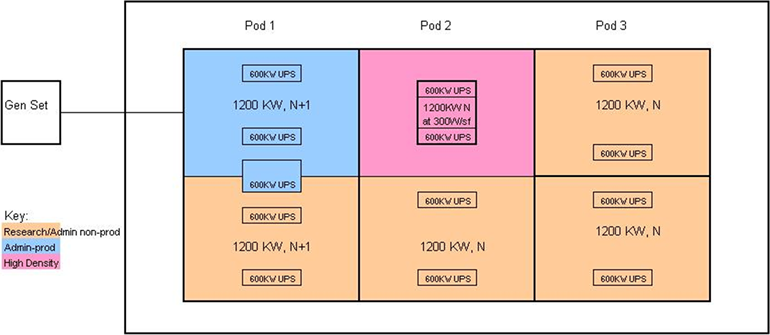

Not all the design details were presented with the program recommendation. The team continued to examine criteria, such as power density and redundancy requirements, and devised alternatives specific to Princeton's needs. For example, the original design limited generation capacity to match administrative production load and reduce capital expense. Also, the team recommended that the data center space be designed with three "pods," each with two modules of 1.2MW capacity. High-density occupancy (300W per square foot) would be supported in designated modules (see figure 5).

Figure 5. Early Schematic for Data Center Configuration
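The pod-and-module figures can be checked with simple arithmetic. The short Python sketch below uses only numbers quoted in this article (three pods, two modules per pod, 1.2MW per module, 1.8MW initial critical IT load). The function and constant names are illustrative. Mapping the initial load onto whole modules is an assumption made here for illustration, not a statement of how the facility was actually phased.

```python
import math

# Figures quoted in the article
PODS = 3
MODULES_PER_POD = 2
MODULE_CAPACITY_KW = 1200      # 1.2MW per module
INITIAL_IT_LOAD_KW = 1800      # 1.8MW initial critical IT load


def full_buildout_kw(pods: int, modules_per_pod: int, module_kw: int) -> int:
    """Total critical IT capacity if every module in every pod is built."""
    return pods * modules_per_pod * module_kw


def modules_needed(load_kw: int, module_kw: int) -> int:
    """Whole modules required to carry a given IT load (illustrative assumption)."""
    return math.ceil(load_kw / module_kw)


total_kw = full_buildout_kw(PODS, MODULES_PER_POD, MODULE_CAPACITY_KW)
print(f"Full build-out: {total_kw / 1000:.1f} MW")
print(f"Modules needed for initial load: {modules_needed(INITIAL_IT_LOAD_KW, MODULE_CAPACITY_KW)}")
```

The full build-out works out to 7.2MW, which is consistent with the site requirement of future expansion to over 7MW.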

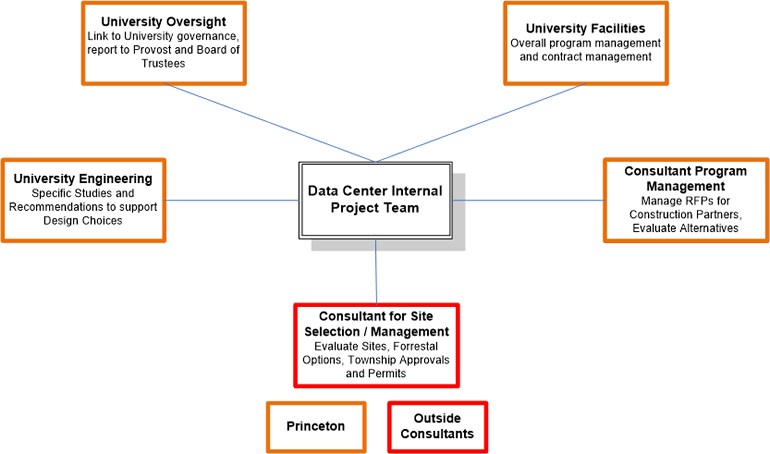

The team understood that detailed design work could well alter some of the initial design parameters. This understanding led to some important improvements. The open-book Guaranteed Maximum Price approach (as opposed to design-build) also supported the opportunity to consider affordable improvements as the project progressed. The initial phase also reviewed a number of construction funding options. For example, "build, sell, lease back" and "lease with build-to-suit" arrangements were investigated, particularly because they could reduce or eliminate the capital requirements for the new data center. This initial phase also underwrote the development of an internal project team for the data center (see figure 6).

Figure 6. Data Center Internal Project Team

After the Data Center Core Management Team had identified the future data center's fundamental requirements and location, the Data Center Internal Project Team set about establishing and evaluating key design options for the new data center. It then assembled a project team that included the architect, engineers, and other representatives critical to the project's success.

Design Choices

Once the project team identified an approved project site, it developed site-specific criteria that would inform the design on an "outside-in" basis, while the data center performance criteria created the "inside-out" objectives. Neighborhood context, landscaping, architecture language, engineering, and regulatory requirements intersected and presented a complex range of issues to consider.

The design team investigated state-of-the-art and emerging data center technologies, witnessing their operation in the field and talking with facility owners and operators before including those new technologies in the design. The final project represents the successful resolution for each of these intersections and is a testament to each of the stakeholders and their mutual collaboration.

From the outset the university made clear that this facility was to be responsible and efficient in both design and operations. The intent was to build a largely unstaffed "lights out," high-efficiency, purpose-built facility that met those requirements and nothing more. The facility architectural design and finish were to be appropriate for its setting and function, not overstated. All decisions were to be driven by engineering and cost analysis using TCO (aka "life-cycle costing") evaluation standards, while providing appropriate levels of reliability, scalability, flexibility, modularity, efficiency, and cost-effectiveness in operations.

Another important design goal was to meet or exceed LEED Silver equivalency. The university viewed this goal as a way to drive responsible design decisions without requiring elements that add little or no value except to gain LEED points. In fact, after the design was finished and the initial scorecard completed, it became clear that LEED Gold certification would be possible. Although the team didn't have the normally preferred point buffer when filing for certification, the project achieved LEED Gold certification.

As indicated earlier, one of the benefits of the centralized, purpose-built computing facility is the reduced time it takes to deploy new technologies or systems. This aspect was carefully designed into the facility and site with in-rack, in-room, and on-site expansion capabilities to address short-, near-, and long-term growth with minimal or no impact to the ongoing operations of the university.

Some energy-efficient features of the facility include air-side and water-side economizers, high "delta T" cooling, higher operating temperatures, chilled water produced by an absorption chiller using residual heat from a dispatchable gas reciprocating engine (i.e., combined heat and power [CHP]), high-efficiency UPS systems, variable frequency drives (VFDs) on all major rotating equipment, and more. Borer explained,

"Princeton's commitment to life-cycle cost analysis coupled with its self-imposed 'carbon tax' were excellent tools. They allowed us to objectively compare the relative merits of each energy-efficiency measure. With additional leverage from New Jersey's Clean Energy Program, we were able to design a highly efficient and reliable facility that is solidly cost justified."

Final Result

The project, as completed, meets or exceeds all the initial criteria as presented in the program book. Innovative systems integration resulted in adding a peak-shaving, gas-fired generator that will provide electrical redundancy far in excess of that stipulated in the program book. Furthermore, it will feature combined heat and power capabilities that will advance Princeton's sustainability objectives. It also appears that the PUE (power usage effectiveness, which measures how many incoming units of electricity are required for each unit that reaches the computing equipment) will be in the range of 1.2 to 1.4, lower than the initially anticipated 1.5 PUE and substantially lower than the 2.25 PUE of the university's existing central data center.
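To make the PUE comparison concrete, the sketch below converts each PUE value quoted above into total facility power for a fixed IT load. It assumes the 1.8MW initial critical IT load as the reference point. Applying the older data center's 2.25 PUE to that same load is a hypothetical comparison, not a measured result, and the function names are illustrative.

```python
def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value.

    PUE = total facility power / IT equipment power, so total = IT load * PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Power spent on cooling, distribution losses, and other non-IT uses."""
    return facility_power_kw(it_load_kw, pue) - it_load_kw


IT_LOAD_KW = 1800.0  # 1.8MW initial critical IT load (reference point, assumed)

# PUE values quoted in the article
SCENARIOS = {
    "existing central data center": 2.25,
    "initially anticipated": 1.5,
    "new facility, upper estimate": 1.4,
    "new facility, lower estimate": 1.2,
}

for label, pue in SCENARIOS.items():
    total = facility_power_kw(IT_LOAD_KW, pue)
    extra = overhead_kw(IT_LOAD_KW, pue)
    print(f"{label}: PUE {pue:.2f} -> {total:,.0f} kW total, {extra:,.0f} kW overhead")
```

Under these assumptions, each 0.1 reduction in PUE removes about 180kW of non-IT load for the same computing capacity.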

The project was completed within budget, even after including the innovative features, and was on schedule until an issue with the manufacturing of one of the generators surfaced. The impact to planned turnover and computer migration was limited, and the project team worked with OIT and related stakeholders to mitigate any issues associated with the delayed opening in late December 2011.

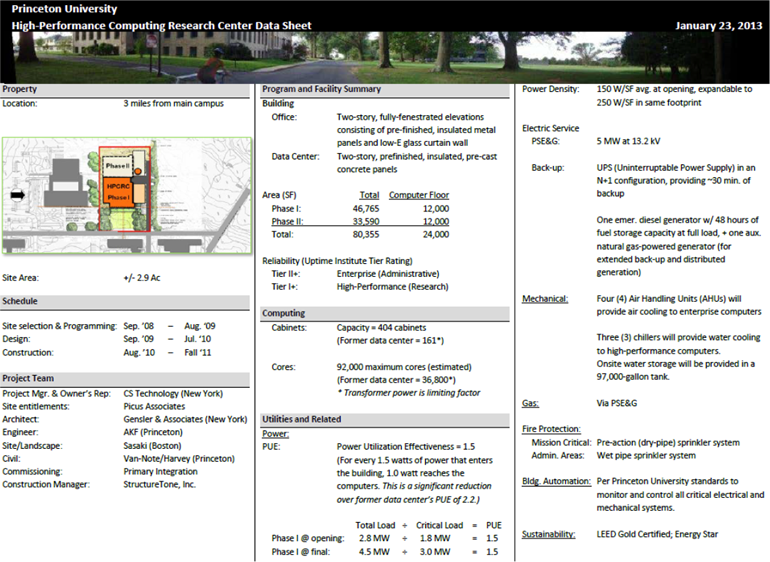

The following data sheet (figure 7) provides a summary of the final design specifications.

Figure 7. High-Performance Computing Research Center Data Sheet

Looking Ahead

The data center was ready to accept new computers following the official opening in late December 2011, but OIT and associated stakeholders agreed to postpone the initial migration until after the holidays in early January 2012. What computational researchers had viewed 10 years ago as undesirable—the notion of a centralized computational research facility—is now perceived as an asset. The data center benefits not only their respective research programs but also the extended university community. Greater capacity, room for expansion, higher redundancy, greater resiliency, more robust systems, lower cost of operations, and overall better service will now allow researchers to focus on their academic mission, rather than spending their time trying to optimize their computer systems in suboptimal environments. And the university as a whole benefits by consolidating the more volatile research computing adjacent to the relatively stable administrative computing environment — both research and administrative communities reap benefits that would otherwise not be available. Further, with the ability to measure utility usage at the rack level, the university can better track and manage the costs associated with adding new computers.

Lessons for Others

In looking back over the last decade of progress at Princeton, we can identify several points where a specific approach or decision led to results that advanced the program. The team has identified these techniques for others to consider.

Develop a clear strategy and promote it. Princeton's bold decision to support research computing with centralized resources (including funding) was credible because it was promoted within OIT, accepted by university governance, and provided advantages to the crucial user constituency: research faculty. A clear message that embraces the key needs of the stakeholders and substantiates them for university governance builds a groundswell of understanding and support. In the case of Princeton, research and administrative computing have different characteristics (one is high growth and heavily virtualized, one requires high resiliency), yet both are well served by a single strategy — although not a single solution — the data center "flexes" to accommodate both.

Embrace support from key constituents. Early on, OIT leadership made sure to identify essential stakeholders and decision makers (sometimes they self-identified, sometimes they had to be discovered) at Princeton. Early in her tenure at Princeton, Leydon took an expansive view of her role and her potential partners in transforming OIT. She reached out and gained the trust of faculty, especially research faculty, through organizations specifically designed to provide an effective voice in OIT planning. Thus, RCAG was instrumental in providing a means to proactively approach faculty and engage them. Even more important, research faculty were engaged, informed, and consulted throughout the process to make sure that they willingly endorsed major decisions. When the faculty embraced a solution, OIT made sure to highlight it as a potential recruiting advantage to university governance.

Anticipate and respond to risks, issues, and roadblocks. All projects experience stress, so it's important to plan accordingly. If project stakeholders had an issue or concern that was not resolved or at least acknowledged, they might retract their support. Throughout the data center project, it was not possible to please everyone on every point, but by maintaining focus on an overall strategy, the advantages kept everyone engaged and invested in the outcome despite the necessary compromises. The project team was willing to consider changing direction in response to constructive information, but made sure that the cost to the project was not too high.

Assemble the right team. Success demands that you assemble the right team for the job. The appropriate experience and expertise, the correct number of team members, and a clear understanding of the roles and responsibilities are all key considerations. Sometimes, during the organization phase, it is possible to lose sight of key skills that are not readily available within the organization. The Princeton team members had worked together on Princeton projects, but none had the required expertise to build a data center. CSTechnology was engaged to provide that facet.

Know your limitations. A strategy has to fit within the constraints of time and budget. It's not always possible to know the constraints when setting out. Stakeholders may not know all the limitations at first. However, by faithfully keeping stakeholders informed, the important constraints surface as they respond to ideas, brainstorming sessions, or proposals.

Recognize the cost. Data centers are energy intensive. Use measurement-based data to determine the actual operating costs of these facilities. Communicate these costs plainly to all stakeholders. Use life-cycle cost analysis to evaluate options and estimate TCO. Efficiency measures designed with the facility's life cycle in mind will best deliver long-term savings and reduce environmental impact.
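One way to picture a life-cycle cost comparison is the minimal sketch below. It discounts annual energy costs to present value and adds an internal carbon charge, loosely in the spirit of the self-imposed "carbon tax" mentioned in the design discussion. This is not Princeton's actual model. The option names and every number in the example (capital costs, energy use, prices, emission factor, discount rate, and time horizon) are hypothetical placeholders chosen only to show the mechanics.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    capital_cost: float        # upfront cost in dollars
    annual_energy_kwh: float   # expected yearly energy use


def present_value_factor(discount_rate: float, years: int) -> float:
    """Sum of 1 / (1 + r)^t for t = 1..years."""
    return sum(1.0 / (1.0 + discount_rate) ** t for t in range(1, years + 1))


def life_cycle_cost(option: Option, years: int, discount_rate: float,
                    price_per_kwh: float, carbon_price_per_ton: float,
                    tons_co2_per_kwh: float) -> float:
    """Capital cost plus discounted energy and carbon costs over the period."""
    cost_per_kwh = price_per_kwh + carbon_price_per_ton * tons_co2_per_kwh
    annual_cost = option.annual_energy_kwh * cost_per_kwh
    return option.capital_cost + annual_cost * present_value_factor(discount_rate, years)


# Hypothetical placeholder inputs, not data from the Princeton project
options = [
    Option("baseline cooling", capital_cost=1_000_000, annual_energy_kwh=10_000_000),
    Option("high-efficiency cooling", capital_cost=1_400_000, annual_energy_kwh=7_000_000),
]

for opt in options:
    lcc = life_cycle_cost(opt, years=20, discount_rate=0.05, price_per_kwh=0.10,
                          carbon_price_per_ton=30.0, tons_co2_per_kwh=0.0005)
    print(f"{opt.name}: life-cycle cost ${lcc:,.0f}")
```

The design point is that an option with a higher purchase price can still have the lower life-cycle cost once energy and carbon charges over the facility's operating life are counted.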

Learn from everyone. Data centers are being built throughout the world. It is tremendously valuable to get key project team members and stakeholders to physically tour operating data centers and talk with their peers about what succeeded and what they wish they had done differently. Some will demonstrate the value of state-of-the-art equipment; others will serve as case studies in what not to do. All past experiences have much to teach a design team.

Plan for life. A data center's design and construction will take months, but its operation is likely to continue for decades. It is important to recognize that all equipment will fail, need maintenance, become outmoded, need replacement, and, eventually, be disposed of. Designs that incorporate this understanding will be more reliable, adaptable, and energy efficient and will have less environmental impact.

Communicate. The key thread through these "lessons learned" is the need to communicate often and tirelessly. If you think you're communicating too much, it may be just enough. Princeton's OIT used existing, regular meetings (e.g., faculty senate, university budget, etc.) and executive reports to keep partners and stakeholders up to date, even before there was a specific ongoing project. When structure was missing (as with RCAG at Princeton), it was created. When the data center project was under way, the project team made sure to support and publicize it at the appropriate time, by convening project-specific review meetings and even using webcams to follow the data center's progress.

Conclusion

It's a given that the computational research landscape will continue to change. Future decisions will be just as complex, just as challenging, and just as strenuous and may lead to different conclusions in different times. Issues around financing, governance, planning for growth, and the like will continue to stress institutional resources and leadership's imagination. However, the authors propose that some principles are static. Thus, by focusing on the success factors outlined herein (developing a unified strategy, building the business case, gaining the support of key stakeholders) and, most importantly, assembling the right team (inside players and outside expertise), Princeton arrived at a collective decision that building a world-class, high-performance computing facility (see figure 8) provided the best means of supporting our computational research needs in the coming years.

Figure 8. Front View of Princeton's New High-Performance Computing Research Center

- Go to Princeton's website for more information on RCAG.

- Judith A. Pirani et al., "Supporting Research Computing Through Collaboration at Princeton University," ECAR Case Study 2 (Boulder, CO: EDUCAUSE Center for Applied Research, May 23, 2006), p. 5.

- Go to Princeton's website for more information on the sustainability plan.

© 2013 Edward T. Borer Jr., Curt Hillegas, Russell Kurtz, Alan Mougey, Donna Tatro, and John Ziegler. The text of this EDUCAUSE Review Online article is licensed under the Creative Commons Attribution-NonCommercial 3.0 license.