Key Takeaways

- A facilitated testing program uses a three-stage approach that motivates testers to actively engage with a system by first introducing the expected changes and then guiding them through testing.

- Organizations can use facilitated testing programs for development prototyping, user acceptance testing, and rollout training.

- Key factors in these programs' success include giving testers an actual demonstration of how the new system works and letting them test with "live" data.

- As experiences from its implementation at the University of Oxford show, a facilitated testing program offers benefits including effective testing, better user buy-in, and increased satisfaction levels within the tester group.

User acceptance testing too often misses the mark: users neither test nor accept (because they know they haven't tested). How many times have your testing teams assembled, grudgingly, in a bleak room of computers with no real conception of what to do, where to start, how to do it, or what they are looking for? Typically, the (often uninterested) testers are invited by impartial organizers who have already seen the tool work and now must convince business users of its value. Some testers have been assigned to the task by their division managers, while a few might volunteer. Why is it always surprising to find that testers fall short of expectations and that the much-needed "destructive" system testing never materializes?

Next time, try introducing a facilitated testing program. The experience might just generate a level of interest that surprises the testers, the project team, and the project owner.

Innovative organizations that lead the way in upgrades, new IT initiatives, and business process changes will find this approach of particular interest. Organizations without planned IT developments are unlikely to find it of much interest.

This article describes a facilitated testing program's benefits and the steps required to implement it. We base this discussion on our experiences at the University of Oxford, where we launched a facilitated testing program while introducing a new research-based costing model and then continued with a similar program during an Oracle Financials upgrade, albeit using automated testing tools.

Facilitated Testing Program Stages

A facilitated testing program can be used for development prototyping, user acceptance testing, and rollout training. It consists of three stages:

- Demonstrate the system to testers using a clone of the live system.

- Let testers test the new system using a recent clone of live data.

- Collect the testers' feedback and use it to refine the software (or to park it in a future enhancements work stream).

Core components

Within the three stages are several key steps.

In the first stage, the development and training teams prepare a training course on the software to be tested.

In the second stage, project team members:

- train testers on what appears to be a live system using data that they recognize, and

- allow testers free range on the test system with support from both the trainer and a business analyst involved in the system development.

One senior member of the project team observed, "I haven't seen such an interested and enthusiastic group in a test room, ever."

In the third stage, project team members:

- review the tester opinions and findings that the trainer and business analyst delivered;

- decide on essential revisions required to the software and record nonessential revisions for possible future enhancement work;

- if required, update the software, refresh the test system, install the updated software, and retest the system; and

- repeat the process until team members agree that the user testing has demonstrated a working system.
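The third-stage review loop above can be sketched in code. This is a minimal illustration only, not the tooling used at Oxford: the `Finding` class, its `essential` flag, and the sample findings are all hypothetical names and data introduced here to show how findings might be split into essential revisions and a future-enhancements backlog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One tester observation captured by the trainer or business analyst."""
    summary: str
    essential: bool  # hypothetical flag set by the project team during review


def triage(findings):
    """Split findings into essential revisions and a future-enhancements backlog."""
    essential = [f for f in findings if f.essential]
    backlog = [f for f in findings if not f.essential]
    return essential, backlog


def needs_retest(findings):
    """Another update-refresh-retest cycle is needed while essential revisions remain."""
    essential, _ = triage(findings)
    return len(essential) > 0


# Illustrative data only; not taken from the project described in this article.
findings = [
    Finding("Posting to ledger fails for split-cost items", essential=True),
    Finding("Would like an extra column on the summary report", essential=False),
]
essential, backlog = triage(findings)
```

In this sketch, the cycle of updating the software, refreshing the test system, and retesting would repeat for as long as `needs_retest` returns `True` for the latest set of findings.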

Assumptions

A facilitated testing program's success rests on several assumptions, including:

- the business analysis is thorough and complete;

- the software is robust, modular, and readily changeable;

- the prototype is at an advanced stage of development and has been signed off by the project sponsor as fit for purpose; and

- the software has been tested by the development team and is ready for full-scale user acceptance testing.

Methodology

The creation of the test environment requires eight work streams.

Stream 1: Obtain Permissions

The team must obtain the necessary approvals to clone the live system; depending on the organization, this may or may not be a showstopper. However, in most cases, it will not be a problem because almost every system has the ability to create test scenarios using a copy of the live system.

Stream 2: Examine System IT Capabilities

The objective is to provide a responsive system that gives testers a real-life experience of the system so they can focus on the testing experience. The key component that the IT team must provide is thus a responsive, current test system. This requires:

- sufficient disk space and an adequate number of processors allocated to the test system for the testing duration;

- the ability to clone and copy the system from live to test within a short period of time; and

- the ability to quickly apply any required system changes, in the form of software patches, to the test system.

It is essential to sort out the testers' security access up front. The users must be able to use their own passwords and have normal access privileges to build their confidence in the process.

The team must also identify and plan a way to coordinate tester actions throughout the test process. The process flow sequences must be followed so that the upstream tasks are completed and tested before the downstream tasks. The planning must ensure that all key processes (such as posting data to various modules) are tested end to end.
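One way to plan this sequencing is to record which tasks depend on which and derive an order in which every upstream task comes before its downstream tasks. The sketch below uses the standard-library `graphlib` module (Python 3.9 or later); the task names and dependencies are hypothetical examples, not the processes tested in our project.

```python
from graphlib import TopologicalSorter

# Hypothetical process steps. Each key maps to the set of upstream
# steps that must be completed and tested before it.
dependencies = {
    "set_up_project": set(),
    "enter_costs": {"set_up_project"},
    "post_to_ledger": {"enter_costs"},
    "run_reports": {"post_to_ledger"},
}


def plan_test_order(deps):
    """Return the steps in an order where upstream steps always come first.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


order = plan_test_order(dependencies)
print(order)
```

A circular dependency raises an error rather than producing an order, which is a useful early warning that the process flow has been described inconsistently.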

Because the users will test on virtually current live data, cross-contamination between the test and live systems must be avoided. The screen appearance should be altered so that users know they are not working on a live system, and reports generated by the test system must be clearly marked as such.
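As a simple illustration of marking test output, the sketch below stamps a plain-text report with a banner at the top and bottom. It assumes reports are available as text; the banner wording and the `mark_as_test` function are hypothetical, and a real system would more likely apply a watermark through its own reporting tools.

```python
# Hypothetical banner text; choose wording that suits your organization.
TEST_BANNER = "*** TEST SYSTEM OUTPUT - NOT LIVE DATA ***"


def mark_as_test(report_text, banner=TEST_BANNER):
    """Return the report with the banner as its first and last line.

    Reports that already start with the banner are returned unchanged,
    so marking the same report twice does not stack banners.
    """
    if report_text.startswith(banner):
        return report_text
    return f"{banner}\n{report_text.rstrip()}\n{banner}\n"


marked = mark_as_test("Ledger summary\nTotal: 100\n")
print(marked)
```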

Stream 3: Create the Environment

The facilitated testing program environment uses a conventional training room structure for the testers, supported by a trainer and business analyst. IT and project management teams also provide support. In addition, we found that the visibility of project sponsors and the project team during the training can increase the feel-good factor for everyone involved in the testing program.

Stream 4: Find Technically Savvy "Opinion Formers" for Testing

We found it imperative to identify users who had sufficient knowledge of the current system, particularly when integrating system changes to it. Our project Steering Group — which consisted of members of the project team and the division responsible for the system, as well as three departmental representatives — identified and invited 25 departmental users to attend the testing. The initial tranche was designed to involve "opinion formers" and "troublemakers (in the nicest possible sense)" in the early exposure of the software solution. Obviously, it is essential that the software has already been properly tested by the functional teams, as the facilitated testing is also designed to convince and impress.

Stream 5: Prepare the Tester Training

We used a trainer from the university's Training department who had worked in the targeted functional area and was conversant with the system to be changed. The trainer was functionally very competent, having also worked in a relevant department before, which gave the facilitated testing program a decided advantage. We recommend that you choose your trainer very carefully, as trainers must field in-depth questions from the test teams as they are shown the system to be tested.

A key component is to ensure that the trainer is fully conversant with the system and the planned changes. This will involve considerable time with the software developers, as well as with the business analyst who heads up the change and the development systems. The project management team also must become involved in the development of the trainer's expertise in the proposed system, specifically as it relates to the organization's target objectives, the key users, issues that might occur, and any planned future enhancements — and why they are only planned at this point and not included.

When the trainer becomes familiar with all project aspects, you can begin developing the training course. It is reasonable to expect the testers to be fully aware of the existing system — or at least their parts of it — so the course need not include the process basics. After all, this is not training for new system users!

We did capture opinions from the trainers, who found the experience rewarding. One said, "It was a new experience, and yes, it did involve a lot of work up-front, but we developed a good training course as a result. In effect the testers were also beta-testing our training course."

It is also important to identify and cater to different task allocations, as not everyone in the test team will need the entire new process demonstrated and explained to them. This might involve preparing two facilitated testing program training courses, such as one for central finance testers and another for department testers.

The training's purpose is to explain to the testers the system changes and how they will affect their day-to-day work. It is sometimes useful to present the changes in terms of how they will benefit the organization. In any case, because the usual change management issues will surface during testing, the trainer must be in a position to manage them. It is beneficial to assess the likely resistance to the planned changes, and preferably address it at a departmental level, before the tester training commences.

When we developed this testing system, we were also in the process of introducing Oracle's User Productivity Kit (UPK), which let trainers create quite sophisticated interactive training programs. These can be made available on demand to users at their desktops and can be integrated into the main application's help facilities. We recommend the use of this, or an equivalent, in tester training courses, as it supplements the demonstrator-led training. It also lets testers access UPK as they would a help screen and obtain walkthroughs of the process under test. The work required to create the UPK course for testing will lead to more robust rollout training, as the training prepared for and used in the facilitated testing program will be usable with little or no change for the wider user population on rollout.

Stream 6: Begin Facilitated Testing

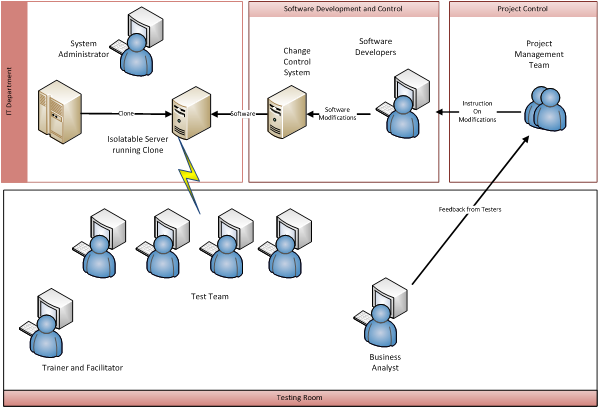

As figure 1 shows, this stream brings together all the earlier streams.

Figure 1. Overview of facilitated testing structure

At this stage, the test system will be in place, the training presentation will be ready, and users will be in their seats. The business analyst will be in attendance and, as recommended, project managers will be hanging round nervously to see if the testing goes according to plan.

The project sponsor launches each session to demonstrate organizational and personal commitment and support, to thank the testers for their presence and the work they are about to undertake, and to demonstrate knowledge of the new testing approach. This introduction doesn't have to be very long (10 minutes at most), but we found that it generates good feelings among participants. After all, how many testing sessions have you attended where senior staff do not appear? That simply sends a "not important" signal, which we wanted to avoid.

Next, the trainer works through the changes introduced by the new system and demonstrates new screens, changed work practices, and new reports. For data security over a wide trainee/tester population, this should be done using artificial data; in our experience, using live data was too problematic at the program's launch. After the formal presentation, the floor is opened to questions. The trainer and business analyst should be in a position to handle 95 percent of these questions. The trainer must be pre-briefed on areas that warrant deferred answers, and must be free to park some questions as necessary. If possible, you should discuss the parked items before the test session finishes, bringing in the appropriate expert to resolve them or, in extreme cases, the project sponsor or departmental heads. However, in our case, we did not have any serious pushback from the testers.

In the next step, the system is opened to the testers. The fact that they can open the test system with their own name and be presented with information that they were handling only a few days ago proved, in our experience, to be a revelation. Test systems with virtually live data seem to be exceedingly rare beasts — but are fundamental to the success of facilitated testing.

The use of live data lets users complete transactions that were recently work in progress and run reports on the information. Users can assess the changes made and provide feedback to the trainer and business analyst. Because the number of testers is deliberately kept manageable, each receives one-on-one support during the session. Our general rule was to have no more than 10 testers at each session, and preferably fewer, particularly in the early sessions, when many software bugs might be present.
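The session-size rule is easy to apply when scheduling. The sketch below splits a list of testers into the fewest sessions that respect a maximum size and keeps the sessions as even as possible. The limit of 10 and the figure of 25 invited testers come from this article; the balancing approach, the function name, and the placeholder tester names are assumptions made for illustration.

```python
import math


def plan_sessions(testers, max_per_session=10):
    """Split testers into the fewest sessions of at most max_per_session each.

    Sessions are balanced, so sizes differ by no more than one tester.
    """
    if max_per_session < 1:
        raise ValueError("max_per_session must be at least 1")
    if not testers:
        return []
    n_sessions = math.ceil(len(testers) / max_per_session)
    base, extra = divmod(len(testers), n_sessions)
    sessions = []
    start = 0
    for i in range(n_sessions):
        # The first `extra` sessions take one additional tester.
        size = base + (1 if i < extra else 0)
        sessions.append(testers[start:start + size])
        start += size
    return sessions


# Placeholder names standing in for 25 invited departmental users.
testers = [f"tester_{n:02d}" for n in range(1, 26)]
sessions = plan_sessions(testers)
sizes = [len(s) for s in sessions]
```

For 25 testers and a limit of 10, this yields three sessions rather than two full sessions and one small one, which keeps every group under the limit and leaves room for one-on-one support.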

When we asked the testers if they thought we should use this approach again, every one of the testers who responded said yes, and 20 percent of these said yes, definitely. One typical response was "It's not until you play with a system does it really sink in, and you can start to have questions." In addition, the testers found they were listened to throughout the process and found it beneficial to ask questions and get answers quickly.

The issue of end-to-end testing must be addressed when you compose the test teams. In our case, the process owners and the departmental head affected by the change developed comprehensive test scripts. In practice, we did not find tester creativity to be a problem: our testers were very aware of data and procedural sequences that were currently causing problems or had caused them problems in the past, and the process owners formulated their own test scenarios to an excellent standard. In general, however, implementation teams might find that the business analyst has the best overview. In this case, the business analyst should be consulted on the composition of the test teams. That person should also prepare test scripts and seed data in case testers need help in thinking up test data to use.

Stream 7: Collect User Findings and Feedback

We tasked the trainer and business analyst with collecting user test findings and feeding back their summaries of the test results to the testers. Test findings should be generated during the test session for timely presentation to the project team. This gives the testers involved immediate, visible evidence of the lessons learned from the testing session, which reinforces the importance given to the test process. When significant bugs were identified and corrected, we informed the "bug finder" of the changes made and invited them back to recheck the correction; this achieved significant buy-in and ownership transfer of the software.

Throughout the project, we had an excellent in-house communications team working alongside the project team. The communications team was able to disseminate to a wider audience the progress made during the test process and raise awareness of the planned system changes. That team was also able to spotlight the real people behind the complete process, from design through testing, and support the initiative.

Stream 8: Have a Formal Sign-Off

Involving the process owners and departmental head in the overall project, through definition, design, and test, enabled a smooth sign-off on user acceptance testing. More interestingly, the practical user acceptance levels within the department were very satisfactory, a result attributed to the elevated awareness and involvement attained by interpersonal contact between the testers and the user body, by the communications strategy put in place, and by strong and public sponsor support.

Summary

Our facilitated testing program's success arose from several factors, including the:

- Availability of an actual demonstration of how the new system would work.

- Ability to use "live" data in the testing process.

- Ability to process transactions to test setup, billing, and reporting.

- Opportunity for system testers to raise questions and receive responses to those questions quickly.

- Ability to hear other tester questions and comments.

Comments from the delegates attending our departmental facilitated testing program sessions were favorable, and they recommended that we use this approach in future projects. Overall, feedback from the facilitated testing program was positive and gave system users (from both Central Finance and other departments) the opportunity to raise questions.

Among the feedback we received were recommendations to:

- Ensure that key users are available to devise typical scenarios to include in the testing.

- Ensure staff members who manage operations and can validate that business requirements have been met are available for system testing.

- Structure the testing so that the initial focus is on the area of system change, and build up to more complex scenarios within current functionality.

- Provide a checklist of key areas to assist the users in testing the changes.

- Use the facilitated testing structure for future system enhancements and system testing activities.

- Identify competent Oracle system users and involve them in the testing.

- Take the testing to the users by using appropriate locations throughout the university.

- Submit facilitated testing program feedback electronically.

Other organizations have tried elements of this approach before under other names and in various combinations.

The facilitated testing program idea builds on the standard application of user acceptance testing for new IT initiatives. It goes further, however, in the preparation for the experience, the support, and the follow-up in human terms. Facilitated testing is based on the premise that you cannot simply leave users to their own devices when testing a new and enhanced system. Instead, users must be taught how the system works and be supported through the testing process. A facilitated testing program engages users by collectively introducing the changes expected, guiding them through a testing approach, and then — and only then — letting them loose for further destructive testing.

Facilitated Testing Program/Quick Reference Guides

Roles

| Role | Tasks |
|---|---|
| System administrator | Prepare clone of live system on demand; transfer clone to separate server or processors/file space on mainframe; apply software under test to the cloned system; change user screen appearances to distinguish test system from live; manage tester access and email messaging (if used in the live system) |
| Software developer | Complete all development that the software change requires; be available to review and resolve system bugs during the testing |
| Trainer and facilitator | Lead early testing activities to build knowledge of the system changes and prepare for the facilitated testing training course; prepare the training course with the project team; deliver the training presentation; capture feedback from the training course and feed it back to the project team |
| Business analyst | Act as liaison between the testers and the development team; lead early testing activities to build knowledge of the system changes and prepare for the facilitated testing; capture feedback from the training course and feed it back to the project team |
| Test team | Represent the wider user base to test system changes; provide feedback to the project team throughout the facilitated testing program |
| Project management team | Sponsor the project and expected system changes; provide subject matter experts to support the facilitated testing program; provide feedback to project board on progress of the plan |

Prerequisites

| Component | Requirements |
|---|---|
| Clone live system | Ability and authorization to clone the live system on demand; ability to clone security settings |
| Patch clone with upgrade | Ability to quickly apply the software to the cloned copy of the live system |
| Suitable testers | Early sourcing of test teams |
| Skilled trainer | Availability of a skilled trainer who understands the live system and has already worked through the modified system with the software developers and business analysts |
| Training course for the new software | Prototype training (which will be developed into the final user training suite); FAQs (using a moderated wiki, if possible); Oracle's User Productivity Kit (UPK) tool (or equivalent) to distribute training in advance and support testers in using the new system |
| Business analyst availability | Familiarity with business case and final system design to support trainer during the test process |
| Report identification | Marking all output to indicate that it has been generated by the test system, to avoid confusion with live system reports |

The Tester Experience

(Subject to changes required by the revised software, which will be explained to them.)

| Aspect | What testers experience |
|---|---|
| System access | Testers can sit down at a terminal and log in to the test system using their normal password. They will see their usual screens and have their standard access privileges. |
| Data | Testers will have access to their live data, which is at most a few days old. They will be able to complete transactions already on the system and generate new transactions within their spheres of responsibility. |
| Screen appearance | The screen content and layout are the same as in the live system, but the screen colors and font are different to indicate to users that they are on the test system. |
| Reports | Standard reports must be directed to a printer within the test room. If possible, such reports should carry a warning watermark that they have been produced from the test system. |
| Alerts | Standard system alerts, either on-screen or by email, must be directed only to terminals within the test room. They must not be allowed to enter the live system outside the test area at any time. Alerts should be marked as "test alerts" if possible. |

© 2013 Jodi Ekelchik and Tom McBride. The text of this article is licensed under the Creative Commons Attribution 3.0 Unported License.