Key Takeaways

- Using clicker technology in classrooms can increase student learning, participation, and attendance.

- Clickers require a significant economic investment; deciding who pays is a key issue that gives rise to two implementation models: institution-pays and students-pay.

- Although having students pay for clickers and earn incentives for their use improved student participation and attendance, the collected data — along with both instructor and student opinion — supports an institution-pays model.

Student response systems can help instructors integrate active learning into their classrooms. Such technology is known by a variety of names, including classroom response systems, student response systems, audience response systems, electronic response systems, personal response systems, zappers, and clickers. The “system” consists of three components: a clicker, a receiver, and software. Each student possesses a clicker — a personal handheld keypad that allows them to answer multiple-choice questions posed by a course instructor (see Figure 1). Students choose their answers by pushing the appropriate numbered button on the clicker, which transmits a radio frequency signal to a receiver. This receiver collects the data that the class clicks in and sends it to the clicker software installed on the instructor’s computer. The instructor can then display a class response histogram to the class as an object of discussion — especially in instances where there is no consensus among students as to the correct answer. For the purposes of simplicity and consistency, we will refer to the entire system as “clickers.”

Figure 1. A Student Demonstrates Clicker Use

We tested three different clicker implementation approaches from 2008–2009:

- In the first implementation, the students-pay-without-incentive (SPWOI) approach, students bought clickers and were not awarded grade points for clicker use.

- In the second, the students-pay-with-incentive (SPWI) approach, students bought clickers, and three to five percent of their final course grade was based on clicker use.

- In the third, the institution-pays-clicker-kit (IPCK) approach, instructors loaned students institution-owned clickers on a class-by-class basis.

In this article we discuss these models and provide a decision tree that can further serve as a guide in clicker implementation.

Clicker Use and In-Class Learning

Recent research suggests that, when used as part of an active learning pedagogy, clickers increase in-class learning. Michelle Smith et al.1 accompanied clicker questions with short (2–3 minute) student-to-student discussions and documented significant learning gains. Steven Yourstone, Howard Kraye, and Gerald Albaum2 showed that, when used for the duration of a course, active learning clicker use can lead to higher final examination scores. Similarly, Kirsten Crossgrove and Kristen Curran3 documented higher exam scores on course material that was taught using clickers compared to course material that was taught with more conventional means. However, most clicker proponents agree that these learning gains would not be evident without incorporating active learning pedagogies into clicker use; clickers are effective learning tools because they facilitate a constructivist learning environment in which immediate feedback is given.4 Clickers can also be used in contexts that do not directly facilitate in-class learning, such as when asking formative evaluation questions,5 collecting demographic information from a class,6 or quizzing with trivia-style questions.7 These types of questions can be useful for checking students’ knowledge of simple factual information, as well as helping maintain student attention. See the sidebar “Question-Asking Pedagogies” for a list of question-asking strategies for clicker use.

Clicker Implementation Models

Although clickers are very useful learning tools, they can require a significant economic investment. As more institutions adopt clickers, the question of best-practice clicker implementation arises and a decision must be made as to who is responsible for purchasing the required clickers. Usually, this responsibility rests either with the institution or with students, giving way to two key implementation models.

The Institution-Pays Implementation Model

In an institution-pays model, the educational institution assumes the responsibility of buying and distributing the required number of clickers to students. Distribution is usually carried out using one of four popular approaches: the clicker kit, the long-term loan, embedded clickers, and free-clickers.

In the clicker-kit approach, the institution gives instructors the clickers they need for the students in their courses. The clickers are transported to-and-from class every lecture in a carrying case, or clicker kit, and are loaned to students on a class-by-class basis. In the long-term loan approach, each student requiring a clicker borrows one from the institution for an extended period of time, such as a semester, a year, or several years. At the end of the time period, students must return their clickers to the institution or be charged a replacement fee to cover its cost. In the embedded-clickers approach, a clicker is installed into the armrest of each permanent-fixture classroom seat. Each clicker is then used by the student occupying the seat. Finally, in the free-clickers approach, the institution purchases clickers and simply gives one to each incoming student with no expectation that the clickers will be returned.

The Students-Pay Implementation Model

In the students-pay model, each student assumes the responsibility for buying his or her own clicker. When they complete their degrees and leave the institution, they can resell their clickers to incoming students as desired. Students are asked to buy a clicker in the same way that they are asked to buy a textbook, understanding that it is a tool that is designed to aid their learning in certain courses. In the students-pay model, clicker distribution is often managed by an institution-affiliated vendor (such as a bookstore). Within this model, two approaches are generally used. First, in the students-pay-with-incentive approach, students must register their clicker with the class and are given grade points (the incentive) for participation and/or performance in clicker questions. Second, in the students-pay-without-incentive approach, no grade points are given for clicker-use.

Objective

The objective of this research was to test the feasibility of three different clicker implementation approaches based on the opinions, behaviors, and ideologies of students and instructors. The approaches we tested were:

- An institution-pays-clicker-kit (IPCK) approach

- A students-pay-with-incentive (SPWI) approach

- A students-pay-without-incentive (SPWOI) approach

We collected data from science courses at McGill University, a large, research-intensive institution in Canada.

Methods

We wanted to ensure valid comparisons for our study by setting up similar conditions for the courses included and similar clicker experience levels for the instructors. We evaluated instructor and student behavior and sought their opinions, as well as analyzing our data to determine statistically significant differences among groups.

Course Selection

We collected data from nine science courses, with three assigned to each of the three implementation approaches. The nine were selected from among 20 candidate science courses that were using clickers at our institution in the 2008–2009 academic year. Of these 20 courses, 15 were using an SPWOI approach, four an SPWI approach, and one an IPCK approach. From this pool, we selected five SPWOI courses, three SPWI courses, and the IPCK course to participate in our study. We then asked the instructors of two of the selected SPWOI courses to adopt an IPCK approach for the purposes of our study, resulting in three courses using each implementation approach.

Course selections were made so as to minimize student overlap among courses, preserving independence of data for each. Each course involved in our study had from 150 to 650 registered students. Further information about the specific demographics of these courses is limited due to the conditions of confidentiality for instructors and anonymity for students made explicit in our study; giving exact class sizes and/or course departments would allow for easy identification of the participating courses given the small pool of 20 science courses using clickers during the study period.

The three courses used in each implementation approach included at least one introductory-level course and at least one advanced-level course. The specific way that the instructors integrated clickers into their courses was not controlled for, but we believe that most integration methods were similar. Prior to the study, no instructor had more than one year’s experience using clickers. We required all participating instructors to complete a clicker workshop, where they learned how to effectively integrate clickers into their teaching. Although the exact clicker-use pedagogies almost certainly varied from one instructor to another, we believe that these two factors (limited experience and common training) provide grounds for assuming relative similarity among instructor clicker-pedagogy and integration.

Student and Instructor Behavior

We conducted in-class observations of more than 138 class sessions throughout the nine target courses, covering more than 167 hours of instruction, including 365 clicker questions. We collected two main pieces of data on student behavior:

- The percent of student attendance, defined as the number of students who attended a given class session divided by the total number of students registered in the course (multiplied by 100)

- Student participation, defined as the number of students who clicked in a given class session divided by the total number of students who attended (multiplied by 100)
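The two definitions above reduce to simple ratios. The following is a minimal sketch in Python; the function and parameter names are hypothetical, and the study does not describe any software used for these calculations.

```python
def attendance_percent(attended: int, registered: int) -> float:
    """Students attending a session as a percentage of registered students."""
    if registered <= 0:
        raise ValueError("registered must be positive")
    return 100.0 * attended / registered


def participation_percent(clicked: int, attended: int) -> float:
    """Students who clicked in as a percentage of students who attended."""
    if attended <= 0:
        raise ValueError("attended must be positive")
    return 100.0 * clicked / attended


# Illustrative counts only; these are not data from the study.
print(attendance_percent(attended=90, registered=200))   # 45.0
print(participation_percent(clicked=45, attended=90))    # 50.0
```

Note that the two measures use different denominators: attendance is relative to registration, while participation is relative to the students actually present in that session.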

We grouped student behavior data into three categories (matching the implementation approaches) to test for significant differences among groups using an analysis of variance.
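For readers unfamiliar with the technique, a one-way analysis of variance compares the variation between group means with the variation within groups. The sketch below computes the F statistic using only the Python standard library. The function name and the sample values are hypothetical placeholders, not the study's observations, and the article does not state which statistical software was used.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way analysis of variance.

    Raises ZeroDivisionError if there is no within-group variation at all.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more observations than groups")
    grand_mean = mean(x for g in groups for x in g)
    # Variation of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of each observation around its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical per-session attendance percentages, one list per approach.
sessions = {
    "IPCK": [42.0, 47.0, 44.0],
    "SPWOI": [50.0, 55.0, 53.0],
    "SPWI": [66.0, 71.0, 70.0],
}
f_stat, df_b, df_w = one_way_anova_f(list(sessions.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

Turning the F statistic into a p-value requires the F distribution; if SciPy is available, `scipy.stats.f_oneway` performs both steps in one call.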

Because we designed our study specifically to include three courses in each implementation approach, we decided to collect instructor-choice behavior data from across the faculty of science rather than from the nine courses in our study. Instructor-choice behavior was measured as the percentages of courses using the IPCK approach, the SPWI approach, and the SPWOI approach. In this tabulation, we did not include the two IPCK courses from our study because we had asked these instructors to use the kits.

Student and Instructor Opinion

We conducted midterm and end-of-term student surveys in each course to gather data on students’:

- Satisfaction with the implementation approach they were using

- Opinion on an institution-pays versus students-pay approach

- Opinion on SPWI versus SPWOI approaches

In the fall 2008 semester, we surveyed 763 and 659 students at midterm and end of term, respectively. In the winter 2009 semester, we surveyed 247 and 155 students at midterm and end of term, respectively. (See a sample student survey here.)

We conducted end-of-term instructor satisfaction and opinion surveys to determine instructors’:

- Satisfaction with the implementation approach they used

- Perception of student satisfaction with the implementation approach they used

- Opinion on the appropriateness of each clicker implementation approach

(See a sample instructor survey here.)

We surveyed a total of 12 instructors over two semesters (some courses were team-taught). Two instructors declined to participate in the survey. We also asked instructors their opinion on the appropriateness of awarding participation grade points and performance grade points for clicker use. Participation grade points are awarded when a student answers a clicker question (by clicking in), but grade points are not added or deducted on the basis of being correct or incorrect. Performance grade points are awarded for answering a clicker question correctly.

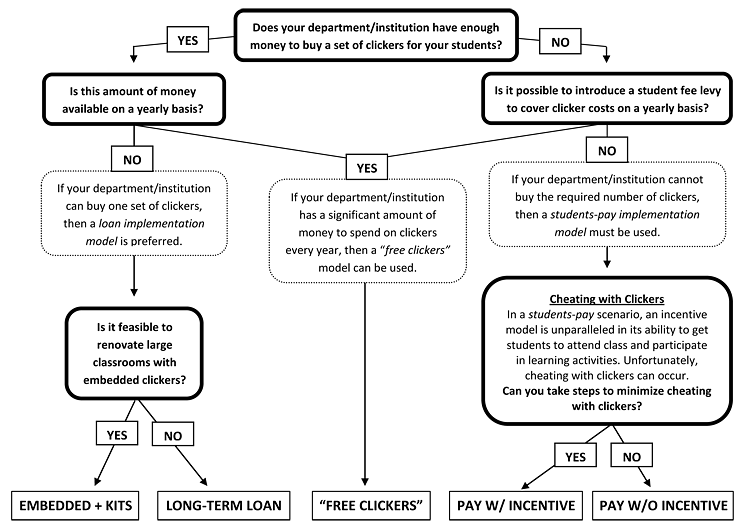

Institution Capability

The ability to support a particular clicker implementation approach is highly variable from institution to institution. Our study operated within the study institution’s implementation model support capabilities. For an additional perspective, we created a decision tree based on the data we collected (see Figure 2). The decision tree empowers institutions to discriminate between implementation approaches based on their specific resource capabilities and to choose the right implementation approach for their specific situation.

Figure 2. Choosing the Optimal Clicker-Implementation Approach

Key Results

As noted, we surveyed students participating in our study courses, as well as all instructors in the university’s science department, with the following results.

Student and Instructor Behavior Data

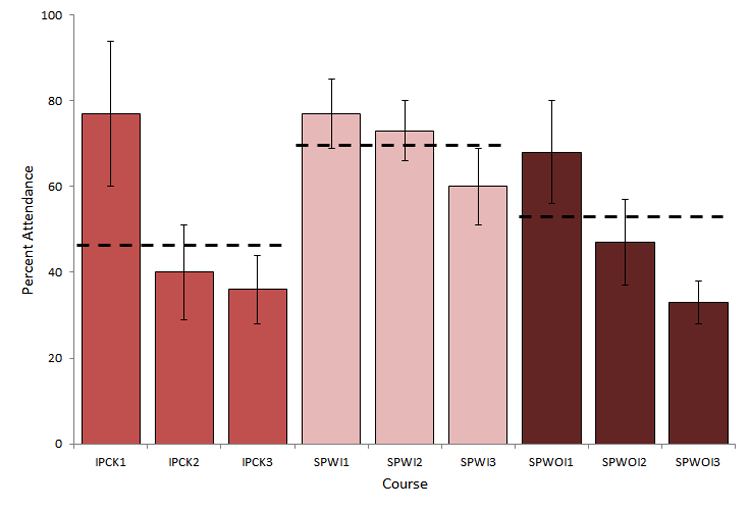

- Attendance as a percentage of registered students (see Figure 3)

- IPCK courses: 45%

- SPWOI courses: 53%

- SPWI courses: 69%

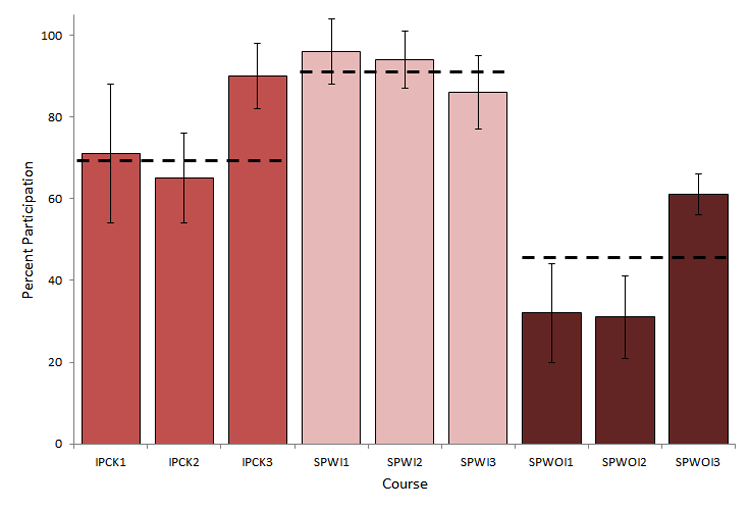

- Participation as a percentage of attending students (see Figure 4)

- IPCK courses: 69%

- SPWOI courses: 47%

- SPWI courses: 93%

- Model choice by instructors in courses using clickers (20 total)

- IPCK courses: 1

- SPWOI courses: 15

- SPWI courses: 4

Figure 3. Attendance and Clicker-Implementation Approach

Figure 4. Participation and Clicker-Implementation Approach

Student Opinions

- The IPCK approach

  - 92 percent of respondents indicated they would rather use a clicker on loan from the university than buy their own.

  - 81 percent of respondents agreed or strongly agreed that the university should be responsible for providing free-clickers; 5 percent disagreed or strongly disagreed; 13 percent were neutral.

  - 68 percent of respondents indicated that they would prefer to be loaned a clicker on a semester-by-semester or on a year-by-year basis. Only 13 percent of respondents indicated that they would prefer a lecture-by-lecture loan model. The other 19 percent of respondents would prefer two-year loans or length-of-degree loans.

- The SPWOI approach

  - 13 percent of respondents indicated they would pay $50 for a clicker even if no grade points were attached, because clickers are valuable learning tools.

  - 72 percent of respondents indicated that they would buy a clicker if it was used in “at least 3 courses” they were taking per semester, while 4 percent of respondents answered “at least 1 course,” and 24 percent answered “at least 2 courses.”

- The SPWI approach

  - 65 percent of respondents indicated they would buy a clicker for $50 if 3–5 percent of their final grade was given for clicker participation.

  - 41 percent of respondents agreed or strongly agreed that it was appropriate to have participation grade points awarded for clicker use; 35 percent disagreed or strongly disagreed; 24 percent were neutral.

Instructor Opinions

- The IPCK approach

  - 67 percent indicated they would be willing to use a clicker kit.

  - 75 percent indicated they felt it was the university’s responsibility to provide free clickers to students.

- The SPWOI approach vs. the SPWI approach

  - 42 percent of polled instructors agreed or strongly agreed that it was appropriate to award participation grade points for clicker use; 33 percent disagreed or strongly disagreed; 25 percent were neutral.

  - 8 percent of polled instructors agreed that it was appropriate to award performance grade points for clicker use; 75 percent disagreed or strongly disagreed; 17 percent were neutral.

Discussion

In evaluating the results for each clicker implementation model, we found that the approaches generally have some conditions under which they are preferred. Here we summarize the conclusions and recommendations for each.

Institution Pays versus Students Pay

The three clicker implementation approaches we explored, and their variants, have strengths and weaknesses, and successful implementation can be dictated by an institution’s resource capabilities (see Figure 2 for an overview). That said, the data we collected generally supports an institution-pays implementation model over a students-pay implementation model. Although the SPWI approach scored highest in both student attendance and participation behaviors, it lacked opinion support from students and instructors. However, in an institutional context where instructors favor an incentive-based implementation approach, or where clicker implementation approaches are chosen by each instructor rather than at departmental levels, an SPWI approach might be appropriate. Based on our findings, the SPWOI approach should be used only when clicker use is widespread across courses in a given department or institution.

Student Attendance and Participation within Each Approach

We found considerable variance within approaches in terms of student attendance (Figure 3) and, to a lesser degree, student participation (Figure 4). Although this variance is large at times, we do not believe that it had a significant impact on our findings. Nancy Fjortoft8 showed that this type of variance is sometimes due to differences in class scheduling and instructor teaching style. Two of our nine courses were early morning courses starting at 8:35 a.m.: IPCK3 and SPWOI2. IPCK3 had a very low attendance rate (36 percent) but a very high participation rate (90 percent). SPWOI2 had a moderate attendance rate (47 percent), but a participation rate of only 31 percent. It is possible that the early start time led to the low attendance in IPCK3 and the low participation in SPWOI2, but courses in our study with later start times had equally low attendance and participation rates. (In SPWOI3, for example, attendance was 33 percent with a 2:35 p.m. start time, while participation in SPWOI1 was 32 percent with a 10:05 a.m. start time.) All other courses had start times later than 10:00 a.m. and concluded before 4:00 p.m. This means that start time probably had little effect on the differences we observed in student attendance and participation among implementation approaches.

Differences in teaching ability or style, however, are not as easy to dismiss and have been shown to have small but measurable impacts on the effectiveness of peer-engagement techniques.9 One obstacle for measuring this in our study is that the qualities that define an excellent/good/average/poor teacher are ill defined.10 It is difficult to get accurate, objective, quantitative, and reliable data on even some of the most basic instructor traits like “charisma,” “sense of humor,” or “preparedness.” What one observer perceives as a well-prepared, charismatic instructor in a given class session might come across as an arrogant and abrasive instructor to another observer. Because of this, we limited our data collection to objective forms of quantitative data and survey data. Although instructor style and behavior likely affected student attendance to a degree, it is unclear how much style and behavior would affect student participation. We believe that the high overall average student attendance and participation among SPWI courses is due to the grade-point incentive being offered. Attendance notwithstanding, the IPCK approach also scored very well in student participation.

The Institution-Pays Model: Breaking It Down

There are many practical considerations for implementing an institution-pays model across an entire institution or department. The most obvious and perhaps the most significant consideration is the upfront cost of purchasing a set of clickers. Regardless of whether an IPCK approach is used or another loan-type institution-pays approach, enough clickers must be purchased to outfit all students expected to use them. Depending on the vendor, clickers can cost up to $50 Canadian each, plus the cost of a receiver (approximately $200) for each classroom. In 2007–2008, McGill University purchased 5,000 clickers and dozens of receivers at a total cost of approximately $250,000 Canadian. For many institutions, this type of upfront investment might be infeasible, while for others — particularly smaller institutions that require hundreds rather than thousands of clickers — this type of expenditure might be possible.11
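The upfront cost described above can be estimated with a short calculation. This sketch uses the article's upper-bound prices ($50 Canadian per clicker, approximately $200 per receiver) as defaults; the function name is hypothetical, and the receiver count of 24 is an assumed stand-in for the article's unspecified "dozens."

```python
def upfront_cost(num_clickers: int, num_receivers: int,
                 clicker_price: float = 50.0,
                 receiver_price: float = 200.0) -> float:
    """Estimate the one-time purchase cost in Canadian dollars."""
    if num_clickers < 0 or num_receivers < 0:
        raise ValueError("counts must be non-negative")
    return num_clickers * clicker_price + num_receivers * receiver_price


# 5,000 clickers at the upper-bound price, ignoring receivers.
print(upfront_cost(5000, 0))    # 250000.0

# Same purchase with an assumed 24 receivers (hypothetical count).
print(upfront_cost(5000, 24))   # 254800.0
```

Because $50 is an upper bound on the clicker price, an estimate made this way will tend to overstate the actual cost. The calculation also shows that clickers, not receivers, dominate the total.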

Other challenges include the infrastructure and personnel resources required to manage an institution-pays implementation model. In our study, the institution-pays model was implemented in 2007–2008, but it lasted just one year due to the lack of additional resources required to store, test, maintain, and track loaned clickers. In this particular case, the institution loaned clickers to students on a yearly basis (rather than implementing clicker kits), so clicker resource staff had thousands of students to interact with (each bringing their own individual clicker) rather than dozens of instructors (each bringing their own kit of clickers).

The IPCK approach wasn’t used in 2007–2008 because class size limits the model. The clicker kits we used in our study carried between 100 and 150 clickers, appropriate for classes of the same size. Many introductory science courses exceeded 150 students. Clicker delivery to students in courses with several hundred students is difficult with a clicker kit. Student traffic to and from the kit to pick up and drop off a clicker can take a significant amount of time with hundreds of students, and the prospect of having the instructor carry multiple clicker kits to and from class makes clicker kit implementation impractical under these circumstances. However, if an institution is dominated by small to medium classes (that is, with fewer than 150 students), then the clicker kit institution-pays implementation model remains feasible.

The Students-Pay Model: Breaking It Down

Courses implementing the SPWI approach were unparalleled in their student attendance and participation rates. This makes intuitive sense: if students are not in class using their clickers, they will not earn the grade points associated with clicker use. However, there were mixed reviews of this model in the opinion poll data. Students were sharply divided in their support of this model, with 41 percent in favor, 35 percent against, and 24 percent undecided. Likewise, instructor opinion was divided, with a nearly identical split: 42 percent in favor, 33 percent against, and 25 percent undecided. Although opinion varies on assigning grades for clicker use, an SPWI approach carries virtually none of the challenges present in an institution-pays implementation scenario.

An SPWI approach has numerous benefits. Instructors do not have to carry a kit to and from class or worry about in-class distribution issues; the institution is not saddled with a large upfront clicker cost; and personnel and infrastructure resources do not need to be developed to store, test, maintain, and track loaned clickers. Each student is largely responsible for their own clicker maintenance, although we would strongly advise that a clicker testing desk be made available for students to test that their clicker is working properly, for example after changing the battery or resetting the radio frequency channel. An institution implementing an SPWI model will also have to make provisions for students to register their clickers with the institution (or with individual instructors) so that the appropriate grade points can be awarded.

Although science instructors in our study ideologically supported an SPWI approach, few actually implemented it: only four of the 20 courses using clickers did so. We can suggest a few reasons for this reluctance. Instructors might not understand the gains that this model provides (that is, high student attendance and participation) and might perceive that the extra time cost required to document clicker grade points outweighs the benefit. It is also possible that many instructors worried that the possibility of technology failure or cheating could make an incentive model inappropriate. Technology failure could theoretically happen if the clicker hardware or software does not accurately or successfully record a student’s clicks. Cheating can occur when a student brings a friend’s clicker to class and clicks on the absent friend’s behalf. This is very possible; clickers are small and can transmit data from a concealed location. Peter White, David Syncox, and Brian Alters12 addressed these concerns in detail and concluded that the prospect of technology failure with modern clicker technology is more of a sensationalized fear than a crippling probability. Cheating, they concluded, is a real concern but can be minimized by educating students about clicker-related academic fraud policies and by staying away from high-stakes clicker activities (such as individual clicker questions that are worth a large proportion of the final course grade).

One of the only significant advantages of an SPWOI approach is the low resource investment it requires from the institution. Some institutions simply cannot acquire the personnel, financial, or infrastructure resources needed to run a loan approach and also feel uneasy about grades-based clicker use. Thus, even though the SPWOI approach shows no measurable gains in student attendance or student participation and was not supported by student or instructor opinion polls, it might remain the approach of choice. In our study, student opinion of an SPWOI model became favorable under the condition that clickers be used in 60 percent of their courses. Many institutions rely on instructor volition to implement clickers into their particular courses; this can slow clicker adoption rates and make the 60 percent adoption target difficult to reach.

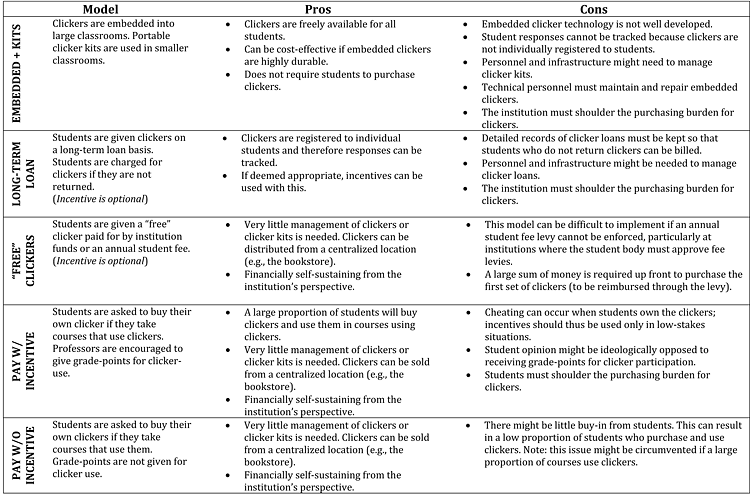

Choosing a Clicker Implementation Approach

Based on our findings, we outline five different clicker implementation approaches corresponding to the different combinations of student, instructor, and institution considerations (see Figure 2 and Table 1). The first hurdle for clicker implementation is a financial one. If an institution has the requisite financial resources or can raise them (for example, through a student fee levy), then this suggests that an institution-pays model can be used. If financial resources are unavailable, then the only viable approaches are those in which students pay. In a perfect scenario, where annual financial resources are abundant, students would simply receive “free” clickers paid for by the institution or as part of a fee levy. This scenario circumvents many of the staffing and upkeep issues that arise with loan scenarios and might also avoid the negative student perception of clickers that can arise when they have to pay for them out of pocket.

Table 1. Feasible Clicker Implementation Approaches

This “free clickers” approach quickly becomes unsustainable if the financial resources are not renewable on an annual basis. In this case, there are two types of loan approaches that might be appropriate. The first is the long-term loan approach. Its major hurdles, as discussed, are infrastructure and personnel. The second is the clicker-kit approach, which might be preferred where class sizes are small and student residency at an institution is short. Clicker kits, when loaned out to instructors on a course basis, have lower staffing requirements than an approach in which clickers are loaned out to individual students. One final option in the institution-pays model is the embedded-clicker approach, in which clicking devices are embedded into the armrest of permanent classroom seating. This is not a novel idea,13 but it could be a very efficient approach for large lecture halls. For example, if a lecture hall has a 400-seat capacity and hosts six courses during a given semester (with two semesters per year), then 400 embedded clickers could serve up to 4,800 students per year. This could become a very cost-effective approach if embedded clickers are durable beyond one or two years.
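The lecture-hall example above is a straightforward product of seats, courses, and semesters. A minimal sketch with a hypothetical function name:

```python
def students_served_per_year(seats: int, courses_per_semester: int,
                             semesters_per_year: int = 2) -> int:
    """Upper bound on student enrollments served by one set of embedded clickers."""
    return seats * courses_per_semester * semesters_per_year


# The article's example: a 400-seat hall hosting six courses per semester.
print(students_served_per_year(400, 6))   # 4800
```

The result is an upper bound. It assumes that every course fills the hall and it counts a student enrolled in two of the courses twice.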

The reality in many institutions is that budgets are tight and financial resources are not available for a clicker implementation initiative. This necessitates a students-pay model. The most significant consideration in this model is whether or not to attach grade-point awards to clicking in class. Cheating issues aside, cues can be taken from students to determine the appropriateness of an incentive versus non-incentive approach. In one engineering class at the studied institution, the instructor gives the students the choice of whether to attach participation grade points to clickers. The option a class chooses varies from year to year, depending on the mix of students and the prevailing attitudes. Some students see these types of grade points as free marks to be taken advantage of, while others see them as a manipulative way to ensure high class attendance.
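The considerations in this section can be read as a sequence of yes/no questions. The sketch below is one possible encoding of that reasoning in Python. It is an interpretation of the prose, not a transcription of Figure 2; the class, field names, and the order of the checks are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Institution:
    """Hypothetical summary of an institution's situation."""
    has_funds: bool                 # can pay for clickers, directly or via a fee levy
    funds_renew_annually: bool      # the money is available every year
    has_loan_infrastructure: bool   # staff and space to store, test, and track loans
    small_classes: bool             # classes mostly under about 150 students
    has_fixed_seating: bool         # large halls with permanent seats
    incentive_acceptable: bool      # grade points for clicker use are acceptable


def students_pay(inst: Institution) -> str:
    """Pick between the two students-pay approaches."""
    return "SPWI" if inst.incentive_acceptable else "SPWOI"


def choose_approach(inst: Institution) -> str:
    """Suggest an implementation approach (assumed ordering of questions)."""
    if not inst.has_funds:
        return students_pay(inst)
    if inst.funds_renew_annually:
        return "free clickers"
    if inst.small_classes:
        return "clicker kit (IPCK)"
    if inst.has_loan_infrastructure:
        return "long-term loan"
    if inst.has_fixed_seating:
        return "embedded clickers"
    # Funds exist, but no distribution method is workable.
    return students_pay(inst)


example = Institution(
    has_funds=False, funds_renew_annually=False, has_loan_infrastructure=False,
    small_classes=False, has_fixed_seating=False, incentive_acceptable=True,
)
print(choose_approach(example))   # SPWI
```

An institution could reorder the checks to reflect its own priorities, for example by considering embedded clickers before loans when most teaching happens in a few large halls.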

Conclusion

Our data suggests that an institution-pays model is preferred over a students-pay model. However, many institutions do not have the financial, infrastructure, or personnel resources to support an institution-pays model, and in these cases, an SPWI approach might be best for the long-term sustainability of clicker use in the classroom. If resource capabilities are limited and an incentive-based clicker option is not possible, SPWOI becomes the only option and might still achieve long-term sustainability if adoption rates are high among instructors.

Acknowledgments

We are grateful to Dr. Richard H. Tomlinson for his generous funding and support, and to Connor Graham and Erin Dodd for their contributions collecting data. Finally, thanks to Faygie Covens for her invaluable insights and input throughout the project.

1. Michelle K. Smith, William B. Wood, Wendy K. Adams, Carl E. Wieman, Jennifer K. Knight, Nancy A. Guild, and Tin Tin Su, “Why Peer Discussion Improves Student Performance on In-Class Concept Questions,” Science, vol. 323, no. 5910, 2009, pp. 122–124.

2. Steven A. Yourstone, Howard S. Kraye, and Gerald Albaum, “Classroom Questioning with Immediate Electronic Response: Do Clickers Improve Learning?” Decision Sciences Journal of Innovative Education, vol. 6, no. 1, 2008, pp. 75–88.

3. Kirsten Crossgrove and Kristen L. Curran, “Using Clickers in Nonmajors- and Majors-Level Biology Courses: Student Opinion, Learning, and Long-Term Retention of Course Material,” The American Society for Cell Biology—Life Sciences Education, vol. 7, no. 1, 2008, pp. 146–154.

4. Jane E. Caldwell, “Clickers in the Large Classroom: Current Research and Best-Practice Tips,” CBE Life Sciences Education, vol. 6, Spring 2007, pp. 9–20; Stephen Draper and Margaret I. Brown, Ensuring Effective Use of PRS: Results of the Evaluation of the Use of PRS in Glasgow University, Department of Psychology, University of Glasgow, 2002; Adrian Kirkwood and Linda Price, “Learners and Learning in the Twenty-First Century: What Do We Know about Students’ Attitudes Towards and Experiences of Information and Communication Technologies that Will Help Us Design Courses?” Studies in Higher Education, vol. 30, no. 3, 2005, pp. 257–274.

5. Kathy Kenwright, “Clickers in the Classroom,” TechTrends, vol. 53, no. 1, 2009, pp. 74–77; Kalyani Premkumar and Cyril Coupal, “Rules of Engagement—12 Tips for Successful Use of ‘Clickers’ in the Classroom,” Medical Teacher, vol. 30, no. 2, 2008, pp. 146–149.

6. Sally A. Gauci, Arianne M. Dantas, David A. Williams, and Robert E. Kemm, “Promoting Student-Centered Active Learning in Lectures With A Personal Response System,” Advances in Physiological Education, vol. 33, no. 1, pp. 60–71.

7. Beth Morling, Meghan McAuliffe, Lawrence Cohen, and Thomas DiLorenzo, “Efficacy of Personal Response Systems (‘Clickers’) in Large, Introductory Psychology Classes,” Teaching of Psychology, vol. 35, 2008, pp. 45–50.

8. Nancy Fjortoft, “Students’ Motivations for Class Attendance,” American Journal of Pharmaceutical Education, vol. 69, no. 1, 2005, pp. 107–112.

9. Yourstone, Kraye, and Albaum, “Classroom Questioning”; and Chandra Turpen and Noah D. Finklestein, “Not all Interactive Engagement Is the Same: Variations in Physics Professors’ Implementation of Peer Instruction,” Physics Education Research, vol. 5, no. 2, 2009, pp. 1–18.

10. Fred A.J. Korthagen, “In Search of the Essence of a Good Teacher: Towards a More Holistic Approach in Teacher Education,” Teaching and Teacher Education, vol. 20, no. 1, 2004, pp. 77–97.

11. Jim Twetten, M.K. Smith, Jim Julius, and Linda Murphy-Boyer, “Successful Clicker Standardization,” EDUCAUSE Quarterly, vol. 30, no. 4, 2007, pp. 1–6.

12. Peter White, David Syncox, and Brian Alters, “Clicking for Grades? Really? Investigating the Use of Clickers for Awarding Grade-Points in Post-Secondary Education Institutions,” Interactive Learning Environments, iFirst article, 2010, pp. 1–11.

13. Joel A. Shapiro, “Electronic Student Response Found Feasible in Large Science Lecture Hall,” Journal of College Science Teaching, vol. 26, no. 6, 1997, pp. 408–412.

© 2011 Peter J.T. White, David G. Delaney, David Syncox, Oscar Avila Akerberg, and Brian Alters. The text of this EQ article is licensed under the Creative Commons Attribution-Share Alike 3.0 license.