Key Takeaways

- Students and faculty use course management systems much more frequently than any other technology.

- Professional students use classroom response devices ("clickers") and Education students use e-portfolios more often than students in other fields use either.

- Faculty in all disciplines rarely use blogs, collaborative editing tools, and games and simulations.

- Students and faculty have different expectations and use technologies in different contexts, which can create tension and misunderstandings between the two groups.

Technology is often considered an enabler, a way of surpassing our natural limitations. In the classroom, educators employ technology with the hope that it will enable students to learn more effectively and teachers to teach more effectively. Although the empirical research is often mixed or contradictory with respect to technology's effectiveness and the reasons for that effectiveness,1 undergraduates expect faculty to use technology and use it well.2

We must untangle several complex ideas to understand this phenomenon:

- First, we must unpack which technologies students and faculty use, and how often.

- Second, we must understand their experiences in the contexts in which they live them. Arguably, one of the most pervasive contexts is the structure of academic disciplines that permeates American higher education.

- Finally, we must explore potential differences in how students and faculty view and use academic technologies — they are two very different populations who use these technologies in very different contexts.

Literature Review

A growing body of research has linked student engagement — a proxy for student learning and involvement closely associated with the National Survey of Student Engagement (NSSE) and related surveys — with technology. Using data from the College Student Experiences Questionnaire (CSEQ), the predecessor to NSSE, George D. Kuh and Shouping Hu found a positive relationship between a student's use of technologies and self-reported gains in science and technology, vocational preparation, and intellectual development.3 In another study, Hu and Kuh also found that students attending more "wired" institutions not only used computers more frequently but reported higher rates of engagement than students at other institutions.4 Data from NSSE have repeatedly indicated that student use of information technology is not only strongly associated with measures of learning and engagement such as academic challenge, active and collaborative learning, and student-faculty interaction but also that students who more frequently use technology report greater gains in knowledge, skills, and personal growth.5

Despite the research that has linked technology with positive educational outcomes and learning, for decades a number of researchers have argued convincingly that any link between technology and learning is indirect at best. Richard Clark, Kenneth Yates, Sean Early, and Kathrine Moulton provided an excellent brief overview of these arguments,6 while Clark provided an in-depth, book-length review.7 These arguments typically focus on the pedagogical changes that inevitably accompany the introduction of technology into the class or classroom, asserting that pedagogical changes are responsible for changes in learning, not the technologies themselves. The federal government's now-defunct Office of Technology Assessment summarized this argument neatly:

…it is becoming increasingly clear that technology, in and of itself, does not directly change teaching or learning. Rather, the critical element is how technology is incorporated into instruction.8

One explanation for the evident link between technology use and positive educational outcomes might be that use of technology often increases time on task. For example, a recent meta-analysis commissioned by the U. S. Department of Education9 examined the relationship between learning outcomes and online and hybrid courses. The authors concluded that both online and hybrid courses have a significant positive impact on learning outcomes, with hybrid courses having a greater impact. In sympathy with the arguments against a simple link between technology and education, the authors cautioned that the "positive effects associated with blended learning should not be attributed to the media, per se."10 In fact, a close reading of the report shows that online and hybrid courses appear to require more time on task than offline courses, a potential explanation for their increased effectiveness. Indeed, NSSE data support this conclusion. In his 2004 analysis of students' online habits, NSSE researcher Thomas Nelson Laird concluded that "students who spend more of their time online for academic purposes are likely to benefit to a greater degree from their collegiate experience than other students."11

Another way to explore the relationship between academic technologies and educational outcomes is to examine the different ways that students and faculty in different academic disciplines use technology. In American higher education, academic disciplines and discipline-based departments are "the foundation of scholarly allegiance and political power, and the focal point for the definition of faculty as professionals."12 Disciplinary affiliation shapes how faculty conceive of knowledge and how they teach,13 how they use technology in their teaching,14 and the impact of technology on their students.15

Our study extends the research into faculty and student use of contemporary academic technologies by asking five questions:

- How often do students report using academic technologies?

- How often do faculty report using academic technologies?

- Do students in different disciplines use these technologies more or less than their peers?

- Do faculty in different disciplines use these technologies more or less than their peers?

- Are there noticeable differences between how often students and faculty use these technologies?

Methodology

Our study examined responses to a pair of surveys — the National Survey of Student Engagement (NSSE) and the Faculty Survey of Student Engagement (FSSE) — administered in the spring of 2009. Eighteen American colleges and universities participated in both surveys, administering a matched set (student and faculty) of additional questions focused on academic technology and communication media.

Of the 18 participating institutions, eight are public institutions, eight are private nonprofit institutions, and two are for-profit institutions. They range from relatively small baccalaureate colleges through medium-sized master's colleges and universities and special-focus institutions to large research universities; the average enrollment was 5,300 students. Two of the institutions are Historically Black Colleges or Universities (HBCUs).

This analysis includes only the 4,503 randomly selected senior student respondents. Unlike their classmates in the first year of study, senior students not only have a declared major but likely have taken and are taking many classes in their discipline. Similarly, only the 747 faculty members who teach primarily upper-division courses or senior students were included, as they tend to teach courses that more clearly demonstrate differences between disciplines. Also, faculty respondents were nearly evenly divided between the ranks of assistant professor, associate professor, and professor, with only 13 percent of respondents having different titles (lecturer, instructor, etc.).

Student respondents were:

- 68.2 percent female

- 61.7 percent white

- 76.7 percent full-time

Faculty respondents were:

- 57.4 percent male

- 72 percent white

- 41 percent 55 years old or older; 7 percent younger than 35

The analyses employed no other controls because this study seeks to understand the student and faculty experiences without making predictions or describing causal relationships. Not only do the number of disciplines explored and number of faculty surveyed make it difficult to perform complex analyses (the cell sizes quickly become very small, for example), but we wanted to make this study readable and easily applied to practice. Although the latent social, cultural, and economic causes are interesting and important, they are complex and beyond the scope of this study and its two brief surveys. Finally, the list of technologies is not exhaustive — surveys must remain succinct and focused for people to respond.

In addition to comparing means and identifying homogeneous subgroups using Tukey's post-hoc test, we performed cluster analysis on both student and faculty responses, grouping students into four clusters: High use, Medium use, Low use, and No use of technology. Similarly, we grouped the faculty into three clusters: High use, Low use, and No use. For both students and faculty, everyone except those in the No use groups made at least some use of course management systems.
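To make the clustering step concrete, here is a minimal sketch of how respondents could be grouped by their usage patterns. The article does not say which clustering algorithm was used, so k-means (Lloyd's algorithm) is an assumption here, and the respondents, the three-tool subset, and the starting centroids are all hypothetical.

```python
import math

# Hypothetical respondents (not study data): answers on the 4-point scale
# (1 = Never ... 4 = Very often) for three tools: CMS, clickers, e-portfolios.
responses = [
    (1, 1, 1), (1, 1, 1),             # no use of anything
    (3, 1, 1), (4, 1, 1), (3, 1, 2),  # CMS and little else
    (4, 3, 3), (3, 4, 3), (4, 4, 4),  # broad use of several tools
]

# Hypothetical starting centroids, one per intended cluster.
CLUSTER_NAMES = ["No use", "Low use", "High use"]
initial_centroids = [(1, 1, 1), (3, 1, 1), (4, 4, 4)]


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: assign each point to its nearest centroid,
    recompute each centroid as the mean of its members, and repeat
    until the centroids stop moving."""
    labels = []
    for _ in range(max_iter):
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        new_centroids = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(col) / len(members) for col in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


labels, centroids = kmeans(responses, initial_centroids)

# Share of respondents in each cluster, analogous to the cluster percentages
# the article reports in its figures.
for k, name in enumerate(CLUSTER_NAMES):
    share = 100 * labels.count(k) / len(labels)
    print(f"{name}: {share:.1f}%")
```

In practice the number of clusters and their labels would be chosen after inspecting the solution; they are fixed in advance here only to keep the sketch short.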

Results

The first and second research questions asked how often students and faculty used academic technologies. Of the 10 technologies on this survey, students used only course management systems (CMSs) frequently, with the average response being "often." The other technologies were used by some students but with low frequency and mixed variances; for most of them, "never" was the majority response. Faculty reported similar results, with relatively frequent use of CMSs but much lower use of the other technologies. Table 1 presents the responses of all senior students and Table 2 those of faculty teaching upper-division courses, with responses converted to a 4-point scale (Very often = 4, Often = 3, Sometimes = 2, and Never = 1). For both students and faculty, most mean scores are relatively close to the lowest score of 1, indicating that students and faculty overall made virtually no use of those technologies. The last column of each table contains the Cohen's d effect size for the difference between student and faculty mean scores; a positive value in Table 1 indicates a higher student mean, and the signs are reversed in Table 2.
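The scale conversion described above can be sketched in a few lines. The answers below are hypothetical, and the use of the sample standard deviation is an assumption, since the article does not say which variant was reported.

```python
from statistics import mean, stdev

# Mapping from response labels to the 4-point scale given in the text.
SCALE = {"Never": 1, "Sometimes": 2, "Often": 3, "Very often": 4}

# Hypothetical answers from six respondents about a single technology.
answers = ["Very often", "Often", "Often", "Sometimes", "Never", "Never"]

scores = [SCALE[a] for a in answers]
avg = mean(scores)
sd = stdev(scores)  # sample standard deviation (assumed variant)
never_share = scores.count(SCALE["Never"]) / len(scores)

print(f"mean = {avg:.2f}, SD = {sd:.2f}, share answering Never = {never_share:.0%}")
```

A mean close to 1 combined with a large share of "Never" answers is the pattern the tables show for most technologies other than the CMS.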

Table 1. Student Technology Use

| Technology | N | Mean | Standard Deviation | Cohen's d Effect Size |
| --- | --- | --- | --- | --- |
| CMS | 4,106 | 3.1 | 1.0 | .17 |
| Clickers | 3,626 | 1.6 | 1.0 | .35 |
| E-portfolios | 3,797 | 1.5 | 0.9 | .12 |
| Blogs | 4,079 | 1.3 | 0.7 | .15 |
| Collaborative editing tools | 3,980 | 1.8 | 1.1 | .68 |
| Video | 4,220 | 1.6 | 0.9 | .23 |
| Games | 4,094 | 1.2 | 0.6 | .00 |
| Surveys | 4,057 | 1.4 | 0.7 | .31 |
| Video or voice conferencing | 4,119 | 1.2 | 0.6 | .00 |
| Plagiarism detection | 4,048 | 1.4 | 0.8 | –.12 |

Table 2. Faculty Technology Use

| Technology | N | Mean | Standard Deviation | Cohen's d Effect Size |
| --- | --- | --- | --- | --- |
| CMS | 710 | 2.9 | 1.3 | –.17 |
| Clickers | 667 | 1.3 | 0.7 | –.35 |
| E-portfolios | 688 | 1.4 | 0.8 | –.12 |
| Blogs | 694 | 1.2 | 0.6 | –.15 |
| Collaborative editing tools | 683 | 1.2 | 0.6 | –.68 |
| Video | 699 | 1.4 | 0.8 | –.23 |
| Games | 692 | 1.2 | 0.5 | .00 |
| Surveys | 693 | 1.2 | 0.6 | –.31 |
| Video or voice conferencing | 692 | 1.2 | 0.6 | .00 |
| Plagiarism detection | 695 | 1.5 | 0.9 | .12 |
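The effect sizes in the last column can be checked against the means and standard deviations in Tables 1 and 2. The article does not state which pooled standard deviation it used. The sketch below assumes the equal-weight form, the square root of the average of the two variances, because that form appears consistent with the published values when computed from the rounded table entries. The function and variable names are illustrative.

```python
import math

# (technology, student mean, student SD, faculty mean, faculty SD),
# copied from Tables 1 and 2.
ROWS = [
    ("CMS", 3.1, 1.0, 2.9, 1.3),
    ("Clickers", 1.6, 1.0, 1.3, 0.7),
    ("E-portfolios", 1.5, 0.9, 1.4, 0.8),
    ("Blogs", 1.3, 0.7, 1.2, 0.6),
    ("Collaborative editing tools", 1.8, 1.1, 1.2, 0.6),
    ("Video", 1.6, 0.9, 1.4, 0.8),
    ("Games", 1.2, 0.6, 1.2, 0.5),
    ("Surveys", 1.4, 0.7, 1.2, 0.6),
    ("Video or voice conferencing", 1.2, 0.6, 1.2, 0.6),
    ("Plagiarism detection", 1.4, 0.8, 1.5, 0.9),
]


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d using an equal-weight pooled SD (an assumed formula;
    the article does not say how the two SDs were pooled)."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Student minus faculty, so positive values mean students reported more use.
effect_sizes = {
    name: cohens_d(s_mean, s_sd, f_mean, f_sd)
    for name, s_mean, s_sd, f_mean, f_sd in ROWS
}

for name, d in effect_sizes.items():
    print(f"{name:30s} d = {d:+.2f}")
```

By Cohen's conventional benchmarks (roughly 0.2 small, 0.5 medium, 0.8 large), only the collaborative editing tools difference exceeds the medium threshold, while clickers and surveys fall in the small range.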

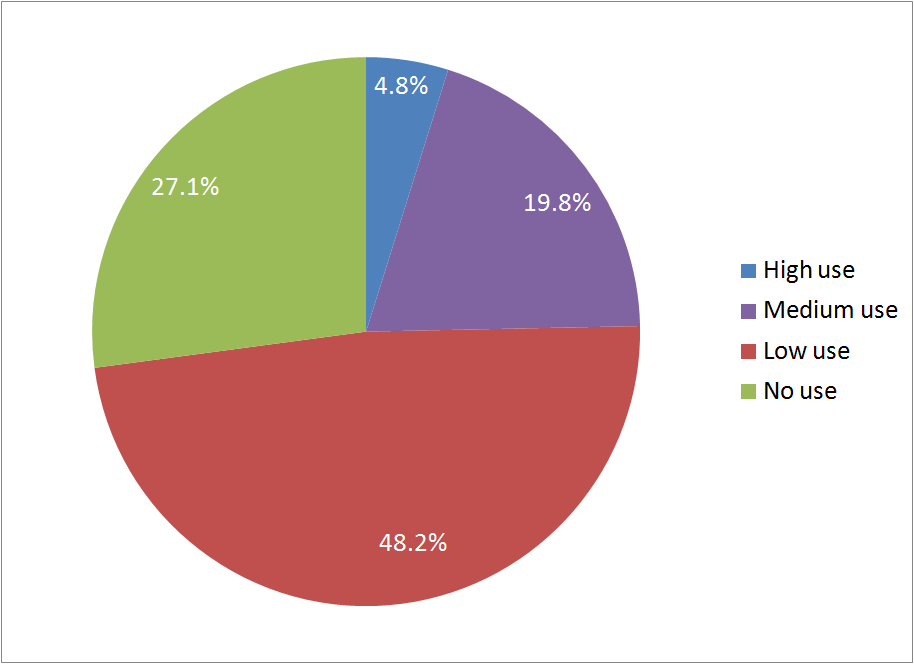

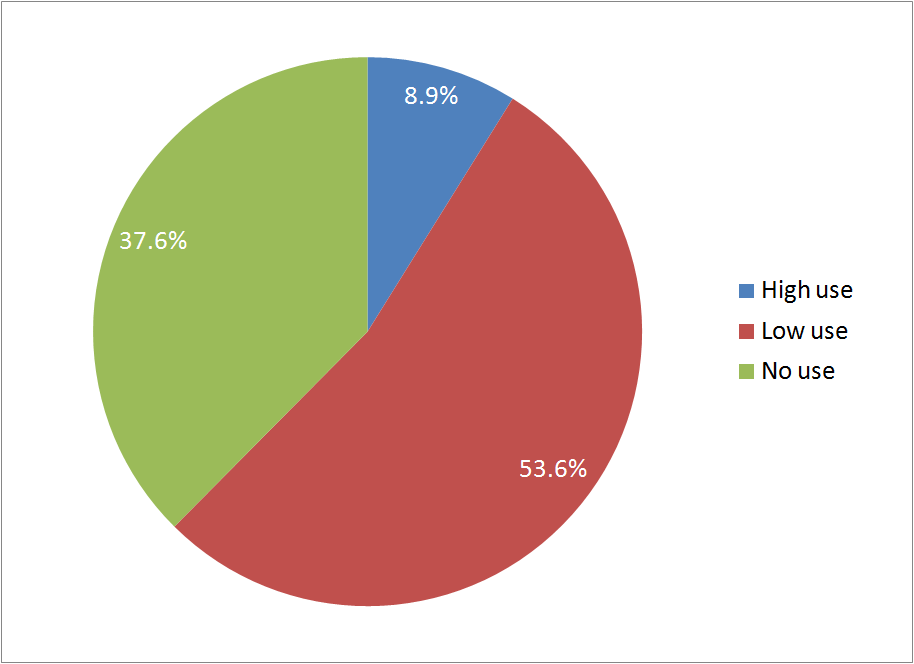

Another way of describing the frequency with which students and faculty use these academic technologies is through cluster analysis, as described in the methodology section. Figures 1 and 2 show the percentage of students and faculty in these clusters. High and Medium use in Figure 1 indicate broad use of multiple technologies by students, while High use in Figure 2 indicates broad use of multiple technologies by faculty. The plurality of students (48.2 percent) and majority of faculty (53.6 percent) are in the Low use clusters, indicating that the only technology used was their CMS — an unsurprising finding given that only the CMS has a mean above 2.0 (3.1 for students and 2.9 for faculty, both indicating that on average students and faculty used their CMSs often).

Figure 1. Student Use of Academic Technologies

Figure 2. Faculty Use of Academic Technologies

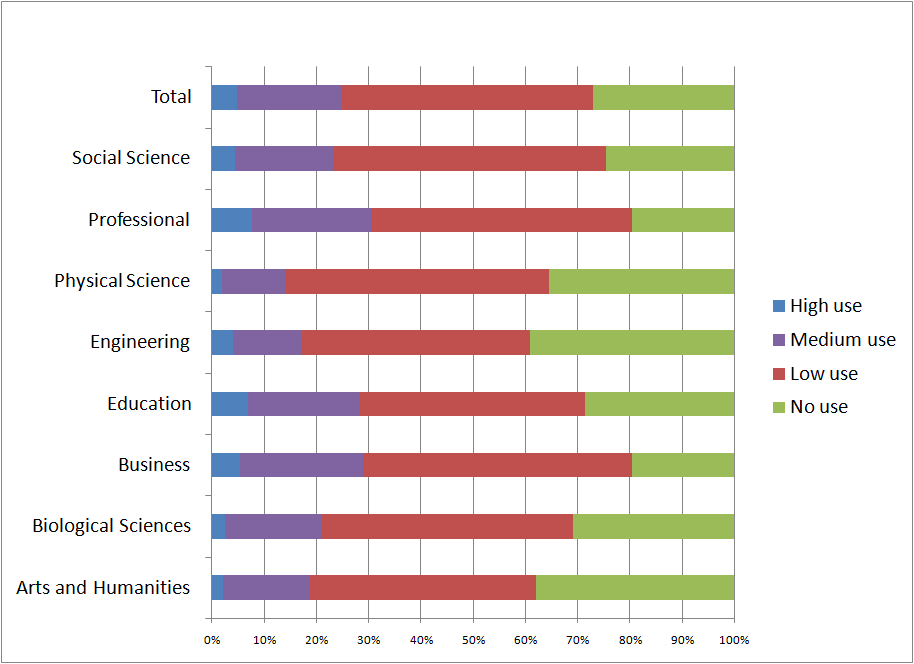

Because differences in discipline shape faculty's pedagogical choices, we chose to view uses of academic technologies across disciplines. The third research question asked if students in different disciplines use these technologies more or less than their peers. Differences between disciplines become apparent once the responses are compared between students with different majors; post-hoc analysis using Tukey's test (alpha = .05) identified multiple homogeneous groups for each of the technologies. These homogeneous groups seem to indicate that students in Professional, Business, and Education majors used these technologies significantly more than their peers in other disciplines, a finding supported by observing that only these three groups have more than 25 percent of their respondents in the High or Medium use clusters, as shown in Figure 3. In particular, note that Professional students use classroom response devices ("clickers") and Education students use e-portfolios significantly more than students in all other disciplines. As before, High and Medium use indicate broad use of multiple technologies, whereas Low use indicates use of only CMSs. For reference, the entire group of student respondents is shown at the top of the figure.

Figure 3. Student Academic Technology Use Across Disciplines

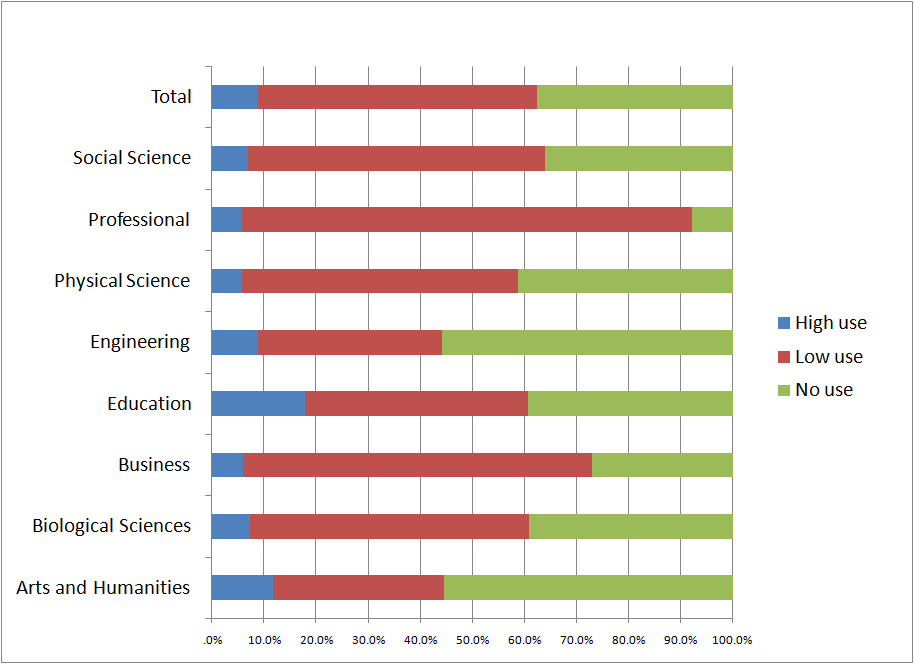

The fourth research question asked if faculty in different disciplines use these technologies more or less than their peers. As with the student respondents, differences are evident in the faculty responses, although there are fewer of them. Examination of the homogeneous groups indicates that faculty of all disciplines reported using blogs, collaborative editing tools, and games with similar frequencies. For the technologies that faculty in specific disciplines used more, the patterns resembled those in the student responses: Professional and Education faculty used many of these technologies more often than other faculty.

Faculty of all disciplines made uniformly low use of some technologies: blogs, collaborative editing tools, and games and simulations. The mean frequency of use for these tools is near "never" (1.0) for faculty in all disciplines. Moreover, the means for the disciplines do not differ significantly from one another at the .05 level. In other words, the average frequencies of use of these three technologies are virtually indistinguishable across the disciplines.

The picture becomes more complex, however, when examining the faculty clusters in Figure 4. Education faculty stand out as clear leaders in the use of technology. Although many faculty in the Arts and Humanities disciplines fall into the High use cluster, they are also the second largest group in the No use cluster, indicating a bifurcation where these faculty either used multiple technologies or none. Professional faculty, on the other hand, made relatively low use of each technology except for their CMSs, which nearly all of them used with some frequency. For reference, the entire group of faculty respondents appears at the top of Figure 4.

Figure 4. Faculty Academic Technology Use Across Disciplines

The final research question asked if there are noticeable differences between how often students and faculty use these technologies. As indicated by the mean scores and their distribution among clusters, students indicated more frequent use of virtually all of these technologies than faculty. This remains true when examining the significant differences between disciplines and when comparing the student and faculty clusters. The only exception is plagiarism detection tools, a technology that faculty reported using slightly more than students. Note that although these differences exist, the effect size differences in Tables 1 and 2 show that many of the differences are trivial. Students report using surveys and clickers slightly more often than faculty, but the largest difference is clearly in students' and faculty's use of collaborative editing tools. Although such a direct comparison is not necessarily advisable because the students were not matched with their faculty, it is an interesting finding to explore in future research. Despite these differences, however, the mean scores of use of these technologies still indicate that, aside from CMSs, these technologies are not widely used by faculty or students.

Discussion

Generally, three themes stand out in these findings:

- Students and faculty of all disciplines used their institution's CMS much more frequently than any other specific technology or tool. This is good news considering the ubiquity and expense of these systems. The consistency is also heartening when juxtaposed with students' professed desire for frequent and consistent use of CMSs in their courses.16 However, that excitement might be dampened by remembering that some technologies — blogs, collaborative editing tools, and games and simulations — are uniformly unused by faculty in all disciplines.

- Students reported noticeably more use of some technologies than faculty. This might reflect a simple difference in perception or an artifact of the methodology of this study, but those explanations seem incomplete and unsatisfactory. It is more likely that students use technologies more than faculty require or expect them to. For example, students in all disciplines reported using collaborative editing tools more often than faculty. Those tools might not be required by faculty but used by students outside of class to collaborate and complete group assignments.

- Finally, the consistently high frequency of technology use reported by students and faculty in the Professional, Business, and Education disciplines seems to reflect values of those professions, resources available to them, and the specific content of the survey instrument. For example, Education students and faculty both reported making more frequent use of e-portfolios than any other discipline, a usage that can be understood given the high importance placed on effective assessment of learning outcomes in education programs. It is also possible that some disciplines with greater access to financial resources simply have greater access to technologies. Finally, the technologies on the survey instrument may be more amenable to these interdisciplinary and applied groups of disciplines.

Implications

Instructional technologists, faculty developers, IT support staff, and others can learn much from this study. First, that most faculty and students use CMSs can be celebrated as a victory of sorts given their complexity and costs. However, these numbers might be inflated because their use is required on some campuses. Even so, faculty and students in nearly all disciplines find the use of these tools normal and expected, particularly in the Business and Professional disciplines. IT practitioners should begin to look for additional CMS features or different tools that students and faculty in other disciplines, such as Physical Science and Arts and Humanities, could benefit from. Similarly, IT practitioners should incorporate other technologies (blogs, wikis, etc.) into the CMS to promote greater awareness and faculty use of them.

Second, these data clearly show pockets of technological experimentation, especially within Education and the Arts and Humanities. Although these disciplines also have many faculty and students making low or no use of technology, many outliers are on the leading edge of use and experimentation with technology. This becomes particularly evident when looking at specific technologies such as e-portfolios (especially popular in Education) and CMSs (most used in Business and Professional disciplines). Faculty in these disciplines should be sought out for their expertise and experience, especially when practitioners seek successful examples of technology in the classroom or allies in the development of new capabilities.

Finally, practitioners should note the differences in student and faculty views of and experiences with technology. That students reported using some technologies more often than faculty highlights a gulf that practitioners might have encountered many times. However, these data also remind us that some faculty use more diverse types of technology more often than some students. Although the general trend is that students use technology more than faculty, the picture is complicated and nuanced, with much of the detail yet to be filled in. IT practitioners will want to keep in mind that students and faculty may have different needs and uses for academic technologies and that different tools might work better for the two groups. When implementing or offering a new academic technology, it is important to evaluate these tools from both the students' and the faculty's perspectives.

Limitations

The greatest limitation of this study is that the two surveys were administered to two different groups of participants in different contexts. Students responded to NSSE in the context of all the classes they took in the semester or quarter during which the survey was administered (typically February through May). Faculty, on the other hand, responded either in the context of a typical student or class they taught in the current school year, depending on the survey option chosen by their institution. Directly comparing these responses, even with the careful selection employed in this study, presents some obvious limitations and challenges.

Additionally, the technology questions asked in the supplemental survey attached to NSSE and FSSE were not tested for reliability and validity nearly as heavily as the questions in the main NSSE and FSSE surveys. Although we are confident of their validity, these questions remain new and relatively untested. Note also that the technologies asked about in this study do not encompass all educational technologies or tools used for educational purposes. It is possible that many students and faculty use consumer tools for academic purposes in ways we did not consider. Also, because these survey items were written early in 2008, newer technologies, such as Twitter, were not considered likely to be used in educational settings.

Finally, the participants in these surveys were not selected completely at random. At each institution, the student participants were randomly selected or part of a census. However, each institution selected its own sampling strategy for faculty participants. More importantly, the institutions invited to participate in this study were intentionally selected to maximize diversity among institutions and respondents. Institutions could choose not to administer these additional questions, potentially limiting the participants to those at institutions with a particular interest in technology. Perhaps as a result of this institutional self-selection or because we focused on faculty teaching upper-division courses, the faculty who participated in this study are almost entirely tenure-track. This could influence their willingness to use academic technologies, and it certainly limits the generalizability of this study, particularly as the ranks of contingent faculty continue to grow. It is also important to note that all institutions in this study were four-year baccalaureate-granting institutions, so results may not be generalizable to other institution types such as the nation's many community colleges and other two-year institutions.

Future Research

The descriptive statistics presented here represent only the beginning of this line of research. Because the study found CMSs to be the dominant technology used by both faculty and students, how students and faculty use these tools deserves further investigation. Future studies that employ more focused surveys and other methods of research may go beyond the descriptive findings of this study to explore relationships between disciplines and personal and institutional characteristics. Moreover, future studies employing different methods may be able to explore causation, that is, why particular disciplines use (or do not use) particular technologies. This is important if we are to avoid idolizing or condemning disciplines simply for their use or non-use of academic technologies. Building on this study's finding that students appear to use technologies more than faculty require, future research should also focus on apparent differences between student and faculty uses and views of technology, particularly student use of technologies not assigned or required by faculty. Finally, further research along the same lines as this study will always be necessary simply to explore the uses of new technologies as they become available and grow in popularity (for example, microblogging, location-aware mobile technologies, and tablet computers).

Conclusion

Not only do students and faculty use some technologies at different frequencies, but students and faculty in different disciplines also use different technologies at different frequencies. Hence a "one-size-fits-all" approach to providing and supporting academic technologies will not suffice. Moreover, students and faculty have different expectations and use technologies in different contexts, which may create tension and misunderstandings between these two groups. Although this study confirms the general belief that students use technology more often than faculty, it also reminds us that the academic technology landscape is complicated and ever changing, always challenging our assumptions and demanding more context and deeper examination.

1. Robert M. Bernard, Philip C. Abrami, Yiping Lou, Evgueni Borokhovski, Anne Wade, Lori Wozney, Peter Andrew Wallet, Manon Fiset, and Binru Huang, "How Does Distance Education Compare with Classroom Instruction? A Meta-Analysis of the Empirical Literature," Review of Educational Research, vol. 74, no. 3 (September 2004), pp. 379–439; Traci Sitzmann, Kurt Kraiger, David Stewart, and Robert Wisher, "The Comparative Effectiveness of Web-Based and Classroom Instruction: A Meta-Analysis," Personnel Psychology, vol. 59, no. 3 (Autumn 2006), pp. 623–664; and Barbara Means, Yukie Toyama, Robert Murphy, Marianne Bakia, and Karla Jones, Evaluation of Evidence-Based Practices in Online Learning: A Meta-Analysis and Review of Online Learning Studies (Washington, DC: U. S. Department of Education, May 2009).

2. Shannon D. Smith, Gail Salaway, Judith Borreson Caruso, with an introduction by Richard N. Katz, The ECAR Study of Undergraduate Students and Information Technology, 2009 (Research Study, vol. 6) (Boulder, CO: EDUCAUSE Center for Analysis and Research, 2009).

3. George D. Kuh and Shouping Hu, "The Relationships Between Computer and Information Technology Use, Student Learning, and Other College Experiences," Journal of College Student Development, vol. 42, no. 3 (May/June 2001), pp. 217–232.

4. Shouping Hu and George D. Kuh, "Computing Experience and Good Practices in Undergraduate Education: Does the Degree of Campus 'Wiredness' Matter?" Education Policy Analysis Archives, vol. 9, no. 49 (November 24, 2001).

5. National Survey of Student Engagement, "NSSE Viewpoint: Converting Data Into Action: Expanding the Boundaries of Institutional Improvement," November 2003, Center for Postsecondary Research, Indiana University Bloomington; National Survey of Student Engagement, "Engaged Learning: Fostering Success for All Students," Annual Report 2006, Center for Postsecondary Research, Indiana University Bloomington; and Pu-Shih Daniel Chen, Amber D. Lambert, and Kevin R. Guidry, "Engaging Online Learners: The Impact of Web-Based Learning Technology on Student Engagement," Computers & Education, vol. 54, no. 4 (2010), pp. 1222–1232.

6. Richard E. Clark, Kenneth Yates, Sean Early, and Kathrine Moulton, "An Analysis of the Failure of Electronic Media and Discovery-Based Learning: Evidence for the Performance Benefits of Guided Training Methods," Chapter 8 in Handbook of Improving Performance in the Workplace, Volume I, Instructional Design and Training Delivery, Kenneth H. Silber and Wellesley R. Foshay, eds. (Washington, DC: International Society for Performance Improvement, December 2009), pp. 263–297.

7. Richard E. Clark, ed., Learning from Media: Argument, Analysis, and Evidence, a volume in the series Perspectives in Instructional Technology and Distance Learning, Charles Schlosser and Michael Simonson, series eds. (Greenwich, CT: Information Age Publishing, 2001).

8. U. S. Congress, Office of Technology Assessment, Teachers and Technology: Making the Connection (Washington, DC: U. S. Government Printing Office, April 1995), p. 57.

9. Means et al., Evaluation of Evidence-Based Practices in Online Learning.

10. Ibid., p. ix.

11. Thomas F. Nelson Laird, "Surfin' with a Purpose: Examining How Spending Time Online Is Related to Student Engagement," Student Affairs On-Line, vol. 5, no. 3 (Summer 2004).

12. Judith M. Gappa, Ann E. Austin, and Andrea G. Trice, Rethinking Faculty Work: Higher Education's Strategic Imperative (San Francisco, CA: Jossey-Bass, 2007).

13. Michael Prosser and Keith Trigwell, Understanding Learning and Teaching: The Experience in Higher Education (Malabar, FA: Open University Press, 1999); and Ruth Neumann, "Disciplinary Differences and University Teaching," Studies in Higher Education, vol. 26, no. 2 (2001), pp. 135–146.

14. Michael D. Waggoner, "Disciplinary Differences and the Integration of Technology into Teaching," Technology, Pedagogy and Education, vol. 3, no. 2 (1994), pp. 175–186.

15. Chen-Lin C. Kulik, James A. Kulik, and Peter A. Cohen, "Instructional Technology and College Teaching," Teaching of Psychology, vol. 7, no. 4 (1980), pp. 199–205.

16. Smith, Salaway, and Caruso, The ECAR Study of Undergraduate Students.

© 2010 Kevin R. Guidry and Allison BrckaLorenz. The text of this article is licensed under the Creative Commons Attribution-Noncommercial-Share Alike 3.0 license.