Key Takeaways

- Applying the principles of business intelligence analytics to academia promises to improve student success, retention, and graduation rates and demonstrate institutional accountability.

- The Signals project at Purdue University has delivered early successes in academic analytics, prompting additional projects and new strategies.

- Significant challenges remain before the predictive nature of academic analytics meets its full potential.

Academic analytics helps address the public’s desire for institutional accountability with regard to student success, given widespread concern over the cost of higher education and the difficult economic and budgetary conditions prevailing worldwide. Purdue University’s Signals project applies the principles of analytics widely used in business intelligence circles to the problem of improving student success within a course and, hence, improving the institution’s retention and graduation rates over time. (See “Emergence of Academic Analytics.”) In its early stages, the Signals project has demonstrated the potential of academic analytics, and those early efforts have led to additional projects to develop:

- Student success algorithms customized by course

- Intervention messages sent to students

- New strategies for identifying students at risk

The premise behind Signals is fairly simple: use the data collected by instructional tools to determine in real time which students might be at risk, as indicated in part by their effort within a course. Through analytics, the institution mines the large data sets these tools continually collect and applies statistical techniques to predict which students might be falling behind. The goal is to produce “actionable intelligence”: in this case, guiding students to appropriate help resources and explaining how to use them. Early reviews by administrators, faculty, and students have been positive, as has empirical data on the system’s impact.

The Signals system is based on a Purdue-developed student success algorithm (SSA) designed to warn students of potential problems in a course as early as the second week of the semester, using near real-time status updates on their performance and effort. Each update gives the student detailed, positive steps to take to avert trouble. (Sample student dashboards [http://www.itap.purdue.edu/tlt/signals/demo/] are available online.)

Today, more than 11,000 students have been impacted by the Signals project, and more than 50 instructors have used Signals in at least one of their courses. These early results have led to a renewed discussion on campus of how best to identify students at risk and help them. As the discussion continues, the Signals project will evolve to include better models, more effective intervention strategies, and rigorous assessment approaches.

By no means is Purdue unique in its interest in academic analytics. Institutions across the world, large and small, public and private, research and teaching, have begun experimenting with strategies for modeling data from various sources in an effort to find actionable data to support their goals. This article offers a snapshot of our experience at Purdue.

Toward Accountability

Since the introduction of television to the classroom, advocates of “educational technology” have promised that it will improve teaching and learning. While research on the impact of technology on learning has yielded confusing, often conflicting results, technology has nonetheless become enmeshed in the learning process, from computerized instructional materials to students searching for information with Google.

Technology also has become a major component of the instructional budget. Computer labs, technology-enhanced classrooms, course management systems (CMSs), collaboration tools, and other technologies account for much of the growth in IT budgets during the past 20 years. Institutions also have invested heavily in providing support resources on campus, including educational technologists, instructional designers, assessment specialists, and other staff.

Moreover, technology provides the basis for a new level of scrutiny from higher education stakeholders including students, parents, government leaders, and accreditation agencies. Institutional data such as graduation rate, faculty-to-student ratio, and average financial aid is regularly requested, reported, and compared on websites, in news releases, and in legislative debates, among other forums. Institutional data digests can now be found on nearly every institution’s web pages, and new surveys ranking “top” programs are released and promoted on a regular basis.

The next decade promises to continue the exciting changes in how faculty, students, and institutions integrate technology into the teaching and learning process. In the current economic climate, however, demonstrating how technology investment improves student learning will be an institutional imperative. Through the use of academic analytics, institutions have the potential to create actionable intelligence on student performance, based on data captured from a variety of systems. The goal is simple — improve student success, however it might be defined at the institutional level. The process of producing analytics frequently challenges established institutional processes (of data ownership, for example), and initial analytics efforts often lead to additional questions, analysis, and implementation challenges.

Academic Analytics and the Instructional Environment

In 2007, John Campbell, Peter DeBlois, and Diana Oblinger1 introduced academic analytics as a tool to respond to increased pressures for accountability in higher education, especially in the areas of improved learning outcomes and student success. The instructional environment already has a significant data set ripe for analysis, prediction, and action. Data is actively collected in a variety of instructional systems including CMSs, student information systems (SISs), audience response systems, library systems, streaming media service systems, and much more.

Although a large amount of data is available, significant institutional challenges impede the implementation of analytics efforts:

- First, data is frequently maintained in different locations. Combining data into a common location is inhibited by different technology standards, lack of unique identifiers, and organizational challenges to the ownership and use of the data.

- Second, once the data is pooled, the nature of the instructional process may make it difficult to analyze — different instructors use technology in different ways. An instructional tool that one instructor considers key might not be important for another instructor teaching the same course.
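To make the first obstacle concrete: pooling works only when records from different systems share an identifier. The sketch below is a minimal illustration, assuming both systems export per-student rows keyed by a common `student_id`; the function and field names are hypothetical and do not describe Purdue’s systems. Rows with no usable identifier are set aside for review rather than guessed at.

```python
# Hypothetical sketch: pool per-student rows from an SIS export and a CMS
# export that share a common identifier. Field names are illustrative only.
from typing import Dict, List, Tuple

Row = Dict[str, object]


def pool_records(sis_rows: List[Row], cms_rows: List[Row],
                 key: str = "student_id") -> Tuple[Dict[object, Row], List[Row]]:
    """Merge CMS rows into SIS rows on `key`.

    Returns the merged rows (indexed by identifier) and the CMS rows that
    could not be linked to any SIS record.
    """
    # Copy each SIS row so the caller's data is not modified.
    merged: Dict[object, Row] = {row[key]: dict(row) for row in sis_rows}
    unmatched: List[Row] = []
    for row in cms_rows:
        target = merged.get(row.get(key))
        if target is None:
            # Missing or unknown identifier: keep it aside for review.
            unmatched.append(row)
        else:
            target.update(row)
    return merged, unmatched
```

The unmatched list is the practical cost of the "lack of unique identifiers" problem: every row in it is data the institution collected but cannot yet use.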

Academic analytics can help shape the future of higher education, just as evolving technology will enable new approaches to teaching and learning. The fundamental principles of education as outlined by Arthur Chickering and Zelda Gamson2 will not change: effective teachers will continue to interact with their students; students will continue to work cooperatively and be accountable for their own education; feedback will continue to be important; and expectations will continue to grow. However, analytics can influence the way faculty and students go about their business. Consider the following scenarios, which academic analytics could make possible.

Scenario 1 [http://www.itap.purdue.edu/tlt/signals/demo/scenario1/]: The technology of academic analytics will provide better and more actionable information. This information can, in real time, inform faculty interventions to increase student success in their courses.

Scenario 2 [http://www.itap.purdue.edu/tlt/signals/demo/scenario2/]: Academic analytics will give students information that empowers them to be more proactive about their own learning.

Scenario 3 [http://www.itap.purdue.edu/tlt/signals/demo/scenario3/]: Academic analytics will allow advisors to see real-time performance across classes, early enough in the semester to intervene. When students use help resources, those resources can better track usage and offer more refined material as needed.

These scenarios demonstrate the potential that academic analytics has to positively affect student success. If the turbulent transition from high school to college can be bridged by a technology that informs multiple levels of a college’s faculty and staff about a student’s progress and enables them to intervene quickly and effectively, the chances of a student’s early academic career being successful increase. Improvements in retention and four-year graduation rates might reasonably follow. Academic analytics could thus support a higher education institution’s ability to demonstrate accountability to its various stakeholders.

Academic Analytics at Purdue

In 2003, Purdue began considering ways to use its wealth of institutional data to improve the student experience,3 a cornerstone of the university’s strategic plan. Initial work on academic analytics complemented existing programs and built on earlier efforts to:

- Identify students at risk

- Provide a variety of instructional services, from help desks to tutoring

- Restructure student orientation programs

The Purdue Early Warning System (PAWS) evolved over years of work, beginning with paper forms, then migrating to optical “bubble sheets,” and finally moving to the web. The goal was to engage faculty in identifying students at risk. Each faculty member was asked to submit midterm results; based on this information, the institution could engage a student and provide the necessary help to support his or her academic success. While generally effective, PAWS had significant limitations. First, warnings frequently came too late in the term to assist students in a meaningful way. Second, the approach made it difficult for students to connect their efforts with the changes in behavior they needed to make. Third, the warnings tended to be general in nature and did not include the resources available for a specific course.

At this juncture, a team of student success and IT professionals at Purdue began work on the system now called Signals. At the time, the technology market offered many software products designed to function as early warning systems. Depending on the product, these systems drew on a wide variety of input variables: students were flagged as “at risk” based on a combination of demographic and personal information and their grade performance in class. While all these factors help predict student success, we saw a gap in what these models could accomplish because they did not account for student behavior and effort.

Academic analytics showed promise in making the evaluation of risk more accurate and meaningful. In our view, a student’s risk status is in constant flux throughout his or her academic career at Purdue. While demographic information, academic preparation (represented by admissions data), and performance (indicated by grades in the course) are important, we saw a vital need to include the highly important, dynamic variable of behavior. The Signals model attempts to account for these factors by mining real-time data.

A Brief History of Signals

Because of technical limitations, the Signals pilot in fall 2007 required manual calculation of the SSA, which limited the number of participants. During these formative stages, the SSA was a weighted combination of performance (points earned in the course) and effort (time spent using various CMS tools and in help-seeking behavior). A major objective was to develop a statistical proof of concept based on the historical data models Purdue’s John Campbell created.4
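A weighted combination of performance and effort can be sketched in a few lines. This is a minimal illustration only: the feature names, the caps, and the weights (0.6, 0.3, 0.1) are assumptions invented for the example, not the SSA’s actual inputs or coefficients, which came from Campbell’s historical models.

```python
# Hypothetical sketch of a weighted "performance plus effort" score in the
# spirit of the SSA pilot. Feature names, caps, and weights are assumptions
# chosen for illustration; they are not Purdue's actual model.
from dataclasses import dataclass


@dataclass
class CourseActivity:
    points_earned: float        # points earned so far in the course
    points_possible: float      # points available so far
    cms_minutes: float          # time the student spent in CMS tools
    peer_median_minutes: float  # median CMS time across the same course
    help_visits: int            # recorded help-seeking events


def success_score(a: CourseActivity, w_perf: float = 0.6,
                  w_effort: float = 0.3, w_help: float = 0.1) -> float:
    """Return a score in [0, 1]; higher means lower predicted risk.

    Each component is scaled to [0, 1], so with weights summing to 1 the
    result stays in [0, 1].
    """
    # Share of available points earned, capped at 1 (e.g., extra credit).
    performance = (min(a.points_earned / a.points_possible, 1.0)
                   if a.points_possible > 0 else 0.0)
    # Effort relative to classmates, capped so heavy users do not dominate.
    effort = (min(a.cms_minutes / a.peer_median_minutes, 1.0)
              if a.peer_median_minutes > 0 else 0.0)
    # Three or more help visits count as full credit (an arbitrary cap).
    help_seeking = min(a.help_visits / 3, 1.0)
    return w_perf * performance + w_effort * effort + w_help * help_seeking
```

Measuring effort relative to classmates, rather than in absolute minutes, is one way to respect the earlier point that different instructors use the same tools very differently.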

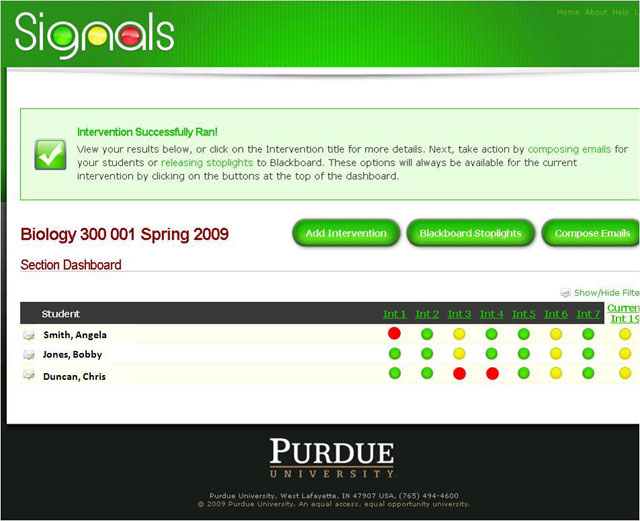

In brief, Signals works by mining data from the SIS, the CMS, and the gradebook. This data is then processed, transformed into compatible formats, and fed into an algorithm that generates a risk level with supporting information for each student, represented by a green, yellow, or red indicator (see Figures 1 and 2). Instructors then carry out an intervention schedule of their own design, which might include:

- Posting a traffic signal indicator on a student’s CMS home page

- E-mail messages or reminders

- Text messages

- Referral to academic advisor or academic resource centers

- Face-to-face meetings with the instructor

Interventions are run at the instructors’ discretion (see “Sample Criteria and Intervention Schedules”).

Figure 1. Student View of the Signals Dashboard

Figure 2. Green, Yellow, and Red Traffic Signals
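The last two steps of the pipeline, turning a score into a traffic signal and looking up the interventions an instructor scheduled for it, can be sketched as follows. The thresholds (0.5 and 0.7), the intervention names, and the schedule are hypothetical; the article does not publish the cut points Signals uses, and each instructor sets his or her own schedule.

```python
# Hypothetical sketch: map a score to a traffic signal, then look up the
# interventions an instructor scheduled for that signal. Thresholds and
# intervention names are illustrative assumptions, not Signals' settings.
from enum import Enum
from typing import Dict, List


class Signal(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


def to_signal(score: float, red_below: float = 0.5,
              yellow_below: float = 0.7) -> Signal:
    """Map a score in [0, 1] (higher = lower risk) to a traffic signal."""
    if score < red_below:
        return Signal.RED
    if score < yellow_below:
        return Signal.YELLOW
    return Signal.GREEN


# One instructor's schedule: interventions that accompany each signal.
SCHEDULE: Dict[Signal, List[str]] = {
    Signal.GREEN: ["post_signal"],
    Signal.YELLOW: ["post_signal", "email"],
    Signal.RED: ["post_signal", "email", "refer_to_advisor", "meet_instructor"],
}


def plan_interventions(scores: Dict[str, float],
                       schedule: Dict[Signal, List[str]] = SCHEDULE
                       ) -> Dict[str, List[str]]:
    """Return the interventions each student would receive in this run."""
    # Copy each list so callers cannot accidentally edit the schedule.
    return {sid: list(schedule[to_signal(s)]) for sid, s in scores.items()}
```

Keeping the schedule as data, separate from the scoring, reflects the article’s point that interventions run at each instructor’s discretion: a different instructor would pass a different `schedule` without touching the scoring code.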

We carefully monitored and analyzed the results at the end of each semester from fall 2007 through fall 2009, and they are promising. For example, the Signals pilot program in a Biology course yielded 12 percent higher levels of B and C grades in sections using Signals than in control sections that did not, as well as 14 percent lower levels of D and F grades. In addition, although the number of withdrawals from the course stayed the same, there was a 14 percent increase in the number of students who withdrew early enough that the drop did not affect their overall GPAs.

An unsurprising trend was evident in risk level as well. Once a student became aware of his or her risk level, more often than not the student addressed behavioral issues to become more successful in the class. In one course (consisting of 220 students), the first intervention showed 45 students at the red (high risk) level and 32 at the yellow (moderate risk) level. Over the succeeding weeks, 55 percent of the students in the red category moved into the moderate risk group (in this case, represented by a C), 24.4 percent actually moved from the red to the green group (in this case, an A or B), and 10.6 percent of the students initially placed in the red group remained there. In the yellow group, 69 percent rose to the green level, while 31 percent stayed in the yellow group. There were no “backsliders” in this group, meaning that any student initially placed in the yellow category either remained constant (at a C level) or moved to the green level, with none dropping to the red level.

Groups of students also differed markedly in help-seeking behavior, specifically in visits to a help center. Since help-seeking behavior is a fundamental characteristic of independent learners, we were especially interested in this factor. Students in the experimental groups (those receiving the interventions and traffic signals) sought help earlier than students in the control groups and appeared to retain the habit through the semester and in subsequent semesters. During the intervention period, the students receiving feedback from their instructor sought help 12 percent more often than those who did not receive feedback. After the interventions stopped, the gap widened, with the experimental group seeking help 30 percent more often than students in the control group.

Perceptions of Signals

While statistical analyses can provide great benefit to analytics, stakeholder experiences must also be considered. For this reason, the Signals development team gathered feedback from students and instructors at the end of each semester. A brief discussion of various points of view follows.

Administrator Feedback

Administrators saw great potential for Signals to increase retention, which in turn affects time and money spent on marketing and recruiting as well as national rankings. They also applauded the use of academic analytics to ease concerns of parents who send their children to a big university expecting them to succeed. Administrators viewed academic analytics (represented by Signals) as a scalable solution to support student success, familiarize students with campus help resources, and improve the fail/withdraw rates of large-enrollment, low-interaction courses often associated with first-year college attendance. They did, however, express concern about consistency of use across courses.

The Perspectives video provides more detail about administrator feedback.

Faculty Feedback

While the SSA predicts which students might be in jeopardy of not doing as well as they could in a course, it is the faculty and instructors who use the information provided by Signals to intervene with students. It could certainly be argued that these instructors, armed with the data provided by academic analytics, are the most important weapons against student underperformance in the classroom. As one instructor said:

“I want my students to perform well, and knowing which ones need help, and where they need help, benefits me as a teacher.”

Faculty have easy access to the data via the faculty dashboard (Figure 3). Using academic analytics, faculty can provide more action-oriented and helpful feedback, which students appreciate, especially students early in their academic careers. Faculty also report that students become more proactive as a result of the Signals system. Although students still tended to procrastinate, they began thinking about big projects and assignments earlier, and instructors and TAs received more inquiries about requirements well before the due dates. Because academic analytics can assess risk early and in real time, students benefit from knowing how they are really doing in a course and better understand the relative importance of assignments, quizzes, and tests.

Figure 3. Faculty View of the Signals Dashboard

In general, faculty, instructors, and TAs have responded positively to Signals, though most approached the system with caution. Before using Signals, faculty worried about being flooded with students seeking help, yet few reported problems once they began using the system; the most commonly reported issue was an excess of e-mails from concerned students. Faculty also worried about fostering dependency in newly arrived students instead of the desired independent learning habits. A final faculty concern was the lack of best practices for using Signals.

Student Feedback

One of the major objectives of academic analytics is to identify underperforming students and intervene early enough to allow them the opportunity to change their behavior. For this reason the Signals development team has closely tracked the student experience with Signals since the pilot stage. At the end of each semester, a user survey gathers anonymous feedback from students, with more than 1,500 students surveyed across five semesters. In addition, several focus groups have been held.

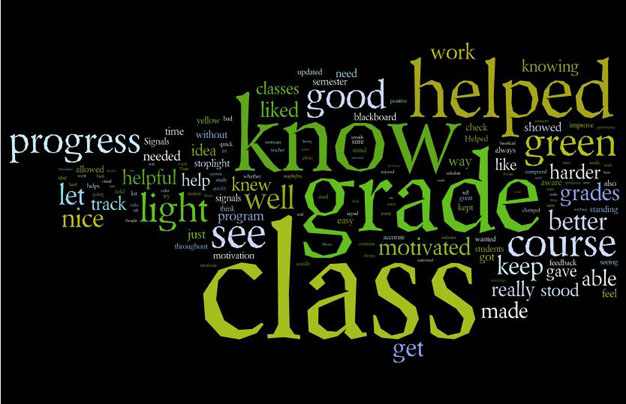

Students report positive experiences with Signals overall (Figure 4). Most students perceive the computer-generated e-mails and warnings as personal communication between themselves and their instructor. The e-mails seem to minimize their feelings of “being just a number,” which is particularly common among first-semester students. Students also find the visual indicator of the traffic signal, combined with the instructor communication, to be informative (they learn where to go to get help) and motivating in changing their behavior.

Figure 4. Word Cloud of Positive Student Responses

Of the roughly 1,500 student responses, two described becoming demoralized by the “constant barrage” of negative messages from their instructor. This perception should not be downplayed: negative feedback from instructors can be difficult to receive, especially for students who might not be prepared for the rigors of higher education. (See “Sample Student Comments” for a selection.) Aside from these two responses, however, the negative feedback concerned how faculty used the tool. For example, many students complained of oversaturation (e-mails, text messages, and CMS messages all delivering the same message), stale traffic signals on their home pages (an intervention was run but the signal was not updated, giving a false impression of a student’s status), and a desire for even more specific information. This suggests that students, like instructors, recognize the lack of best practices.

Challenges and Future Considerations

Purdue’s experience with Signals begins to show the benefits possible from using academic analytics to improve the teaching and learning environment and student learning, success, and retention. As higher education begins to more fully harness the power of analytics, several key issues require additional thought and research. The length of this article prohibits a full discussion of any of these concerns, so only a brief synopsis follows.

- Procurement of data. Whether an institution focuses on real-time use of data or simply looks at historical trends, it cannot use analytics well unless it can extract data in a timely and efficient manner. Institutional data often comes from several incompatible systems. Consider also the range of disparate technologies, such as clickers, engagement tools, e-portfolios, and wikis. How can tracking and usage data be extracted from each of these sources and transformed into congruent data streams?

- Management of dynamic data. While the acquisition of static data, such as SAT scores, high school GPAs, and other admissions data, can be tricky, once obtained, it rarely changes. The same cannot be said for the procurement of dynamic data. Current semester GPA, probation status, and residence status (on campus/off campus) can change each semester, while student effort and performance data can change hourly. Securing, manipulating, and including this data in algorithms adds another layer of complication.

- Current lack of best practices. Administrators, faculty, and students at Purdue all mentioned the disparate intervention models facilitated by analytics. As with course design, instructors have great leeway in scheduling and crafting interventions. Students can easily become confused by this variability, unless each instructor is very clear about the categorizations they employ. Tone, frequency, method of communication, and intervention schedules are only a few of the other areas needing further research.

- Student privacy. In Purdue’s five years of research, not a single student has asked about how their privacy is protected. In fact, they like having information synthesized for them. The students’ apparent lack of concern for their privacy does not give us a pass, however — it would be negligent on our part to ignore the issue. It does raise an intriguing question: Do students today have a different expectation of privacy than earlier generations?

- IT’s role in academic analytics. Academic analytics is burgeoning in many campus IT units, but a question often asked is whether this work should fall under the jurisdiction of IT units at all, given that it is academic in nature. Because each institution’s political landscape differs, the answer will differ in each instance. However, as academic analytics becomes less novel and more commonplace, the question is likely to take center stage. Is academic analytics simply an extension of IT, or is the presence of the SSA alone evidence that the process belongs outside IT, in a more traditionally education-focused unit?

- Analytics as a retention tool and potential money saver. Many administrators see Signals as a tool to boost retention, which matters more as public demands for assessment grow, and to preserve marketing dollars that would otherwise go to recruiting new students to replace those lost.

- Advantages of a homegrown system versus turnkey software. The market is now, as it has been for decades, rife with early warning/alert systems, and most of these products use analytics as their basis. As with any potential software purchase, a homegrown solution is typically among the options evaluated. Each institution must carefully consider the functionality needed to best support its teaching and learning environment and weigh that against what commercial products offer.5
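The data-procurement question raised in the first item of the list above, turning logs from disparate tools into congruent data streams, can be sketched as a set of small adapters that convert each tool’s export into one common record type. Everything here is hypothetical: the source names, the export layouts (an epoch-seconds clicker log and an ISO-8601 wiki log), and the `UsageEvent` schema are assumptions chosen for illustration.

```python
# Hypothetical sketch: normalize usage events from tools that report in
# different shapes into one common, time-ordered stream. Source names and
# field layouts are invented for illustration.
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class UsageEvent:
    student_id: str
    source: str
    action: str
    at: datetime  # always timezone-aware UTC


def from_clicker(row: Dict[str, str]) -> UsageEvent:
    # Assumed clicker export: {"sid": ..., "ts": epoch seconds as text}
    return UsageEvent(row["sid"], "clicker", "response",
                      datetime.fromtimestamp(int(row["ts"]), tz=timezone.utc))


def from_wiki(row: Dict[str, str]) -> UsageEvent:
    # Assumed wiki log: {"user": ..., "edited": ISO-8601 with a UTC offset}
    return UsageEvent(row["user"], "wiki", "edit",
                      datetime.fromisoformat(row["edited"]).astimezone(timezone.utc))


def merge_streams(*streams: Iterable[UsageEvent]) -> List[UsageEvent]:
    """Combine per-tool events into a single stream ordered by time."""
    return sorted((e for s in streams for e in s), key=lambda e: e.at)
```

Converting every timestamp to UTC before merging matters for the dynamic-data concern as well: effort data that changes hourly is only comparable across tools once all sources share one clock.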

In the coming decade, academic analytics will become increasingly prevalent in higher education. While different institutions will rely on different data and use the outcomes of their academic analytics programs differently, analytics has proved its value in the world at large, and academia is quickly catching up. It is the “getting to action” part of academic analytics that has the most relevance in higher education. Can the power of prediction be harnessed to help students become more successful? Despite the obstacles we still need to overcome, the predictive nature of academic analytics will only get more powerful as we clear those hurdles, and with more powerful prediction comes more meaningful action.

Acknowledgments

From its inception, Signals has been an effort of many people. I would like to thank John P. Campbell for his inspiration and support. In addition, Drew Koch and Matthew Pistilli from the Office of Student Access, Transition, and Success at Purdue University have been invaluable on this journey. And, of course, I must mention the countless faculty (too numerous to name individually), academic advising staff, administrators, research assistants, and colleagues who have contributed knowledge and enthusiasm to this endeavor.

1. John P. Campbell, Peter B. DeBlois, and Diana G. Oblinger, “Academic Analytics: A New Tool for a New Era,” EDUCAUSE Review, vol. 42, no. 4 (July/August 2007), pp. 40–42.

2. Arthur Chickering and Zelda Gamson, “Seven Principles for Good Practice,” AAHE Bulletin, vol. 39 (March 1987), pp. 3–7.

3. See John P. Campbell’s doctoral dissertation, Utilizing Student Data within the Course Management System to Determine Undergraduate Student Academic Success: An Exploratory Study (West Lafayette, IN: Purdue University, 2007).

4. Ibid.

5. SunGard Higher Education, Purdue University, and the Purdue Research Foundation are collaborating to further develop and provide Signals to higher education as a commercial product [http://news.uns.purdue.edu/x/2009b/091029McCartneySunGard.html].

© 2010 Kimberly Arnold. The text of this article is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 license.