Key Takeaways

- A quasi-experimental study investigated student perceptions of clickers as an educational tool in a graduate-level research and statistics course, along with the influence of clicker use on student engagement and performance.

- The majority of students who used clickers approved of them as a pedagogical tool, but one student objected to their use in a graduate-level course.

- The class discussion and peer and instructor explanations that occurred with clicker use were important in fostering student learning.

- On average, students in the experimental section using clickers performed better on the final examination than did students in the control section.

Prior studies have established research and statistics classes as vital components of graduate preparatory programs. Nonetheless, most students perceive statistics as unengaging, difficult, and boring.1 Instructors have explored a variety of strategies to improve the situation, including using technology to create learning environments that enhance student engagement, interaction, and achievement. According to Meletiou-Mavrotheris, Paparistodemou, and Stylianou, technology use can "illuminate key statistical concepts by allowing students to focus on the process of statistical inquiry — on the search and discovery of trends, patterns, and deviations from patterns in the data, and on the communication of findings to others."2

While technology has become a useful component of research and statistics courses, choosing the right software to support learning has challenged instructors. The programs typically used are specialized statistical packages designed primarily for research data analysis; they do not help students build knowledge of fundamental statistical ideas and concepts.3 A better option is technology that creates a collaborative, active learning environment, supports active knowledge construction, gives students opportunities to reflect on observed phenomena, and contributes to the development of their metacognitive capabilities.4

Classroom response systems, commonly known as clickers, have recently gained popularity in higher education. Commonly cited arguments5 in favor of using clickers include:

- Clickers can turn a silent lecture hall into a buzz of interactive learning.

- Clickers help instructors gauge student conceptual understanding and performance during a class.

- Clickers help promote student engagement.

Research indicates that clickers are typically used in large classes to help encourage active learning and collaboration.6 Using clickers, the instructor can pose questions related to the class lecture, to which students then respond anonymously. During the question-and-answer session, the instructor allows the students to discuss their responses with their peers before showing them the correct answer. This process provides an opportunity for collaboration, active learning, peer instruction, and interaction; it also allows students to understand which answers they got wrong or right and why. In short, clickers help the instructor obtain instant feedback on how well students are following the material presented during class, potentially promoting not just student engagement but also performance.

Clickers have been described as having pedagogical uses for formative and summative assessment, formative feedback for teaching and learning, peer assessment and community building, research on human responses, and discussion initiation.7 Research to date has examined the performance of students using clickers, but in most cases study participants acted as their own control group. In addition, research has not considered the effects of using clickers in conjunction with other instructional methods.8 Pan and Tang argued that more empirical studies are needed to examine how effectively technological innovations such as clickers enhance student learning.9

I designed a quasi-experimental study to explore student perceptions of clicker use and to determine the extent to which clicker use influenced student engagement and performance in graduate-level research and statistics classes. Specifically, I examined class engagement and final test performance among graduate students enrolled in two sessions of the class: students in the first session were assigned to use clickers, while students in the second session did not. The findings may add to existing knowledge about the impact of technology integration, specifically clickers, on teaching and learning.

Research Questions

This study addressed the following research questions:

- What are students' perceptions regarding the use of clickers as a teaching and learning tool?

- To what extent did the use of clickers influence student engagement in class?

- Is there a significant difference in performance on the final class test for students who used clickers compared to those who did not use clickers?

Materials and Methods

Study participants were 30 students enrolled in two sessions of an introductory graduate research and statistics class. The course is designed to provide students with the knowledge and skills required to evaluate, design, and conduct research in educational settings. Because an understanding of statistics plays an important role in research, part of the class involved applying basic descriptive and inferential statistical techniques such as correlation analysis, regression analysis, t-tests, and analysis of variance (ANOVA). Teaching strategies for the course included worksheets, in-class discussions, online discussions, data analyses using the Statistical Package for the Social Sciences (SPSS), and lectures. Class requirements consisted of article critiques, a research proposal, and completion of online human subjects research training.

Information gathered from a qualitative survey administered at the beginning of the course indicated that students in both class sessions were mainly in-service teachers without a prior background in research and statistics. Class session I of the course included 20 students (17 females and 3 males) and met once a week, every Monday from 5:30–8:20 p.m. Class session II met from 5:30–8:20 p.m. on Thursdays and included 10 students (5 females and 5 males); one student in that class was a math major. Students in both classes had the same instructor, course content, and course materials. In addition to the class lectures, hands-on exercises, and computer lab work, students in the first session used Turning Point clickers in class.

The Experiment

Over a period of five weeks, I incorporated three to four clicker questions into each class lecture for students in class session I. The pedagogical rationale for using clickers in the class included:

- Clarifying instruction and student understanding

- Ensuring immediate student feedback

- Provoking class discussion

- Encouraging collaboration, active learning, peer interaction, and peer instruction

- Promoting student engagement and participation

The clicker questions were drawn from various statistics textbooks and designed to assess students' ability to apply important statistical concepts, such as probability theory and hypothesis testing, in research. They also assessed whether students could match appropriate statistical techniques to real-life research questions. At the beginning of each lecture, a clicker question assessed students' prior knowledge of the statistical concepts to be covered that day. After this initial question, students discussed their responses in small groups of four and then shared their responses with the whole class. I then began the lecture by introducing the statistical concept and going over each response option, explaining why it was right or wrong.

Following this introductory segment, I invited questions from the class before continuing with the lecture, pausing from time to time to pose two or three more clicker questions. Each of these followed the same process as the opening question: students answered individually, discussed their responses with their group members, and finally shared their group's response with the class. The last clicker question evaluated how well students understood the entire lecture, and I used this feedback to decide where to place emphasis when summarizing the lecture.

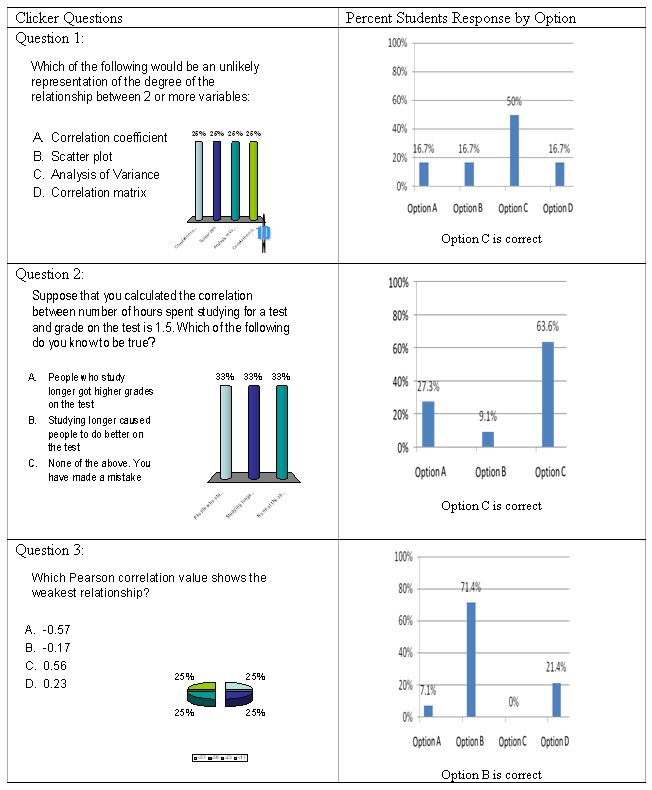

Figure 1 shows an example of how the percentage of students answering clicker items correctly changed as the lecture progressed. All three questions concerned correlation analysis and were posed in sequence over the course of the class meeting. As instruction and class discussion proceeded, the percentage of correct responses rose from 50 percent to over 70 percent.

Figure 1. Changes in Percentages of Students Who Answered Clicker Questions Correctly as the Class Meeting Proceeded

Students in the control group did not use clickers. However, like the students in the experimental group, they were required to complete the class worksheets and were involved in other class strategies such as hands-on activities, in-class discussions, and data analyses using statistical software (SPSS and Microsoft Excel).

At the end of the semester, I assessed student perceptions of clicker use with a survey adapted from one originally designed by Cheesman and Winograd.10 The survey consisted of 14 closed-ended Likert-scale questions and two open-ended items. I assessed student performance through a final class exam with a maximum possible score of 30 points. The test, which consisted of 15 short-answer items, examined student understanding of the course content and their ability to apply the statistical content studied in class to real-life situations. I compared the final examination scores of the experimental and control groups using an independent-samples t-test to determine whether the group means differed significantly.
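For readers less familiar with the test, the group comparison can be written out as below. This is a sketch assuming the standard pooled-variance (Student's) independent-samples t-test, which is consistent with the 28 degrees of freedom reported in the results; the article does not state which variant was used. The symbols are introduced here for illustration: $\bar{x}_1$, $s_1$, and $n_1 = 20$ refer to the experimental group, and $\bar{x}_2$, $s_2$, and $n_2 = 10$ to the control group.

```latex
t = \frac{\bar{x}_{1} - \bar{x}_{2}}{s_{p}\sqrt{\dfrac{1}{n_{1}} + \dfrac{1}{n_{2}}}},
\qquad
s_{p}^{2} = \frac{(n_{1}-1)\,s_{1}^{2} + (n_{2}-1)\,s_{2}^{2}}{n_{1}+n_{2}-2},
\qquad
\mathrm{df} = n_{1}+n_{2}-2 = 20 + 10 - 2 = 28
```

Under this form, the group means are judged significantly different when $|t|$ exceeds the critical value of the t distribution with 28 degrees of freedom at the chosen significance level.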

Results

Survey results showed generally favorable perceptions of clicker use in class. Final exam results showed a statistically significant difference in scores between the experimental and control groups, in favor of the experimental group.

Student Perceptions of Clicker Use

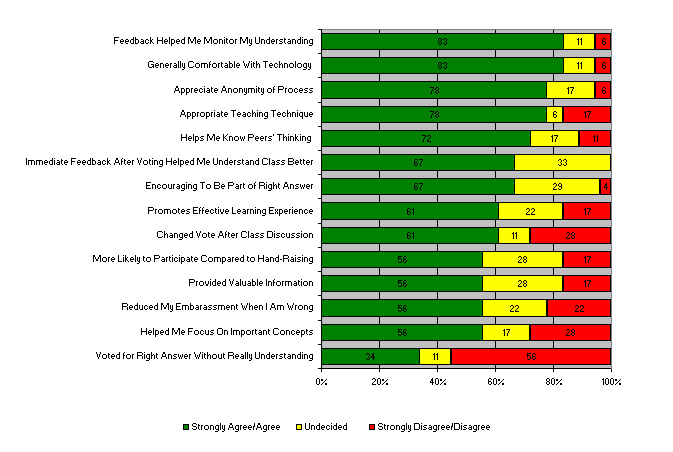

Survey data suggest that the majority (78 percent) of students in the experimental group perceived clickers to be an appropriate teaching technique (see Figure 2). About 83 percent indicated that clickers helped them monitor their understanding of the class content, and approximately 60 percent stated that they changed their initial answers based on the class discussion, which suggests that the discussions helped refine their understanding. About 72 percent said that the clickers showed them what their peers were thinking; 67 percent felt encouraged by having contributed to finding the right answer; and 61 percent concluded that clicker use promoted an effective learning experience in the class.

Figure 2. Student Perceptions of Clicker Use

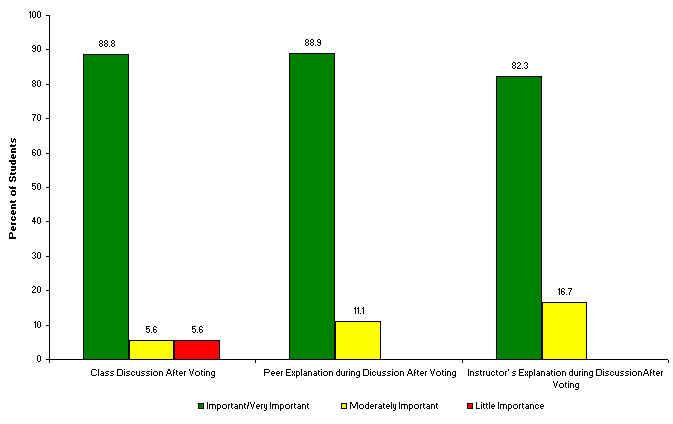

Figure 3 shows that over 80 percent of the class rated the discussion that followed each vote, and the instructor and peer explanations offered during those discussions, as important or very important to their learning or performance in class.

Figure 3. Student Ranking of Clicker-Related Activities by Importance

Responses to the open-ended survey questions revealed both positive and negative perceptions of clicker use in the class. They fall into two categories:

- Pedagogical, interactional, and attitudinal benefits: Some students indicated that the clickers "provide a way to obtain immediate feedback from the professor." Others used the clicker sessions "to obtain clarification on the main points of the class." In addition, students thought that using the clickers was "a fun way to learn statistics and the class material that is difficult to comprehend." The clicker question-and-answer sessions provided "black and white" answers about the class discussion and "broke up the lecture and gave a chance for students to synthesize or review the class material."

  Overall, the use of clickers helped students feel more engaged during class, revealed other students' understanding of statistical concepts, and helped students see possible answers to the questions while learning what made them correct or incorrect. Ultimately, the clicker activities gave students the opportunity to study statistics in a nonthreatening environment and to better prepare for the final class statistics test.

- Technical, pedagogical, and interactional challenges: Some students had problems with their clickers and at times doubted that the technology was working correctly. Although I checked all student clickers before class began to make sure they were in good condition, one or two students always worried that their clicker "never worked correctly" and complained that the class had already started voting by the time they could tell me about the problem. One student thought the use of clickers was an "improper use of time in a graduate-level course."

Student Engagement and Clicker Use

Over the course of the five-week experiment, I observed an immediate increase in student engagement and participation when clickers were incorporated into class activities. Students became more interested and seemed excited and eager to test their knowledge of the class content whenever a clicker question came up during the lecture. Phrases such as "Did the computer register my answer?", "I think the correct response is C," and "I know this one for sure!" could be heard throughout the classroom. When they got an answer wrong, students were anxious to know why, and they wanted to understand how their peers had answered. The clickers also gave students with a better grasp of the class content an opportunity to explain their thought process to their group members and to the whole class. Clicker use appeared to give students in the experimental class opportunities to collaborate and interact with peers, clarify their understanding of class concepts, and review for the final test.

A Comparison of Student Performance on the Final Class Test

Using an independent-samples t-test, I compared average final test performance for students in the experimental and control groups. On average, students in the experimental class scored higher on the final test of statistics knowledge than did students in the control group (t(28) = 2.13, p < 0.05). Table 1 shows the mean, median, mode, minimum, maximum, range, and standard deviation of student scores. The range of scores in the clicker class (15 points) was slightly smaller than in the control group (16 points), and control-group scores were more widely dispersed around their mean (M = 20.7, SD = 6.18) than experimental-group scores were around theirs (M = 24.9, SD = 3.77).

Table 1. Final Test Scores for the Experimental and Control Groups

| Class Session* | No. of Students | Mean (M) | Median | Mode | Range | Min. | Max. | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| I | 20 | 24.9 | 25 | 25/28 | 15 | 15 | 30 | 3.77 |
| II | 10 | 20.7 | 20 | 14 | 16 | 14 | 30 | 6.18 |

* Session I = Experimental Group; Session II = Control Group
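The descriptive statistics in Table 1 can be computed from a list of raw scores with Python's standard `statistics` module. The sketch below is illustrative only: the helper name `summarize_scores` is hypothetical, and the example scores are invented for demonstration. They are not the study's data, which are not reported. It uses `statistics.multimode` (Python 3.8+) so that a group with two equally frequent scores reports both, as in the "25/28" entry for Session I, and `statistics.stdev` for the sample standard deviation, which is an assumption because the article does not say which formula was used.

```python
import statistics


def summarize_scores(scores):
    """Return Table 1-style descriptive statistics for a list of test scores.

    Hypothetical helper for illustration; not part of the original study.
    """
    low, high = min(scores), max(scores)
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        # multimode lists every most-frequent value, so a bimodal group
        # yields two modes (compare the "25/28" entry in Table 1).
        "modes": statistics.multimode(scores),
        "min": low,
        "max": high,
        "range": high - low,
        # Sample standard deviation (n - 1 denominator); this is an
        # assumption, since the article does not state which formula was used.
        "sd": statistics.stdev(scores),
    }


# Invented scores on the 30-point final test, for illustration only.
example_scores = [15, 22, 25, 25, 28, 28, 30]
summary = summarize_scores(example_scores)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Swapping `statistics.stdev` for `statistics.pstdev` would divide by n instead of n − 1 and give a somewhat smaller standard deviation, a difference that is noticeable for groups as small as 10 or 20 students.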

Conclusion

Instructors often struggle to find ways to reduce students' anxiety about learning research and statistics and to make their classes more interesting and engaging, especially for students with limited mathematics backgrounds. Questions asked in class are often met with blank stares or attempts to avoid eye contact in the hope of not being called upon; some students mouth possible answers without confidence in their validity. Such largely nonverbal signals make it difficult for instructors to gauge students' understanding of the class content. For instance, are the students trying to communicate their misunderstanding of the question, or are they feeling uncomfortable, shy about speaking up in class, or not confident enough to share their knowledge in the larger group setting? How can instructors accurately determine students' understanding of class presentations so as to offer appropriate and prompt feedback to maximize learning? Past research suggests that clickers could help.

In this study, students enrolled in one session of a graduate-level research and statistics course used clickers with the pedagogical aim of enhancing engagement and performance in the course. Clicker use helped increase interaction in the experimental class and gave students the opportunity to learn from their mistakes and from each other. Students in the control group had fewer of the opportunities for collaboration, active learning, peer instruction, and interaction that came with clicker use.

An examination of student perceptions regarding the use of clickers showed that when combined with other pedagogical strategies, the clickers helped students monitor their understanding of statistics during the class period. The use of clickers also helped enhance student engagement in the class and seems to have helped them perform better on the final class exam. The results are in line with those presented by Gauci et al.,11 which suggested that the use of clickers helped improve student test performance immediately and up to one month after the lectures. Improved learning outcomes might have resulted from students being more engaged in the classes using clickers. In addition, the peer discussions and instructor feedback that occurred with the use of clickers might have lessened student anxiety while providing students with the opportunity to discuss their views, monitor their understanding of the course concepts, and review for the final class exam. Similar findings were observed by Smith et al., who suggested that "peer discussion enhances understanding, even when none of the students in a discussion group originally knows the correct answer."12 When incorporated in a graduate research and statistics class I taught, the technique helped promote an effective learning experience according to more than 60 percent of the students. Past research conducted by Martyn highlighted similar results.13

Learning occurs when students are motivated, actively engaged in the instructional process, understand how the new learning relates to past experiences, and can apply what they have learned in a real-life situation. To facilitate student learning, instructors should be able to assess students' existing knowledge and competencies as they relate to the concepts under class discussion. In addition, instructors should be able to provide immediate and appropriate feedback during instruction to help clarify student understanding of class content as well as provide students with opportunities for interaction. Clickers can provide such an opportunity. The outcomes of my study suggest that even in a small graduate-level class, clicker use helped promote collaboration and student engagement, was considered appropriate by most students, and helped promote effective teaching and learning.

Endnotes

1. Wei Pan and Mei Tang, "Examining the Effectiveness of Innovative Instructional Methods on Reducing Statistics Anxiety for Graduate Students in the Social Sciences," Journal of Instructional Psychology, vol. 31, no. 2 (June 2004), pp. 149–159; and Carla J. Thompson, "Educational Statistics Authentic Learning CAPSULES: Community Action Projects for Students Utilizing Leadership and E-Based Statistics," Journal of Statistics Education, vol. 17, no. 1 (2009).

2. Maria Meletiou-Mavrotheris, Efi Paparistodemou, and Despina Stylianou, "Enhancing Statistics Instruction in Elementary Schools: Integrating Technology in Professional Development," The Montana Mathematics Enthusiast, vol. 6, nos. 1&2 (2009), pp. 57–78, see p. 5.

3. Maria Meletiou-Mavrotheris, "Technological Tools in the Introductory Statistics Classroom: Effects on Student Understanding of Inferential Statistics," International Journal of Computers for Mathematical Learning, vol. 8, no. 3 (October 2003), pp. 265–297.

4. B. Dolinsky, "An Active Learning Approach to Teaching Statistics," Teaching of Psychology, vol. 28 (2001), pp. 55–56; Meletiou-Mavrotheris, Paparistodemou, and Stylianou, "Enhancing Statistics Instruction in Elementary Schools."

5. Susan Bush and Susan McLester, "Clickers Rule!" Technology & Learning, vol. 28, no. 48 (November 15, 2007), pp. 10–11.

6. Beth Morling, Meghan McAuliffe, Lawrence Cohen, and Thomas M. DiLorenzo, "Efficacy of Personal Response Systems ('Clickers') in Large, Introductory Psychology Classes," Teaching of Psychology, vol. 35, no. 1 (2008), pp. 45–50.

7. Bush and McLester, "Clickers Rule!"; Jeffrey R. Stowell and Jason M. Nelson, "Benefits of Electronic Audience Response Systems on Student Participation, Learning, and Emotion," Teaching of Psychology, vol. 34, no. 4 (December 2007), pp. 253–258; James R. MacArthur and Lorretta L. Jones, "A Review of Literature Reports of Clickers Applicable to College Chemistry Classrooms," Chemistry Education Research and Practice, vol. 9 (2008), pp. 187–195; and Adrian Kirkwood and Linda Price, "Learners and Learning in the Twenty-First Century: What Do We Know About Students' Attitudes Towards and Experiences of Information and Communication Technologies That Will Help Us Design Courses?" Studies in Higher Education, vol. 30, no. 3 (June 2005), pp. 257–274.

8. Margie Martyn, "Clickers in the Classroom: An Active Learning Approach," EDUCAUSE Quarterly, vol. 30, no. 2 (April–June 2007), pp. 71–74.

9. Pan and Tang, "Examining the Effectiveness of Innovative Instructional Methods on Reducing Statistics Anxiety for Graduate Students in the Social Sciences."

10. Elaine Cheesman and Gaynelle Winograd, "Classroom Response Systems: Student Perceptions by Learning Style, Age, and Gender," in Proceedings of Society for Information Technology and Teacher Education International Conference 2008, Karen McFerrin, Roberta Weber, Roger Carlsen, and Dee Anna Willis, eds. (Chesapeake, VA: AACE, 2008), pp. 4056–4062.

11. Sally A. Gauci, Arianne M. Dantas, David A. Williams, and Robert E. Kemms, "Promoting Student-Centered Active Learning in Lectures with a Personal Response System," Advances in Physiology Education, vol. 33 (March 2009), pp. 60–71.

12. M. K. Smith, W. B. Wood, W. K. Adams, C. Wieman, J. K. Knight, N. Guild, and T. T. Su, "Why Peer Discussion Improves Student Performance on In-Class Concept Questions," Science, vol. 323, no. 5910 (January 2009), pp. 122–124, see p. 122.

13. Martyn, "Clickers in the Classroom."

© 2009 Lydia Kyei-Blankson. The text of this article is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 license.