A proposed course framework, based on five educational design principles, helps instructors organize, motivate, and assess interactive online learning and prepares students to succeed in networked knowledge settings. The principles also offer the flexibility, self-pacing, and accountability associated with competency-based education.

Daniel Hickey, Professor and Program Coordinator, Learning Sciences Program, Indiana University Bloomington

In the 1950s behaviorists like B. F. Skinner convinced many that education should focus on very specific observable behaviors. This led to widespread interest in "programmed instruction" in the 1960s and arguments against lecture-based education. The cognitive revolution of the 1970s led many working in this tradition to reject Skinner's strict focus on behavior and begin instructing and assessing more "subjective" cognitive outcomes (i.e., "knowing that…" in addition to "knowing how…").1 But these more modern approaches still kept the behaviorist assumption that complex knowledge and skills should be broken down into specific competencies that can be systematically taught and assessed. While work in this tradition evolved substantially in the ensuing decades, competency-based education (CBE) has gained widespread support, particularly in higher education.

Support for CBE and related approaches has also been strong among some leading educational technologists taking advantage of continual technological advance. Each wave of advances has made it easier to present instructional content, assess mastery of the content, and track student progress. Now, all learning management systems feature sophisticated systems for assessing and tracking competencies. More education is hybrid and fully online, and standard-setting organizations like the IMS Global Learning Consortium and major publishers like Pearson use CBE to coordinate their work. As such, it seems likely that competency-based approaches will dominate more sectors of higher education.

I predict, however, that further expansion of CBE and related approaches will be rocky. In this article, I summarize the advantages of CBE and the reasons that many continue to resist CBE. I then introduce a "participatory" approach to CBE that takes advantage of its strengths while addressing its weaknesses. While some readers may find the theory behind this alternative complex, the approach itself consists of five relatively straightforward educational design principles.

The Advantages and Disadvantages of CBE

The appeal of competency-based education is obvious. CBE focuses on attaining and demonstrating specific elements of domain knowledge. This makes CBE highly structured and predictable, somewhat like a knowledge assembly line. You outline the competencies you want people to possess in a given domain. You then create ways to directly assess whether they have them. If students don't yet have a competency, you teach it to them, or let them learn it on their own. Learners then move on to the next competency. Proponents argue that competency-based learning is thus an efficient, flexible approach to education that emphasizes accountability. A particularly appealing aspect of CBE is that it makes minimal demands on instructors' knowledge of the content, how that content is best learned, and how that learning is best supported by whatever technology might be used to support it.2

As most proponents know, some educators and faculty resist any moves toward more competency-based approaches. Some of the fiercest resistance is rooted in differences about the nature of knowledge and (therefore) learning that emerged at the dawn of the cognitive revolution. While the perspectives that follow from these differences go by many labels (e.g., constructivism, inquiry-based learning, guided discovery, problem-based learning), all reject the idea that complex knowledge and skills can be readily broken down into more specific elements while still maintaining their essential meaning and educational value.3 While proponents of CBE sometimes attribute resistance to "protecting turf," politics, faddism, or just inertia, this different view of learning is supported by a substantial body of research in cognitive psychology and educational research.4 Meanwhile, many faculty and instructors do cling to traditional lecture-exam formats out of inertia; such formats are formalized in online courses with streaming videos and massively scaled in MOOCs. Whatever the reason, these approaches to instruction are often tacit and deeply rooted in school culture, accountability, and expectations.5 As many IT administrators and instructional leaders have experienced, the tensions associated with these differences quickly emerge when undertaking efforts to change instruction, assessment, technology, and accountability. Such tension can derail seemingly sensible reforms, even those well supported.

Newer "social" theories of learning raise additional concerns about CBE. As nicely summarized by John Seely Brown and Richard Adler in EDUCAUSE Review in 2008, these theories shift the focus away from what a student is learning to focus instead on how students are learning.6 From this perspective, the socially isolated self-paced approach typical of most CBE doesn't prepare students for the "interest-driven" digital networks where more and more knowledge is generated, shared, and learned. The broader "participatory" view of learning described by Brown and Adler is well known among cognitive scientists and strongly embraced by many learning scientists.7 But many find it perplexing because participatory theories of learning treat "knowing" as the stuff that gets "left over" from meaningful participation in the social and cultural practices that define what disciplinary experts "do." On the surface, this view might seem incompatible with CBE and its focus on specific competencies.

Pushing hard on participatory theories of learning also produces a promising path forward. While not yet widely appreciated, this aspect of participatory theories is important for the challenges facing CBE. Participatory theories don't reject the idea that complex knowledge and skills can be broken down. Rather, they assume that some knowledge and skills can be broken down and reassembled meaningfully. But participatory theories of learning (and a good deal of research) argue that teaching and assessing specific competencies in isolation from individual experience and from meaningful real-world contexts will leave behind "inert" competencies. Such competencies are inert because they are easily forgotten and not readily useful in settings beyond assessments similar to the instruction. These theories also argue that "real-world" contexts must be personally meaningful to each learner and suggest that many instructional contexts created by experts are meaningless to many learners.

Rather than directly instructing specific competencies, participatory theories suggest systematically embedding those competencies into activities that support "engaged participation" in more interactive social practices. These theories suggest helping learners informally assess their own and their peers' engagement with targeted knowledge and skills. With regard to the crucial question of prior learning, the more experienced and knowledgeable learners can readily participate and move on, while the learners with less knowledge and prior experience must take the time to gain the competencies needed to participate at that same level. Along the way, both types of learners learn to use their competencies in productive ways, and the more advanced learners have contributed invaluable contextualized examples that naturally move the entire conversation forward.

I contend that when competencies are developed in this fashion, demonstrations of those competencies through conventional assessments produce more valid evidence that those competencies will be useful in the future, when compared to similar assessment performance where those competencies were directly taught and formatively assessed.8 In this article, I present an approach to online and hybrid instruction that uses newer social views of learning to take advantage of the strengths and minimize the weaknesses of both competency-based and constructivist approaches to instruction.

Framework and Course Overview

My course framework builds most directly on the research of James Greeno, with whom Seely Brown co-founded the Institute for Research on Learning in Palo Alto in 1986.9 I call this approach participatory learning and assessment (PLA) because it uses several different types of assessment to implement the participatory view of learning advanced by James Greeno.10 The approach consists of five course design principles that emerged in iterative refinements of this approach across courses and platforms. These principles draw on my own work to refine participatory approaches to assessment and on the work of three of Greeno's students (Randi Engle, Rogers Hall, and Melissa Gresalfi).11

I first implemented PLA online in my graduate courses on educational assessment and on learning and cognition, taught using the Sakai platform.12 Since 2013, PLA has been used in the English courses taught at Indiana University High School, with one course I taught in Google Sites,13 and in my big open online course ("BOOC") on educational assessment using Google CourseBuilder.14 Ultimately, PLA requires only two features, available in most learning management systems and freely available on the open web: the ability for students to readily post pages that can be viewed and commented upon by other students and the instructor; and some sort of online assessment tool that supports both open-ended and multiple-choice items. In this article, I illustrate PLA with the features used to implement it in my graduate-level Learning & Cognition course when I first moved it from Sakai to Canvas in spring 2015.

Course Development Goals and Challenges

Having taught undergraduate and graduate courses on learning and cognition for 20 years, I have come to deeply appreciate three challenges it presents: First, we know a lot about learning and cognition, and far more than the practicing teachers and future administrators want to or need to know. For example, the textbook I use offers two chapters and six different theories that instructors might use to motivate classroom learning. This makes it hard to sort out what is most important to cover and then find ways to make that meaningful and accessible for students. Second, the content in this course is highly contextual. Reflecting the tensions described in the introduction, some of it is more relevant in some contexts and domains than others. Some of the guidelines in typical textbooks contradict other guidelines when treated as absolutes outside of the context of particular educational practices. Third, many of the students initially conflate the processes of learning with the practices of teaching. This creates a massive bottleneck for understanding the big ideas of the course well enough to use them. However, starting out with this nuanced distinction will confuse and overwhelm many students. Most experienced instructors will recognize different versions of these challenges in their own contexts.

Some proponents argue that these are precisely the sort of challenges that existing CBE models address. I disagree. Existing CBE models don't pay enough attention to the context in which knowledge is learned and used. Seely Brown and Greeno argued that the social and cultural contexts in which knowledge is learned and used are a fundamental element of that knowledge. This means that instructors and designers can't determine in advance for each learner (a) what elements of a domain are most important, (b) what context is most useful for learning that content, (c) the best way to overcome major bottlenecks, and (d) the contexts in which the new knowledge will be used. Recent work by contemporary theorists offers a relatively straightforward way of using differences across learners' own contexts (i.e., their prior experiences, current interests, and future aspirations) to address these challenges.

PLA also promises to address another universal challenge for instructors: time. My responsibilities to my university include coordinating a graduate program, teaching graduate seminars, mentoring doctoral students, and running externally funded research projects. So I always have to balance the needs of students in my online courses with the limited time I have available. In particular, I want each student to interact in ways that allow them to benefit from my experience with the content and my experience teaching this content. The features that support the proposed framework maximize such interactions while minimizing the amount of time-consuming private interactions with individual students. In recent years this time pressure has grown more acute as I open some of my courses to allow dozens and even hundreds of students to register for free. Recently I added features that allow students to have a highly participatory experience and take advantage of my input at their own pace while making zero demands on my time.

Course Structure and Platform

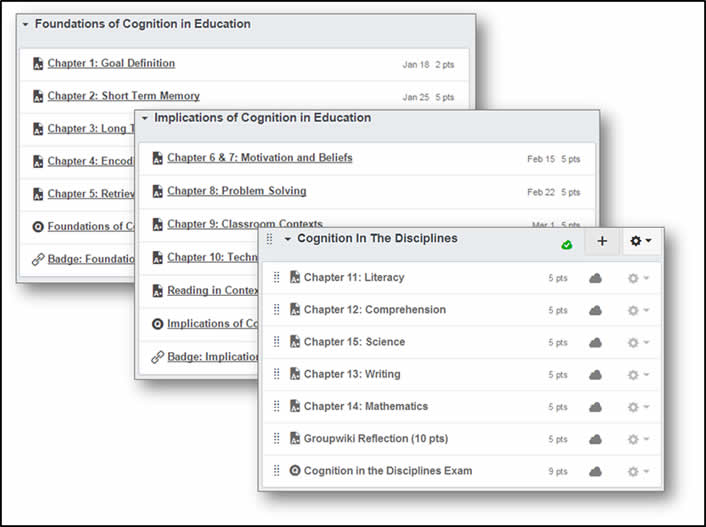

As shown in figure 1, my Learning & Cognition course has three modules: Foundations of Cognition in Education, Implications of Cognition in Education, and Cognition in the Disciplines. Each module consists of five one-week assignments, with each assignment corresponding to one or two chapters in a relatively advanced textbook.15 Each module concludes with an exam and the opportunity to earn a digital badge (a web-enabled microcredential). The third module consists of group assignments.16 I first taught this course in Canvas in spring 2015. Partly to test the efficiency of this approach, I agreed to lift the existing 20-student limit on the course and ended up with 29 students.

Figure 1. Three modules of the Learning & Cognition course

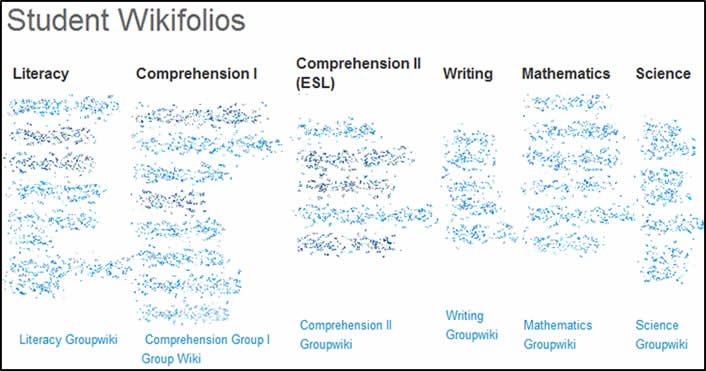

The first assignment is ungraded, and simply has each student briefly introduce themselves to the class in terms of their job and/or degree program and their reason for taking the class. They then select which of five "networking groups" they best fit in. These networking groups correspond with the five chapters in the third module of the course (see figure 1). This first assignment puts students into informal groups simply by rearranging the student course homepages on the course homepage (figure 2). In this large class the subset of students who chose the comprehension group were broken out into a second comprehension group.

Figure 2. Course homepage and student networking groups

Principle 1: Use Public Contexts to Give Meaning to Knowledge Tools

All of the interaction in my online courses takes place around "wikifolios" that are "public" to the class. This means that all students can see and learn from each other's examples. These wikifolios differ from traditional portfolios or assignments in that the course knowledge is reframed as "disciplinary tools." This makes it possible to engage students deeply with that knowledge by asking them to consider and discuss how those tools might best be used in specific personalized contexts. This approach differs from many prevailing approaches to instruction (including the "flipped classroom" and much CBE), where instructors or designers select the ideal curricular tool for helping students master a specific competency.

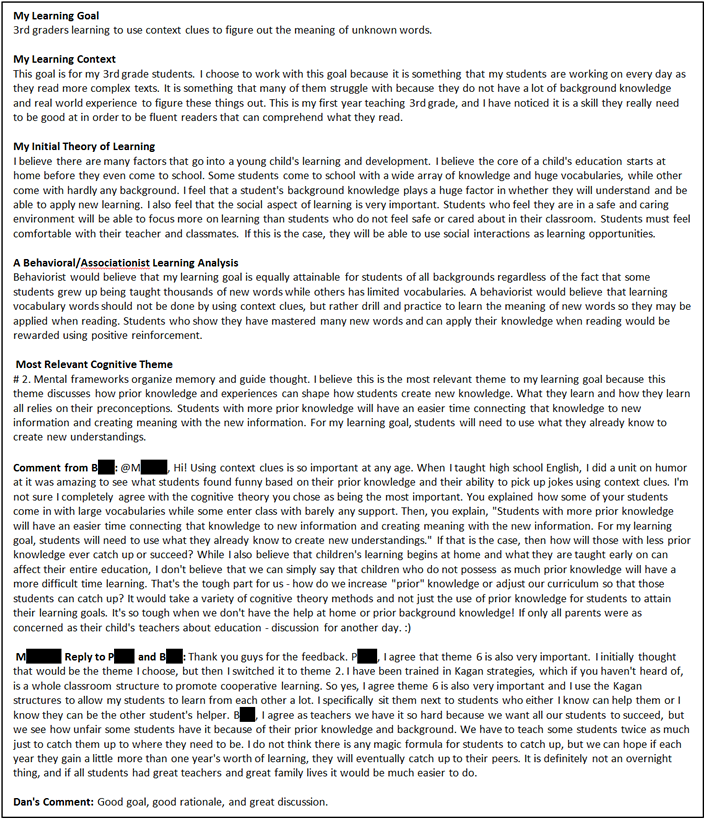

Figure 3 shows the first wikifolio from a student selected as representative of the class (currently teaching and completing a Masters of Teaching) and near the middle in terms of the length of her posts and the amount of interaction. (Two comments are omitted because of space.) This first assignment has the student define an instructional goal that will structure their learning for the remainder of the course. Students are instructed to select a goal that embodies their domain and that interests them.

Figure 3. First student wikifolio (two additional comments omitted)

This personalized goal helps students do what Randi Engle called "problematizing" course content.17 The assignment gives students guidance on what kinds of goals work best, instructs them to use that goal to draft an initial theory of learning, and asks them to make sense of a section in the first chapter that discusses behaviorist theories of learning. The assignment then introduces students to the core engagement strategy that they will use repeatedly throughout the course. Chapter 1 includes a section that lays out eight different "Cognitive Themes for Education," each two to three paragraphs long. The assignment instructs students to review the themes, select the one that seems most relevant to their instructional goal, and justify their selection of the most relevant.

This simple ranking feature has proven to be a robust activity for engaging students with content that might otherwise seem abstract and confusing. The assignment encourages students to examine the work of others, but only after they have drafted the assignment themselves and/or are really struggling. The important point here is that the ranking and justification problematizes the content knowledge in the chapter from the learner's perspective. There is no right or wrong answer. Asking students to articulate why various aspects of course knowledge are more or less relevant to them pushes them to make sense of all the implications.

This ranking activity dovetails nicely with another important feature of my online courses: all of the substantive discussion takes place in threaded comments that appear directly on the wikifolios. This creates social interaction different from that of discussion forums. Because they tend to be removed physically and conceptually from student work, discussion forums often push social interaction to be abstract and decontextualized, making them quite tedious for most learners.18 Students are instructed (but not required) to comment on their peers' work each week. Even on the very first assignment (figure 3), discussing the rankings leads quickly into relatively sophisticated discussion of the chapter's big ideas and is almost entirely student-led.

Another important feature of wikifolios is that students are instructed each week to restate and further elaborate on their instructional goal (and discouraged from cutting and pasting from the previous week). This encourages their knowledge of that goal and their own professional context to grow alongside their knowledge of the course content. For example, by the third wikifolio (on long-term memory), the instructional goal for the student in the example in figure 3 had expanded considerably:

My learning goal is 3rd graders learning how to use context clues to figure out unknown words when reading. Students who are struggling readers often struggle because they do not have a broad enough vocabulary to read all the words, especially as the content gets harder and harder. Students need to be reading texts where they do not know some of the words or they will never grow and expand their vocabulary. However, students who are not able to use what they already know (their prior knowledge) to figure out things they do not know will have a hard time reading and understanding new texts.

This same elaborate-rank-discuss strategy is used repeatedly in each weekly wikifolio for the remainder of the course. After the first chapter, each remaining chapter in the textbook concludes with a section called "Implications for Instruction." The core of each weekly wikifolio assignment involves summarizing the personal relevance of each of these five to eight implications for their instructional goal and ranking them in order of relevance. The instructions specifically state, "You should say something about the relevance of each of them but you must explain why the first two are most relevant and the last one is least relevant to you." Thus, in the third wikifolio, the primary school teacher in the examples above selected "Recognize that the starting point of learning is what students already know — their prior knowledge" as most relevant, providing the following rationale:

The sentence from the article "what can be learned depends substantially on what learners already know" (Bruning, pg. 62–63) really stuck out to me in terms of my learning goal. Students need to have a strong vocabulary background to be able to figure out unknown words. Each new word they learn builds on something they already know. They cannot make sense of words that they cannot somehow relate to something they already know. For example, when teaching prefixes and suffixes my students need to know the meaning of the base word to be able to figure out the meaning of the word with the prefix or suffix added. As a teacher, I have to make sure I start each student off at a point where they can be successful and build from there.

Requiring students to justify their ranking for the least relevant implication pushes them to make sense of all the implications. My example student, like many of the students, chose "Provide opportunities for students to use both verbal and imaginal coding" as least relevant. Her rationale and qualifications demonstrate a robust understanding of an otherwise abstract concept:

I chose this as the least relevant implication because in teaching this learning goal, we will most likely have the students reading or list[en]ing to reading. It would be great to find ways to incorporate imagery into the teaching of this goal, but I do not think it is very practical to use it all the time when teaching this goal. With very young students learning new words it is important to associate a picture with the word, but by the time they get to 3rd grade we do not usually use the picture association any more. I agree it can be powerful, but I do not believe it is extremely relevant to my learning goal. However, I am open to suggestions for how to incorporate both verbal and visual methods into the teaching of this learning goal if you have any ideas! [Emphasis in the original.]

Asking students to identify the least relevant element accomplishes two important goals: First, it pushes students to consider the personal relevance of the entire set of elements. Second, it enables the instructor and peers to naturally (and non-judgmentally) point to overlooked relevance. I give a few such comments in the first couple of weeks and commend students who do the same. Soon, many students are posting questions like the one in the example in figure 3. It turns out that even struggling and less-ambitious students can engage quite readily in this manner because they are the experts regarding the intersection of the course knowledge and their own context.

The assignment also instructs students to identify at least five "relevant specifics," starting with the bolded terms in the body of the chapter. The selection and justification that my primary school teacher provided is typical of the way this method links the declarative knowledge of the course with the social and cultural practices of teachers:

Relevant Specific #1: "Behavior can be influenced by memory of past events even without conscious awareness" (Bruning, pg. 42). This is very helpful is arguing for the implication I ranked 1st. This shows that even things we aren't consciously aware of are affecting our behaviors and performances on tasks. My students may not even be aware of things th[at] help them figure out unknown words. Students who were exposed to more words and educated conversations growing up have an advantage over other students that they don't even realize they have.

This search for relevance provides a "functional context" for reading and reviewing chapters that might otherwise bore and overwhelm students. The assignment reminds the student to make sense of all of the bolded terms well enough to select the most relevant ones. This activity also gives the more advanced students a helpful benchmark for engaging with ideas and chapters they have encountered in previous courses.

Each of the assignments encourages students to ask questions of their peers to get the conversation going and instructs (but does not require) students to "look at the work of at least five classmates and make comments or questions." Thus the first peer comment on the long-term memory example above came from another member of the comprehension group:

From K: Hi M, I like your ranking order in terms of your learning goal. As you stated, vocabulary acquisition relies heavily on prior knowledge - The more students read, the more their vocabulary will grow! I really think implication #5 (which I ranked last as well) could be moved up considering the audience of third graders, although I agree that you wouldn't use it all the time. One year I taught SAT-specific vocabulary to eighth graders using a picture/context clue combination. Each word came with a memory trick and an illustration to help students commit the word & definition to memory. For example: Unanimous means fully in agreement. The memory trick was "Nanny Moose" and the sentence read, "We are all voting for you, Nanny Moose!" And there was a picture of a female moose running for some kind of office. You could incorporate something like that for domain-specific vocabulary lessons.

This comment provides informal evidence of productive engagement with all of the implications and invites comments from peers. This particular wikifolio went on to include six similarly engaged and lengthy comments from peers and two replies from the wikifolio's author. They included several suggested outside resources and referenced the textbook. Importantly, most of the comments referenced either the author's or the commenter's professional contexts, which helps anchor the abstract declarative knowledge of the course.

I will discuss the function of these threaded comments more in the next section. But first, it is important to recognize that these discussions between individuals occur in public. This example represents what Rogers Hall defined as "local" interactions,19 which take place in public but are mostly interactions between individuals. As Hall found in his research in mathematics learning, local interactions and their interplay with public interaction play an important role in fostering meaningful engagement. Because the local interactions are public, they support a kind of informal accountability that encourages students to be thoughtful.

Local interactions are also highly contextualized by the public interaction that surrounds them. This makes them ideal for me to insert more advanced content that would otherwise overwhelm some of the students if introduced as part of the assignment. One example of such content is the aforementioned distinction between teaching practices and learning processes. Rather than trying to explain this directly or include it as part of the assignment, I wait for students to conflate the two before introducing the distinction, explaining why it is important and linking to examples of other student work that illustrates these points. This is highly efficient for me as an instructor. I spend some time explaining the issue and linking to examples so that I can link to that interaction in announcements, weekly feedback, and/or subsequent review and commenting.

Principle 2: Encourage and Reward Productive Disciplinary Engagement

Participatory learning theories distinguish between two types of disciplinary knowledge. The first concerns the facts, concepts, and skills that come to mind when people think of "competencies." This is what cognitive scientists call "declarative" knowledge (because it can be "declared") and "procedural" knowledge (because it can be demonstrated). Declarative and procedural knowledge is readily assessed and measured in large part because it is meaningful in the abstract. Harkening back to the introduction, it is what disciplinary experts "know." The second kind of disciplinary knowledge concerns what experts "do." These are social, cultural, and technological "practices," which are much harder to assess and almost impossible to measure reliably and consistently because they are highly contextual. Building on Randi Engle's prior research, productive disciplinary engagement (or "PDE") results in massive numbers of connections between the more abstract declarative and procedural knowledge and the more contextual disciplinary practices.

This is where my approach diverges from other competency-based approaches. Other CBE models appear to either ignore social and cultural practices or characterize them in domain-general ways (e.g., "collaboration," "communication," etc.). I contend that framing disciplinary practices in domain-general ways strips them of the very contextualization that makes them important.20 Educators have been talking about these "21st century skills" since the 1990s. From the perspective of participatory theories of learning, the very notion of "21st century skills" is an oxymoron. PLA addresses this issue head-on by insistently focusing on domain-specific disciplinary practices. It does this by defining them as specific competencies that can be readily observed and assessed in context, but without attempting to "measure" them or even formally assess them. It then insistently focuses on these participatory competencies by making them the "primary" focus of instruction, while treating the declarative and procedural knowledge as "secondary" competencies that PDE leaves behind naturally.21

Most of the research behind the PLA framework represents a continuous search for new course features and strategies to implement the design principles that Randi Engle proposed for fostering PDE.22 Some strategies for encouraging PDE are individual and informal, such as reframing of the instructional goal. Other informal PDE strategies are more social, like my instructor-commenting strategies. To elaborate, I never commend students for stating something correctly; rather, all of my compliments go to interactions that open the discussion or reveal useful insights about how the declarative knowledge of the course intersects with the various instructional goals and professional contexts. Those particularly insightful "context × concept" observations are about the only place I post extended comments that introduce new ideas and insights. In addition to contextualizing that knowledge, this presumably also motivates and rewards students for doing so. By rewarding students for how they learn rather than what they know, I celebrate students' contributions and avoid the well-documented negative consequences of many schemes for rewarding excellence.23

Recently I added a more formal feature for fostering PDE to my courses: articulating PDE as a specific competency. For example, the competencies for the wikifolio on long-term memory state that on completing the assignment, students should be able to:

- Meaningfully discuss the implications of specific aspects of long-term memory for education in specific contexts (as shown by your wikifolio, discussions, and reflections).

- Describe the specific aspects of long-term memory that are most relevant to a particular instructional goal (as shown by your wikifolio and practice exam performance).

- Distinguish the key features of the long-term memory framework, the building blocks of cognition, verbal and imaginal memory, and evolving models of memory (as shown by your performance on the practice assessment and the module exam).

Starting with the PDE competency highlights it as the most important thing. The second competency is less contextual and includes knowledge that can be meaningfully assessed. The third (more conventional) competency comes last to indicate that this more abstract and decontextualized knowledge is best learned as an outcome of developing the other competencies, rather than being learned directly through memorization.

Another more formal and social strategy I have explored for rewarding PDE is a form of "peer promotion." I introduced "PDE stamps" in this class, whereby the assignments ask students to post a comment starting with a distinctive string (in this case "&&&") for engagement they found particularly productive.24 For example, among the comments included in the sample wikifolio was a 200-word comment from a doctoral student in a different group who was also one of the most prodigious and experienced students in the class. That comment pushed back hard on ranking visual encoding least relevant and gave some particularly insightful suggestions for applying visual encoding in the wikifolio author's instructional context. The author gave that comment a PDE stamp and wrote "thanks for pushing me to think more deeply about this!"25
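The stamp convention described above lends itself to simple automated tallying. The following is a minimal sketch, not the mechanism used in the course: only the "&&&" string comes from the article, while the function names and the (recipient, text) record layout are hypothetical.

```python
PDE_STAMP = "&&&"  # the distinctive string named in the article


def is_pde_stamp(comment: str) -> bool:
    """Return True if a comment begins with the stamp string.

    Leading whitespace is ignored; that tolerance is an assumption.
    """
    return comment.lstrip().startswith(PDE_STAMP)


def count_stamps(comments):
    """Count stamps received per student.

    `comments` is an iterable of (recipient, comment_text) pairs.
    This record layout is hypothetical, not an LMS export format.
    """
    counts = {}
    for recipient, text in comments:
        if is_pde_stamp(text):
            counts[recipient] = counts.get(recipient, 0) + 1
    return counts


# Hypothetical sample data for illustration only.
sample = [
    ("C", "&&& thanks for pushing me to think more deeply about this!"),
    ("K", "Great example, I had not considered that."),
    ("C", "  &&& this changed how I see imaginal coding"),
]
stamp_counts = count_stamps(sample)
print(stamp_counts)
```

A tally like this could help an instructor spot exemplary engagement quickly, though reading the stamped exchanges themselves remains the point of the strategy.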

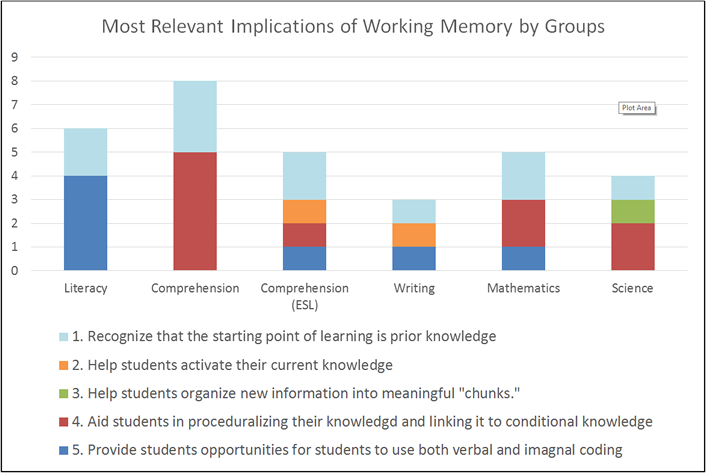

Finally, each week I provided whole-class feedback showing which implications were deemed most relevant and least relevant for the class and then for each group (figure 4). This feedback turned out to be a promising resource for reiterating key nuances, introducing new ideas, and recognizing exemplary engagement. For example, the fact that most of the literacy group selected implication 5 helped reveal and reinforce a key aspect of imaginal coding; this was easily illustrated by linking to a particularly cogent argument from that group. Alternatively, linking to the one wikifolio that selected implication 3 was an easy way for students to review what it means to "chunk" information and why that facilitates learning. I know from log data that most (but not all) students look at this feedback. My doctoral students and I are currently studying how to maximize the use and usefulness of this information when it is first posted and when students use it to guide their exam preparation.26

Figure 4. Example of whole-class feedback showing rankings by group
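As a rough illustration of how the whole-class feedback could be assembled, the sketch below tallies which implication each student ranked most relevant, for the class and for each group. The data layout, group names, and function name are assumptions made for this example; the article does not describe the actual survey export.

```python
from collections import Counter, defaultdict


def tally_most_relevant(responses):
    """Tally 'most relevant' selections overall and by group.

    `responses` is an iterable of (group, implication_number) pairs,
    a hypothetical layout for the ranking survey results.
    """
    overall = Counter()
    by_group = defaultdict(Counter)
    for group, implication in responses:
        overall[implication] += 1
        by_group[group][implication] += 1
    return overall, dict(by_group)


# Hypothetical responses for illustration only.
responses = [
    ("literacy", 5),
    ("literacy", 5),
    ("literacy", 2),
    ("math", 1),
    ("math", 5),
    ("science", 3),
]
overall, by_group = tally_most_relevant(responses)

# Report the most frequently selected implication for each group.
for group, counts in sorted(by_group.items()):
    implication, votes = counts.most_common(1)[0]
    print(f"{group}: implication {implication} ({votes} selections)")
```

Selections made by only one student (implication 3 in this made-up data) are easy to find in the same tallies, which matches the way the feedback links to unusual choices as well as popular ones.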

Principle 3: Grade Student Artifacts through Local Reflections

One of the most important features of this approach is that the contents of the wikifolios and the student comments are never directly graded. As the instructor, I prioritize my time searching for productive comments and exchanges so that I can commend and contribute. The wikifolios and interactions are assigned points toward a course grade via three carefully worded reflection prompts that students must respond to within one week of the original deadline. The first critical engagement prompt asks students to reflect on the intersection between their instructional goal and the assignment:

How suitable was your instructional goal and educational context for learning the ideas this week? Did your classmates have goals where these implications were more directly relevant? (You are not being asked to criticize your understanding or your work; rather you are critiquing the suitability of your instructional goal and experience for learning to use these ideas.)

The reflection on the long-term memory assignment from the student in the example above reflected thoughtfully on the universal relevance of long-term memory for learning:

The topic of long term memory is very important to my learning goal. I believe long term memory is suitable to almost any learning goal someone might have. There really aren't any learning targets we teach where the end goal is not to have students remember it for a long time. Since my learning goal has to do with reading, I believe it is one of the most relevant in terms of long term memory. Vocabulary and reading skills are things that need to be embedded in a student's long term memory. These are not skills that will just go away. We will keep building on these skills for the rest of their schooling and even after.

The second reflection prompt is used to recognize and motivate collaborative engagement:

Review the comments from your classmates and reflect on any insights that emerged in the discussions, anything particularly useful or interesting. Single out the classmates that have been particularly helpful in your thinking, both in their comments and from reading their wikifolios.

The assignment instructions for commenting tell students that their goal should be getting mentioned in their peers' reflections as often as possible. The student in the sample wikifolio wrote:

K gave me a great example for the question I asked in my post. I wasn't sure how to include both verbal and visual examples for my learning goal. However, C was especially helpful. She explained imaginal coding in a way I hadn't thought of before. Imaginal coding can be applied to anything that brings up an image in their heads. I can try to relate words to memories they have or prior knowledge by having them imagine these things. They will make associations through their own knowledge and thinking. It is nice to have it explained in a different way.

The wikifolio author awarded the badge to the second student for illustrating how the different features work together to support PDE. Note that in addition to further encouraging and rewarding PDE, the reflection itself is yet another example of PDE because it further elaborates on the relationship between imaginal coding and the wikifolio author's own professional practice.

The third reflection prompt concerns consequential engagement. This prompt builds on Melissa Gresalfi's research on dispositions27 and consequential engagement.28 The prompt pushes students to reflect more generally about the consequences of what they have learned across multiple contexts:

What are the consequences of what you learned this week for your instructional goal and in general? How would you teach or instruct differently? What are the real-world consequences of these ideas? Be specific.

The consequential reflection for the student in the example started with 180 words about strategies that might be used to stimulate prior knowledge and chunk information differently. It concluded with the following reflection on the potential consequences of the student's new knowledge about visual learning:

Finally, I got many helpful examples for incorporating both visual and verbal aspect[s] of teaching in my learning goal. I need to make learning vocabulary and using context clues more visual and not completely rely on verbal instruction. I like the idea of find[ing] a video, commercial, song, etc. that relates to a new word students are learning. I now have a much better sense of what will help my students take what I am teaching to the next level and be able to use it long after the 30 minute lesson is over.

This example nicely traces how this student initially struggled to uncover the implications of a complex disciplinary insight (linking verbal and visual information), benefitted from discussions with peers, and then provided compelling evidence of meaningful consequences for practice.

These reflections serve as both formative and summative assessments. They function formatively in that students have to think more deeply to consider how suitable their instructional goal was and what they will do differently in the future. Examination of wikifolio history files has confirmed that during the first few weeks, some students will go back and work on their wikifolios after they start writing the reflections. These history files also show that the collaboration reflection prompts wiki authors to respond to new comments. These experiences presumably shape future engagement in productive ways as well, by encouraging learners to think about them while working on the content of the wikifolios.29

These reflections also serve as summative assessments in that it is nearly impossible for students to post coherent reflections to these prompts without engaging meaningfully with the assignments and interacting with at least some of their peers. So, if student reflections are coherent and the wikifolio was posted by the deadline, students get all possible points for their wikifolios (in the Learning & Cognition course, students earn 5 points for the reflections on each of 14 wikifolios, for 70 possible points toward the 100 points total).30
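The point arithmetic can be sketched as follows. The 5 points per wikifolio, 14 wikifolios, and three 10-point module exams come from the article, and the one-point-per-day late deduction comes from the notes. The floor at zero and the function names are assumptions, and the sketch covers only coherent reflections because the article does not say how many points are removed for incoherent ones.

```python
WIKIFOLIOS = 14
POINTS_PER_WIKIFOLIO = 5
MODULE_EXAMS = 3
POINTS_PER_EXAM = 10

# 14 * 5 + 3 * 10 = 100 possible points
MAX_TOTAL = WIKIFOLIOS * POINTS_PER_WIKIFOLIO + MODULE_EXAMS * POINTS_PER_EXAM


def wikifolio_points(days_late: int = 0) -> int:
    """Points for one wikifolio with coherent reflections.

    One point is deducted per day late; never dropping below zero
    is an assumption, not a stated policy.
    """
    if days_late < 0:
        raise ValueError("days_late must be non-negative")
    return max(0, POINTS_PER_WIKIFOLIO - days_late)


def course_total(days_late_per_wikifolio, exam_scores) -> int:
    """Sum wikifolio points and module exam scores into a course total."""
    if len(days_late_per_wikifolio) != WIKIFOLIOS:
        raise ValueError("expected one entry per wikifolio")
    if len(exam_scores) != MODULE_EXAMS:
        raise ValueError("expected one score per module exam")
    wiki = sum(wikifolio_points(d) for d in days_late_per_wikifolio)
    return wiki + sum(exam_scores)


# A student who posts everything on time and scores 9 on each exam.
example_total = course_total([0] * WIKIFOLIOS, [9, 9, 9])
print(example_total, "of", MAX_TOTAL)
```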

Note two important additional points about this grading practice:

- First, it takes almost no time for me as an instructor. When I assign points, I insert a few comments in the grading feedback system to let students know I have read the reflections and to commend noteworthy aspects (no more than a minute per wikifolio). My goal is to keep these private interactions between me and my students at a bare minimum, because they are so time consuming and inefficient.31

- The second and more important point is that grading the actual content of the wikifolio and the reflections works against the goal of fostering PDE. Grading content beyond making sure the work is complete leads students to demand precise guidelines, detailed rubrics, and extensive individualized feedback (i.e., "Is this what you want???"). The key point is to not use artifacts to assess what students know and might be able to do. That is the job of conventional assessments.

Principle 4: Let Students Self-Assess Their Understanding Privately

This principle suggests giving students the opportunity to privately assess their conceptual understanding of the "big ideas" and their knowledge of some of the specifics after each assignment and/or before taking exams. I have experimented with this principle a lot over the years, and it continues to evolve. Currently, each wikifolio assignment concludes with a self-assessment consisting of 6–10 essay items. Students are encouraged (but not required) to complete the self-assessment before they move on to the next wikifolio and/or before they take the three module exams. These interactions are "private" in that they occur only between the student and the LMS.32

The instructions encourage students to complete the practice test from memory to see if they are prepared for the time-limited multiple-choice exam. The practice essay items are challenging, and students don't see the correct answer right away. The instructions for interpreting their performance are carefully worded: if they are unable to answer more than one of the practice items, they need to go back and engage more with their peers and the text. In particular, the instructions tell students to review the weekly feedback and review and discuss the examples highlighted. Unlike more typical "formative assessments," the instructions do not encourage students to study the correct answers provided.

The design and wording of these assessments draw directly from my own research on what I call "multi-level" assessment33 and my critiques of prevailing models of formative assessment.34 This principle and the way it is enacted in this course reflect three participatory assumptions about assessment. The first assumption is that once disciplinary knowledge has been transformed into "known answer" questions, there is little more to be learned other than the correct answer to that question. This knowledge is mostly useful in the event that students see that same question again. That is unlikely in the real world, and it never happens in my classes.

The second assumption behind this principle is that assessments can only capture a fraction of everything that might be learned, even in a single week of class. This argues against spending a lot of time focusing on the knowledge that ended up on the assessment and the particular way that knowledge was represented.

My third assumption is that assessments are imprecise indicators of what students "know." This is an issue particularly with contextual and consequential knowledge and with instructor-developed assessments. While some content can be "directly" assessed accurately and reliably, much content cannot. Furthermore, focusing more on content that can be directly assessed or distorting more contextual knowledge so that it can be directly assessed ends up removing the most consequential content from the course.

This principle responds directly to the way that many approaches to CBE devote massive amounts of time and attention to assessment. This includes (a) instructors designing and securing assessments, (b) students completing assessments, (c) instructors grading completed assessments, and (d) students and instructors reviewing correct answers. In some of the more extreme CBE approaches, these activities are the instruction. This might make sense in the few domains and contexts where all of the content is factual, procedural, and meaningful out of context — but I have yet to encounter such a course.

One of the challenges I am currently struggling with follows from the expectations that students bring to class. Presumably based on prior experience, some students access the practice assessment items but do not enter answers (despite instructions to do so). I assume that these students are studying the answers to the practice items in order to prepare for module exams. While I have yet to study this behavior systematically, I have noticed that these students typically perform the lowest on the final exam.

Principle 5: Measure Aggregated Achievement Discreetly

This principle follows from my prior efforts at using participatory theories of learning to simultaneously improve and evaluate instructional programs.35 The notion of "aggregated achievement" highlights the assumption that multiple-choice achievement tests measure achievement at the level of groups of people and reliably and efficiently compare how much people know about a domain — in the aggregate. But the very feature that makes them good for doing so (machine-scoreable multiple-choice items) makes achievement tests problematic indicators of whether or not an individual "knows" something specific.

The notion of measuring achievement "discreetly" highlights my assumption that multiple-choice achievement tests should be assigned a narrow role in the instruction-assessment "ecosystem" associated with a given course. Such tests should be used primarily to evaluate the success of that ecosystem, measure course improvement over time, and compare impact across different courses targeting the same standards. Such tests should be used secondarily to motivate prior engagement, but they should never be used to directly drive instruction. Of course, instructors also should be conscious of the standards (or curricular aims) that their course targets, but studiously avoid directly exposing students to the specific associations used to represent course knowledge on their achievement tests.36

In most of my courses, I use time-limited multiple-choice exams worth relatively few points. These items align more to targeted standards and less to the specific ways my course targets those standards. In my Learning and Cognition class, each of the three module exams is worth 10 points (out of 100). I select or create items that can't be looked up quickly (because they are worded in a way that requires looking up all four or five responses), as well as challenging "best answer" items that can't be looked up at all (because they require deep understanding of disciplinary nuances). To maintain a reasonable level of test security, I only let students see one item at a time, and I only give feedback on overall scores (rather than individual items). I also regularly introduce new items and use item analysis (a useful feature now included in most LMSs) to identify and remove items with poor psychometric properties.37 Generally speaking, I refine my tests so that just one or two students get perfect scores and the mean score falls near 90 percent. I also try to make sure that the scores are normally distributed (i.e., the familiar bell curve).
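For readers unfamiliar with item analysis, the sketch below computes two common statistics from a matrix of scored responses: item difficulty (the proportion of students answering correctly) and a discrimination index (the correlation between an item and the total score on the remaining items). This is an illustrative implementation, not the routine built into any particular LMS; the function names and the 0/1 response layout are assumptions.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation, or None when either variable has no variance."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)


def item_analysis(responses):
    """Compute difficulty and discrimination for each item.

    `responses` holds one list per student of 0/1 item scores
    (1 = correct). All students must have answered the same items.
    """
    n_items = len(responses[0])
    report = []
    for i in range(n_items):
        item_scores = [student[i] for student in responses]
        # Score on all other items, so the item is not correlated with itself.
        rest_scores = [sum(student) - student[i] for student in responses]
        report.append({
            "item": i,
            "difficulty": sum(item_scores) / len(item_scores),
            "discrimination": pearson(item_scores, rest_scores),
        })
    return report


# Hypothetical scored responses: four students, three items.
scored = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 1],
]
report = item_analysis(scored)
for row in report:
    print(row)
```

An item with a negative discrimination value is answered correctly more often by students who do poorly on the rest of the test, which makes it a candidate for revision or removal.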

One of the big advantages of my approach to testing is that it is almost entirely automated. As with the previous principle, the biggest challenge I face in enacting this principle is the expectations that students bring to class. Many "good" students are used to getting perfect scores on classroom assessments; many students are also used to contesting answers and arguing for additional points. This seems common in graduate-level courses, and particularly in domains like education and the humanities. Even though the average-scoring student in my current class lost around one point on each of the three exams, some still complained about the "best answer" items, wanted to know which items they missed, and expressed concern about their grade. I try to head off these concerns with my instructions and feedback on the exams, but I still end up spending a fair amount of time exchanging private messages with some of the students after the first and even the second exam.

Conclusions and Future Work

My doctoral students and I are currently analyzing the wealth of data that the Canvas LMS provided us for this course. We have previously published evidence of the effectiveness of earlier versions of this course38 and initial learning analytics for the Educational Assessment BOOC.39 We have documented impressive levels of individual engagement (wikifolios averaging around 1,000 words) and social engagement (averaging five comments of about 100 words). Coding of comments has shown that the vast majority of them (over 90 percent) reference the topic of the assignment, and 25–50 percent reference a specific context of practice.

Much of the effort that my graduate assistants and I invested in designing and running this latest course went to overcoming the limits of the current enterprise version of the Canvas LMS. For example, unlike most other LMSs, Canvas does not allow threaded comments on student-generated pages. Our workaround involved having the student and the instructor post their comments and questions right on each other's pages, using tabbing to denote threads.40 Another limitation of the current version of Canvas is that the standard page-generation facility can only accommodate 200 pages. We uncovered a relatively straightforward workaround to this limitation as well.

One of the biggest advantages of CBE that this current course did not support is self-paced learning. The ability to start and stop instruction at any time appeals to many students and provides the flexibility that many expect in online instruction. Starting in summer 2015 my Educational Assessment BOOC introduced two new course features needed to support self-paced learning. The first is a method for archiving wikifolios so that students who have finished working with a wikifolio can choose to (a) keep it active to generate a notification if someone leaves a comment, (b) archive it so that an e-mail is generated if someone posts a comment, (c) archive it so that subsequent learners can view it, or (d) delete it.

The second feature needed to support self-paced learners was a new student wikifolio home page that displayed wikifolios with all of the information, according to wikifolio assignment and course networking group. This has already allowed students in the 2015 course who are working ahead to interact with students from the 2014 course; it also lets newcomers enter the course and work at their own pace. Additionally, recently secured funding supports building prototypes of open learning modules for pre-calculus and calculus using these methods. The resulting modules will be entirely open, self-paced, and instructor-free.

While much work remains, it feels like significant progress has been made and that PLA's five principles bring a useful coherence and focus to these efforts. Ongoing developments around LTI are now making it possible to develop external LMS applications for wikifolios, allowing student interaction and work to take place on the open Internet while keeping grading functions, private interaction, and formal tracking of competencies within the secure and password-protected LMS. These are promising developments that I expect will allow many innovators to experiment with a wide range of new approaches to online and hybrid instruction, including the example introduced here.

Acknowledgments

Joshua Quick contributed to the writing of this article. I thank Joshua, Rebecca Itow, Thomas Grenfel Smith, Suraj Uttamchandani, Tara Kelly, Karthik Bangera, Garrett Poortinga, and others for their help designing and supporting these courses. I also thank the many students in my online courses over the years for their enthusiasm and patience. This research was supported directly or indirectly by grants from Google, the John D. and Catherine T. MacArthur Foundation, and Indiana University's Office of the Vice Provost of Information Technology and Office of the Vice Provost of Undergraduate Education.

Notes

- John R. Anderson of Carnegie Mellon University is one of the leading scholars in this tradition. One of his classic texts, Cognitive Psychology and Its Implications, was first published by Freeman in 1980; the eighth edition came out in 2014. This tradition is now most clearly represented by the artificially intelligent cognitive tutors associated with Carnegie Mellon.

- This is the so-called "TPCK" or "Technological Pedagogical Content Knowledge" that seems in particularly short supply when it comes to online teaching, where many instructors are expected to teach many different courses across multiple platforms. Matthew J. Koehler and Punya Mishra, "Introducing TPCK," Handbook of technological pedagogical content knowledge (TPCK) for educators (2008): 3–29.

- For example, John R. Savery and Thomas M. Duffy, "Problem-based learning: An instructional model and its constructivist framework," Educational Technology, Vol. 35, No. 5 (1995): 31–38.

- For a particularly balanced discussion of the three "grand theories" of learning presented here, see Robbie Case, "Changing views of knowledge and their impact on educational research and practice," in The handbook of education and human development, D. Olson and N. Torrance, Eds. (Oxford: Blackwell, 1996), 75–99.

- For a helpful discussion, see Roger M. Harris, Barry Hobart, and David Lundberg, Competency-based education and training: Between a rock and a whirlpool (South Melbourne, Australia: Macmillan Education AU, 1995).

- John Seely Brown and Richard J. Adler, "Minds on Fire: Open Education, the Long Tail, and Learning 2.0," EDUCAUSE Review, Vol. 43, No. 1 (January 18, 2008): 16–20.

- This view is nicely summarized in the principles of learning that emerged at the Institute for Research on Learning that Seely Brown co-founded in 1986.

- The approach that I present in this article emerged over two decades of studies carried out to support this hypothesis and explore its practical implications with various learning technologies. I recently summarized this research in the article "A situative response to the conundrum of formative assessment," Assessment in Education: Principles, Policy & Practice, Vol. 22, No. 2 (2015): 202–223.

- The Institute for Research on Learning's Seven Principles of Social Learning provide a concise overview of social and participatory theories of learning.

- James G. Greeno, "The situativity of knowing, learning, and research," American Psychologist, Vol. 53, No. 1 (1998): 5.

- Technically speaking, I have been conducting iterative cycles of design-based research (DBR), a signature method of learning scientists. Rather than conducting formal experiments to test "basic" theories, DBR builds "local" theories in the context of efforts to reform educational practice.

- Daniel Hickey and Andrea Rehak, "Wikifolios and Participatory Assessment for Engagement, Understanding, and Achievement in Online Courses," Journal of Educational Multimedia and Hypermedia, Vol. 22, No. 4 (November 2013): 229–263.

- Daniel Hickey, "Open Source Options are Providing Alternatives for Core Services," The Evolllution: Illuminating the Lifelong Learning Movement, January 2014.

- Daniel T. Hickey, Tara Alana Kelly, and Xinyi Shen, "Small to Big before Massive: Scaling Up Participatory Learning and Assessment," Proceedings of the Fourth International Conference on Learning Analytics and Knowledge (New York: ACM, 2014), 93–97.

- Roger H. Bruning, Gregory J. Schraw, and Monica M. Norby, Cognitive psychology and instruction, 5th ed. (Prentice-Hall, Inc., Pearson, 2011). This text is particularly well suited for this approach because each chapter concludes with 5–8 "Implications for education." However, I have found that most textbooks and most assigned readings are organized in a way that allows them to be "problematized" using the ranking feature that is central to PLA.

- To see a description of this activity: Daniel Hickey and Andrea Rehak, "Wikifolios and participatory assessment for engagement, understanding, and achievement in online courses," Journal of Educational Multimedia and Hypermedia, Vol. 22, No. 4 (2013): 407–441. Students are assigned to formal groups within the LMS for this group activity. But for the first two modules in this course and in all of my other courses, group membership is informal and fluid, intended to help students construct their personal identity, and is signaled by simply arranging the student homepages accordingly.

- Randi A. Engle and Faith R. Conant, "Guiding principles for fostering productive disciplinary engagement: Explaining an emergent argument in a community of learners classroom," Cognition and Instruction, Vol. 20, No. 4 (2002): 399–483; Randi A. Engle, "Productive Disciplinary Engagement: Origins, Key Concepts, and Developments," in Design research on learning and thinking in educational settings: Enhancing intellectual growth and functioning, David Yun Dai, ed. (Routledge, 2012).

- This is not to say that some instructors in some online courses don't use discussion forums productively. But in my experience they are seldom productive for all learners. A common problem with forums is that the most advanced students and the instructor will draw on their more extensive experience to discuss ideas at a level of abstraction that is quite opaque for the less experienced students (who are, after all, the real "learners" in the class). In response, instructors sometimes post specific questions and require students to post responses — often resulting in redundant and dull exchanges that are not really discussions at all. In my course, discussion forums are only used to ask and respond to general questions. Normally I put up one forum each for questions about the class in general, technology, and specific assignments.

- Rogers Hall and Andee Rubin, "There's five little notches in here: Dilemmas in teaching and learning the conventional structure of rate," in Thinking practices in mathematics and science learning, James G. Greeno and Shelley V. Goldman, eds. (Mahwah, NJ: Lawrence Erlbaum, 1998): 189–235.

- Some approaches may go so far as to define competencies for "conditional" knowledge (knowing "what" and "when"). The problem is that the number of conditional competencies explodes exponentially when even a small number of contexts of use are introduced.

- It is worth noting that traditional models of cognition include "conditional" knowledge, which concerns knowing "when" and "why." A strictly CBE approach would presumably define additional competencies regarding the context where procedural and declarative competencies are useful. I contend that doing so even with a few contexts would lead to an exponential growth in the number of competencies and that these contexts would inevitably be less meaningful for many of the students.

- Problematize disciplinary knowledge from the learner's perspective; Give students authority and position them as local experts; Establish disciplinary accountability and require students to defend their positions respectfully; and Provide resources students need to do this.

- Hundreds of studies have shown that extrinsic rewards undermine engagement and intrinsic motivation. These concerns have been popularized by writers like Alfie Kohn, Punished by rewards: The trouble with gold stars, incentive plans, A's, praise, and other bribes (Houghton Mifflin Harcourt, 1999). I believe that a participatory approach to incentives addresses these concerns nicely; for example, Daniel T. Hickey, "Engaged participation versus marginal nonparticipation: A stridently sociocultural approach to achievement motivation," The Elementary School Journal, Vol. 103, No. 4 (March 2003): 401–429.

- Because this particular course is on learning and cognition, it is appropriate to actually call them "PDE stamps" and explain to students what PDE is. In my other courses, peer promotions are awarded for "exemplary engagement."

- I studied these peer recognitions more extensively in earlier versions of this course and in my Educational Assessment course where the peer endorsement and promotion features are actual buttons on the wikifolios and when clicked display the peer's name (for endorsements) and justification (for promotions). These analyses have shown that many students verify their "lurking" by endorsing without leaving comments, and the warrants for the peer promotions themselves represent an additional level of PDE. See Daniel T. Hickey, Joshua D. Quick, and Xinyi Shen, "Formative and summative analyses of disciplinary engagement and learning in a big open online course" in Proceedings of the Fifth International Conference on Learning Analytics and Knowledge (New York: ACM, 2015), 310–314.

- This is a rather laborious task in the current version of Canvas. Students are required to complete a survey after completing their wikifolio to generate this data. We have automated and streamlined many aspects of the ranking and feedback task in the Educational Assessment course and are currently designing versions of those activities that can be used as external applications in Canvas via Learning Tools Interoperability (LTI) protocols.

- Melissa Sommerfeld Gresalfi, "Taking up opportunities to learn: Constructing dispositions in mathematics classrooms," Journal of the Learning Sciences, Vol. 18, No. 3 (2009): 327–369.

- Melissa Gresalfi and Sasha Barab, "Learning for a reason: Supporting forms of engagement by designing tasks and orchestrating environments," Theory into Practice, Vol. 50, No. 4 (2011): 300–310.

- This is an example of an important concept from cultural anthropology known as "prolepsis," where activity in the present fundamentally shapes activity in the future.

- In most of my current courses, I maintain a strict policy for weekly deadlines to ensure a critical mass of discussion around that deadline. I deduct one point per day for each day students post after the deadline. I usually end up deducting points from a few students in the first couple of weeks, and then a few more throughout the semester. As discussed in the conclusion, I am now starting to let my open (non-credit) students complete courses at their own pace while still interacting with others.

- The only exception is when a student's work and interaction have been notably weak. Typically, I warn students that they will likely do poorly on the exam. Occasionally I have had students post minimal responses; in those cases I take away points because the reflections do not meet the criteria of "coherence."

- I can and do look at the responses, but never grade them. I have experimented with grading the responses, but find grading to be overly time-consuming and time-sensitive.

- Daniel T. Hickey and Steven J. Zuiker, "Multilevel assessment for discourse, understanding, and achievement," Journal of the Learning Sciences, Vol. 21, No. 4 (2012): 522–582.

- Hickey, "A situative response."

- Daniel T. Hickey, Steven J. Zuiker, Gita Taasoobshirazi, Nancy Jo Schafer, and Marina A. Michael, "Balancing varied assessment functions to attain systemic validity: Three is the magic number," Studies in Educational Evaluation, Vol. 32, no. 3 (2006): 180–201.

- I contend that "teaching to the test" is far more pervasive and problematic than many instructors or researchers acknowledge. For example, I believe that many instructors use the foils (incorrect responses) on their own tests as counterexamples in their lectures. Because the threshold for recognizing (as compared to recalling) specific information is so low, such practices introduce what validity theories call "construct-irrelevant easiness that artificially inflates student performance." For more information, see Samuel Messick, "Validity of psychological assessment: validation of inferences from persons' responses and performances as scientific inquiry into score meaning," American Psychologist, Vol. 50, No. 9 (September 1995): 741.

- This is actually a lot easier than most people realize. It is really about making sure that the most difficult items are answered correctly by the students who answered all of the other items correctly.

- Hickey and Rehak, "Wikifolios and participatory assessment."

- Hickey, Kelly, and Shen, "Small to big before massive."

- Instructure reportedly is adding the functionality to Canvas. Indiana University High School is using an open-source version of Canvas that the school modified to allow threaded comments on student-generated pages. In my most recent BOOC in Canvas (Introduction to Educational Data Sciences, summer 2015), students generated a new discussion forum for each wikifolio and used the header of the discussion forum as their wikifolio. This introduced the additional challenge of slow page loads, however.

© 2015 Daniel Hickey. The text of this EDUCAUSE Review online article is licensed under the Creative Commons Attribution-NonCommercial 4.0 license