Key Takeaways

- Nudges, subtle interventions developed as a concept in the science of choice, can influence people's actions without infringing on their freedom of choice.

- The wealth of digital data in higher education makes it possible to use that data to nudge students toward better achievement and persistence.

- The next step is to identify the correlations that machine recommendations can draw on and to base practices on patterns found in the data.

- A vibrant community is forming for action, basing new practices on successful models of pattern analysis, implementation, and evaluation.

nudge vb (tr)

1. to push or poke (someone) gently, esp with the elbow, to get attention; jog. 2. to push slowly or lightly: as I drove out, I just nudged the gatepost. 3. to give (someone) a gentle reminder or encouragement

— TheFreeDictionary

Richard Thaler and Cass Sunstein wrote Nudge: Improving Decisions About Health, Wealth, and Happiness,1 a book about the science of choice and how it can be used to influence consumer action. They presented evidence for designing subtle interventions that influence personal decisions without infringing on the consumer's freedom of choice. Drawing on behavioral economics research at the University of Chicago, the authors demonstrated how choice architecture can successfully nudge people toward better habits in eating, investing, charitable giving, and care for the environment. Acknowledging the multitude of influences inherent in personal decisions and in freedom of action, Thaler and Sunstein made a compelling case for using subtle interventions across diverse individual and group behaviors. Their book gives numerous examples of choice architects designing creatively for social good. (Visit YouTube for a demonstration of a nudge influencing a community's use of stairs versus an escalator.)

Could we bring choice architecture to higher education based not on expert design but on our wealth of digital data? Could we use machine-based nudges for our learners to promote the engagement, focus, and time-on-task behavior at the core of student success? Could we use the nudge of comparative data to influence factors like procrastination, meeting deadlines, coming to class — or to the class learning management system? This article makes the case that not only can our data nudge students to better achievement and persistence but also that the knowledge embedded in our machines makes it possible to do so without the guesswork of human design and intervention.

Institutional Interventions for Retention

Where do we start? Retention research2 in higher education populations can only provide loose correlations between institutional efforts and retention. Studies have suggested that effective institutional interventions are best aimed at:

- More informed enrollment

- Flexible courses allowing for reduced seat time

- Stronger counseling and academic support

- Nurturing social connections

We do not know why some students graduate and others do not, but for those who do, academic achievement in course work is related to persistence and to the ability to choose courses wisely (both to stay on track and to fit busy schedules). Still, do we really know how to improve retention? Certainly retention efforts have become a priority, and many institutions are seeing significant success. Most will tell you that in recent years, higher education is doing something right.

Arizona State University's (ASU) freshman retention rate hovered at 68 to 69 percent in the mid-1990s. It climbed to 75 to 76 percent in the mid-2000s, reaching 81 percent in 2009 (see Figure 1). Did this improvement result from hard work? Or from an economy that drives students to stay in school? We do not know. We do offer stronger academic counseling and support services, and more directed core choices in course selection, to help students stay on track. We also flounder among different ideas in search of ways to engage learners, including initiatives like ASU on Facebook. We believe we are doing the right things, but we do not yet know which of them will produce positive results. What if retention numbers have improved because of outside factors such as the economy, the low wages that come with lacking a degree, or easier entry into remedial higher education programs? How would we know whether our retention efforts actually cause the improvement, or whether a spurious correlation is leading us in the wrong direction?

Figure 1. ASU's Freshman Retention Rate, 1990–2010

For more on correlation versus causation, and the consequences of mislabeling one as the other, see Colleen Carmean's SlideShare presentation.

More Knowledge Needed

What do we know? We know that a strong correlation exists between academic achievement and retention3: students who do well in their course work are more likely to persist. But aside from tutoring and stronger remedial course work options, we know little about academic achievement and the motivators within our support systems. Historically, we have chosen many avenues to support students in the process of education while leaving motivation within course work largely up to the student. Institutionally, the science of choice has been too data-complicated to implement. Science is hard; free pizza is easy. Still, pizza math alone leaves us wondering what happened to the 19 percent of last year's ASU freshman class who didn't return. We really do not know.

Certainly the literature confirms that lower-division retention correlates with increased personal support for the student, but many students fall through the cracks: students with excellent grades and with poor grades; students who stayed on course and who took electives; students from high and low socioeconomic levels. All the variables and correlations point to one clear conclusion: students must choose to persist.

As we see more independent, working, and older students in higher education (see Greater Expectations), we propose that today's learners might not need nurturing; they might just need a nudge. Nudge analytics, or machine recommendations based on patterns found in the data, might be a better way of reaching these students: a personalized digital nudge to study, to come to class, to read the assigned chapter, to submit the assignment due tomorrow. Because the machine can find success patterns in the data, students could receive reminders in the form of a simple, objective, nonintrusive nudge. An automatically generated message could point out that "Based on historical data for this point in the semester, 80 percent of students who log in as infrequently as you (1.3 times per week) finish with a D or lower, and 60 percent fail. You can improve your projected grade by 24 percent by logging in every 3 days with more than 2 hours of time on task." Never has it been easier to use analytics in proactive outreach to influence and encourage behavior associated with persistence.
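A message like the one above can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical list of past students' login rates at the same point in the semester together with their final letter grades; the `PastStudent` record, the `nudge_message` function, and the 0.5-logins-per-week similarity window are all invented for the example and do not come from any institution's system.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical record: one past student at the same point in an earlier semester.
@dataclass
class PastStudent:
    logins_per_week: float  # login rate observed at this point in the semester
    final_grade: str        # final letter grade: "A", "B", "C", "D", or "F"


def nudge_message(current_rate: float, history: List[PastStudent],
                  window: float = 0.5) -> Optional[str]:
    """Compare a student with past students whose login rate was similar.

    `window` (an illustrative assumption) sets how close a past student's
    login rate must be to count as a peer. Returns None when there are no peers.
    """
    peers = [s for s in history
             if abs(s.logins_per_week - current_rate) <= window]
    if not peers:
        return None  # no comparable history: stay silent rather than guess

    d_or_lower = sum(1 for s in peers if s.final_grade in ("D", "F"))
    failed = sum(1 for s in peers if s.final_grade == "F")
    pct_low = round(100 * d_or_lower / len(peers))
    pct_fail = round(100 * failed / len(peers))

    return ("Based on historical data for this point in the semester, "
            f"{pct_low} percent of students who logged in about as often as you "
            f"({current_rate} times per week) finished with a D or lower, "
            f"and {pct_fail} percent failed.")


# Hypothetical history for illustration only.
history = [PastStudent(1.0, "F"), PastStudent(1.5, "D"), PastStudent(1.2, "F"),
           PastStudent(1.4, "C"), PastStudent(5.0, "A")]
print(nudge_message(1.3, history))
```

Returning `None` when no comparable history exists keeps the nudge objective: the machine says nothing rather than inventing a comparison.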

Consider a simple analogy: a global positioning system used while driving. Most systems track not only the vehicle's location but also its speed. When a driver exceeds the legal speed limit on the way to a destination, the system provides a simple nudge by changing the displayed speed from white to red numbers. Some higher-priced systems have a "traffic enhanced" feature that incorporates current road and traffic conditions and suggests alternative routes so the driver can avoid congestion.

Analytics and Data Mining Leading to Action

Summative studies already show us the value of using embedded data to better review, project, and understand patterns and emerging trends to shed light on learner success and retention (see especially EDUCAUSE work on the analytics theme). Higher education's strongest evidence for the use of academic analytics appears in an earlier issue of EQ, where Kim Arnold of Purdue University made a strong case for summative analytics that allow faculty, advisors, and the students themselves to look at data as a means of predicting student performance.4

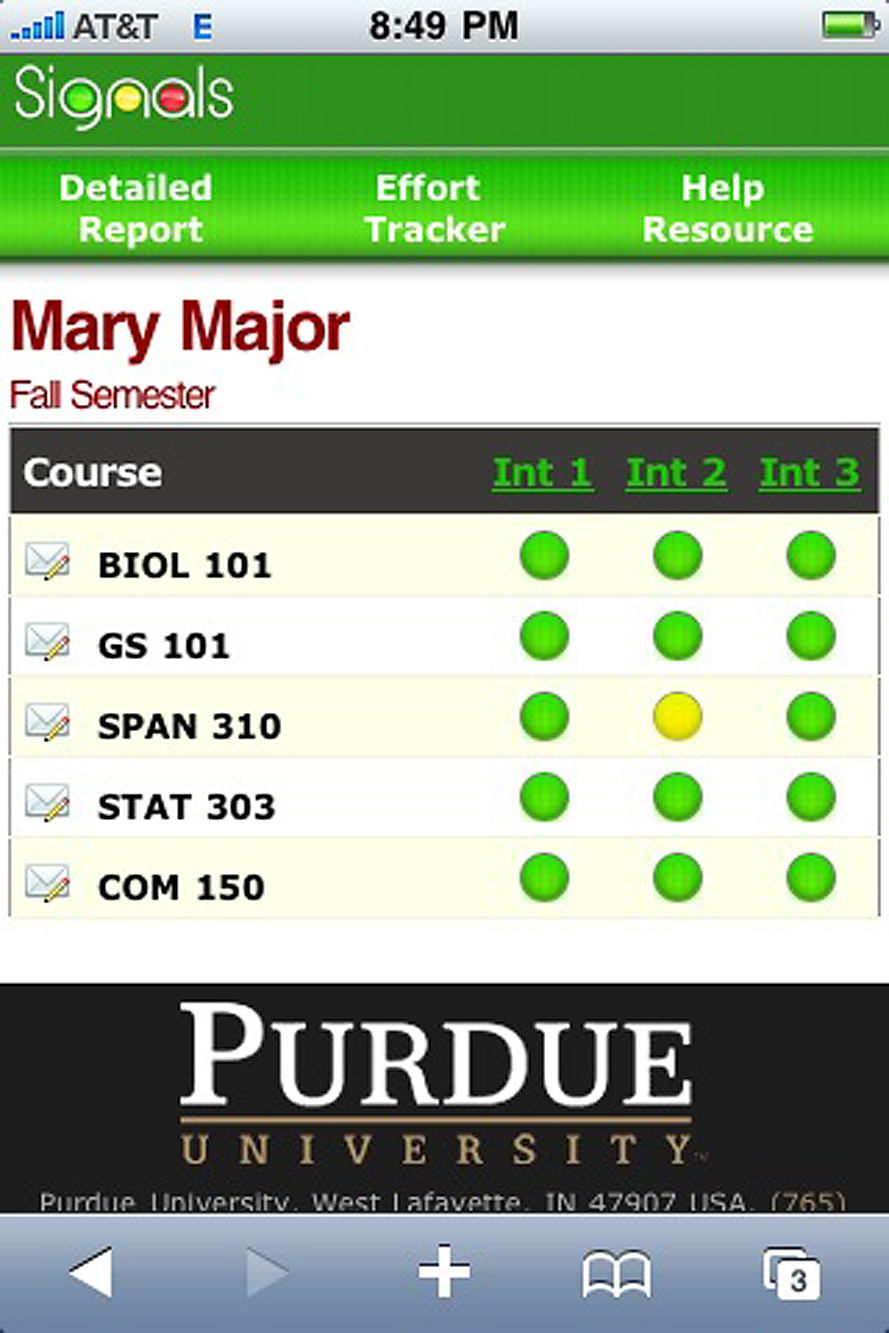

Using historical patterns mined from data in the institutional learning management system, Purdue's Signals initiative combines predictive modeling with data mining and nudges learners by making visible the relationship between their commitment and trouble ahead. Based on an algorithmic assessment of risk, a student's performance in a Blackboard course is summarized as a stoplight, with red and yellow as warning signs and green as encouragement (see Figure 2).

Figure 2. Screen from Purdue's Signals

Web image used with permission from Purdue
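The stoplight idea can be illustrated with a toy risk score. This sketch is not Purdue's Signals algorithm, which the article does not describe: the two inputs (current grade and LMS activity relative to the class median), the weights, the red/yellow cutoffs, and the suggested actions are all assumptions chosen only to show how a score maps to a light and then to a recommendation.

```python
from typing import Tuple

# Illustrative weights and cutoffs only; they are assumptions, not Purdue's model.
GRADE_WEIGHT = 0.6
EFFORT_WEIGHT = 0.4
RED_AT = 0.5
YELLOW_AT = 0.3

# Hypothetical follow-up actions for each light.
ACTIONS = {
    "red": "Meet with your instructor or an advisor this week.",
    "yellow": "Review this week's materials and check upcoming due dates.",
    "green": "You're on track. Keep up your current pace.",
}


def risk_score(grade_fraction: float, effort_ratio: float) -> float:
    """Return a risk score in [0, 1]; higher means more at risk.

    grade_fraction: current course grade as a fraction of points possible (0-1).
    effort_ratio: the student's LMS activity divided by the class median.
    """
    grade = min(max(grade_fraction, 0.0), 1.0)
    effort = min(max(effort_ratio, 0.0), 1.0)  # cap so extra clicks can't mask low grades
    return GRADE_WEIGHT * (1 - grade) + EFFORT_WEIGHT * (1 - effort)


def stoplight(score: float) -> str:
    """Map a risk score to a stoplight color."""
    if score >= RED_AT:
        return "red"
    if score >= YELLOW_AT:
        return "yellow"
    return "green"


def recommend(grade_fraction: float, effort_ratio: float) -> Tuple[str, str]:
    """Return the stoplight color and a suggested action for one student."""
    color = stoplight(risk_score(grade_fraction, effort_ratio))
    return color, ACTIONS[color]


print(recommend(0.9, 1.2))   # strong grades, above-median activity
print(recommend(0.7, 0.5))   # middling grades, half the median activity
print(recommend(0.5, 0.25))  # weak grades, little activity
```

Attaching an action to each color is what turns a summative display into a machine recommendation; in practice the weights and cutoffs would have to be fitted to historical outcomes rather than picked by hand.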

These data could easily be repurposed to provide machine recommendations for action. Further, these data might be collected automatically and error-free, without faculty requests or intervention.

A smaller initiative is underway at ASU, where instructors in the online Criminal Justice and Criminology bachelor's program are trained and encouraged to turn on course and statistics tracking in Blackboard. Data is collected to determine how content is used, how effective it is, and how engaged students are. Based on course data (who accessed this content, when, and for how long), the program matches actions with outcomes. If material is not being accessed at the course level, the instructor needs to rethink it: a nudge toward quality courses. If material is not being accessed at the student level, the student needs to be contacted. Because the Grade Center makes submissions easy to track, instructors can monitor assignment persistence. If a student is 12 hours from an important due date and has not submitted the assignment, a nudge e-mail can be sent. The challenge is to turn this capability into machine-based nudge processes.
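The three rules described above (course-level access, student-level access, and the 12-hour submission check) can be written as small functions, which is one step toward the machine-based process the paragraph calls for. The sketch assumes a hypothetical access log of (student, content item) pairs and a set of students who have submitted; it does not use any Blackboard API or export format, and the 50 percent cutoff for "not being accessed" is an illustrative assumption.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Set, Tuple

# Hypothetical log format: one (student_id, item_id) pair per content view.
AccessLog = Iterable[Tuple[str, str]]


def viewers_of(log: AccessLog, item: str) -> Set[str]:
    """Students who opened a given content item at least once."""
    return {student for student, viewed in log if viewed == item}


def underused_items(log: AccessLog, roster: Set[str], items: List[str],
                    min_share: float = 0.5) -> List[str]:
    """Course level: items opened by fewer than `min_share` of enrolled students."""
    if not roster:
        return []
    log = list(log)  # allow several passes over the log
    return [item for item in items
            if len(viewers_of(log, item) & roster) / len(roster) < min_share]


def students_to_contact(log: AccessLog, roster: Set[str], item: str) -> List[str]:
    """Student level: enrolled students who never opened the item."""
    return sorted(roster - viewers_of(log, item))


def deadline_nudges(roster: Set[str], submitted: Set[str], due: datetime,
                    now: datetime, window: timedelta = timedelta(hours=12)) -> List[str]:
    """Students with no submission once the due date is within `window`."""
    if not (timedelta(0) <= due - now <= window):
        return []  # too early, or the deadline has already passed
    return sorted(roster - submitted)


# Hypothetical course data for illustration.
roster = {"ana", "ben", "cy", "dee"}
log = [("ana", "week1"), ("ben", "week1"), ("cy", "week1"), ("ana", "case_study")]
due = datetime(2010, 3, 1, 23, 59)

print(underused_items(log, roster, ["week1", "case_study"]))  # ['case_study']
print(students_to_contact(log, roster, "week1"))              # ['dee']
print(deadline_nudges(roster, {"ana"}, due, now=datetime(2010, 3, 1, 12, 0)))
```

The course-level result goes to the instructor as a prompt to rethink material, while the student-level and deadline results become the list of nudge e-mails to send.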

Next Steps

The possibilities and challenges ahead are becoming clearer to a growing community of analysts, coders, and assessment experts. These data geeks are building intelligent agency into pervasive data collection so that it does something to enrich the learner's experience (see the Action Analytics community site). Understanding and creating data-based intervention systems is an emerging field, and institutions that aren't working out how to move from data collection to predictive analytics will quickly be left behind. At the recent WCET conference, Clark Quinn (Learnlets) suggested that the work of moving analytics to action rests on team design for merging "front-end/back-end services" and that this integration will be at the core of a new Web 3.0 world. At the same conference, Phil Ice predicted that skill in guiding this integration will make for a hot career in higher education within three to five years.

Consider the possibilities: data collected via mobile phones, clickers, or radio frequency identification can now be used for auto-contact or to collect attendance information about the student. The mobile phone can be used to provide a nudge directly to the student in response to patterns found to affect retention (number of logins, time on task, meeting deadlines).
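As a sketch of how such data might feed a phone-based nudge, the code below computes attendance from a hypothetical log of clicker or RFID reads and composes a short text message only when attendance, logins, or missed deadlines cross a threshold. The log format, thresholds, and message wording are assumptions for illustration, and actually delivering the text (through an SMS gateway, for example) is left out.

```python
from typing import Iterable, Optional, Set, Tuple

# Hypothetical scan log: one (student_id, session_id) pair each time a clicker
# response or RFID card read is recorded during a class session.
ScanLog = Iterable[Tuple[str, str]]


def attendance_rate(scans: ScanLog, sessions: Set[str], student: str) -> float:
    """Share of held sessions at which the student was recorded at least once."""
    if not sessions:
        return 0.0
    attended = {sid for who, sid in scans if who == student and sid in sessions}
    return len(attended) / len(sessions)


def text_nudge(attendance: float, logins_this_week: int, missed_deadlines: int,
               min_attendance: float = 0.75, min_logins: int = 2) -> Optional[str]:
    """Build a short message only when a pattern warrants one.

    The thresholds are illustrative assumptions, not validated cutoffs.
    """
    concerns = []
    if attendance < min_attendance:
        concerns.append(f"class attendance at {attendance:.0%}")
    if logins_this_week < min_logins:
        concerns.append(f"{logins_this_week} course login(s) this week")
    if missed_deadlines > 0:
        concerns.append(f"{missed_deadlines} missed deadline(s)")
    if not concerns:
        return None  # nothing to flag, so no message is sent
    return ("Quick check-in: " + "; ".join(concerns)
            + ". Small changes now can keep you on track.")


# Hypothetical data: four sessions held; "ana" was scanned at two of them.
scans = [("ana", "s1"), ("ana", "s2"), ("ben", "s1"), ("ana", "s1")]
sessions = {"s1", "s2", "s3", "s4"}
rate = attendance_rate(scans, sessions, "ana")
print(text_nudge(rate, logins_this_week=1, missed_deadlines=0))
```

Counting distinct sessions rather than raw scans keeps repeated clicker responses in one class from inflating attendance.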

Nudge: to suggest, to poke, to inform. Nudge analytics: to point toward specific action, to remind a student of the consequences of doing or not doing a specific task. Nudge analytics shifts from an information-awareness model to an action model in which outcomes can be observed and compared. Gathering and pattern-matching data with the intention of suggesting better actions and influencing decisions is the heart of nudge analytics.
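One way to make "observed and compared" concrete is a two-proportion z-test on persistence rates, assuming students are randomly assigned to receive nudges or not. Random assignment is what lets a difference be read as an effect of the nudge rather than the kind of spurious correlation worried about in the retention discussion. This is a standard statistical test offered as an illustration, not a method the article prescribes, and the counts are hypothetical.

```python
import math
from typing import Tuple


def compare_persistence(persisted_nudged: int, n_nudged: int,
                        persisted_control: int, n_control: int) -> Tuple[float, float, float]:
    """Two-proportion z-test on persistence rates.

    Returns (difference in rates, z statistic, two-sided p-value).
    """
    p_nudged = persisted_nudged / n_nudged
    p_control = persisted_control / n_control
    # Pooled rate under the null hypothesis that nudges make no difference.
    pooled = (persisted_nudged + persisted_control) / (n_nudged + n_control)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_nudged + 1 / n_control))
    if se == 0:
        raise ValueError("all students persisted or all left; z is undefined")
    z = (p_nudged - p_control) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_nudged - p_control, z, p_value


# Hypothetical pilot: 200 randomly assigned students in each group.
diff, z, p = compare_persistence(170, 200, 150, 200)
print(f"difference={diff:.2f}, z={z:.2f}, p={p:.3f}")
```

A small p-value only says the difference is unlikely under "no effect"; whether a 10-point gain justifies the effort of nudging is still a judgment for the institution.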

Certainly our thinking about the desired objectivity and freedom of choice implied in nudge analytics comes from the discipline of evaluation and assessment, so vital to higher education. But how do we know what works?

- The obsessive question that haunts a good instructor: "How do we know that our students know what we need them to know?"

- The obsessive question that haunts program chairs: "How do we know that our graduates are receiving a consistent and relevant education?"

- The obsessive question that haunts higher education leadership: "How do we know that our students will be prepared for the future they're facing, in careers that don't yet exist in the workplace?"

The kind of evaluation and assessment that educational institutions have often thought deeply about has become less daunting thanks to machine-based data collection. The machine knows how many of our students are first-generation attendees and how well they're doing. It knows how many students taking ENG 101 drop in week 4. It knows their ages and how often they logged in to the course. It knows how students in the same situation did in earlier semesters. It just doesn't put the information together to share or to create recommendations. Given what the machine knows, and what we could now do to implement nudge analytics, that could change with focused effort. Challenges remain in moving analytics to action and in assembling teams with the skills to analyze data, design action, integrate with current systems, and assess the results. Consider this article a nudge to take those analytic steps.
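Putting together what the machine already knows can start small. The sketch below, assuming hypothetical historical records of which week (if any) each past student dropped a course, finds the week in which drops in a course such as ENG 101 have peaked and suggests nudging current students the week before. The record layout, the sample data (including the second course code), and the one-week lead time are illustrative assumptions.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# Hypothetical historical records: (course, week dropped), with None for
# students who completed the course.
Record = Tuple[str, Optional[int]]


def drops_by_week(records: Iterable[Record], course: str) -> Counter:
    """Count past drops in one course by the week they happened."""
    return Counter(week for c, week in records if c == course and week is not None)


def nudge_week(records: Iterable[Record], course: str) -> Optional[int]:
    """Suggest sending a nudge the week before drops historically peak."""
    counts = drops_by_week(records, course)
    if not counts:
        return None  # no drop history for this course
    peak_week, _ = counts.most_common(1)[0]
    return max(peak_week - 1, 1)  # never earlier than week 1


# Hypothetical data for illustration only.
records = [("ENG 101", 4), ("ENG 101", 4), ("ENG 101", 2),
           ("ENG 101", None), ("MAT 117", 4)]
print(drops_by_week(records, "ENG 101"))
print(nudge_week(records, "ENG 101"))  # 3
```

Timing the nudge to precede the historical peak is the design choice here: a reminder in week 3 can still change behavior, whereas one sent after the drop deadline cannot.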

1. Richard H. Thaler and Cass R. Sunstein, Nudge: Improving Decisions About Health, Wealth, and Happiness (New Haven, CT: Yale University Press, 2008).

2. Vincent Tinto, Leaving College: Rethinking the Causes and Cures of Student Attrition (Chicago: University of Chicago Press, 1987); and Alan Seidman, ed., College Student Retention: Formula for Student Success (Westport, CT: ACE/Praeger, 2005).

3. M. Scott DeBerard, Glen I. Spielmans, and Deana L. Julka, "Predictors of Academic Achievement and Retention Among College Freshmen: A Longitudinal Study," College Student Journal, vol. 38, no. 1 (2004), pp. 66–80.

4. Kimberly E. Arnold, "Signals: Applying Academic Analytics," EDUCAUSE Quarterly, vol. 33, no. 1 (January–March 2010).

© 2010 Colleen Carmean and Philip Mizzi. The text of this article is licensed under the Creative Commons Attribution-Share Alike 3.0 license.