New technologies, especially those relying on artificial intelligence or data analytics, are exciting but also present ethical challenges that deserve our attention and action. Higher education can and must lead the way.

Some news stories are hard to forget, like the one from a decade ago about a teenager who was texting while walking and fell into an open manhole on the street. Many headlines made fun of the scraped-up fifteen-year-old. But most of the news stories were focused on the people involved and thus didn't see the bigger story about the place where humans and technology clash—or, in this case, crash.1

In 2020, I remember this story and see it as perhaps the perfect metaphor for the challenge of digital ethics. New technologies, many that depend on private data or emerging artificial intelligence (AI) applications, are being rolled out with enthusiastic abandon. These dazzling technologies capture our attention and inspire our imagination. Meanwhile, fascinated by these developments, we may soon see the ground drop out from under us. We need to find a way to pay attention to both the rapid technology innovations and the very real implications for the people who use them—or, as some would say, the people who are used by them.

I believe we are at a crucial point in the evolution of technology. We must come to grips with digital ethics, which I define simply as "doing the right thing at the intersection of technology innovation and accepted social values." This is a straightforward-enough definition; however, given the speed of technology change and the relativity of social values, even a simple definition may be trickier than it seems. For example, at the point where they clash, the desire for the latest data-powered apps and the desire for fiercely protected privacy reveal significant ethical fault lines. Which desire prevails? And while we contemplate this question, the development of new apps continues.

A Century of Profound Technology Change

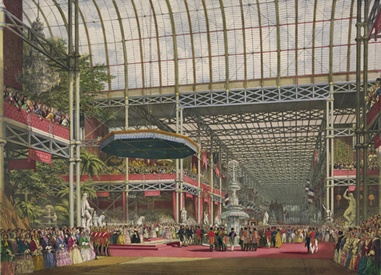

When we talk about technology innovation, we tend to look forward, imagining our contemporary circumstances to be utterly unprecedented. Yet we are not the first to deal with "disruptive," technology-driven change. Nor are we the first who must cope with the scale of the ethical implications of these developments. We have much to learn from the analog technology innovations of an earlier century,2 and there's hardly a better moment in time to consider than the Great Exhibition in London in 1851.

Credit: Everett Historical

The Great Exhibition was so popular that its profits funded several public museums still operating in the United Kingdom today, and the spectacle was so significant that the equivalent of one-third of the population of Great Britain came to London to see the exhibition.3 It was arguably the beginning of the technology optimism we still see today, the conviction that no problem was so grand that a new, marvelous invention couldn't present a solution. Representative medals were awarded to a telescope, early daguerreotypes, and even a precursor to the fax machine. Along with these recognizable innovations, there were also truly strange products on display, like the "tempest prognosticator": an enterprising inventor discovered that leeches responded to rapid changes in barometric pressure in a way that could be rigged to trip a trigger that would sound a bell, thereby warning of an impending storm.4 Also on display at the Great Exhibition was an example of William Bally's busts used to illustrate concepts of the then much-hyped pseudo-science of phrenology, the belief that the size and the shape of the skull are an indicator of someone's character and mental abilities.

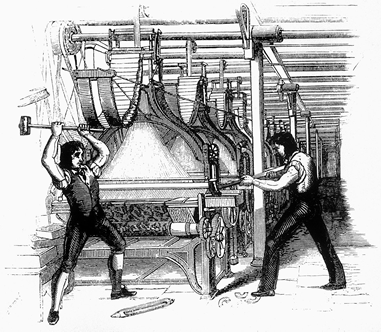

Credit: Badobadop

This was the century of invention and also the century of quackery of all kinds. From magnetic corsets to electric belts, products promised amazing curative properties for nervous disorders, indigestion, rheumatism, sleeplessness, and "worn-out Stomach." Emerging technologies based on the properties of electromagnetism were being created with little regulation and countless empty promises—even as the science was still being figured out. We may think the concept of tech hype arose in the 21st century, but the 19th century revealed early masters of deploying innovations based on an incomplete understanding of science. The discoveries of polonium and radium chloride were followed in turn by a wave of radioactive quackery that included radium-infused toothpaste, "Tho-Radia" cosmetic products promising a youthful glow, and even (now strangely redundant) radium cigarettes. The Vita Radium Suppositories promised "weak discouraged men" that they would soon "bubble over with joyous vitality."5

One attendee of the Great Exhibition was not impressed. Karl Marx saw the exhibition as proof of the damage caused by technology automation and concluded that the exhibition revealed an essentially exploitative agenda with nothing short of violent implications.6 Earlier in the same century came the original Luddites, a term that did not then refer to people with a reluctance to use technology, as it is understood today. Instead, the Luddites were responding (violently) to the introduction of new mechanical looms in the wool industry, very literally pushing back against technology that was costing people jobs, ruining their livelihoods, and circumventing standard labor practices.7

Credit: Wikimedia Commons

A powerful artifact of the dark side of 19th-century technology innovation can be found in Mary Shelley's remarkable novel Frankenstein, published in 1818 in the shadow of the Luddite riots. Though the book features a stitched-together, reanimated corpse, it is actually an intensely sophisticated discussion of ethics and technology. Dr. Frankenstein recklessly uses technology that he does not fully understand and without thinking through the deeper implications. He creates life and then abandons his own creation when things get difficult. The tragedy of the novel is his ethical failure and the suffering that results. Perhaps the most meaningful summary of the book is also the simplest: Just because you can do something with technology doesn't mean you should.

Excitement

Let's return to the metaphor I used to start this article. Multitasking by walking and texting at the same time is a poor choice. Whenever we focus too much on the technology, to the exclusion of everything else, things tend not to end well. However, it's not practicable to think we can simply halt technology innovation, taking the equivalent of a musical grand pause, while we figure out all the ethical implications. In this case, we need to multitask in an additive way, not to lessen either our excitement or our caution but to attend to both. We can be deeply concerned about digital ethics and at the same time be genuinely excited about the digital transformation clearly underway in higher education. We can be energized by new technologies while we stay fully aware of the privacy and ethical considerations. The key is balance.

Those of us working in higher education information technology often find it easy to be exhilarated about the ways that technology innovation has advanced, and will continue to advance, academia. We recognize the important role that technology professionals play when they work strategically and collaboratively, offering traction in solving some of the most intractable institutional challenges.8 The EDUCAUSE Top 10 IT Issues for 2020 reflect this hope and excitement, with institutional priorities like student retention/completion, student-centric higher education, improved enrollment, and higher education affordability joining more traditional IT issues. Advances in technology will not single-handedly move these needles, but in the larger enabling context of digital transformation, new technologies may be the most promising hope in a challenge-filled landscape. Even a quick glance at the EDUCAUSE Horizon Report reflects the excitement afoot, with discussion of analytics, mixed reality, AI and virtual assistants, adaptive learning, and more.9

Data-powered predictive analytics (including adaptive learning and student success advising technologies) tops the list of promising technologies. In addition, many new applications rely on AI or machine learning innovations to help students succeed and to help institutions work more efficiently and save money that can be repurposed toward their mission. One example of analytics and artificial intelligence coming together in a student-centric way is the emerging class of chatbot applications, from Georgia Tech's 2016 "Jill Watson" teaching assistant10 to more recent examples like Deakin University's Genie [http://genie.deakin.edu.au/] app or Georgia State University's Pounce chatbot, both of which help students get quick answers and navigate their way through processes and also produce concrete institutional results in vexing areas like "summer melt." The Pounce story is just the latest chapter of how GSU is using predictive analytics as part of a broader program to increase retention and graduation rates and eliminate the achievement/opportunity gap.

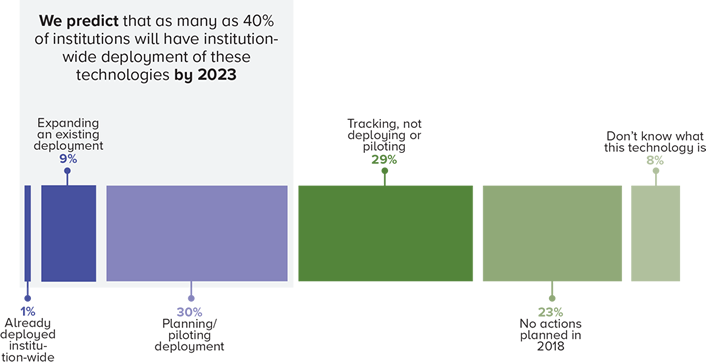

Finally, numerous technologies promise to intensify student engagement in learning. Conversations about games, simulations, and interactive problem-solving have been going on for a long time, but the growth of commercial augmented and virtual reality technologies suggests that dramatic change could be around the corner. EDUCAUSE research reveals that augmented and virtual reality technologies are expected to be deployed institution-wide at 40 percent of institutions by 2023 (see figure 1).

Source: EDUCAUSE Center for Analysis and Research (ECAR), "2018 Strategic Technologies: Data Table."

Caution

It's not a contradiction to be both excited and cautious at the same time; in fact, this seems to be the state of affairs for technology professionals. I would even argue that technology innovators who don't hold both of these thoughts in their head at the same time are likely not paying attention—or are letting the drumbeat of hype drown out the cautionary voices. It's time to listen to these quieter voices and carefully consider the question of digital ethics.

Before focusing on higher education, let's step back and understand that concerns about digital ethics extend far beyond any single context, enterprise, or industry. Readers of Shelley's Frankenstein will find many of today's news stories to be very familiar. In 2018, Chinese scientists created the world's first genetically modified humans: twins "Lulu and Nana." Although the scientists have been sentenced to jail terms and fines for their actions, at least one Russian scientist plans to continue the work.11 Meanwhile, Yale scientists have been conducting experiments to reanimate mammalian brains. The scientists are reported to be working quite cautiously, at one point shutting down an experiment because of a slim chance that some level of consciousness in an animal brain might be present. But as Nita Farahany, a law scholar and ethicist at Duke University, noted: "It's a total gray zone." Hank Greely, a law professor and ethicist at Stanford University, added that a scientist with less of an ethical compass will inevitably experiment with human brains.12 Whether we consider technologies that allow scientists to extend the life of brains or technologies that enable people to cut lives short with 3D-printed guns, there is plenty to kindle concern among ethicists and non-ethicists alike.

The consequences of genetically modified babies and reanimated brains may seem unclear and far off, but weaponized artificial intelligence is already here, and it is rapidly advancing. In 2019, OpenAI created an AI language model that was so effective in generating believable text that OpenAI researchers at first decided it was dangerous and should be shared only in stages; in November 2019, seeing "no strong evidence of misuse," they released the full system: TalkToTransformer.com.13 Shortly after it was made available, I typed in "I am concerned about digital ethics because." The AI language model completed my thought this way: "I am concerned about digital ethics because of the impact of technological developments, particularly the rapid developments in digital media, in online communities. I am particularly concerned about the growth of the abuse of free speech online." This was followed by a fairly well-spoken paragraph on specific UK regulations and their implications. Impressive. Encouraged by this success, I next typed in "Digital ethics concerns me." The results of this very similar prompt were confoundingly different. This time the AI model wrote: "Digital ethics concerns me in the way I can't get on my knee and hug my husband when he's down."14

In any case, the next generation of mass, personalized, AI-generated phishing attempts will be far harder to spot. There is a terrifying signal of the future in the August 2019 story of a fake phone call that tricked one company out of $243,000 with an AI-produced impersonation of its CEO demanding a bank transfer.15 Deepfakes are already causing problems on an international scale. As deepfake-Obama observes in a viral video, new AI technologies can generate fabricated videos in which "anyone is saying anything at any point in time—even if they would never say those things." There is already much speculation on the potential geo-political mischief that deepfakes could cause (imagine the 1938 War of the Worlds broadcast scare, but with a more convincing presentation and with nuclear weapons). Election manipulation, riots, and regional instability are all very real possibilities.16 Celebrities are currently the biggest targets of deepfakes, but any college or university professional reading the chilling story of Noelle Martin will realize just how high the stakes could be. When she was a student, Martin was curious about who might be uploading her photos, so she used a reverse Google image search to find out. Instead of discovering that friends had uploaded her pictures on social media, she found hundreds of postings of her personal pictures on pornographic sites, along with photos with her face added to the bodies of porn actresses.17 Deepfake videos of students could have significant consequences for an institution, especially considering that the perpetrator could well be a student on the same campus.

Chatbots have received a lot of attention, especially recently. While bots of various kinds are being deployed at higher education institutions with positive outcomes, they are being used elsewhere in ethically problematic ways. In 2015 Mattel introduced Hello Barbie, an interactive, internet-connected doll. The idea of an "internet of toys," with children clutching internet-connected toys that ask probing personal questions (and store the answers), was viewed with great skepticism by some, for example those who launched the #HellNoBarbie campaign. In December 2017, Germany's Federal Network Agency labeled Barbie's European counterpart, Cayla, "an illegal espionage apparatus," and parents were urged to destroy the toy. In spite of the backlash, Hello Barbie is still around (and listening), while the market for connected smart toys has grown, not slowed. Interestingly, the company whose technology was incorporated into Hello Barbie changed its name to PullString, and in 2019 it was reportedly acquired by Apple to be part of their AI strategy.18

In March 2016, not long after the introduction of Hello Barbie, the chatbot Tay was released by Microsoft on Twitter. Tay was "designed to engage and entertain people where they connect with each other online through casual and playful conversation." Tay began cheerily declaring that "humans are supercool," but within 24 hours Tay was parroting racist, homophobic, and Nazi propaganda. After being suspended for nearly a week, the chatbot came back online again briefly, only to confuse and offend a few more people before falling into a fatal loop, endlessly repeating what might, in retrospect, be prophetic: "You are too fast, please take a rest." Tay was given a permanent rest, and many experts scratched their heads as to why Microsoft released the bot before anticipating the possibilities more accurately.19

The Tay story of artificial intelligence released too fast tops them all because it was so dramatic and so publicly visible. But in the competitive world of technology products, asking for forgiveness later may be easier than taking the time to anticipate all that could go wrong, especially given pressures to be first-to-market. As chatbots and AI products are released, the more concerning issues are the far-less-obvious examples of subtle discrimination, racism, and flawed data being built into algorithms that remain opaque to those who use them.

For example, as smart speakers and digital assistants continue to become more prevalent, the ethical concerns they raise are increasingly global. A UNESCO study, titled "I'd Blush If I Could," focused on gender divides built into, and exacerbated by, digital assistants. In response to the remark "You're a bitch," Apple's Siri responded: "I'd blush if I could." Amazon's Alexa replied: "Well, thanks for the feedback." Microsoft's Cortana said: "Well, that's not going to get us anywhere." And Google Home (also Google Assistant) answered: "My apologies, I don't understand." The report observes that these AI responses come from applications built by "overwhelmingly male engineering teams" who "cause their feminised digital assistants to greet verbal abuse with catch-me-if-you-can flirtation." As a result of criticism, Siri's responses have evolved, and "she" now replies to abusive statements differently (though the report suggests even the new responses continue to be submissive).20

Responding passively to suggestive or abusive comments reinforces a subservient role for women and signals that the inappropriate comments are acceptable. Steve Worswick, developer of the award-winning Mitsuku chatbot, writes about ethical implications of chatbots from personal experience and reports that "abusive messages, swearing and sex talk" make up around 30 percent of the input Mitsuku receives. Perhaps there simply is no rational reason to believe that the technologies we invent are likely to solve the shortcomings involved in humanity itself. As Kentaro Toyama says in Geek Heresy: "Brilliant technology is not enough to save us from ourselves." The technologies and virtual personalities we create tend to amplify our human shortcomings, not eliminate them.21

There are ethical implications for many if not all of the technologies that are emerging and, of course, unresolved ethical issues with the internet itself, including the digital divides that have been identified and, some would say, ignored for a long time.22 However, the cluster of technologies that fall under the general category of artificial intelligence understandably gets the most attention. In May 2017, Forbes reported that the use of digital assistants was on the rise, especially among business executives and millennials, and that nearly one-third of consumers couldn't say for sure if their last customer service interaction was with a person or a bot. In April 2018, Gartner suggested that in 2020, the average person would have more conversations with bots than with their spouse.23

In the face of this inexorable march, several powerful voices have come forward arguing for caution. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, written by Cathy O'Neil in early 2016, continues to be relevant and illuminating. O'Neil's book revolves around her insight that "algorithms are opinions embedded in code," in distinct contrast to the belief that algorithms are based on, and produce, indisputable facts. The subjective inputs embedded into algorithms explain the bias and discrimination we see too often in outputs, a problem compounded by the fact that these algorithms are opaque and don't allow an appeal if someone's life is negatively impacted by a decision based on the algorithms. O'Neil insists that the inherent opinions in the code are even more corrosive because they are obscured by the dazzle of technology, which is all very ironic since many of the applications she critiques in the book are intended (and marketed) as tools to reduce human bias and make fairer decisions. Defining a weapon of math destruction as a harmful application with "opacity, scale, and damage," she goes on to highlight a number of them in detail (including U.S. News higher education rankings). Recently, one nonprofit that originally supported algorithmic risk assessment before her book was published reversed its position.24 O'Neil argues that if big data had been used in the 1960s as part of the college application process, women would still be under-represented in higher education because the algorithm would have been trained by looking at the men who were then over-represented.
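O'Neil's 1960s thought experiment can be made concrete with a toy sketch. The Python below is not drawn from her book or from any real admissions system: the records are invented, the function names `train_admit_rates` and `predict_admit` are hypothetical, and the "model" is deliberately crude (it simply memorizes past admission rates by group). Real systems are more elaborate and usually pick up group membership through proxy variables, but the basic dynamic of learning yesterday's decisions and repeating them is the same.

```python
from collections import Counter


def train_admit_rates(history):
    """Learn, for each group, the share of past applicants who were admitted."""
    applied = Counter(group for group, _ in history)
    admitted = Counter(group for group, was_admitted in history if was_admitted)
    return {group: admitted[group] / applied[group] for group in applied}


def predict_admit(model, group, threshold=0.5):
    """Recommend admission when the learned rate for the group meets the threshold."""
    return model.get(group, 0.0) >= threshold


# Invented historical records in the form (group, admitted?).
# Men are over-represented among past admits, as in the thought experiment.
history = (
    [("men", True)] * 80 + [("men", False)] * 20
    + [("women", True)] * 10 + [("women", False)] * 40
)

model = train_admit_rates(history)
print(model)
print("recommend a male applicant:", predict_admit(model, "men"))
print("recommend a female applicant:", predict_admit(model, "women"))
```

Nothing in this code mentions merit, and nothing in it is malicious. The skew comes entirely from the historical record the model was handed, which is the sense in which an algorithm can be an opinion embedded in code.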

In 2018, Safiya Umoja Noble's book Algorithms of Oppression: How Search Engines Reinforce Racism intensified the attention paid to algorithmic bias, in this case looking at search engines, which are increasingly the conduit through which we arrive at our understanding of the world around us. Her focus on "technological redlining" concentrates on how search engines suggest ways for us to complete our searches (and our sentences). Noble provides seemingly endless examples of how Google's search engine reinforces stereotypes, especially in searches for "black girls," "Latinas," and "Asian girls." She also demonstrates that internet searching played a key role in pointing Dylann Roof to the racist ideas that led him to murder nine African Americans while they worshipped in South Carolina. Finally, Noble also refers to a Washington Post story saying that Google's top search result for "final election results" regarding the 2016 presidential election pointed to a "news" site incorrectly declaring that Donald Trump won both the electoral and the popular vote.25 While there is no doubt that Google has worked to address these issues, in 2019 at least one researcher suggested that the problems persisted and that reducing autocomplete functionality does not address the root problem.26

Credit: Memac Ogilvy & Mather Dubai, developed for UN Women (2013)

Next, in 2019, Shoshana Zuboff's book The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power continued the conversation, with a dense and chilling exploration of the degree to which the unethical use of information extends beyond algorithms and beyond search engines all the way to becoming a "rogue mutation" of capitalism. This expansive book defies any easy summary (e.g., the definition of surveillance capitalism is actually eight definitions). Zuboff unrelentingly explores surveillance and the use and misuse of data from the perspective of what she considers to be the key questions we must address: "Who knows? Who decides? Who decides who decides?" Along with asking questions about what is going on and who has the power to make decisions, she suggests that just as the Industrial Revolution ravaged the natural world, this new form of capitalism threatens humanity by claiming "human experience as free raw material for hidden commercial practices of extraction, prediction, and sales." This process, she argues, is currently underway, with very little regulation or control.

In the time between O'Neil's book and Zuboff's book, the march of new technologies quickened, with examples that include the pernicious and the ridiculous. Where to start? The list grows weekly.

The March of the Apps

While not focused on higher education, several AI applications claim to put the power of artificial intelligence in the hands of the people. For example, the Mei mobile messaging app promises "to help users become the best version of themselves by putting AI, data, and even other people easily within the reach of anyone with a smartphone." The app replaces a phone's texting functionality and provides analytics about the texts sent and received. Marketed as a "relationship assistant," Mei provides a percentage assessment about whether people you text may or may not "have a crush" on you. Using text analysis, the app goes even further, giving advice on how to raise your crush quotient (e.g., "Thomas is more of a do-er, so talk less and do more").27 Meanwhile, the now-defunct Predictim app allowed individuals to scan the web footprint and social media of a prospective babysitter and determine how risky a choice the person might be, based on an algorithm scanning for signs of bullying/harassment, disrespectful attitude, explicit content, and drug use.28 These examples may seem inconsequential—unless, of course, you are the one whose data is being scanned and you are the one being judged and found wanting.

It's very easy to drift from here to similar but more dramatically problematic examples, such as the controversial "gaydar" artificial intelligence that higher education researchers developed, claiming they can determine whether someone is straight or gay based solely on scanning a face.29 And then there is the deeply problematic face-scanning application called Faception, the "first-to-technology and first-to-market" app with "proprietary computer vision and machine learning technology for profiling people and revealing their personality based only on their facial image." The company is blunt that theirs is a for-profit pitch and that this is a "multi-billion-dollar opportunity." It promises to identify terrorists and pedophiles—and also academic researchers and poker players—with a simple face scan. A Faception YouTube video reveals an AI application whose developers do not seem to be pausing to consider ethics. In fact, the company's "chief profiler" seems to shrug off ethics concerns by saying: "We [are] only recommending—whatever the authorities will do with that, it's their own business."30 This will not be reassuring to those living in countries with repressive authorities or to the 20 percent who will be incorrectly tagged as terrorists or pedophiles (if you believe the company's aggressive claims of 80 percent accuracy).
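The arithmetic behind that 20 percent is worth working through, because at scale the errors fall almost entirely on people who have done nothing wrong. The sketch below is a back-of-the-envelope illustration, not a description of how Faception works. It assumes a hypothetical crowd of 1,000,000 scanned people of whom 100 are genuine targets, and it reads the claimed "80 percent accuracy" as meaning both that 80 percent of real targets are flagged and that 20 percent of everyone else is flagged by mistake. Only the 80 percent figure comes from the company's claim; the other numbers and the function name `screening_outcomes` are assumptions made for illustration.

```python
def screening_outcomes(population, actual_targets, accuracy):
    """Return (true_positives, false_positives, precision) for a face-scan screen.

    Assumes the same accuracy applies to both groups: it is the share of
    real targets that get flagged and the share of everyone else cleared.
    """
    everyone_else = population - actual_targets
    true_positives = actual_targets * accuracy
    false_positives = everyone_else * (1 - accuracy)
    precision = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, precision


# Hypothetical inputs: 1,000,000 people scanned, 100 of them real targets.
tp, fp, precision = screening_outcomes(1_000_000, 100, 0.80)
print(f"correctly flagged: {tp:,.0f}")
print(f"wrongly flagged:   {fp:,.0f}")
print(f"share of flags that are correct: {precision:.2%}")
```

Under these invented inputs, about 80 real targets would be flagged alongside roughly 200,000 people who are not, so only about 1 flag in 2,500 would be correct. Changing the assumed numbers changes the totals but not the pattern: when what is being searched for is rare, even a seemingly accurate screen mostly flags the wrong people.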

Another company is Clearview AI. Following up on his iPhone app that let people put Donald Trump's hair on other people's photos, Hoan Ton-That developed "a tool that could end your ability to walk down the street anonymously and provided it to hundreds of law enforcement agencies." Al Gidari, a privacy professor at Stanford Law School, sees this as the beginning of an uncomfortable trend: "It's creepy what they're doing, but there will be many more of these companies. There is no monopoly on math. . . . Absent a very strong federal privacy law, we're all screwed."31 Writing about facial recognition, Heather Murphy summed up the pressure to profit: "And all around Silicon Valley . . . entrepreneurs were talking about faces as if they were gold waiting to be mined."32 Facial-recognition applications continue to proliferate, even while the accuracy of the technology is evolving. In the United States, a December 2019 National Institute of Standards and Technology study found facial recognition to be flawed, confirming "popular commercial systems to be biased on race and gender."33 While studies like this in the United States and the United Kingdom cause a stir, viral videos like the "racist soap dispenser" video, viewed by millions, add an exclamation mark to the story and humanize the ethical issues to a much broader audience.34

Another powerful way to highlight the human impact that follows from the opacity of algorithms is Kate Crawford and Trevor Paglen's compelling article "Excavating AI."35 The authors dig into the practical matter of how AI systems are trained, looking at "the canonical training set" of images called ImageNet, which consists of more than 14 million labeled images. Crawford and Paglen find that the process of labeling and categorizing is not only flawed but sometimes "problematic, offensive, and bizarre." These are not subtle examples. There are photos of US President Barack Obama ("anti-semite"), a seemingly random man ("good person"), a seemingly random woman sunning on the beach ("kleptomaniac"), and the actress Sigourney Weaver ("hermaphrodite"). The authors even made the ImageNet database available to the general public to see for themselves how labeling works. Before the site went offline, I uploaded my simple EDUCAUSE headshot and was shocked by the labels assigned to it: baron, big businessman, business leader, king, magnate, and mogul. Hearing me gasp, my spouse looked over my shoulder to see what had offended me so audibly, and we couldn't resist uploading her very similar headshot to see what outrageous epithets were given to hers. While my labels were mystifying and detailed, her one label was mystifying and brief: sister. When I loaded in the headshot of a female president of a higher education presidential association, she was neither queen nor baroness, and her label was also just one word: sociologist. Imagining that labels like these could be involved in determining who gets a loan, who is fit to babysit, or who might be a terrorist is deeply concerning. This important work provides non-AI experts with a view into the practical dimension of bias in artificial intelligence while also underscoring the societal and human dimension of the misrepresentation of images. After all, the authors point out, "Struggles for justice have always been, in part, struggles over the meaning of images and representations." Algorithmic systems we create are supposed to help us make better decisions, anticipate our needs, and enhance our lives, but this result seems unlikely when the systems are built on a foundation of flawed assumptions that we are unable to scrutinize.

Meanwhile, newer, equally problematic technologies are being considered, including emotion recognition applications, which one estimate suggests represent a $20 billion market that is still growing. The AI Now Institute's 2019 report insists: "AI-enabled affect recognition continues to be deployed at scale across environments from classrooms to job interviews, informing sensitive determinations about who is 'productive' or who is a 'good worker,' often without people's knowledge." This occurs although we "lack a scientific consensus as to whether it can ensure accurate or even valid results," a fact that connects this technology with discredited 19th-century sciences like phrenology.36

If we believe that history teaches, we might pause here to acknowledge again the stark parallels to phrenology in the 19th century. Among other purposes, phrenology was used to provide a scientific justification for the supposed racial and gender superiority of Caucasian males. The shape of the head was also purported to determine whether a man would be a reliable and "genuine" husband.37 These examples may seem more patently outrageous than claiming to identify some percentage of terrorists or academic researchers based solely on faces, but at least the caliper-wielding phrenologists drew their conclusions from measurements that were transparent instead of from opinions embedded in code that is neither explainable nor appealable.

Meanwhile, AI applications continue to proliferate, especially in the area of human resources and interviewing. For someone with numerous candidates to choose from, the desire to use algorithms to shorten the list is understandable, but for the candidates, interviewing is hard enough without knowing that an algorithm is silently judging and interpreting their every move. In HireVue, for example, after candidates record their answers within the video platform, the algorithm analyzes the number of prepositions used and whether or not the candidate smiled. HireVue Chief Technology Officer Loren Larsen says the tool can examine "around 25,000 different data points per video, breaking down your words, your voice, and your face."38 Why? Are those who smile a lot or use gestures a lot considered to be better hires than those who do not?39 In any case, these data points are clear invitations for opinions and potential bias embedded in code.

More critically, AI decisions could also be involved in life-or-death circumstances. Consider the famous "trolley problem," a hypothetical scenario in which a runaway trolley is heading toward a group of people. If you're in control of the trolley, should you pull the switch to redirect the trolley so that it kills only one person instead? Which choice is more ethical? Today the problem is no longer hypothetical: autonomous vehicle software is being designed to make exactly these kinds of decisions in milliseconds. What happens if someone pulls out in front of a self-driving car? A decision will be made—and it will be made too quickly for the human in the car to discuss, object, or appeal. A 2018 study revealed that ethical perspectives on how an autonomous vehicle should behave in trolley-like problems vary by culture; as a result, some cars will make decisions based on ethical principles that could contradict those of their drivers. According to a February 2020 article, only one country has taken a position on how self-driving cars should behave. In its official guidelines, Germany states: "In the event of unavoidable accident situations, any distinction based on personal features (age, gender, physical or mental constitution) is strictly prohibited. It is also prohibited to offset victims against one another. General programming to reduce the number of personal injuries may be justifiable."40

Digital Ethics Closer to Home

According to HolonIQ, a global education market intelligence firm, artificial intelligence has produced an "explosion" in innovation and investment in education, with an estimated doubling in the growth of the global education technology market by 2025. Clearly, AI applications and the ethical pitfalls some of them bring will increasingly demand attention.

Credit: Charles Taylor / Shutterstock

However, there are also powerful ethical implications for other, non-AI technologies being actively piloted and deployed in higher education. One example is the constellation of innovations around augmented/virtual/mixed reality. Emory Craig and Maya Georgieva have effectively mapped out the degree to which immersive technologies invite various ethical challenges, what the authors see as a number of unsettling questions related to immersive experiences. Craig and Georgieva point to a wave of ethical concerns: student data, privacy, and consent; harassment; and accessibility issues.41

Considering the ethical issues more generally, EDUCAUSE has issued a call for caution. In 2019, the EDUCAUSE Top 10 IT issues focused on the data and analytics that are at the heart of the predictive technologies and AI applications entering the edtech marketplace. In fact, half of the items in the 2019 Top 10 list were data-related. Information Security Strategy was #1 on the list, with Privacy appearing for the first time on the list, at #3. This reflected a growing realization highlighted several years earlier by the New America Foundation's 2016 report "The Promise and Perils of Predictive Analytics in Higher Education," which recounted the troubling story of a university that planned to use data from a student survey to urge at-risk students to drop out.42

This year, the 2020 Top 10 IT Issues list continues to call out Information Security Strategy (again #1) and Privacy (moving up to #2), but it also returns to the importance of data in other areas, including Digital Integrations (#4). The report authors squarely bring together data, artificial intelligence, and ethics: "Sustainability also has a new dimension. Data is often described as a new currency, meaning that higher education now has two currencies to manage: money and data. Data storage may be cheap, but little else is inexpensive in the process of managing and securing data and using AI and analytics to ethically support students and institutional operations."43

In a separate statement in 2019, one with the intentionally provocative title "Analytics Can Save Higher Education—Really," the Association for Institutional Research (AIR), EDUCAUSE, and the National Association of College and University Business Officers (NACUBO) noted that campus analytics efforts have stalled in spite of all the talk. Beneath the hyperbolic title, the analytics statement was unmistakably clear about the importance of attending to digital ethics. We argued that it's time to "Go Big" with analytics in order to achieve institutional goals, but we also insisted that we need to go carefully. One section, "Analytics Has Real Impact on Real People," elaborated that "responsible use of data is a non-negotiable priority." AIR followed up the statement with its own "Statement of Ethical Principles"; the EDUCAUSE 2019 Annual Conference featured several sessions on ethics; and NACUBO put "Ethics at the Core" on the cover of its Business Officer magazine, which included suggestions for pushing the vendor community for more transparency. All three associations will continue the discussion throughout 2020.44

This focus is welcome, because in the last six months of 2019, a flurry of articles in the mainstream and higher education press observed that higher education had its own potential "creepy line" problem. In fact, much of the news coverage is skeptical or deeply critical. For example, the Chronicle of Higher Education "Students Under Surveillance?" article answered its own question with the subtitle "Data-Tracking Enters a Provocative New Phase." A Forbes end-of-the-year prediction article on higher education bluntly concluded that "campus tech will get even more creepy." Meanwhile the Washington Post published an article titled "Colleges Are Turning Students' Phones into Surveillance Machines, Tracking the Locations of Hundreds of Thousands."45

A couple of months earlier, the Washington Post had reviewed records from a range of public and private colleges and universities and reported that at least 44 were contracting with outside consulting companies to gather and analyze data on prospective students, "tracking their Web activity or formulating predictive scores to measure each student's likelihood of enrolling." The article asserted that "the vast majority of universities" don't inform students that they are collecting students' information. The authors stated that when they reviewed online the privacy policies of the 33 institutions they found using web-tracking software, only 3 disclosed the purpose of the tracking. The other 30 omitted any explanation or did not explain the full extent or purpose of their tracking.

Credit: Nicolás Ortega

The article also claimed that "many" institutions don't give students the ability to opt out of data gathering. A clear example of how these stories can take a harsh turn is the New York Times Magazine's critique of college admissions offices' use of predictive modeling. Paul Tough declared that the modeling is used not to advance diversity and excellence but, instead, is driven by the "thirst for tuition revenue." Specifically targeting elite institutions, Tough asserted: "Colleges' predictive models and the specific nature of their inputs may differ somewhat from one institution to another, but the output is always the same: Admit more rich kids."46 In short, the headlines have not been supportive, and articles about the effective and appropriate use of predictive modeling are simply not that intriguing to the mainstream press.

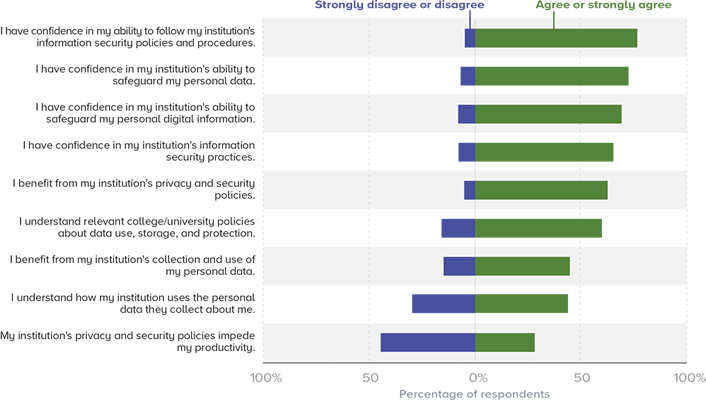

EDUCAUSE findings from our 2019 student survey reinforce a clear concern (see figure 2). The majority of students (70%) agree that they have confidence their college or university is safeguarding their personal data, yet fewer than half (45%) agree that they benefit from the collection and use of personal data. Even fewer (44%) agree that they understand how their college or university uses the personal data collected. Meanwhile, the findings from the EDUCAUSE 2019 faculty survey show marked drops in faculty members' confidence in their institution's ability to safeguard student/faculty/research data and in their own understanding of relevant policies.47

Figure 2. Students' Perspectives on Institutional Data Policies, Collection, and Use

Source: Joseph D. Galanek and Ben Shulman, "Not Sure If They're Invading My Privacy or Just Really Interested in Me," Data Bytes (blog), EDUCAUSE Review, December 11, 2019

Regulation and Resistance in the Wild West

The current situation is, to say the least, dynamic. For all the proliferation of new products, there is a wide variety of strong voices and forces working to respond to digital ethics concerns. In many ways, the current situation feels a bit like the wild west, both in terms of the rush to be first-to-market with edtech products and in terms of the relatively uneven approach to regulation and legislation. Still, regulation and legislation are happening all over, spurred on by a chorus of demands from world leaders, influential billionaires, activist groups, and celebrities, along with the majority of a skeptical general population. Strangely, some technology companies have joined the choir as well, with CEOs of major firms calling for regulation. Naturally, some people are more cynical about these calls for regulation by those who would be regulated and see them either as a strategy to slow competitors or as an effort to take some control and limit the scope of inevitable regulations.48

Meanwhile, Europe continues to work aggressively to further privacy, while also advancing the technologies involved. At the same time that it is increasing funding for artificial intelligence by 70 percent and supporting an "AI-on-demand platform" to bring together a community around AI development, the European Union is clear that it intends to deal with digital ethics around artificial intelligence and ensure a sound legal and ethical framework. For example, the European Commission's document "Ethics Guidelines for Trustworthy Artificial Intelligence (AI)" stresses that artificial intelligence must work "in the service of humanity and the public good," with an emphasis on trustworthiness. The key requirements, which read like chapter titles in a book about digital ethics, include "transparency," "diversity, non-discrimination and fairness," and "accountability."49

A separate report on liability certainly suggests a path for continued regulation across the countries of the European Union, and in January 2020, the European Commission announced that it is contemplating a ban on facial recognition in public areas for three to five years. Brexit aside, the United Kingdom joins the European call for regulations, as is evident from the publication of new recommendations for holding AI companies accountable, including both more scrutiny and a proposed new regulating body for "online harms." In fact, things could get personal in the United Kingdom, with a 2019 government position paper suggesting that the executives of technology companies should face "substantial fines and criminal penalties" for damaging and unlawful activities.50

While the European Union leads the charge on regulation of artificial intelligence and use of data and algorithms, the United States lags, or at least lacks unified national action. With little clarity on accountability at the federal level, some individual states are taking bolder actions. For example, a growing number of states have enacted some form of legislation related to autonomous vehicles. Many new laws are the first of their kind, like a January 2020 Illinois law that requires employers to explain to job applicants how artificial intelligence will be used and get their consent. In California, several cities have banned facial recognition, while the state has passed laws prohibiting political and pornographic deepfakes and also passed the B.O.T. Act (Bolstering Online Transparency Act), making it unlawful for certain bots to pass themselves off as humans to sell products or influence voters. In another "first-ever," California passed the California Consumer Privacy Act (CCPA), which is considered comprehensive but whose impact is not yet fully clear.51

Individual state regulations are no substitute for federal laws, and the inconsistencies from state to state contribute to the "wild west" state of affairs, with different sheriffs in different states drawing different lines in different sands. Meanwhile, federal action may happen in 2020, as there are several bills before Congress, tracked online by the Center for Data Innovation's AI Legislation Tracker. The Algorithmic Accountability Act of 2019 has received a great deal of attention, as it adds federal oversight of artificial intelligence and data privacy, according to the National Law Review, which compares this proposed legislation to GDPR. In certain cases, large companies would be forced to audit for bias and discrimination and to fix any problems identified. As an article in the MIT Technology Review summarized: "Only a few legislators really know what they're talking about, but it's a start."52

There is something of a hype cycle at work for legislation as well as for technology, with flurries of legislative activity closely following flurries of shocking headlines. Practically speaking, the first wave of legislative action and rulemaking may amount simply to raising awareness or influencing the later debate when larger legislative momentum builds. Writing for Bloomberg Law, Jaclyn Diaz concludes that employers and tech companies need not worry too much about new bills in the near future because legislators' focus is on trying to understand the impact of artificial intelligence.53

Ultimately, ad hoc efforts are underway throughout the world at the city, state/province, and national levels, but they are exceedingly inconsistent, and these kinds of efforts will always lag behind dynamic technology developments. At the global level, rather than a gathering consensus pointing to unified action around ethics and artificial intelligence, we are seeing signs of a "global split," with Europe and Japan moving in different directions from the United States and China. Nonetheless, there is growing recognition that the best regulatory approach would be global—and that this is also the most difficult to achieve. Yoshua Bengio, a Turing Award winner and Montréal Declaration advocate, argues that without a mandatory global approach, companies will not willingly give up a competitive advantage in order to be more ethical.54

Regulation and legislation are producing, and will continue to produce, noticeable changes, but other voices are important and unprecedented, such as technology company employees who are taking an increasingly activist role in responding to ethical concerns. There have been walk-outs by employees to protest company actions, along with high-profile cases like that of Google's Meredith Whittaker, who inspired worker unrest across technology companies and, after leaving Google, co-founded (with Kate Crawford) the AI Now Institute, which is focused on the social and ethical implications of artificial intelligence.55 These kinds of actions produced results. For example, after conversations with its employees, Salesforce established an Office of Ethical and Humane Use of Technology and hired a chief ethical and humane use officer to create guidelines and evaluate the ethical use of technology. Not all the responses were positive, however. Google established an advisory board to deal with the company's challenges related to facial recognition and fairness in AI/machine learning and to advance diverse perspectives in general. Within a week after it was launched, Google's ethics board fell apart, in a very public way, over controversy among appointees. This result contributed to a larger conversation about whether ethics boards are likely to make a difference.56

As the world grapples with digital ethics, mainstream entertainment has popularized many of the key themes involved. After all, while most of us could possibly name a book or two related to digital ethics, we could rattle off a much longer list of mainstream films that dramatize the consequences of ethical lapses linked to technology. The 1931 film version of Frankenstein has faded from memory, but in its place are many blockbuster films and television series like Minority Report, Jurassic Park, Ex Machina, Star Trek, and Westworld. These examples and many others have addressed the topic of digital ethics in compelling, approachable ways.

Minority Report (2002) was particularly prescient, including a famous mall scene that is often pointed to as a glimpse of the future. The screens that bombard Tom Cruise's character with personalized messages and invitations to buy products have been highlighted as an example of sophisticated new technologies to come (never mind that the film is actually a damning critique of this kind of surveillance capitalism). Cruise's character walks briskly through the mall because he is being surveilled, tracked, and stalked by authorities, drawing comparisons to China's explosive proliferation and use of surveillance cameras (from 70 million now to 140 million planned) or Russia's use of facial recognition to enforce COVID-19 quarantines. What's more, Minority Report is about "pre-crime" technologies that identify crimes and criminals before the crimes happen, which directly points to contemporary efforts to use artificial intelligence to predict crime. China's use of these technologies has been in the headlines most recently, but the United States and Italy have employed similar technologies for many years.57

The influence of creative expressions, especially in the form of films seen by tens of millions, should not be underestimated. It's no surprise that some colleges and universities are using science fiction to teach ethics to computer scientists.58 Forward-looking academic centers like Arizona State University's Center for Science and the Imagination are making intentional contributions in this way as well.

Leading the Way

I'm constantly amazed and inspired by how often the people who make up our higher education community are focused on making a difference. Part of what sustains our community is, simply put, the good that colleges and universities do. The growth of higher education and increased access to education have strengthened democracy, raised wages, reduced poverty, contributed to local economies, boosted national economies, improved lives with research, and generally benefited society overall. In short, given that higher education has led the way in all these kinds of social changes, it is perfectly natural to look to higher education as our best hope for taking on the challenges related to digital ethics. Legislative action and regulation can punish and reward behaviors, but changing culture is more difficult—and more important.

With its glacier-like speed, higher education has never been known for agility. But for lasting change, it's hard to argue with a glacier when it has found its path. Higher education has the potential to engender comprehensive change and to bring new values to the next generation of computer and data scientists, developers, and architects we are currently teaching at our colleges and universities—and to the larger society as well.

I am convinced that higher education can and will lead the way in four broad areas: audacious approaches; policies and ethical frameworks; embedded ethics; and student demand for digital ethics.

Audacious Approaches

Some higher education institutions are involved in decisive change with strong embedded ethical themes. For example, Georgia Tech's plan for 2040—Deliberate Innovation, Lifetime Education—is a deeply technology-rich vision of higher education, focusing on a new kind of learner by deploying online learning, blockchain, microcredentials, analytics-rich advising, "personalization at scale," and advanced AI systems (Georgia Tech is the home of Jill Watson, the AI teaching assistant one student wanted to nominate for a teaching award). The approach is, as its title suggests, deliberately innovative when it comes to the technologies deployed, and it is also innovative in its intentional emphasis on intrapersonal skills with a powerful ethics theme: "Overarching themes in the Grand Challenges and Serve-Learn-Sustain programs give ethical and societal contexts for whole-person education." Even at the level of classroom projects, this focus is impossible to miss, requiring students "to explore societal or ethical issues prior to making judgments about the scope or duration of the project."59

Northeastern University is another institution that is taking on sweeping change in a way that engages awareness of ethics as an intentional element in its transformation. Its president, Joseph Aoun, lays out his vision for higher education in his book Robot-Proof: Higher Education in the Age of Artificial Intelligence (2017), where he acknowledges that artificial intelligence is going to produce dramatic and even uncomfortable change. His response is to suggest college/university curriculum changes that will prepare the next generation of students to thrive in a world where artificial intelligence and robotics are altering the very definition of workforce. An ethicist would warm to Aoun's plan to make students robot-proof because it is grounded in the humanities; in fact, he argues for a discipline called "humanics." Aoun writes: "Machines will help us explore the universe, but human beings will face the consequences of discovery. Human beings will still read books penned by human authors and be moved by songs and artworks born of human imagination. Human beings will still undertake ethical acts of selflessness or courage and choose to act for the betterment of our world and our species." In his vision of the new university in the age of artificial intelligence and robotics, he carves out a central role for human agency, not in opposition to, but along with, technological change.

Southern New Hampshire University takes yet another ethical perspective when it comes to using emerging technologies. SNHU's Global Education Movement (GEM) is working to provide access to fully accredited SNHU degrees to refugees in camps and urban areas across five countries in Africa and the Middle East. It's difficult to imagine a more ethically inspired initiative than this one, which puts a university education within reach for a population with an otherwise 3 percent rate of access. Central to being able to make this kind of global change is finding ways to reduce the cost of offering degrees. To do so, GEM leaders are exploring using artificial intelligence, working alongside a human evaluator, to make their effort more sustainable.

Policies and Frameworks

I am not alone in looking to higher education to take responsibility for reimagining digital ethics. Headlines in higher education media—such as "Can Higher Education Make Silicon Valley More Ethical?" or "Colleges Must Play a Role in Bridging Ethics and Technology" or "Will Higher Ed Keep AI in Check?"—call on academia as well. According to the University of Oxford Centre for the Governance of AI, there is mixed support for the growth of artificial intelligence, and there is a decided lack of trust all around. Nonetheless, the highest level of public trust lies with campus researchers.60 One way that higher education is rising to this challenge is through the development of policies and frameworks to advance the cause. EDUCAUSE Core Data Service data between 2017 and 2018 shows a marked increase (from 70% to 76%) in US institutions acknowledging that they have developed and maintain policies and practices to safeguard student success analytics data, including specifications for access privileges and "ethics of data use."

Key to policy development is having someone on staff to focus on ethical considerations such as privacy on campus. One indicator of growing leadership is the rise of the chief privacy officer (CPO), a relatively new position that reflects and advances privacy and ethics as a priority concern. The Higher Education Information Security Council (HEISC) has published, in partnership with EDUCAUSE, a CPO welcome kit and also a CPO roadmap. The welcome kit makes the challenges of this position clear: "Colleges and universities have multiple privacy obligations: they must promote an ethical and respectful community and workplace, where academic and intellectual freedom thrives; they must balance security needs with civil and individual liberties, opportunities for using big data analytics, and new technologies, all of which directly affect individuals; they must be good stewards of the troves of personal information they hold, some of it highly sensitive; and finally, they also must comply with numerous and sometime overlapping or inconsistent privacy laws." Since creating and properly resourcing the CPO position is a critical step, it is encouraging to hear Celeste Schwartz, information technology vice president and chief digital officer for Montgomery County Community College in Pennsylvania, point out that CPOs are on the rise in higher education. "I think most colleges will have privacy officers in the next five to seven years," she said, predicting that this will, in fact, be required by law.61

Higher education expresses its values through policies, an important vehicle for bringing about change—not necessarily quickly but comprehensively. Depending on how broadly or narrowly "ethics policies" are defined, there may be many dozens at play. Many higher education institutions list hundreds. These vast collections of individual policies are clearly necessary, but the key to leadership on ethics and digital ethics is an overarching institutional policy or statement that connects them all. One example is the University of California's "Statement of Privacy Values," which defines privacy from an institutional lens and identifies it as an important value and priority that must be in balance with the other values and commitments of the university. The United Kingdom's Open University has a "Policy on the Ethical Use of Student Data for Learning Analytics" that provides this kind of broad ethical understanding, along with a section devoted to aligning the use of student data to core university values, underscoring the institution-wide perspective. Some institutions, like Siena College, have specific IT employee policies that address the ethical concerns unique to technology professionals. According to Mark Berman, the former chief information officer for Siena College, all IT staff are required to sign the code of ethics statement on an annual basis, not just when they are hired.62

Finally, in addition to the ways that specific colleges and universities use policies and statements to reinforce commitments and expectations, national and international organizations are working to ensure that higher education provides leadership in this area. The Association for Computing Machinery provides a comprehensive code of ethics and professional conduct to all computing professionals, as well as illuminating specific case studies and additional resources. AIR's "Statement of Ethical Principles" similarly maps out overarching ethical priorities involved in the use of data "to guide us as we promote the use of data, analytics, information, and evidence to improve higher education." Organizations are also developing or adopting important frameworks that provide more specific, concrete ethical actions that can be taken. Examples are New America's five-point framework for ethical predictive analytics in higher education and the international Montréal Declaration for a Responsible Development of Artificial Intelligence, which seeks to develop an ethical framework and open channels for an international dialogue about equitable, inclusive, and ecologically sustainable AI development (nearly 2,000 individuals and more than 100 organizations have signed the declaration).63

Policies are one clear way higher education is ensuring that digital ethics concerns remain top of mind, but there are many related ways to accomplish this goal. Kathy Baxter's article on ethical frameworks, tool kits, principles, and oaths offers numerous examples. To be most effective, these high-level policies or oaths should be supported by concrete efforts as well. For example, DJ Patil, Hilary Mason, and Mike Loukides make a strong case that the use of checklists is a critical way to "connect principle to practice." They offer a short version of an ethics checklist below and point to a ten-page version as well.64

Checklist for People Working on Data Projects

- Have we listed how this technology can be attacked or abused?

- Have we tested our training data to ensure it is fair and representative?

- Have we studied and understood possible sources of bias in our data?

- Does our team reflect diversity of opinions, backgrounds, and kinds of thought?

- What kind of user consent do we need to collect to use the data?

- Do we have a mechanism for gathering consent from users?

- Have we explained clearly what users are consenting to?

- Do we have a mechanism for redress if people are harmed by the results?

- Can we shut down this software in production if it is behaving badly?

- Have we tested for fairness with respect to different user groups?

- Have we tested for disparate error rates among different user groups?

- Do we test and monitor for model drift to ensure our software remains fair over time?

- Do we have a plan to protect and secure user data?

Source: DJ Patil, Hilary Mason, and Mike Loukides, "Of Oaths and Checklists," O'Reilly (website), July 17, 2018.
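One item on the checklist above, testing for disparate error rates among different user groups, lends itself to a small illustration. The Python sketch below is only one possible way to approach it and is not drawn from the checklist's authors: the function names, the record layout, and the sample data are all hypothetical, and a real review would use an institution's own data and an agreed-upon definition of what counts as an error.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: share of records where the prediction was wrong}.

    Each record is a (group, predicted, actual) tuple. This layout is an
    assumption made for illustration only.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up sample data: (group, predicted outcome, actual outcome).
sample = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 1, 0), ("B", 1, 1), ("B", 0, 0),
]

rates = error_rates_by_group(sample)
gap = max_error_gap(rates)
print(rates, gap)
```

A large gap between groups does not by itself prove that a model is unfair, but it is the kind of signal that the checklist suggests a team should notice and investigate before deployment.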

It's clearly useful for colleges, universities, and the larger community of higher education to develop ethics policies, overarching policies, and frameworks to map out what leadership in digital ethics can look like. However, if higher education institutions make progress but those building the edtech products don't share our values, we are unlikely to make more comprehensive progress. EDUCAUSE Core Data Service data from 2019 shows that 74 percent of institutions agree/strongly agree that they "have a procedure for vetting third parties or vendors (e.g., cloud services, connected applications) with respect to data security and privacy." Though this is a high percentage, it leaves roughly one-quarter of institutions that did not report having these procedures in place, which is worrisome. Keeping this in mind, EDUCAUSE and our partners are working to find productive ways to urge the supplier community to consider our values and demonstrate their commitment with action. The Higher Education Community Vendor Assessment Toolkit (HECVAT), developed by the HEISC and members from EDUCAUSE, Internet2, and the Research and Education Networking Information Sharing and Analysis Center (REN-ISAC), is a useful tool that compiles vetted and standardized prompts to use in procurement processes. While HECVAT was not designed specifically for AI applications and does not yet have a series of prompts overtly focused on digital ethics, both the EDUCAUSE HECVAT Community Group and the EDUCAUSE Chief Privacy Officer Community Group are discussing ways to incorporate privacy-related questions into the HECVAT. Meanwhile, the current version of the tool requires providers not just to identify if they have a data privacy policy but also, if so, to demonstrate whether the policy matches the institution's ethical principles. In addition, the HECVAT asks vendors and service providers to demonstrate their willingness to comply with policies related to user privacy and data protection.65

Embedded Ethics

Policies and other commitments are important ways to spur and mark progress in societal momentum around digital ethics, but the specific efforts of individual institutions also represent important and concrete progress. Early in 2018 the New York Times reported on institutions like Cornell, Harvard, MIT, Stanford, and the University of Texas at Austin, all of which were developing and, in varying degrees, requiring ethics courses for students. In fact, in order to be accredited by the Accreditation Board for Engineering and Technology (ABET), computer science programs must ensure that students understand ethical issues related to computing. Harvard's nationally recognized model seeks to imbue students with ethical thinking. According to Barbara Grosz, a professor of natural sciences at Harvard, stand-alone courses are part of the solution, but she notes that this approach could send the wrong message: that ethics is a capstone to be added after the "real work" is done. Harvard is widely sharing the model in the hopes that this approach will catch fire because the university envisions a culture shift that will lead to "a new generation of ethically minded computer science practitioners" and that will inspire "better-informed policymakers and new corporate models of organization that build ethics into all stages of design and corporate leadership." Bowdoin College is representative of other institutions that are working with faculty to bring about a similar shift: "So instead of just teaching one course in the subject, the aim is to help students develop what we are calling an 'ethics sensibility,' so developers will be aware of the implications of their work from the outset. We also want to help computer science faculty feel more comfortable teaching this type of content within their technical courses by providing the pedagogical framework and instructional resources to do so."66

Academic centers have an important role to play in leading the way. For example, founded in 1986, the Markkula Center for Applied Ethics at Santa Clara University is dedicated to an interdisciplinary approach to digital ethics. While working to ensure that Santa Clara graduates are ethics-minded, the center is uniquely dedicated to sharing free resources with other colleges and universities, including case studies, briefings, videos, and hundreds of articles and other materials on applied ethics across many disciplines and fields. Recently, the center added special resources for "Ethics in Technology Practice," designed for use in a professional setting, rather than in an academic one. Among the resources that the center offers, without charge, to any other college or university are three complete modules developed by the technology ethicist Shannon Vallor (data ethics, cybersecurity ethics, and software engineering ethics)—modules that have been used by instructors at more than two hundred institutions around the world. The center's Framework for Ethical Decision Making comes with a smartphone app designed to walk users through a more thoughtful and ethical decision-making process.

Another example of institutional action is the ambitious new MIT Schwarzman College of Computing, which demonstrates a significant early example of higher education reshaping itself to respond to the tectonic changes that artificial intelligence is introducing. MIT's press release and video accompanying the announcement of this billion-dollar investment describe it as the "most significant structural change to MIT since the early 1950s." The new college has a strong focus on ethics and a truly interdisciplinary approach. Half of the fifty new faculty positions will be appointed jointly with departments across MIT, seeking to benefit from the insights from other disciplines. Donor Stephen A. Schwarzman—chairman, CEO, and co-founder of Blackstone, a leading global investment firm—summarized the unique contribution higher education can make: "With the ability to bring together the best minds in AI research, development, and ethics, higher education is uniquely situated to be the incubator for solving these challenges in ways the private and public sectors cannot." MIT President L. Rafael Reif also underscored the ethical focus of the college: "Technological advancements must go hand in hand with the development of ethical guidelines that anticipate the risks of such enormously powerful innovations. This is why we must make sure that the leaders we graduate offer the world not only technological wizardry but also human wisdom—the cultural, ethical, and historical consciousness to use technology for the common good."67

Emerging from a period punctuated with dour public statements such as when Elon Musk called artificial intelligence "humanity's existential threat" and likened its growth to "summoning the demon," Stanford University launched its own billion-dollar project: the Institute for Human-Centered Artificial Intelligence (HAI), also a deliberately interdisciplinary group that "puts humans and ethics at the center of the booming field of AI." With value statements heavily focused on integrity, humanity, and interdisciplinarity, the institute, according to its press release, "will become the most recent addition to Stanford's existing interdisciplinary institutes that harness Stanford's collaborative culture to solve problems that sit at the boundary of disciplines."68

The interdisciplinary focus of higher education ethics centers reaches its full realization when colleges and universities like California State University–Long Beach develop "Ethics Across the Curriculum" initiatives modeled on the "writing across the curriculum" work of previous decades. Utah Valley University has been engaged in this work for decades, developing best practices for ethics education, such as a student symposium, a faculty summer seminar, fellowships, and more. It is no surprise that the university's more recent ethics work has had a strong digital ethics focus. Its "Ethics Awareness Week" in 2019, for example, focused on "Ethics, Technology, and Society" and included speakers on surveillance ethics, biomedical ethics, the ethics of digital literacy, artificial intelligence, and academic technology.

Student Demand for Digital Ethics

The final indicator that higher education is effectively leading the way—as no one else can—is when the awareness and commitment around digital ethics is apparent where it matters most: in students. After all, one could argue that the flurry of reactive work of corporations, regulators, and others amounts to managing the contents of Pandora's box after it has been opened. But in higher education, we have the opportunity to transform the approach to digital ethics for the next generation of students, who will, in turn, make up the next generation of coders, architects, data scientists, computer scientists, software developers, start-up entrepreneurs, CEOs, and decision-makers of all kinds. This is surely why the Markkula Center's vision statement includes the commitment to "double down on forming the ethical character of the next generation." There is already some evidence that students are responding to the opportunity. According to the New York Times, many students who have "soured on Big Tech" and who previously swooned at the prospects of working at high-profile technology companies are now seeking jobs that are both "principled and high-paying." For these students, "there is a growing sentiment that Silicon Valley's most lucrative positions aren't worth the ethical quandaries."69

Seven Questions about Digital Ethics

- Is there a community of concern related to digital ethics on your campus? Should you launch one?

- Does your campus have written policies or guidelines related to privacy and digital ethics? Can you find them?

- Do you know whose full-time job it is to worry about ethical issues? Have you had lunch with her or him?

- When someone on campus develops an application that uses student data, is any ethical framework used before work begins? Is one required?

- When someone on campus buys an application, is there any ethical review required?

- Do you know what your campus is doing to ensure that the next generation of developers and technology professionals (our students) have a strong digital ethics mind-set?

- Are you more informed about digital ethics this year than last? Will you be even more informed next year? How will you make this happen with everything else going on?

Next-Gen Ethics

One reality that cannot be ignored by anyone interested in advancing digital ethics is that change could very well happen only incrementally. Another daunting reality is the simple fact that at about the time we have fully wrapped our minds around the current set of worries, pitfalls, outrages, and solutions, there will be a new set of digital ethics quandaries before us. For example, there is already some initial consideration about the need for a new academic discipline focused on "machine behavior," based on the idea that "we cannot certify that an AI agent is ethical by looking at its source code, any more than we can certify that humans are good by scanning their brains." Instead, determining whether or not a given artificial intelligence is ethical may depend on our detailed academic study of behavior, just as with human beings. And at about the time we tackle that, we will be starting to grapple with whether artificial entities should have ethical protections—or at least the ethical protections that are afforded to animals. Northeastern University's John Basl argues: "In the case of research on animals and even on human subjects, appropriate protections were established only after serious ethical transgressions came to light (for example, in needless vivisections, the Nazi medical war crimes, and the Tuskegee syphilis study). With AI, we have a chance to do better."70

This time around we have a chance to do better and a moral imperative to do much better. Each of us individually, and higher education collectively, can and must lead the way. In The Age of Surveillance Capitalism, Zuboff draws our attention to the idea that surveillance capitalism has turned human experience, as expressed through data, into a new commodity primed for exploitation and misuse, much like "nature's once-plentiful meadows and forests before they fell to the market dynamic." This is a powerful lens through which to appreciate the beauty that is threatened and the consequences of inaction. Ireland was once an island of dense forests, with 80 percent forest cover. With the demand for wood to build navies and to fuel the fires of the industrial revolution, forest cover fell to 1 percent by the end of the nineteenth century. Through grants and incremental advances, the Irish government hopes to achieve nearly 20 percent forest cover by 2046.

Creating a sense of wonder and opportunity, the world of technology innovation energizes and inspires us. Our conviction that these innovations on the horizon will change the world for the better is a source of optimism. At the same time, the unrelenting drive to march forward could blind us to the need to pay attention to crucial, though far less shiny, ethical and moral imperatives. If we fail to take our ethical responsibilities seriously at this early stage of creating new digital ecosystems, the consequences will be dramatic and as hard to reverse as a vanished forest. Acting now on our individual college and university campuses can help us avoid the need to remediate later. Acting now can also enable us to continue to stride forward, enjoying the development of innovative new technologies, while also confident that we are moving ahead on ethically sound ground.

Notes

- John Del Signore, "OMGGGGGG! Texting Teen Girl Falls into Open Manhole," Gothamist, July 10, 2009; Charlie Sorrel, "Girl Falls into Manhole While Texting, Parents Sue," Wired, July 13, 2009.

- Audrey Watters wrote: "There's something about our imagination and our discussion of education technology that, I'd contend, triggers an amnesia of sorts. We forget all history—all history of technology, all history of education. Everything is new. Every problem is new. Every product is new. We're the first to experience the world this way; we're the first to try to devise solutions." Watters, "Ed-Tech and the Commercialization of School," Hack Education (blog), June 14, 2016.

- "The Great Exhibition" [https://www.clevelandart.org/research/in-the-library/collection-in-focus/great-exhibition], Cleveland Museum of Art (website), [n.d.].

- The Whitby Museum's tempest prognosticator also goes by its more precise name: "Atmospheric Electromagnetic Telegraph conducted by Animal Instinct."

- Vincze Miklós, "Seriously Scary Radioactive Products from the 20th Century," Gizmodo, May 9, 2013. See also "Radioactive Quack Cures," Oak Ridge Associated Universities, Health Physics Historical Instrumentation Museum Collection (website), May 28, 1998.