Is it possible to have the best of both a networked learning environment and an adaptive learning model implemented in the same system? The authors share their vision of the "next" next generation digital learning environment, the N2GDLE.

Two years ago, the EDUCAUSE Learning Initiative published its widely cited white paper The Next Generation Digital Learning Environment: A Report on Research. The authors' objective was to "explore the gaps between current learning management tools and a digital learning environment that could meet the changing needs of higher education." In doing so, they joined a long line of critics and reformers who had similarly observed that the learning management system (LMS) had been "highly successful in enabling the administration of learning but less so in enabling learning itself."1

Since the very dawn of the LMS era, learning theorists, practitioners, and technologists have expressed concerns about a technology paradigm inherently focused on "managing" learning. Indeed, multiple studies and reports have concluded that the LMS is largely used for content distribution and administrative purposes and, therefore, is not a significant driver of innovation and fundamental change in higher education.2

So where do we go from here? To the "next" next generation digital learning environment—or what we have dubbed the "N2GDLE."3 We believe that learning technology is maturing to the stage that it can be an "exoskeleton" for the mind. Higher education is on the cusp of a tectonic shift that will see human learning and intellectual capability substantially augmented by technology. But we need to move beyond LMS-centric thinking to realize that potential.

An Exoskeleton for the Mind

Why do we need to move beyond the technologies of the LMS "instructor paradigm," and beyond the thinking about learning that goes with them? Simply put, we face an urgent societal need to fully, efficiently, and effectively help all individuals realize their potential as learners and practitioners across an expected lifetime of learning. This cannot happen through current methods and tools for knowledge dissemination. We must unlock human potential by empowering all individuals to learn and contribute meaningfully in disciplines and fields of their choosing. We must use the tools at our disposal to finally close Benjamin Bloom's 2-sigma gap in achievement between personally tutored students and students in a traditional classroom.4
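Bloom's "2-sigma" figure is an effect size. A short worked equation shows what closing that gap would mean. It rests on a simplifying assumption: achievement scores in the conventional classroom are roughly normally distributed.

$$
d = \frac{\bar{x}_{\text{tutored}} - \bar{x}_{\text{conventional}}}{\sigma_{\text{conventional}}} \approx 2,
\qquad
\Phi(2) \approx 0.977
$$

Here $\Phi$ is the standard normal cumulative distribution function. Under that assumption, the average tutored student outperforms roughly 98 percent of conventionally taught students, which is how Bloom summarized his result.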

Augmenting Human Intellect: A Conceptual Framework, a 1962 SRI Summary Report, provides a touchstone for thinking about technology in service of human endeavors. Doug Engelbart, the author of the report, states in the introductory paragraph:

Increased capability in this respect is taken to mean a mixture of the following: more-rapid comprehension, better comprehension, the possibility of gaining a useful degree of comprehension in a situation that previously was too complex, speedier solutions, better solutions, and the possibility of finding solutions to problems that before seemed insoluble. . . . We do not speak of isolated clever tricks that help in particular situations. We refer to a way of life in an integrated domain where hunches, cut-and-try, intangibles, and the human "feel for a situation" usefully co-exist with powerful concepts, streamlined terminology and notation, sophisticated methods, and high-powered electronic aids.5

What Engelbart describes here amounts to an "exoskeleton for the mind." The suggested approach leverages technology to enable more effective interrogation of facts, concepts, and ideas, ultimately instilling habits of mind including meta-skills or attributes like curiosity, open-mindedness, intellectual courage, thoroughness, and humility. Technology, properly designed and implemented, can indeed function as a set of tools and processes that augment human learning and intellectual capability.

Since the dawn of the computer, instructional designers and learning scientists have envisioned the emergence of "intelligent tutoring systems" (ITSs) that would approximate the benefits of a live tutor. Unfortunately, ITSs have not lived up to their hoped-for potential. Most college and university classes are taught largely the way they were before the computer age.

Why is this the case? Early ITS efforts incorporated sound principles of instructional design: begin with the learning goal in mind, decide how it will be measured, then determine how students will be enabled to move from where they are to the achievement of the goal. This process of "backward design" was at the heart of pre-web learning systems built on centralized, "heavy iron" computers.

Through the 1970s and 1980s, ITS development and deployment saw some notable successes but was largely limited to specific disciplines (notably language instruction) and corporate training contexts. Fully mapping a learner's complete journey through a sequence of increasingly complicated learning outcomes was simply too expensive and too complicated. Even more importantly, the ITS model threatened to undo the roles and relationships at the heart of education. If a computer could intelligently tutor, what was the role of the teacher? Was the classroom experience necessary? The tension in these questions was never resolved; instead, the emergence of networked computers diverted attention from them.

The focus on collaborative learning networks grew exponentially with the birth of the Internet and later the World Wide Web. The paradigmatic change accelerated the marginalization of now passé ITS-like systems. Focus and attention shifted to resource sharing, web-based discussion, idea sharing, and collaboration. In its earliest manifestations, networked learning in higher education was grassroots, with innovative faculty members building course web pages replete with hyperlinked resources. The more adventurous faculty included discussion boards, polls, and quizzes. The unstoppable democratization of the web soon yielded tools that all faculty members could use to build their own course web pages.

In 2017, the ascendancy and ubiquity of personal computing are taken for granted. We do more on mobile devices today than was dreamt of with early PCs.6 But what happened to the vision of intelligent tutoring technology that was to become the exoskeleton for learners' minds? For much of the two decades since the birth of the LMS, technological innovation in teaching and learning has focused on collaboration and on new and better ways to present content. Unsurprisingly, neither of these domains has led to significant change in the traditional roles of, or relationships between, teachers and learners.

Because the LMS is anchored in semester-based sections of instructor-led courses, anything resembling an innovation is largely "bolted on" rather than transformational. Although such additions point in the right direction, simply adding collaboration and assessment tools to the LMS leaves core learning processes and roles largely unchanged. As a result, the ways learners are given context and guidance as they work toward their learning and credential goals have not changed significantly.

While the LMSs have delivered consistent, incremental, feature-adding innovation, the most (potentially) transformational recent developments have come from a return to the promise of the ITS. Numerous adaptive learning providers have emerged, making the same aspirational promises that proponents of the ITS model made forty years ago. But this time around, a voracious appetite for innovative solutions that promise to improve learning, along with a willingness to work through or ignore the fundamental challenge to traditional instructor and student roles, is providing more fertile ground for an intelligent tutoring renaissance. Advances in technology, machine learning, AI, and learning science, together with growing urgency around retention, persistence, and completion, have further increased interest in these solutions.

How could the adoption of networked learning technology (i.e., the LMS) pave the way for the reemergence of the once universally rejected ITS model? The familiarity of the LMS and the ubiquity of "big data" and "recommendation engines" in other parts of our lives have diminished resistance to the idea of computers prompting and guiding decision making and "pathing" in the learning process. We have witnessed a slow, natural-selection process that brings us to the possibility of the N2GDLE vision, a model that includes the networked learning capabilities of the traditional LMS and the computer-guided learning vision of the ITS. The N2GDLE will augment and enhance learning by connecting learners meaningfully with instructors and fellow learners in structured ways that enable, accelerate, and support the learning process. By adaptively and dynamically updating learners' paths across programs, the N2GDLE will increase the probability that students complete their programs and earn credentials. The transformational impact of the N2GDLE will not come from simply arranging and presenting content in more personalized ways. Rather, it will come through the synergistic combination of networked learning and smart pathing, enabling instructors to track the complex pathways being taken by large numbers of students and make timely, pinpointed interventions and nudges to propel cohorts and individuals along their way.

The N2GDLE Model

The 2015 NGDLE white paper explicitly called for progress in five key areas: (1) Interoperability and Integration, (2) Personalization, (3) Analytics, Advising, and Learning Assessment, (4) Collaboration, and (5) Accessibility and Universal Design.

These are indisputably critical areas of focus for a high-quality digital learning environment (DLE). As important as all of these are, they are in some ways too generic to describe the kind of transformational DLE required to take us into the "next generation" of teaching and learning. A modern DLE of any generation is virtually unthinkable without built-in standards support, readily available to connect and share data with a myriad of other tools and services. If the N2GDLE is to support both networked and adaptive learning models, it will almost certainly include learning tool components that need to talk to each other. Standards-based integration and interoperability enable each component of a federated DLE to do a single thing or a few things very well, instead of trying to do all things poorly.

Both of us have committed significant time and energy to learning technology standards and specification definition, refinement, and implementation, particularly through IMS Global. Initially a project of EDUCAUSE, IMS spun off as an independent organization in 1999. Functioning as a nonprofit, it brings together institutions, vendors, and practitioners to identify, design, adopt, and validate learning technology interoperability standards available under royalty-free licenses (see sidebar).

Selected IMS Global Learning Technology Specifications and Initiatives

- Maturation and growing adoption of the Learning Tools Interoperability (LTI) specification v1.2 and 2.0

- Caliper 1.0 analytics specification (and initial alignment with xAPI)

- Open Badges Initiative (OBI) 2.0 Candidate Final

- Competency-Based Education / Extended Transcript Project

- OneRoster v1.1

- Thin Common Cartridge v1.3 and Common Cartridge v1.3

As new adaptive, competency-based, and programmatic learning platforms emerge, they are likely to be integrated with existing LMSs and an ecosystem of other tools. As envisioned in the 2015 NGDLE white paper: "If the equivalent of the Lego specification could be articulated for the NGDLE, it would serve as the basis for the confederation we propose. We are suggesting an NGDLE-conformant standard or specification, which would be based on adherence to a coordinated set of component standards. Once such a standard is in place, future investments and development efforts could be designed around the NGDLE specifications."

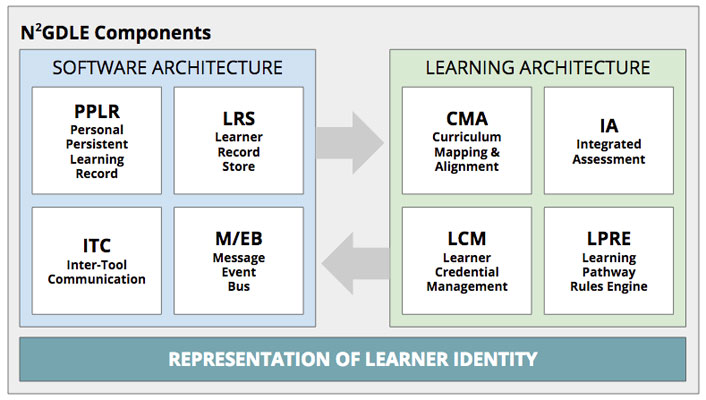

Our vision for the N2GDLE builds on the interoperable, Lego-model DLE. It assumes interoperability. It incorporates both the networked learning model of the LMS and the adaptive, personalized learning model of the ITS. While retaining many of the elements initially envisioned for the NGDLE, we organize this 2017 version around two major categories of required components: software architecture and learning architecture (see figure 1).

Figure 1. N2GDLE Components

Software Architecture

Personal Persistent Learning Record (PPLR): While it might have been possible for a traditional LMS vendor to assert that it met all the requirements of the original NGDLE, the N2GDLE is different at its roots. Most significantly, it is built from the ground up around individual learners: their learning goals, activities, assessments, and achievements. The N2GDLE, therefore, requires a personal student learning record that tracks learner engagement in and with programs, courses, learning activities, content, other students, projects, internships, and co- and para-curricular experiences. It will also provide holistic, integrated views of a learner's experience across all of these (currently disparate and siloed) experiences.7 This vision explicitly requires the PPLR to receive learning activity and achievement data from multiple sources and systems. Since it serves as the repository of all learner goals, achievements, activities, and interactions, it necessarily includes data from multiple learning platforms, environments, and even institutions. Data interoperability and formatting standards—via Inter-Tool Communication and the Message/Event Bus discussed below—are absolutely essential to the PPLR.
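A minimal Python sketch (Python 3.9+) makes the aggregation requirement concrete. It shows a learner-centric record that accepts entries from several source systems and institutions and returns one holistic view, grouped by kind of experience. The names `LearningRecord` and `PersonalLearningRecord`, and their fields, are hypothetical illustrations, not drawn from any specification. A production PPLR would receive its data through standards-based channels rather than direct method calls.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LearningRecord:
    """One entry reported by a source system (all field names are hypothetical)."""
    learner_id: str
    source: str        # reporting system, e.g. an LMS or an internship portal
    institution: str   # may differ from record to record
    kind: str          # "course", "project", "internship", "co-curricular", ...
    title: str
    outcome: str       # "completed", "in progress", a grade, a badge name, ...


@dataclass
class PersonalLearningRecord:
    """Learner-centric store that merges records from many systems and institutions."""
    learner_id: str
    records: list[LearningRecord] = field(default_factory=list)

    def ingest(self, record: LearningRecord) -> None:
        # Reject records that belong to someone else.
        if record.learner_id != self.learner_id:
            raise ValueError("record belongs to a different learner")
        self.records.append(record)

    def holistic_view(self) -> dict[str, list[str]]:
        # Group every experience by kind, regardless of which system reported it.
        view: dict[str, list[str]] = defaultdict(list)
        for r in self.records:
            view[r.kind].append(f"{r.title} ({r.institution}): {r.outcome}")
        return dict(view)


pplr = PersonalLearningRecord("learner-42")
pplr.ingest(LearningRecord("learner-42", "campus-lms", "State University",
                           "course", "Statistics 101", "completed"))
pplr.ingest(LearningRecord("learner-42", "internship-portal", "Acme Corp",
                           "internship", "Data Analyst Intern", "in progress"))
view = pplr.holistic_view()
```

Keying the view by kind of experience, not by source system, is the point of the design: the learner's course and internship appear side by side even though two different systems reported them.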

Learning Record Store (LRS): The LRS is fast emerging as the system of record for transactional learning and achievement data. Beyond those transactions, the LRS includes a meta-layer of learner behavior data that is essential to understanding and intervening in the learning process. IMS Global's Caliper Analytics standard and the xAPI specification (developed under the Advanced Distributed Learning Initiative) provide frameworks for instrumenting learning systems so that activity data is emitted dynamically as learners use them and is then "sensed" and stored in the LRS.
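To illustrate what instrumented systems emit, the sketch below builds statements in the actor, verb, and object shape used by xAPI. The two verb IRIs come from the ADL verb vocabulary. `InMemoryLRS` is a hypothetical toy stand-in: a conforming LRS exposes the xAPI REST interface, validates statements, and supports far richer queries. Caliper events follow a different data model and are not shown.

```python
from __future__ import annotations

import uuid
from datetime import datetime, timezone

COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
ATTEMPTED = "http://adlnet.gov/expapi/verbs/attempted"


def make_statement(email: str, name: str, verb_id: str, verb_label: str,
                   activity_id: str) -> dict:
    """Build a minimal xAPI-style statement: who (actor) did what (verb) to what (object)."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {"objectType": "Activity", "id": activity_id},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


class InMemoryLRS:
    """Toy stand-in for an LRS (a real one exposes the xAPI REST API)."""

    def __init__(self) -> None:
        self._statements: list[dict] = []

    def store(self, statement: dict) -> str:
        self._statements.append(statement)
        return statement["id"]

    def query(self, agent_mbox: str | None = None,
              verb_id: str | None = None) -> list[dict]:
        # Filter stored statements by agent and/or verb, as an LRS query might.
        return [
            s for s in self._statements
            if (agent_mbox is None or s["actor"]["mbox"] == agent_mbox)
            and (verb_id is None or s["verb"]["id"] == verb_id)
        ]


lrs = InMemoryLRS()
lrs.store(make_statement("ana@example.edu", "Ana", COMPLETED, "completed",
                         "https://example.edu/activities/unit-3-quiz"))
lrs.store(make_statement("ana@example.edu", "Ana", ATTEMPTED, "attempted",
                         "https://example.edu/activities/unit-4-quiz"))
completed = lrs.query(agent_mbox="mailto:ana@example.edu", verb_id=COMPLETED)
```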

Inter-Tool Communications (ITC): In the Lego model, multiple tools can be used seamlessly throughout the learning experience. Single sign-on launch and use of these tools is essential, but the tools must also be able to communicate with each other. In some cases, a simple data return from a launched tool back to the consumer (the platform that launched it) is sufficient. In other cases, an N2GDLE designer, a learning engineer, an instructional designer, or a faculty member might want two or more tools to more actively "listen" to each other. In these cases a persistent, real-time Message/Event Bus (see below) may be required.
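The "simple data return" case can be sketched under stated assumptions. A launched tool normalizes its raw score to the 0.0 to 1.0 range before sending it back, which is the range LTI 1.1's Basic Outcomes service expects. `ScoreReturn` and `build_score_return` are hypothetical names. The sketch covers only the payload logic, not LTI message signing or transport. The `resource_link_id` field borrows the name of the LTI launch parameter that identifies where the tool was placed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ScoreReturn:
    """Hypothetical payload a launched tool sends back to the platform that launched it."""
    learner_id: str
    resource_link_id: str   # which placement of the tool the score belongs to
    score: float            # normalized to the 0.0-1.0 range


def build_score_return(learner_id: str, resource_link_id: str,
                       points: float, max_points: float) -> ScoreReturn:
    # Normalize raw points so the launching platform never needs the tool's own scale.
    if max_points <= 0:
        raise ValueError("max_points must be positive")
    score = min(max(points / max_points, 0.0), 1.0)
    return ScoreReturn(learner_id, resource_link_id, round(score, 4))


result = build_score_return("learner-42", "unit-3-simulation", points=17, max_points=20)
```

Clamping to the valid range means bonus points or negative penalties inside the tool cannot produce a score the platform would reject.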

Message/Event Bus (MEB): As tools in the N2GDLE multiply, dynamic, real-time messaging between them becomes critical. Every tool generates its own activity stream log, but some events are more important and relevant than others. Learning tools are increasingly likely to support the monitoring of these events with sensors, which transport the pulses of synaptic activity that record each learning interaction. This information has value not just for the learner's immediate progress through a learning sequence but also for actions that might be taken by related supporting systems and tools (as described above). Timely actions require stream processing, and that stream is the MEB.
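The "listening" pattern can be sketched as a tiny in-process publish/subscribe bus. Each tool subscribes only to the event topics it cares about, so high-value events reach interested tools immediately while routine activity passes by. The topic names and classes are hypothetical. A real MEB would more likely run on a dedicated streaming or messaging platform with persistence and delivery guarantees.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class LearningEvent:
    topic: str          # hypothetical topic names, e.g. "assessment.failed"
    learner_id: str
    payload: dict


Handler = Callable[[LearningEvent], None]


class MessageEventBus:
    """In-process publish/subscribe bus; a deployment would use a streaming platform."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, event: LearningEvent) -> int:
        # Deliver only to tools that asked for this topic; return how many were notified.
        handlers = self._subscribers.get(event.topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


alerts: list[str] = []
bus = MessageEventBus()
# An advising tool "listens" only for failed assessments.
bus.subscribe("assessment.failed", lambda event: alerts.append(event.learner_id))
ignored = bus.publish(LearningEvent("page.viewed", "learner-42", {"page": 3}))
delivered = bus.publish(LearningEvent("assessment.failed", "learner-42", {"item": "q7"}))
```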

Learning Architecture

Curriculum Mapping and Alignment (CMA): Most currently available learning environments do not readily enable curriculum managers, learning engineers, instructional designers, and faculty members to document learning outcomes and competencies and then establish dynamic data relationships (e.g., via meta-tagging) among those outcomes, the learning activities intended to help learners achieve them, and the assessments designed to measure (formatively or summatively) their progress toward and demonstration of the outcomes. The N2GDLE must allow various curricular stakeholders to create these maps; ensure alignment between outcomes, activities, and assessments; monitor learner progress through backward-designed and aligned programs; see individual and cohort data to drive success; and then analyze data periodically to improve the curriculum. More advanced versions of CMA functionality would allow learners to specify their own learning goals, map them to learning activities and experiences, and discover ways to self-validate achievement of those goals.8
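One way to picture these "dynamic data relationships" is as tags linking each activity and assessment to the outcomes it serves. Alignment gaps then fall out of simple set arithmetic. This Python sketch assumes a hypothetical `CurriculumMap` schema. It reports three kinds of gap: outcomes that no activity teaches, outcomes that no assessment measures, and tags that point at undocumented outcomes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CurriculumMap:
    """Tags linking outcomes to activities and assessments (a hypothetical schema)."""
    outcomes: set[str] = field(default_factory=set)
    activity_tags: dict[str, set[str]] = field(default_factory=dict)    # activity -> outcomes
    assessment_tags: dict[str, set[str]] = field(default_factory=dict)  # assessment -> outcomes

    @staticmethod
    def _covered(tags: dict[str, set[str]]) -> set[str]:
        # Union of every outcome referenced by any tagged item.
        covered: set[str] = set()
        for outcome_set in tags.values():
            covered |= outcome_set
        return covered

    def alignment_gaps(self) -> dict[str, set[str]]:
        """Report outcomes nobody teaches, outcomes nobody measures, and stray tags."""
        taught = self._covered(self.activity_tags)
        measured = self._covered(self.assessment_tags)
        return {
            "not_taught": self.outcomes - taught,
            "not_assessed": self.outcomes - measured,
            "unknown_tags": (taught | measured) - self.outcomes,
        }


cmap = CurriculumMap(
    outcomes={"interpret data", "build models", "communicate findings"},
    activity_tags={"lab-1": {"interpret data"},
                   "reading-2": {"build models", "communicate findings"}},
    assessment_tags={"quiz-1": {"interpret data"},
                     "project": {"build models", "ethics"}},
)
gaps = cmap.alignment_gaps()
```

In this example, "communicate findings" is taught but never assessed, and the project is tagged with an "ethics" outcome that was never documented.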

Integrated Assessment (IA): Once learning goals or competencies have been articulated and aligned with assessments, those assessments need to be presented to learners in the right sequence and flow of the learning experience. Curriculum designers and learning engineers need the ability to deploy a formative assessment anywhere, anytime. Support must also be provided for multiple assessment types, including a variety of traditional computer-scored items, interactive assessments and simulations, homework activities, and rubric-based assessments of student work or performance. Any and all of these should be easily integrated at any juncture of the curriculum, in either formative or summative mode.
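Deploying an assessment "anywhere, anytime" can be sketched minimally. Each assessment carries a type and a formative or summative mode, and it can be inserted at any position in a learning sequence. The `Assessment` class, `Mode` enum, and `insert_assessment` function are hypothetical. The plain string steps stand in for whatever activity objects a real environment would use.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    FORMATIVE = "formative"
    SUMMATIVE = "summative"


@dataclass(frozen=True)
class Assessment:
    name: str
    kind: str    # e.g. "auto-scored item", "simulation", "homework", "rubric"
    mode: Mode


def insert_assessment(sequence: list[str | Assessment], position: int,
                      assessment: Assessment) -> list[str | Assessment]:
    """Return a new sequence with the assessment placed at any juncture (0..len)."""
    if not 0 <= position <= len(sequence):
        raise IndexError("position outside the sequence")
    return sequence[:position] + [assessment] + sequence[position:]


unit = ["video: supply and demand", "reading: elasticity", "discussion"]
unit = insert_assessment(unit, 2, Assessment("elasticity check", "auto-scored item",
                                             Mode.FORMATIVE))
unit = insert_assessment(unit, len(unit), Assessment("unit project", "rubric",
                                                     Mode.SUMMATIVE))
formative_at = [i for i, step in enumerate(unit)
                if isinstance(step, Assessment) and step.mode is Mode.FORMATIVE]
```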

Learner Credential Management (LCM): LCM is an essential component of the N2GDLE because it allows institutions to decide what credentials they will grant when learners demonstrate various competencies. Associate's and bachelor's degrees are as important as they ever were, but other kinds of credentials—including badges, certificates, and other forms of microcredentials—are growing in relative importance. Institutions need the flexibility to grant credentials for the knowledge, skills, and abilities they want to certify for their learners. The architecture behind the storage and sharing of these credentials—for example, through "badgechain" projects9—is the subject of another discussion.
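Institution-defined credential rules can be sketched as sets of required competencies. A learner qualifies for each credential whose requirements are a subset of the competencies they have demonstrated. The rule format and the example credentials are hypothetical. Storage and sharing (for example, Open Badges or badgechain approaches) are deliberately left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CredentialRule:
    """Institution-defined rule: demonstrate these competencies, earn this credential."""
    credential: str
    credential_type: str          # e.g. "badge", "certificate", "degree"
    required: frozenset[str]      # competencies that must all be demonstrated


def eligible_credentials(demonstrated: set[str], rules: list[CredentialRule]) -> list[str]:
    # Subset test: a credential is earned once every required competency is demonstrated.
    return [rule.credential for rule in rules if rule.required <= demonstrated]


rules = [
    CredentialRule("Data Visualization Badge", "badge",
                   frozenset({"charting", "storytelling"})),
    CredentialRule("Applied Analytics Certificate", "certificate",
                   frozenset({"charting", "storytelling", "regression"})),
]
earned = eligible_credentials({"charting", "storytelling"}, rules)
```

Because each rule is independent, an institution can stack microcredentials toward larger ones simply by making one rule's requirements a superset of another's.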

Learning Pathway Rules Engine (LPRE): An LPRE enables N2GDLE administrators, learning engineers, and others to establish rules, triggers, and logic that dynamically update pathways to maximize learning effectiveness and efficiency. It also makes possible a design pattern library that eases the construction of learning sequences in the N2GDLE. The LPRE opens the door to personalized pathing at the level where it matters most: degree and credential attainment. While it is necessary for students to succeed in their courses, it is not sufficient. They must also acquire knowledge, skills, and abilities that they can then apply in more advanced courses. And our systems need to be smart enough to direct learners back to review and relearning activities when they struggle to remember or effectively apply previously demonstrated competencies at later stages in a program. For millions of students, this co-reiterative approach made possible by the LPRE might literally be the difference between earning credentials and dropping out.
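A rules engine of this kind can be sketched as trigger and action pairs evaluated against a learner's current state. In this hypothetical Python sketch, the state maps each competency to a mastery estimate between 0.0 and 1.0. That is an assumed model; the article does not specify one. A review rule routes the learner back to relearning whenever a previously demonstrated competency falls below a threshold.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PathwayRule:
    name: str
    condition: Callable[[dict[str, float]], bool]   # trigger evaluated on learner state
    action: str                                     # step to route the learner to


def review_rule(competency: str, threshold: float, review_activity: str) -> PathwayRule:
    """Send learners back to review when a previously demonstrated competency slips."""
    return PathwayRule(
        name=f"review-{competency}",
        # A competency with no estimate yet is treated as below threshold.
        condition=lambda state: state.get(competency, 0.0) < threshold,
        action=review_activity,
    )


def next_steps(state: dict[str, float], rules: list[PathwayRule], planned: str) -> list[str]:
    # Fire every matching rule; if none match, continue on the planned pathway.
    fired = [rule.action for rule in rules if rule.condition(state)]
    return fired or [planned]


rules = [
    review_rule("fractions", 0.7, "fractions refresher"),
    review_rule("ratios", 0.7, "ratios refresher"),
]
struggling = next_steps({"fractions": 0.9, "ratios": 0.55}, rules,
                        "Unit 5: proportional reasoning")
on_track = next_steps({"fractions": 0.9, "ratios": 0.8}, rules,
                      "Unit 5: proportional reasoning")
```

Factory functions such as `review_rule` hint at the "design pattern library" idea: a common pathing pattern is written once and then instantiated for many competencies.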

Representation of Learner Identity

Finally, learners need the ability to curate their own credentials over time and across multiple institutions and organizations. These credentials can be curated and stored in the learner's exported version of the PPLR, in a portfolio, and/or via an extended transcript. But this is not a static record. It needs to be constructed from the immutable elements of recognized learning and then assembled and presented differently depending on the audience the learner is trying to reach. Managing the representation of a learner's skills and abilities to potential employers, collaborators, and clients will become an essential capability. Whether loosely coupled with the N2GDLE or closely integrated into it, the representation of learner identity is a must-have component of future DLEs.
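One immutable record can be presented differently to different audiences. This can be sketched with frozen (unchangeable) achievement records and a view function that selects and orders them by audience interest. The `Achievement` fields and the tag-matching approach are hypothetical simplifications. Verifying each achievement is out of scope here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Achievement:
    """Immutable element of recognized learning (field names are hypothetical)."""
    title: str
    issuer: str
    tags: frozenset[str]


def present_for(audience_tags: set[str],
                achievements: tuple[Achievement, ...]) -> list[str]:
    # Same underlying record, different presentation: keep what this audience cares about.
    selected = [a for a in achievements if a.tags & audience_tags]
    return [f"{a.title}, {a.issuer}" for a in sorted(selected, key=lambda a: a.title)]


record = (
    Achievement("B.S. Biology", "State University", frozenset({"science", "degree"})),
    Achievement("Python for Data Analysis Badge", "Coding Bootcamp",
                frozenset({"data", "programming"})),
    Achievement("Lab Safety Certificate", "State University",
                frozenset({"science", "lab"})),
)
for_data_employer = present_for({"data", "programming"}, record)
for_research_lab = present_for({"science"}, record)
```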

The Path Forward

We have outlined an aggressive and aspirational vision of the N2GDLE. Is it possible to have the best of both a networked learning environment and an adaptive learning model implemented in the same "system"? We believe the answer is yes. And the components we describe above are all within reach.

The benefits of such a system justify pursuing, designing, and implementing it. By definition, the N2GDLE will produce learning programs that are aligned with clearly articulated goals and competencies. Learner progress toward them will be dynamically supported through rich interaction and personalized learning paths. And learner achievements will be validated through aligned assessments represented in either a traditional course structure or emerging competency frameworks.

The path from where you are today to an N2GDLE begins with four concrete steps.

1. Map out your current learning infrastructure. Create a rubric to compare it with the principles set out in the original NGDLE white paper and in this article on the N2GDLE. Bring together key leaders from across your institution to discuss which learning workflows you want to support.

Write a learning charter that articulates what would be better for learners, teachers, administrators, and other institutional stakeholders if you implemented an N2GDLE that supported the learning workflows you have mapped out. Build support for the charter. Include students, faculty, and administrators in the discussion, refinement, and codification of the charter.

Concurrently, diagram and architect a version of the N2GDLE that supports your new learning model. Once you have defined the core, add on the appropriate learning tool Lego blocks to flesh out your learning environment.
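For step 1, a rubric comparison can be as simple as scoring each N2GDLE component and listing the largest gaps first. This sketch assumes a hypothetical 0 to 3 scale and a target score of 2. The component list follows this article; an institution would substitute its own criteria, including the five NGDLE areas.

```python
from __future__ import annotations

# Hypothetical rubric scale: 0 = absent, 1 = partial, 2 = adequate, 3 = strong.
COMPONENTS = ["PPLR", "LRS", "ITC", "MEB", "CMA", "IA", "LCM", "LPRE", "Learner Identity"]


def gap_report(scores: dict[str, int], target: int = 2) -> list[tuple[str, int]]:
    """List components scoring below target, largest gap first; unscored counts as 0."""
    gaps = [(name, target - scores.get(name, 0))
            for name in COMPONENTS if scores.get(name, 0) < target]
    # sorted() is stable, so ties keep the order of COMPONENTS.
    return sorted(gaps, key=lambda item: item[1], reverse=True)


current = {"PPLR": 0, "LRS": 1, "ITC": 3, "MEB": 0, "CMA": 2, "IA": 2, "LCM": 1}
report = gap_report(current)
```

Treating unscored components as 0 keeps the report honest: anything the team has not yet assessed surfaces as a gap instead of silently disappearing.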

2. Begin with your current enterprise learning technology providers. Invite them to help you think through a short-, medium-, and long-range plan to get there. You are not going to turn off (or significantly alter the way you use) your current LMS and launch your N2GDLE in six months or a year. This process will take time, planning, and leadership. And resources. So create a plan now. And take your first steps toward it.

If your current technology providers are unable or unwilling to embrace your vision and help you see it through, explore relationships with others, including nontraditional platform partners, particularly those who have a learner- and learning-centric approach and architecture. And seek out partner institutions that share the same vision and can collaborate with you and your selected technology partners on design, development, and implementation. Form your coalition, and start laying the groundwork for an implementation of your N2GDLE.

3. Understand that as difficult as the technology might be to envision, articulate, and implement, the culture changes required between where you are now and where you need to be to implement it will be much, much harder. This change comes in two parts. First is the curriculum-level design. Begin with the end in mind. Have a clear vision for the improvements you're targeting in learner retention, success, attainment, and placement. Clearly and explicitly articulate examples of what you want learners to know and to be able to do when they finish any program you offer. Ask how you can tell if a learner has demonstrated that ability. Focus on supporting the knowledge, skills, and abilities that are the building blocks of the program. Ensure that your curriculum is tightly aligned with its goals and that assessments thereof are authentic, valid, and reliable.

One of the most important considerations in this process will be to thoughtfully identify what kinds of interactions and modalities make the most sense for which learning activities, for which learners, in which contexts, and in what order. Certainly, conducting some activities face-to-face continues to make the most sense, when feasible. But what about when doing so is not feasible? How can we scale effective learning? Or how can we make scaled learning (e.g., large lecture hall courses) more effective by leveraging the networked and personalized learning capabilities of an N2GDLE? How might faculty, student, administrator, advisor, mentor, and other roles change, and why? Can you scale introductory courses so that fewer high-quality instructors can serve more students, freeing resources to expand personalized experiential learning later in the program?

To be successful, this effort must be collaborative across all the faculty members who teach in a program. This will require a good deal of work, deliberation, cooperation, compromise, and culture change. Making the transition from a "my course" worldview to an "our course" perspective is difficult. Moving to an "our shared program" view is harder still. Some institutions, colleges, departments, and programs are ready for such a journey. Others are not. Start where you are, and begin having the conversation.

4. Reconceive course and program design as a team activity. This final shift is perhaps the biggest. To realize the transformational potential of the N2GDLE, we need to get beyond our narrow section-by-section, semester-to-semester micro-innovation projects. As Herbert Simon observed: "Improvement in post-secondary education will require converting teaching from a 'solo sport' to a community-based research activity."10 At least in part, this means that all who are responsible for helping learners succeed in a program need to rethink and redesign the interactions of the learner in the classroom, in other face-to-face contexts, and in a DLE. These should all be designed through an integrated, holistic lens to maximize learner success. Doing so means going far beyond simply providing support for learning; it means a move to designing and implementing new and different learning interactions and sequences that will be instrumental in the teaching and learning process.

As Simon emphasized, research must be the unifying activity in this collaborative, holistic approach to curriculum. The pedagogical responsibility of learning design morphs from the purview of individual faculty members to a shared responsibility of a team of professionals working together to drive specific improvements in the teaching and learning process. These professionals bring skills from learning sciences, pedagogy, cognitive and social psychology, neuroscience, the performance arts, design, assessment and psychometrics, statistics, programming, graphic design, UI/UX, video production, project management, and more. New roles, such as learning engineers,11 are likely to emerge. All of these professionals complement the faculty expertise in the disciplines around which programs, courses, and learning activities are focused.

Start by identifying a degree program and assembling a multidisciplinary team with the range of professional skills described above. Then empower this group by giving members the responsibility of designing an integrated, programmatic learning experience that will drive learner success toward a terminal degree or credential, complete with all the networked and personalized learning capabilities of the N2GDLE. They should begin with the end in mind, imagining various student starting points and pathways to success. They should focus more on learning experiences than on courses, although the end result may well be delivered via traditional courses. Then subgroups can tackle the specific activities and courses that roll up to the programmatic paths envisioned.

In this model, faculty leaders, faculty teams, and individual faculty members remain at the center of the teaching and learning process. They are the face of learning for their students. To extend Simon's sports analogy, they are the quarterbacks. But they now take the field with an entire team focused on learner success.

****

It would be far too easy to finish reading this article on the N2GDLE (however you pronounce that) and conclude that it is a nice, aspirational thought exercise. Indeed, if we are content with the status quo, we can simply stand pat with the tools, processes, and role definitions that structure teaching and learning at our higher education institutions today. But if we want to transform that structure and dramatically change results, a new paradigm is required. Now is the time to start our journey.

Notes

1. Malcolm Brown, Joanne Dehoney, and Nancy Millichap, The Next Generation Digital Learning Environment: A Report on Research, an EDUCAUSE Learning Initiative (ELI) white paper (April 2015), 2.

2. See, for example: Glenda Morgan, "Faculty Use of Course Management Systems," EDUCAUSE Center for Applied Research (ECAR) Research Study 2 (2003); George Siemens, "Learning Management Systems: The Wrong Place to Start Learning" [http://www.elearnspace.org/Articles/lms.htm], elearnspace, November 22, 2004; Joanne L. Badge, Alan J. Cann, and Jon Scott, "E-Learning versus E-Teaching: Seeing the Pedagogic Wood for the Technological Trees," Bioscience Education 5, no. 1 (2005); Leigh Blackall, "Die LMS Die! You Too PLE!" Teach and Learn Online, November 13, 2005; Niall Sclater, "Web 2.0, Personal Learning Environments, and the Future of Learning Management Systems," ECAR Research Bulletin 2008, no. 13 (June 24, 2008); Jon Mott and David Wiley, "Open for Learning: The CMS and the Open Learning Network," in education 15, no. 2 (2009); Louis Pugliese, "A Post-LMS World," EDUCAUSE Review 47, no. 1 (January/February 2012).

3. This is a bit of a tongue-in-cheek name for whatever platforms come next. Given the wide variety of names for emerging technologies, the baggage of the LMS designation, and the painful attempts to pronounce "NGDLE" ("Ung-gud-ull?"), we are intentionally choosing an esoteric name that is unlikely to roll off anyone's tongue. We prefer the rather simpler "learning environment" moniker.

4. Benjamin S. Bloom, "The 2 Sigma Problem: The Search for Methods of Group Instruction as Effective as One-to-One Tutoring," Educational Researcher 13, no. 6 (June-July 1984).

5. Douglas C. Engelbart, Augmenting Human Intellect: A Conceptual Framework, SRI Summary Report AFOSR-3223 (Menlo Park, CA: Stanford Research Institute, 1962), 1.

6. See "Processing Power Compared" [http://pages.experts-exchange.com/processing-power-compared/], Experts Exchange (website), accessed April 17, 2017.

7. This approach will rightly challenge the traditional distinction between the curricular and co-curricular dimensions of the collegiate experience. To that end, we encourage academic and student life administrators to reach across the proverbial aisle and rethink the boundaries that separate them.

8. This is in the spirit of self-asserted aspirational badging, wherein learners express their interest in achieving a competency and seek community help to articulate the path toward it. Agreed-upon interim milestones could be formally endorsed by content experts (e.g., faculty) to qualify the steps toward achievement.

9. See the UTBadgeChain [http://utbadgechain.org/] project and OpenBlockchain.

10. Herbert A. Simon, "Need Teaching Be a Loner's Sport?," Distinguished Lecture Series at Carnegie Mellon University, Pittsburgh, PA, April 1996.

11. Herbert A. Simon, "The Job of a College President," Educational Record 48 (Winter 1967); Bill Jerome, "The Need for Learning Engineers (and Learning Engineering)," e-Literate, April 14, 2013; Bror Saxberg, "Why We Need Learning Engineers," Chronicle of Higher Education, April 20, 2015.

Phillip D. Long is Chief Innovation Officer, Project 2021, and Associate Vice Provost for Learning Sciences at the University of Texas at Austin.

Jon Mott is Chief Learning Officer at Learning Objects.

© 2017 Phillip D. Long and Jon Mott. The text of this article is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License.

EDUCAUSE Review 52, no. 4 (July/August 2017)