© 2008 Michael Gardner, John Scott, and Bernard Horan

EDUCAUSE Review, vol. 43, no. 5 (September/October 2008)

MiRTLE

In this article, we describe our collaborative research toward creating a “mixed reality teaching and learning environment” (MiRTLE) that enables teachers and students participating in real-time mixed and online classes to interact with avatar representations of each other. The longer-term hypothesis is that avatar representations of teachers and students will help create a sense of shared presence, engendering a sense of community and improving student engagement in online lessons.

Project Wonderland

Sun’s Project Darkstar (http://www.projectdarkstar.com) is a computational infrastructure to support online gaming.1 Project Wonderland (http://lg3d-wonderland.dev.java.net) is an open-source project offering a client-server architecture and set of technologies to support the development of virtual- and mixed-reality environments. A noteworthy example of this is Sun’s MPK20 application: a virtual building designed for online real-time meetings between geographically distributed Sun employees (http://research.sun.com/projects/mc/mpk20.html), illustrated in Figure 1.

Figure 1. Sun’s MPK20 Environment

Project Wonderland is based on several technologies, including Project Looking Glass (http://www.sun.com/software/looking_glass/) to generate a scene and jVoiceBridge (http://jvoicebridge.dev.java.net) for adding spatially realistic immersive audio. The graphical content that creates the visible world, as well as the screen buffers controlling the scene, currently use Java3D. Additional objects and components in Project Wonderland (such as a camera device to record audio and video seen from a client) make use of other technologies, such as the Java Media Framework (http://java.sun.com/products/java-media/jmf). Graphical content can be added to a Project Wonderland world by creating objects with a graphics package such as Blender or Maya (http://www.blender.org/; http://www.autodesk.com/maya). Project Wonderland provides a rich set of objects for creating environments, including building components (e.g., walls) and furniture (e.g., desks), and it supports shared software applications, such as word processors, web browsers, and document-presentation tools. For example, one or several users can draw on a virtual whiteboard and view PDF documents and presentations. A user is represented by an avatar augmented with the login name of its human controller (eventually each avatar is intended to have an appearance similar to that of its controller). A controller can speak through the avatar to others in the world by means of the voice-bridge and a microphone and speaker or by the use of a dedicated chat window for text messages. The scene generated by Wonderland can be viewed from a first-person or several third-person perspectives.
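To make the idea of spatially realistic audio more concrete, the following is a minimal sketch of how a speaker's volume and left/right balance might be derived from avatar positions. It is not the jVoiceBridge API: the class name, the method names, the two distance thresholds, the linear falloff, and the constant-power panning are all assumptions chosen for illustration, and the listener's orientation is ignored (the listener is assumed to face along the z axis).

```java
// Illustrative sketch only; this is NOT the jVoiceBridge API.
// All names and numeric values below are hypothetical.
public class SpatialAudioSketch {

    /** Distance within which a speaker is heard at full volume (assumed value). */
    public static final double FULL_VOLUME_RADIUS = 2.0;

    /** Distance at or beyond which a speaker is inaudible (assumed value). */
    public static final double MAX_AUDIBLE_DISTANCE = 20.0;

    /** Returns a gain in [0, 1] for a speaker at the given distance from the listener. */
    public static double gainForDistance(double distance) {
        if (distance <= FULL_VOLUME_RADIUS) {
            return 1.0;
        }
        if (distance >= MAX_AUDIBLE_DISTANCE) {
            return 0.0;
        }
        // Linear falloff between the two radii.
        return (MAX_AUDIBLE_DISTANCE - distance)
                / (MAX_AUDIBLE_DISTANCE - FULL_VOLUME_RADIUS);
    }

    /**
     * Returns {leftGain, rightGain} for a speaker, using constant-power panning
     * based on the speaker's horizontal (x) offset from the listener.
     */
    public static double[] stereoGains(double listenerX, double listenerZ,
                                       double speakerX, double speakerZ) {
        double dx = speakerX - listenerX;
        double dz = speakerZ - listenerZ;
        double distance = Math.hypot(dx, dz);
        double gain = gainForDistance(distance);

        // pan in [-1, 1]: -1 is fully left, +1 is fully right; centered if co-located.
        double pan = (distance == 0.0) ? 0.0 : dx / distance;
        double angle = (pan + 1.0) * Math.PI / 4.0; // maps pan to 0..pi/2

        return new double[] { gain * Math.cos(angle), gain * Math.sin(angle) };
    }

    public static void main(String[] args) {
        // A speaker ahead of and to the right of the listener.
        double[] gains = stereoGains(0.0, 0.0, 5.0, 5.0);
        System.out.printf("left=%.3f right=%.3f%n", gains[0], gains[1]);
    }
}
```

In this sketch a nearby avatar is heard at full volume, a distant one fades out, and an avatar standing to the listener's right is heard mostly in the right channel. A real voice bridge would also have to handle mixing, latency, and listener orientation.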

MiRTLE

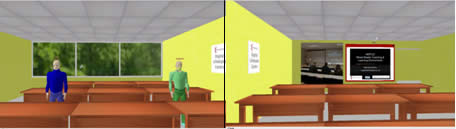

The objective of MiRTLE is to provide a mixed-reality environment for a combination of local and remote students in a traditional instructive higher education setting. The environment will augment existing teaching practices with the ability to foster a sense of community among remote students and between remote and co-located venues. The mixed-reality environment links the physical and the virtual worlds. Our longer-term vision is to create an entire mixed-reality campus; so far we have developed the first component in this process: a mixed-reality classroom. In the physical classroom lecturers will be able to deliver their lectures as usual, but they will have the addition of a large display screen, mounted at the back of the room, that shows avatars of the remote students who are logged in to the virtual counterpart of the classroom (see Figure 2, left). Thus, while delivering the lecture, the lecturer will be able to see and interact with a mix of students who are present in the real world and the virtual world. Audio communication between the lecturer and the remote students logged in to the virtual world is made possible via the voice-bridge mentioned earlier. An additional item of equipment located in the physical world is a camera placed on the rear wall of the room to provide a live audio and video stream of the lecture to the virtual world.

Figure 2. Lecturer’s View of Remote Students (left) and Remote Students’ View of the Lecture (right)

Remote students, for their part, log in to the MiRTLE virtual world and enter the classroom where the lecture is taking place (see Figure 2, right). Here they see a live video of the lecture as well as any slides that are being presented or applications that the lecturer is using. Spatial audio is employed to make the experience more realistic. Remote students can ask questions through audio communication, just as they would in the physical world. Additionally, a messaging window is provided for written questions or discussions.

A means by which students can relay their emotional state to the lecturer is also being investigated,2 together with the use of Sun’s Small Programmable Object Technology, or SPOT (http://www.sunspotworld.com), as a means of interfacing between physical and virtual worlds. The MiRTLE world has been developed using open-source tools. Blender has been used to create the objects that populate the world. These objects are then exported to the X3D open standards file format for use in the world. The platform uses a client-server architecture. To make the system easier to use and to ensure that users always receive the current version of the client, the client is delivered using Java Web Start Technology (http://java.sun.com/products/javawebstart).

Evaluation

We are currently planning to deploy and test MiRTLE in a number of different settings. The main evaluation of MiRTLE will be in conjunction with the Network Education College of Shanghai Jiao Tong University (SJTU), which currently delivers fully interactive lectures to PCs, laptops, PDAs, IPTV, and mobile phones. The core of the platform includes a number of smart classrooms distributed around Shanghai, the Yangtze River Delta, and even in remote western regions of China such as the Tibet Autonomous Region, Yan’an, Xinjiang, and Ningxia.

The MiRTLE simulation will be developed to closely model the SJTU smart classroom. Sun Microsystems is providing a Darkstar server, which is located at the University of Essex and will serve out MiRTLE. A server will offer a forward-looking camera view of the smart classroom (i.e., from the students’ position, toward the lecturer), together with a number of simulated instances of the smart classroom (each instance being a particular student’s environment and view). The Darkstar server will be interfaced to the existing smart classroom servers and processors, enabling Darkstar-based students to access the full range of educational media available in the smart classroom. To access the system, students will need to use the Internet (broadband or wireless) to log in to the Sun Darkstar server in Shanghai, which will create their avatars (which they will have previously selected as part of customizing their accounts). We are planning to use this customization as one of the vehicles to explore the effects of cultural diversity by providing a rich set of operational modes, which will reflect social preferences. For example, students will be able to create environments in which they are either isolated or highly social avatars. Likewise, the amount of personal information available to other online students will be under their control, as will some of the options for interaction with lecturers and other students.
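One way to picture the account customization described above is as a small preferences record attached to each avatar. The sketch below is purely hypothetical: neither the types nor the field names come from MiRTLE or Project Wonderland, and the rule that an isolated student shares no profile information with peers is an assumption made for illustration.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical sketch; these types do not exist in MiRTLE or Project Wonderland.
public class AvatarPreferencesSketch {

    /** Whether the student appears among other avatars or attends alone (assumed modes). */
    public enum SocialMode { ISOLATED, SOCIAL }

    /** Example items of personal information (assumed; the article does not list them). */
    public enum ProfileField { NAME, HOME_CITY, COURSE, PHOTO }

    private final SocialMode mode;
    private final Set<ProfileField> sharedFields;

    public AvatarPreferencesSketch(SocialMode mode, Set<ProfileField> sharedFields) {
        this.mode = mode;
        // EnumSet.copyOf rejects an empty non-EnumSet collection, so handle that case.
        this.sharedFields = sharedFields.isEmpty()
                ? EnumSet.noneOf(ProfileField.class)
                : EnumSet.copyOf(sharedFields);
    }

    /** True if other students' clients should render this avatar at all. */
    public boolean isVisibleToPeers() {
        return mode == SocialMode.SOCIAL;
    }

    /** True if another student may see the given item of personal information. */
    public boolean canPeerSee(ProfileField field) {
        // Assumption: an isolated student shares nothing with peers.
        return isVisibleToPeers() && sharedFields.contains(field);
    }

    public static void main(String[] args) {
        AvatarPreferencesSketch prefs = new AvatarPreferencesSketch(
                SocialMode.SOCIAL, EnumSet.of(ProfileField.NAME, ProfileField.COURSE));
        System.out.println("Peers see name:  " + prefs.canPeerSee(ProfileField.NAME));
        System.out.println("Peers see photo: " + prefs.canPeerSee(ProfileField.PHOTO));
    }
}
```

Keeping the social mode and the shared fields in one record would let the server apply a student's choices consistently wherever the avatar appears. The actual set of operational modes is a matter for the evaluation described here.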

We are also planning a number of other evaluation trials, including using MiRTLE to link the various campuses of the University of Essex and to provide English as a Foreign Language (EFL) courses from Essex to Shanghai. MiRTLE is also part of the Sun Microsystems Education Grid, which is provided free of charge to the general public and to Immersive Education Initiative (http://immersiveeducation.org) members. The plan is that Immersive Education Initiative members can conduct classes and meetings within Project Wonderland virtual worlds on the Education Grid. Initiative members can also use the Education Grid to build custom Project Wonderland virtual learning worlds, simulations, and learning games.

With the increasing global outreach of online education, designing online learning that can be engaging to a global audience is critical to its success. Recent studies have found that students learn better when they are socially, cognitively, and emotively immersed in the learning process.3 Social presence is about presenting oneself as a real person in a virtual learning environment. Cognitive presence is about sharing information and resources and constructing new knowledge. Emotive presence is about learners’ expressing their feelings of self, the community, the learning atmosphere, and the learning process. Learners’ cultural attributes can affect how they perceive an online learning setting and how they present themselves online—cognitively, socially, and emotively.4 A key objective of our approach is to counter the isolation of remote network-based learners, engendering a sense of community and social presence, which can improve student engagement and the overall learning experience. At the heart of our vision is the hypothesis that a mixed-reality version of the smart classroom, with avatar representations of teachers and students, will help foster the social environment that has been shown to improve student engagement in online lessons.

Future Plans

An important component of this work is the mixed-reality environment, and to that end, we are applying an online game server, based on Sun’s Project Darkstar and Project Wonderland tools, to create a shared virtual classroom. The technology itself will form a vehicle to advance cultural insights into the design of e-learning systems, which are becoming increasingly global in reach and nature. Looking further into the future, we recognize that other human qualities can play an important role in learning performance. As part of the social space in this experimental framework, we are integrating some of our work on emotion monitoring and mediation. Thus, while we are aiming to use this work for a number of shorter-term deployments of mixed-reality technology, we are also seeking to create a framework for much longer-term research addressing more speculative and less understood aspects of remote education, such as the role of culture and emotion. We look forward to reporting on these aspects as our research progresses.

We are grateful to Sun Microsystems for financial support of the MiRTLE project. We are especially pleased to acknowledge the important role that Kevin Roebuck of Sun Microsystems has played in enabling the MiRTLE project.

- Brendan Burns, Darkstar: The Java Game Server (Sebastopol, Calif.: O’Reilly Media, 2007).

- Liping Shen, Enrique Leon, Victor Callaghan, and Ruimin Shen, “Exploratory Research on an Affective eLearning Model,” Workshop on Blended Learning ’2007, University of Edinburgh, United Kingdom, August 15–17, 2007; Christos Calcanis, “Towards End-user Physiological Profiling for Video Recommendation Engines,” 4th IET International Conference on Intelligent Environments, University of Washington, Seattle, July 21–22, 2008; Liping Shen and Victor Callaghan, “Affective e-Learning in Residential and Pervasive Computing Environments,” Journal of Information Systems Frontiers, special issue on “Adoption and Use of Information & Communication Technologies (ICT) in the Residential/Household Context,” vol. 10, no. 3 (October 2008).

- Minjuan Wang and J. Kang, “Cybergogy of Engaged Learning through Information and Communication Technology: A Framework for Creating Learner Engagement,” in D. Hung and M. S. Khine, eds., Engaged Learning with Emerging Technologies (New York: Springer, 2006).

- Minjuan Wang, “Correlational Analysis of Student Visibility and Performance in Online Learning,” Journal of Asynchronous Learning Networks, vol. 8, no. 4 (December 2004), pp. 71–82, <http://www.sloan-c-wiki.org/JALN/v8n4/pdf/v8n4_wang.pdf>; Chun-Min Wang and Thomas C. Reeves, “The Meaning of Culture in Online Education: Implications for Teaching, Learning, and Design,” in Andrea Edmundson, ed., Globalizing E-Learning Cultural Challenges (Hershey, Penn.: Information Science, 2007), pp. 2–17.